The system is tested and validated with two LiDARs, the RS-LiDAR-M1 and the Benewake-Horn-X2. Both have a maximum range of at least 200 m and a ranging accuracy within ±3 cm. The IMU is a STIM300. Its accelerometer has a resolution of 1.9 µg and a bias instability of 0.05 mg, and its gyroscope has a resolution of 0.22°/h and a bias instability of 0.3°/h. The camera has a resolution of 1920 × 1080 and a frame rate of 25 fps. All sensors are mounted on the control console in the locomotive's driver's cab, and their installation and arrangement are shown in Fig. 7.

In tunnels, GNSS signals are unavailable, so the pulse-per-second (PPS) signal normally used to trigger the LiDAR and camera cannot be obtained. We therefore synchronized the sensors in software using the Precision Time Protocol (PTP), with the industrial computer acting as the PTP master and the LiDAR and camera acting as PTP slaves. The IMU data were timestamped with the computer's system time. This arrangement kept the time synchronization error below 1 ms.
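As background for the PTP scheme, a slave clock estimates its offset from the master through a two-way exchange of four timestamps: a Sync message sent by the master at t1 and received by the slave at t2, then a Delay_Req message sent by the slave at t3 and received by the master at t4. Assuming symmetric path delays, the offset and one-way delay follow directly. The minimal sketch below shows only this standard arithmetic; the function name and the timestamp values are hypothetical and do not describe the PTP stack used in the experiment.

```python
def ptp_offset_and_delay(t1: float, t2: float, t3: float, t4: float) -> tuple[float, float]:
    """Return (offset, mean_path_delay) from one PTP Sync/Delay_Req exchange.

    t1: Sync sent (master clock)       t2: Sync received (slave clock)
    t3: Delay_Req sent (slave clock)   t4: Delay_Req received (master clock)
    Assumes the master-to-slave and slave-to-master delays are equal.
    """
    offset = ((t2 - t1) - (t4 - t3)) / 2.0  # slave clock minus master clock
    delay = ((t2 - t1) + (t4 - t3)) / 2.0   # one-way propagation delay
    return offset, delay


if __name__ == "__main__":
    # Hypothetical timestamps in microseconds: slave runs 150 us ahead, path delay 40 us.
    offset, delay = ptp_offset_and_delay(t1=1000.0, t2=1190.0, t3=1300.0, t4=1190.0)
    print(f"offset = {offset:.1f} us, delay = {delay:.1f} us")
```

The slave then subtracts the estimated offset from its clock, and repeating the exchange periodically keeps the sensor timestamps aligned with the master.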

Map consistency analysis

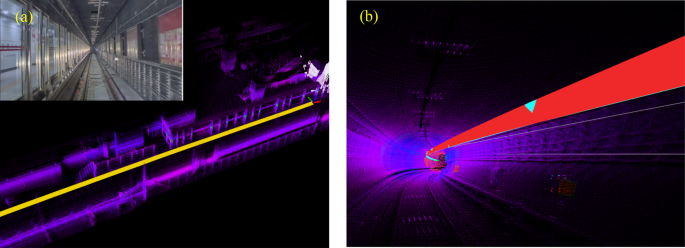

The proposed algorithm builds its map from the 3D point clouds acquired by the RS-LiDAR-M1, as shown in Fig. 8(a) and Fig. 8(b). Features such as platform edges, tracks, and tunnel walls are clearly visible in the original point cloud map. Figure 9 shows the mapping results of mainstream open-source algorithms²⁷, which degrade severely after entering the tunnel because they lack integrated back-end multi-factor constraint optimization. This comparison indicates that our algorithm achieves high local accuracy and is robust, making it suitable for high-precision map construction in tunnel scenarios.

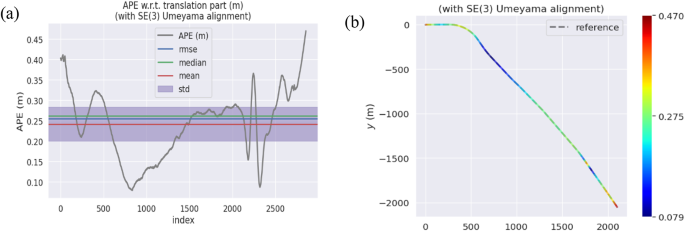

Because no ground truth is available for quantitative analysis in subway tunnels, we can only compare the consistency of trajectories collected multiple times over the same tunnel section. We take the trajectory from the first mapping session as the ground truth and measure how closely the trajectories from five additional mapping sessions overlap with it. Since it is difficult to start every data collection from exactly the same point, we align the trajectories with evo, a Python package developed by Michael Grupp for evaluating odometry and SLAM results. As shown in Fig. 10, the maximum absolute pose error (APE) is 0.25 m.
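evo aligns trajectories in 3D with Umeyama's method (its `--align` option) before computing APE. As a simplified illustration of that idea, the sketch below performs the planar (2D) least-squares rigid alignment in closed form and then computes the per-pose translational APE. It assumes the two trajectories are already time-associated point by point; the function names are hypothetical, and this is not evo's implementation.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def align_2d(est: List[Point], ref: List[Point]) -> Tuple[float, Point]:
    """Least-squares rigid alignment (rotation + translation, no scale) of est onto ref.

    Returns (theta, (tx, ty)) such that R(theta) * est_i + t best matches ref_i.
    """
    n = len(est)
    ex = sum(p[0] for p in est) / n
    ey = sum(p[1] for p in est) / n
    rx = sum(q[0] for q in ref) / n
    ry = sum(q[1] for q in ref) / n
    s_cos = s_sin = 0.0
    for (px, py), (qx, qy) in zip(est, ref):
        px, py, qx, qy = px - ex, py - ey, qx - rx, qy - ry  # center both sets
        s_cos += px * qx + py * qy  # sum of dot products
        s_sin += px * qy - py * qx  # sum of cross products
    theta = math.atan2(s_sin, s_cos)  # rotation maximizing the correlation
    c, s = math.cos(theta), math.sin(theta)
    tx = rx - (c * ex - s * ey)  # translation maps rotated est centroid onto ref centroid
    ty = ry - (s * ex + c * ey)
    return theta, (tx, ty)


def ape_translation(est: List[Point], ref: List[Point]) -> List[float]:
    """Per-pose translational APE (metres) after aligning est onto ref."""
    theta, (tx, ty) = align_2d(est, ref)
    c, s = math.cos(theta), math.sin(theta)
    errors = []
    for (px, py), (qx, qy) in zip(est, ref):
        ax, ay = c * px - s * py + tx, s * px + c * py + ty
        errors.append(math.hypot(ax - qx, ay - qy))
    return errors
```

Taking `max(ape_translation(session_k, session_1))` for each additional session gives a figure comparable to the maximum APE reported above, restricted here to the horizontal plane.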

Next, we verify trajectory consistency across different LiDARs. We collect data simultaneously with the RS-LiDAR-M1 and the Benewake-Horn-X2, take the trajectory estimated from the RS-LiDAR-M1 data as the ground truth, and measure how closely the trajectories from the Benewake-Horn-X2 mapping sessions overlap with it. As shown in Fig. 11, the maximum APE is below 0.5 m and the root mean square error (RMSE) is 0.26 m. In addition, the cumulative error of map trajectory consistency is less than 0.02%. These results indicate that our algorithm produces nearly identical trajectories with both LiDARs.
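The maximum APE and RMSE are standard summaries of per-pose errors, and the sketch below computes them. The percentage helper is a hypothetical reading of the "cumulative error" figure: the text does not define it, so it is assumed here to be the end-point error divided by the distance travelled.

```python
import math
from typing import Dict, List, Sequence, Tuple


def ape_stats(errors: Sequence[float]) -> Dict[str, float]:
    """Max, mean and RMSE of per-pose APE values (metres)."""
    n = len(errors)
    return {
        "max": max(errors),
        "mean": sum(errors) / n,
        "rmse": math.sqrt(sum(e * e for e in errors) / n),
    }


def path_length(traj: List[Tuple[float, float, float]]) -> float:
    """Total distance travelled along a sequence of 3D positions."""
    return sum(math.dist(a, b) for a, b in zip(traj, traj[1:]))


def drift_percent(end_error: float, traj: List[Tuple[float, float, float]]) -> float:
    # ASSUMPTION: cumulative error = end-point error / distance travelled, in percent.
    return 100.0 * end_error / path_length(traj)
```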

Localization accuracy analysis

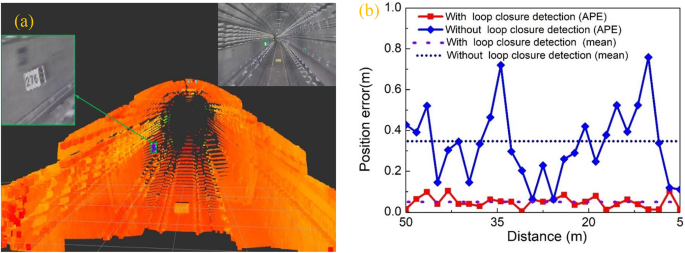

In this section, we analyze the accuracy of train positioning. Since GNSS cannot provide ground truth in tunnels, we instead use the LiDAR-measured distance from the HMM to the center of the train's front as the ground truth.

First, the trained model recognizes the code digits on the HMMs with an accuracy of 93.8%. Because each HMM code is unique within the map, the precise pose of every recognized HMM can be retrieved from the map. Meanwhile, the fusion positioning algorithm provides the pose of the train's front center, so the distance from the train's front center to the HMM can be computed; this distance is the value whose positioning accuracy is evaluated.
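The lookup-then-measure step can be sketched as follows. The dictionary from HMM code to surveyed map position, the code string format, and the function name are hypothetical, and only positions (not full poses) are used to compute the distance.

```python
import math
from typing import Dict, Optional, Tuple

Vec3 = Tuple[float, float, float]


def estimated_hmm_distance(code: str, hmm_map: Dict[str, Vec3], train_front: Vec3) -> Optional[float]:
    """Distance from the localized train-front center to a recognized HMM.

    hmm_map: hypothetical table from each unique HMM code to its position in the map frame.
    train_front: front-center position from the fusion localization, same frame.
    Returns None when the recognized code is not in the map (e.g. a misread digit).
    """
    hmm_pos = hmm_map.get(code)
    if hmm_pos is None:
        return None
    return math.dist(hmm_pos, train_front)
```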

Second, because the HMM is metallic, it is highly reflective and sits at a stable height relative to the LiDAR beams, as shown in Fig. 12(a). The HMM points can therefore be extracted from the LiDAR point cloud by combining point intensity with the visual recognition results. The distance from the centroid of the extracted HMM points to the train's front center is taken as the ground truth, and positioning accuracy is assessed as the difference between this ground truth and the evaluated value.
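A minimal sketch of the ground-truth extraction follows. It assumes the LiDAR points have already been transformed into a frame whose origin is the train's front center, and that the camera detection has been converted into an azimuth window in that frame. The intensity threshold, the azimuth-window interface, and all names are hypothetical illustrations, not the paper's parameters.

```python
import math
from typing import List, Optional, Tuple

# x, y, z in metres (train-front frame), followed by LiDAR intensity
Point = Tuple[float, float, float, float]


def hmm_centroid_distance(
    points: List[Point],
    azimuth_range: Tuple[float, float],
    min_intensity: float = 200.0,  # hypothetical reflectivity threshold
) -> Optional[float]:
    """LiDAR ground-truth distance from the train-front center to an HMM.

    Keeps points that are highly reflective and lie inside the azimuth window
    (radians) where the camera detected the HMM, then returns the distance from
    the origin to their centroid, or None if no point qualifies.
    """
    lo, hi = azimuth_range
    selected = [
        (x, y, z)
        for x, y, z, intensity in points
        if intensity >= min_intensity and lo <= math.atan2(y, x) <= hi
    ]
    if not selected:
        return None
    n = len(selected)
    cx = sum(p[0] for p in selected) / n
    cy = sum(p[1] for p in selected) / n
    cz = sum(p[2] for p in selected) / n
    return math.sqrt(cx * cx + cy * cy + cz * cz)


def positioning_error(evaluated: float, ground_truth: float) -> float:
    """Signed error: localization-based distance minus LiDAR ground truth."""
    return evaluated - ground_truth
```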

Since the LiDAR detects the HMM reliably only within 50 m, the comparison is limited to this range to keep the ground truth accurate and stable. As shown in Fig. 12(b), in the tunnel the loop closure detection system based on the HMM keeps the maximum APE within 0.15 m, with a mean error below 0.05 m, whereas loop closure detection without the HMM yields an APE of up to 0.7 m, with a mean error of approximately 0.3 m.
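Restricting the evaluation to the reliable detection range and summarizing the errors might look like the sketch below. The 50 m limit comes from the text, while the function name and the input format are assumptions.

```python
from typing import Iterable, Tuple


def range_gated_errors(
    pairs: Iterable[Tuple[float, float]],
    max_range: float = 50.0,
) -> Tuple[float, float, int]:
    """Return (max, mean, count) of absolute errors for samples within max_range.

    pairs: (ground_truth_distance, evaluated_distance) in metres. Samples whose
    LiDAR ground truth exceeds max_range are discarded, since the HMM is only
    detected reliably up to that range.
    """
    errs = [abs(est - gt) for gt, est in pairs if gt <= max_range]
    if not errs:
        raise ValueError("no samples within range")
    return max(errs), sum(errs) / len(errs), len(errs)
```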

Time analysis

The industrial computer used in the experiment is an x86 platform with an Intel Core i9-12900K CPU (24 threads), 64 GB of memory, and an NVIDIA RTX 4090 graphics card, running Ubuntu 20.04. The algorithm is implemented in C++ and uses the OpenMP library for multithreading. Because subway tunnel environments are relatively static, we adopt a strategy of building the map first and then localizing by matching against it.

Because the map is built offline and can be reused over the long term, real-time performance is not a primary concern for mapping, and it is not discussed in detail here. Real-time performance is, however, crucial for localization, so we focus on its time consumption. Since back-end graph optimization does not need to run during localization, the average total system time is 41 ms. Specifically, the data preprocessing module takes 17 ms on average, the loop closure detection module about 20 ms, and the front-end odometry module approximately 25 ms. The primary sensor, the LiDAR, scans at 10 Hz, giving a scanning cycle of 100 ms, so the system operates normally as long as its total runtime stays below 100 ms. At only 41 ms, the localization system meets the real-time localization requirements of subway trains in tunnel environments and leaves about 59 ms of computational headroom per cycle. Future work could optimize the algorithm further to reduce the demands on the computational platform.
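The real-time argument is simple arithmetic on the scan period, sketched below together with a wall-clock timing helper. The `step` callable is a hypothetical stand-in for localizing one scan and is not part of the paper's code.

```python
import time
from typing import Callable, Tuple


def realtime_budget(avg_latency_ms: float, scan_hz: float) -> Tuple[float, float, bool]:
    """Return (headroom_ms, utilization, is_realtime) for a per-scan pipeline."""
    period_ms = 1000.0 / scan_hz  # 10 Hz -> 100 ms per scan
    headroom_ms = period_ms - avg_latency_ms
    utilization = avg_latency_ms / period_ms
    return headroom_ms, utilization, avg_latency_ms < period_ms


def mean_latency_ms(step: Callable[[], None], runs: int = 100) -> float:
    """Average wall-clock time of one call to `step`, in milliseconds."""
    start = time.perf_counter()
    for _ in range(runs):
        step()
    return (time.perf_counter() - start) * 1000.0 / runs
```

With the reported figures, `realtime_budget(41.0, 10.0)` gives 59 ms of headroom and a utilization of 0.41 of the 100 ms cycle.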