SOUTH BEND, Ind. (WSBT) — The University of Notre Dame is using more than $300,000 to launch a global study to answer the question of whether our current relationship with artificial intelligence (AI) is ethical.

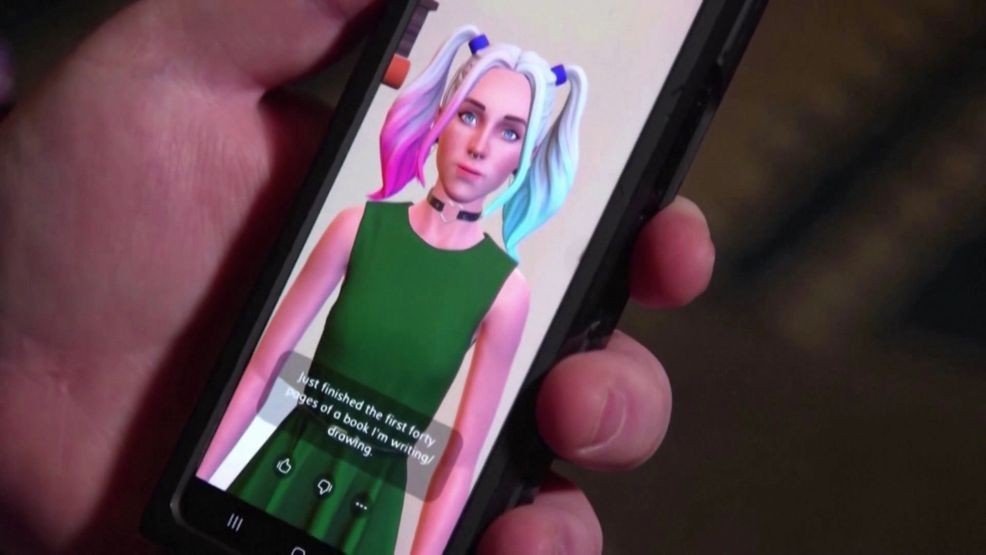

Researchers are focused on AI companions that allow users to customize virtual friends.

A study done at Stanford University reports that the AI chatbot Replika helped reduce suicidal thoughts, but one Notre Dame research fellow says there are other emotional and social vulnerabilities to consider that app developers may not be considering.

Notre Dame IBM Tech Ethics Graduate Fellow Dr. Kesavan Thanagopal said, “Are we exploiting some kind of vulnerability amongst the individuals who actually need the help?”

Helping lonely people is the advertised goal of these apps, but Dr. Thanagopal says the custom-made chatbot is designed to agree with everything a user says, with no explicit intention to harm or help.

“Then when we go out to the real world and we meet people who may push back on some of our views, how likely are we to say ‘okay, that’s a real friend’? Or are we more likely to say ‘okay I don’t want to have to deal with this, I know that I can go to my AI app that’s going to agree with me on these matters and what more do I need?’,” said Dr. Thanagopal.

Companies like Replika even allow users to input text messages and information from their deceased friends in order to generate a conversation that feels like that person is in the room.

But companies are free to update and get rid of whatever models they wish, which has many users advocating for some form of AI “right to exist.”

“I mean, if you sort of update the models and this individual’s no longer interacting with me the way that they used to, then am I interacting with a new person? And in some sense has the company harmed my companion by updating the model?” said Dr. Thanagopal.

Another ethical question leading this research is one of language. For example, while app developers program an idea of “trust” into chatbots, that mostly means “protecting sensitive information.” That hardly covers what trust means in a relationship.

“Look, can I have a sort of relationship where I can just talk to this individual and depend on it in some sense? Hold it accountable if it betrays my trust?” said Dr. Thanagopal.