The research concept for the analysis of the precious metal content in bottom ashes from municipal waste incineration was developed in 2020 at the Krakow Thermal Waste Treatment Plant26. The Thermal Waste Treatment Plant in Krakow was commissioned in 2016; the total net cost of the project was approximately EUR 155 million net. The plant has two independent incineration lines connected to each other by the electricity generation and transmission node (extraction condensing turbine). Each line processes up to 15.5 Mg of waste per hour, and the total processing capacity of the plant is 245,000 Mg/year. The feedstock used consists of two streams of municipal waste from the city of Krakow. The first and main stream is waste with the EWC code 19 12 12, originating from the mechanical and biological treatment of municipal waste (approximately 65% share). The second waste stream, accounting for about 35%, is a non-recyclable municipal waste with the EWC code 20 03 01, collected directly from residents, as part of a separate waste collection system. The combustion technology used uses a grate furnace integrated with a natural circulation steam boiler. The bottom ash produced is directed to a slag trap equipped with a water lock, from where, after cooling, it is passed through a conveyor belt unit onto ferrous metal separators. The obtained bottom ash is then subjected to a two-week weathering process, where the moisture content is reduced from around 30% to around 12–20%. After the pre-weathering process, in the incineration plant, bottom ash is processed in an installation where ferrous and non-ferrous metals are partially recovered and the produced metal concentrates and impurities in bottom ash with different grain sizes.

Sampling and analysis

The samples used for the research were taken from bottom ash and subjected to a preliminary two-week weathering process and a partial metal recovery process. The sampling was carried out on one day of each week, beginning in January and ending in December 2021. The samples were taken according to the requirements of PN-EN 14899 sampling of waste materials. Framework for the preparation and application of a sampling plan27 and the guidelines for bottom ash sampling set out in the study “List of methods—Sampling and preparation of samples and analysis of solid residues from thermal treatment of waste and products generated during treatment”28. Eight primary samples of bottom ash weighing 3.5–4.0 Mg were collected per day. Samples were taken from the end of the conveyor belt (from the falling waste stream) using a wheel loader equipped with a weighing system. The eight samples taken in this way, with a total maximum weight of 32.0 Mg, formed a composite sample, which was then directed to be reduced in size; finally, a longitudinal two-row prism was formed. There were four primary samples in each row of the pile. Reducing the sample size involved dividing the pile into two equal parts. Splitting was done by digging up the pile from the shorter side. The first pile was directed to the next stage of reduction and the second pile was rejected. In the last stage, two piles with a mass of about 8.0 Mg were obtained, and the collection from these piles was carried out along their diagonals. At this stage, approximately 300.0 kg of material was collected, which was fed to a sample divider (DP, Multiserw); as a result about 10.0 kg laboratory samples were obtained. The samples were crushed to 3, HCl, H2O). The results obtained for the precious metal content expressed in ppb are presented in Annex 1. Values under detection limit (UDL) and above detection limit (ADL) are marked with a majority or minority sign, respectively. Determination of precious metal content was carried out using a Perkin Elmer ELAN 9000 mass spectrometer. Quality control was conducted using multi-element custom-certified reference materials STD BVGEO01 and STDOREAS262. Analysis of a blank sample and a control duplicate analysis of one of the samples was performed to assess the repeatability of the results.

Data analysis

The assessment of the potential recovery of precious metals from bottom ash from municipal waste incineration at the Thermal Waste Treatment Plant in Kraków was carried out in accordance with the cross-industry standard Process for Data Mining (CRISP-DM) methodology, which is one of the most widely used methodologies for data analysis29,30,31,32. This methodology defines six key phases that enable the execution, evaluation, and implementation of models supporting business decision-making. The advantage of the CRISP-DM methodology is the possibility of returning to earlier phases in case of unsatisfactory results29,31.

The first CRISP-DM Phase- Business Understanding- task is aimed at defining the objectives of the planned analysis. It can be considered that if the elemental content of IBA does not change over time, i.e. is stationary, and occurs in average quantities like those of other waste incinerators with similar processing capabilities where precious metal recovery has been implemented, then its recovery can be cost-effective. Furthermore, if only single precious metals are present in high values, it may turn out that potentially unprofitable precious metals, will also be profitable if the recovery is carried out completely, i.e. by choosing the right recovery technique. An additional argument for the implementation of the precious metal recovery project from bottom ashes is the observation of the occurrence of outliers and extremely high values.

The Data Understanding phase involved performing a descriptive analysis of the data33. The data analyzed constituted a data frame containing a time series of four precious metals: silver, gold, palladium, and platinum. A single time series contained 52 observations measured in week intervals. The time series for silver and palladium contained “censored” observations, which means that part of the obtained results was below or above the limit of quantification of the analytical method used. In the case of silver, there was one observation above the detection range of the measuring device, the so-called ADL, while the time series for palladium contained 14 observations below the detection range of the measuring device, the so-called UDL.

The next phase—Data Preparation—involved preparing data to assess the possibility of extracting precious metals from bottom ash after municipal waste incineration. Proper data preparation is crucial to obtain optimal results of the analysis. Censored data should never be deleted from the dataset to avoid a strong bias in estimating descriptive statistics and model results. Censored observations should be replaced. In the imputation method, a value is assigned to each censored observation. In datasets with more than 15%, and less than 50% of censored observations it is advisable to use extrapolation methods, Robust Regression on Order Statistics (ROS), Kaplan–Meier method, Cohen’s method, Weibull regression, or Maximum likelihood estimation (MLE)34.

At this stage, the UDLs for palladium were replaced by values calculated using the Robust Regression on Order Statistics (ROS) method35,36. It is one of the recommended methods to replace censored values for environmental data for small datasets37.

After replacing the censored value with the ROS method, basic descriptive statistics were performed. In addition, bar charts, autocorrelation function plots, seasonality plots, and ANOVA analysis were performed38,39. In this phase, stationary data analysis—the Augmented Dickey-Fuller test—was also performed40.

The next phase—Modelling—has been modified for the requirements of the project. In this phase, the focus was on assessing the relationship between precious metals. Therefore, the Pearson and Spearman’s rank correlation matrices41,42 were used. Pearson’s correlation assesses linear relationships, while Spearman’s correlation assesses monotonic relationships. The use of both Pearson’s linear correlation and Spearman’s ranks allows assessing the correlation power, direction, and shape of the correlation41. To supplement the correlation matrices, scatter plots were made for statistically significant correlations.

In this phase detection of the high and very high content of precious metals in individual samples was performed. Moreover, attempts were made to find out whether the high values of one precious metal were related to the high values of another. An analysis of the occurrence of outliers and extreme values was carried out using the Rosner test43.

The last step was to compare the mean values using a one-sample t-test44. The average values of the IBA precious metals content were compared with the given average values for the Hinwil incineration plant45, in Northern Italy21 and their average content in the Earth’s crust46.

The Evaluation—phase is presented in the Discussion and Conclusions sections. At this stage of the project, the analyzes performed were interpreted and conclusions regarding the recovery of precious metals from IBA were presented.

The Deployment—phase of the CRISP-DM methodology involves implementing a ready-made solution—in this case, the beginning of the recovery of precious metals. This phase was omitted.

Theoretical issues of data analysis

Robust Regression on Order Statistics (ROS) is a semi-parametric statistical method that computes a regression line to estimate values for censored data. ROS assumes that the underlying population is normally or lognormally distributed. Additionally, in the data, there should be at least three detected values and a detection frequency greater than 50%. The ROS method is based on a simple linear regression model that uses ordered detected values and distributional quantiles to estimate the concentration of the censored values. ROS imputes the censored data using the estimated least-squares linear model parameters and estimates the overall sample mean and sample deviation.

Robust regression on order statistics can be presented as follows35:

for \(n={n}_{0}+{n}_{1}\) independent normally distributed data, with mean \(\upmu\) and variance \({\upsigma }^{2}\),

\({n}_{0}\) is the observations are non-detects, \({n}_{1}\) is the observations are larger than the detection limit.

$${y}_{i}=\upmu +{{\sigma \Phi }}^{-1}({P}_{i})$$

(1)

where: \({P}_{i}=P\left({Y}_{i}\le {y}_{i}\right)\) and \({\Phi }^{-1}\)(.) denotes the inverse cdf of a N(0.1) distribution.

The procedure replaces probabilities with adjusted ranks; the regression equation becomes:

$${y}_{i}=\widehat{\upmu }+\widehat{\upsigma }{\Phi }^{-1}\left(\frac{i-3/8}{n+1/4}\right)+ {\upvarepsilon }_{i}$$

(2)

where: \(i={n}_{0}+1, {n}_{0}+2 ,\dots , {n}_{0}+{n}_{1}\), \(\widehat{\upmu }, \widehat{\upsigma }\) is the least squares estimates of \(\upmu\), \({\Phi }^{-1}\left(\frac{i-3/8}{n+1/4}\right)\) is the normal score calculated using Altman’s formula, \({\upvarepsilon }_{i}\) is the residual errors.

One-way analysis of variance (ANOVA) is one of the most frequently used statistical methods to determine the significance of differences between data subsets. Analysis of variance is used when there are at least three mutually independent groups with normal distribution and similar variance. ANOVA uses the F -for statistical significance. The F-test compares the variance in each group mean from the overall group variance. If the variance within the group is smaller than the variance between groups, the F-test obtains a higher F value, and thus a higher likelihood that the observed difference is significant. The null hypothesis is that there is no statistically significant difference between group means. The alternative hypothesis is that there are at least two groups that are statistically significantly different from each other39,44.

A one-sample t-test is a statistical test used to determine whether an unknown population mean is different from a specific value44. This test should be performed for large samples (more than 30 observations). The t-test is highly sensitive to non-detects47.

The one-sample t-test can be presented as44:

$$t=\sqrt{n}\frac{\overline{x }-\upmu }{s}$$

(3)

where the test has the t-distribution with n – 1 degrees of freedom for n (n ≥ 30), p = 0.05, and: \(\overline{x }\) is the mean value in sample, \(\upmu\) is the value of comparison, \(s\) is the standard deviation in the sample.

The null hypothesis (H0) is that the true difference between the sample mean, and the comparison value is zero.

The alternative hypothesis (Ha) is that the true difference is different from zero.

There are several methods to test the stationarity of time series. The initial information is obtained thanks to the Autocorrelation function (ACF). The ACF helps to recognize the stationarity, trend, and seasonality of data. The autocorrelation function for lag k is Pearson’s linear correlation coefficient between a given time series and the same time series separated by k intervals38. The ACF function is well discussed in the literature38,48,49.

The Augmented Dickey–Fuller test (ADF) is a unit root test for stationarity that can be used with serial correlation. The null hypothesis is there is a unit root in the first-order AR model, which implies that the data series is not stationary. The alternative hypothesis is stationarity or trend stationarity and depends on the test version50.

ADF statistics can be presented as:

$$\Delta {y}_{t}=\upmu +\upgamma {y}_{t-1}+\sum_{i=1}^{P}{{\alpha }}_{s}\Delta {y}_{t-s}+{\upvarepsilon }_{t}$$

(4)

$$\text{ADF}=\frac{\widehat{\upgamma }}{SE(\widehat{\upgamma })}$$

(5)

The maximum lag value is expressed as follows50:

$$n\text{lag}={\left(\frac{4\bullet n}{100}\right)}^\frac{2}{9}$$

(6)

where: \(\upmu\) is the mean value, \(\upgamma {y}_{t-1}\) is the lagged values of the dependent variable, \({\upvarepsilon }_{t}\) is the white noise, n is the number of observations.

The computed ADF value can be compared to the relevant critical values for the Dickey–Fuller test. This test is asymmetrical, so concerned with negative values of test statistics. If the calculated statistical test is more negative than the critical value, then the null hypothesis is rejected, and no unit root is present50.

The Rosner test detects up to 10 outliers among the selected data values. The test can detect outliers that are much smaller or much larger than the rest of the data and avoids the masking problem (when two outliers have similar values, one of them could go undetected). Data are ordered in ascending order. In the next step the k number of suspected outliers is specified. Then a series of statistics excluding the largest and smallest observation from the data is calculated. The test equation is as follows:

$${R}_{i+1}=\frac{\left|{x}^{\left(i\right)}-{\overline{x} }^{\left(i\right)}\right|}{{s}^{\left(i\right)}}$$

(7)

Critical values for \({R}_{i+1}\) are denoted \({\lambda }_{i+1}\) and can be presented as:

$${\lambda }_{i+1}=\frac{{t}_{p,n-i-2}(n-i-1)}{\sqrt{\left(n-i-2+{t}_{p,n-i-2}(n-i\right)}}$$

(8)

where \({t}_{p,\nu }\) denotes the p’th quantile of Student’s t-distribution with ν degrees of freedom, and can be presented as:

$$p=1-\frac{\alpha /2}{n-1}$$

(9)

where: \({\overline{x} }^{\left(i\right)}\) is the sample mean, \({x}^{\left(i\right)}\) is the observation in data subset that is the furthest from \({\overline{x} }^{\left(i\right)}\), \({s}^{\left(i\right)}\) is the data standard deviation after removing the i most extreme observations, \(\alpha\) is the Type I error level (0.05, as long as n ≥ 25).

Rosner’s test is based on k statistics \({R}_{1}, {R}_{2},\dots ,{R}_{k}\) which represent the extreme studentized deviates computed from successively reduced samples of size n, n − 1, …, n − k + 1. A series of hypothesis tests is performed after all test statistics \({R}_{1}\dots {R}_{k}\) are computed. Null hypothesis: there are no outliers in the data. Alternative hypothesis: there are k outliers in the data. The first test assumed that there were k outliers in the data, by comparing \({R}_{k}\) to the critical value \({\lambda }_{k}\) for a specified significance level α. If \({R}_{k}>{\lambda }_{k}\), then the test is significant and the null hypothesis is rejected, and in data k the most extreme values are outliers43,51.

Software used

During the data analysis, the R 4.2.3 package was used (Shortstop Beagle released 2023-03-15), together with the integrated RStudio 2023.03.1 (Cherry Blossom released 2023-05-09). The following packages were used: aTSA v3.1.2, corrplot v0.92, dplyr v1.01.10, e1071 v1.7-11, EnvStats v2.7.0, forecast v0.5.2, ggplot2 v3.3.6, NADA v1.6-1.1, stats 4.4.0, tidyr v1.2.1, and tseries v0.10-52. The calculations were performed on a computer with the Windows 11 Pro 64-bit operating system with an i7-10710 processor with 1.10 GHz and 16 GB RAM.

Profitability analysis

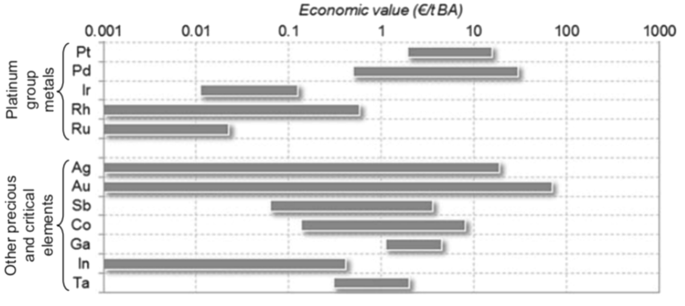

The following average precious metal prices from the London Metal Exchange for June 2023 were used to assess cost-effectiveness: 0.797 EUR/g (silver), 64.916 EUR/g (gold), 47.021 EUR/g (palladium), and 34.399 EUR/g (platinum)52. The estimated capital expenditure for the construction of a state-of-the-art facility for the recovery of metals from bottom ash was based on the capital expenditure incurred for the construction of the Afatek A/S in Denmark with a capacity of 180,000 Mg per year. The amount of capital expenditure was then increased by the producer price index, whose value between 2016 and 2023 was 1,55153; the capital expenditure associated with the construction of a 50,000 Mg/year plant was then estimated on this basis. Investment expenditures in 2023 were estimated at EUR 3.447 million. The recovery efficiency was determined based on data from the Hinwil plant at the level of: 57.4% for silver, 28.8% for gold, and 30.0% for platinum and palladium54. Electricity consumption was determined at 8.28 kWh / Mg IBA, based on data collected by Bruno, M. et al. 202155, and the cost of 1 MWh of electricity was assumed at 129.1 EUR56. Maintenance costs were assumed for years 1–2 at 1.25% CAPEX and for years 3–20 at 2.5% CAPEX. The costs of laboratory tests were assumed at EUR 25,000 per year, and the costs of salaries for employees operating the installation were assumed at EUR 80,000 per year Subsequently, the obtained data were used to determine the internal rate of return. The IRR is used to estimate the return on potential investments57. Then, based on the internal rate of return obtained, the payback time was calculated. The internal rate of return was calculated from the formula:

$$IRR={\sum }_{T=1}^{n}\frac{{CF}_{t}}{{(1+d)}^{t}}-{I}_{0} [EUR]$$

(10)

where: CFt is the cash flow, I0 is the investment cost, d is the discount rate (4.4%), t is the plant lifetime (20 years).

The determination of discount rate was carried out in the range from 0 to 10%. The cutoff value was between 4 and 5%. In the first case, the NPV was positive and close to zero, while for a rate equal to 5% the NPV was negative, but still close to zero. The exact discount rate was determined using the following formula:

$$d\approx {i}_{1}+ \frac{{NPV}_{1} \times ({i}_{2}-{i}_{1})}{{NPV}_{1}+ {NPV}_{2}} [\%]$$

(11)

where: i1 is the discount rate for NPV1 [%], i2 is the discount rate for NPV2 [%], NPV1 is the positive NPV value for 4% discount rate [EUR], NPV2 is the negative NPV value for 5% discount rate [EUR].