The electronic search of PubMed and Scopus identified 776 studies, of which 51 duplicate records were removed. Of the remaining 725 studies, 665 were excluded as irrelevant on the basis of their titles. The remaining 60 studies underwent abstract and full-text screening, which resulted in the inclusion of 34 studies from the electronic search. An additional manual search using Google Scholar yielded 123 studies, of which 10 were included. In total, 44 studies were included in this review.
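The selection counts above can be cross-checked with a few lines of arithmetic. The following is a minimal sketch; the variable names are illustrative, and the number of studies excluded at abstract and full-text screening is derived here rather than stated in the text.

```python
# Counts as reported in the search results above.
identified = 776           # records from PubMed and Scopus
duplicates = 51            # duplicate records removed
excluded_by_title = 665    # excluded as irrelevant by title
included_electronic = 34   # included after abstract and full-text screening
included_manual = 10       # included from the Google Scholar manual search

after_dedup = identified - duplicates
screened = after_dedup - excluded_by_title
# Derived value (assumption: every screened study was either included or excluded).
excluded_at_screening = screened - included_electronic
total_included = included_electronic + included_manual

print(after_dedup, screened, excluded_at_screening, total_included)
```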

Summary characteristics of studies

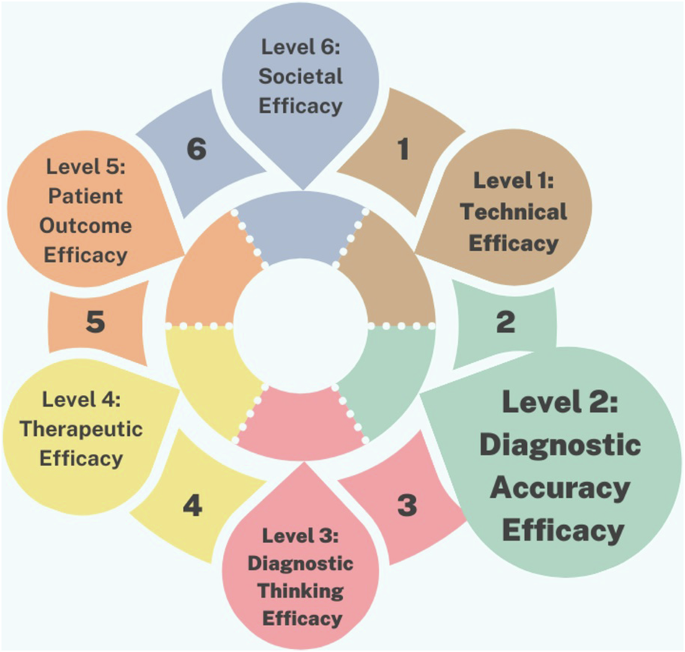

The studies included in this review were published between 2011 and 2024, and most reported sample sizes ranging from 10 to 68 patients. Among the 44 studies analyzed, 26 studies [12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37] were retrospective, while 7 studies [38,39,40,41,42,43,44] used a comparative design. The remaining studies comprised 3 observational [45,46,47], 5 validation [48,49,50,51,52], 2 cross-sectional [53, 54], and 1 prospective study [55] (Supplementary Table).

In terms of AI-based applications, Diagnocat was the most frequently employed tool, used in 24 studies [13,14,15,16, 18,19,20,21,22,23,24, 27, 28, 30, 32, 33, 35,36,37, 41, 43, 51, 52, 55], followed by CephX in 16 studies [12, 17, 25, 26, 29, 31, 34, 38, 40, 44, 45, 47,48,49,50, 54]. Additionally, 2 studies [39, 53] used Denti.AI, while Smile.AI [42] and Smile Designer [46] were each reported by 1 study.

The deployment levels of the studies according to Fryback and Thornbury’s model varied. Notably, 27 studies [13,14,15,16, 19, 21,22,23, 25, 26, 28, 30, 31, 33, 36, 39,40,41, 43,44,45,46, 50,51,52,53, 55] achieved level 2, meaning that they assessed the AI software and tested its efficacy without any real-world deployment. A further 6 studies [20, 24, 38, 42, 47, 49] attained level 1, another 6 studies [17, 18, 34, 35, 37, 48] reached level 3, and 3 studies [12, 29, 54] reached level 4. Only 1 study each achieved level 5 [32] and level 6 [27], assessing the effectiveness of the AI-based application in clinical workflows.

Regarding discipline, 20 studies applied AI-based applications in Orthodontics [12, 17, 21, 25, 26, 29,30,31, 34, 36, 38, 40, 42, 44, 45, 47,48,49,50, 54], followed by 7 studies in Endodontics [13, 33, 35, 39, 41, 43, 52], 6 studies in Dental Radiology [14, 15, 22, 27, 32, 53], and 5 studies in Oral and Maxillofacial Surgery [16, 18, 23, 24, 28]. Only 2 studies each were in Prosthodontics [19, 46] and Periodontics [20, 51], and only 1 study each in Restorative Dentistry [37] and Oral Medicine [55].
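The deployment-level counts reported above can be tallied to confirm that they account for all 44 studies and to express each level as a share of the total. This is a minimal sketch; the percentages are derived here and are not stated in the text.

```python
# Number of studies per Fryback and Thornbury deployment level, as reported above.
level_counts = {1: 6, 2: 27, 3: 6, 4: 3, 5: 1, 6: 1}

total = sum(level_counts.values())

# Share of studies at each level, in percent (derived, rounded to one decimal).
shares = {level: round(100 * n / total, 1) for level, n in level_counts.items()}

# Studies that went beyond technical and diagnostic accuracy efficacy (levels 3 to 6).
higher_levels = sum(n for level, n in level_counts.items() if level >= 3)

print(total, shares, higher_levels)
```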

Contacting companies

To assess the validity and deployment of these applications, we attempted to gather more information by emailing companies that claim to offer AI solutions to dental practitioners, as presented in Table 1. The developers that we contacted included Smilo.AI ©, Smile.AI ©, Videa.AI ©, Diagnocat ©, Denti.AI ©, Smile Designer ©, Overjet ©, CephX ©, Pearl ©, and DentalXR.ai ©. Despite multiple email inquiries, only the developer of CephX replied, offering a free trial rather than the complete access needed to evaluate the real-world deployment of the application.

Deployment levels refer to the stage of evaluation a study reaches, ranging from technical efficacy (Level 1) to broader societal impact (Level 6), with Levels 5–6 specifically denoting applications in real-world settings. The multi-level framework for evaluating AI in dental care, as presented in Fig. 1, is predominantly populated by studies at Level 1: Technical Efficacy and Level 2: Diagnostic Accuracy Efficacy. These studies are primarily proof-of-concept and validation studies that assess AI algorithm performance and diagnostic accuracy against expert human dentists. Level 1 studies emphasize parameters such as algorithm accuracy, sensitivity to imaging artifacts, and computational efficiency, while Level 2 studies focus on metrics such as sensitivity, specificity, and positive predictive value. In contrast, comparatively few studies explore the higher levels of effectiveness: Level 3: Diagnostic Thinking Efficacy, Level 4: Therapeutic Efficacy, Level 5: Patient Outcome Efficacy, and Level 6: Societal Efficacy.
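For reference, the Level 2 metrics named above follow the standard confusion-matrix definitions. The sketch below is illustrative only: the function name `diagnostic_metrics` and the example counts are hypothetical and are not taken from any included study.

```python
def diagnostic_metrics(tp, fp, fn, tn):
    """Compute sensitivity, specificity and positive predictive value
    from true/false positive and negative counts."""
    return {
        "sensitivity": tp / (tp + fn),  # proportion of diseased cases detected
        "specificity": tn / (tn + fp),  # proportion of healthy cases correctly cleared
        "ppv": tp / (tp + fp),          # proportion of positive calls that are correct
    }

# Hypothetical counts: AI findings compared against an expert reference standard.
metrics = diagnostic_metrics(tp=45, fp=5, fn=15, tn=35)
print(metrics)
```

With these hypothetical counts, sensitivity is 45/60, specificity is 35/40, and positive predictive value is 45/50.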