In this section, we present the system model for heterogeneous multicore processors (HMPs) in edge computing environments and formally define the energy-efficient task scheduling problem.

System model

We consider an edge computing system consisting of a set of heterogeneous multicore processors (HMPs) denoted by \(\:P={p}_{1},{p}_{2},\dots\:,{p}_{m}\), where \(\:m\) is the number of HMPs. Each HMP \(\:{p}_{i}\) contains a set of heterogeneous cores denoted by \(\:{C}_{i}={c}_{i,1},{c}_{i,2},\dots\:,{c}_{i,{n}_{i}}\), where \(\:{n}_{i}\) is the number of cores in HMP \(\:{p}_{i}\). The cores within an HMP can have different performance and power characteristics, such as high-performance cores (HPCs) and energy-efficient cores (EECs).

The system receives a set of tasks denoted by \(\:T={t}_{1},{t}_{2},\dots\:,{t}_{n}\), where \(\:n\) is the number of tasks. Each task \(\:{t}_{j}\) is characterized by its workload size \(\:{w}_{j}\), arrival time \(\:{a}_{j}\), and deadline \(\:{d}_{j}\). The execution time of task \(\:{t}_{j}\) on core \(\:{c}_{i,k}\) is denoted by \(\:{e}_{j,i,k}\), which depends on the workload size and the performance characteristics of the core.

The power consumption of core \(\:{c}_{i,k}\) is denoted by \(\:{p}_{i,k}\left(f\right)\), where \(\:f\) is the operating frequency of the core. The power consumption can be modeled using the dynamic voltage and frequency scaling (DVFS) technique, where the power consumption is a function of the operating frequency.

Table 2 presents a comprehensive list of notations and their corresponding definitions used throughout this paper. These symbols and terms are essential for understanding the proposed algorithm, mathematical formulations, and analysis presented in our work.

These notations will be used consistently throughout the paper to describe the task scheduling problem, the proposed algorithm, and the analysis of results. When introducing new concepts or equations, we will provide additional explanations to ensure clarity.

Example usage:

The overall priority \({\text{P}}\left( {{{\text{t}}_{\text{i}}}} \right)\) of task \({{\text{t}}_{\text{i}}}\) is calculated as a weighted sum of its deadline-based priority \({{\text{P}}^{\text{d}}}\left( {{{\text{t}}_{\text{i}}}} \right)\)and dependency-based priority \({\operatorname{P} ^r}\left( {{{\text{t}}_{\text{i}}}} \right)\):

\(P\left( {ti} \right)=\alpha \times Pd\left( {ti} \right)+\left( {1 – \alpha } \right) \times Pr\left( {ti} \right)\)

where α is the priority weight factor that determines the relative importance of deadline and dependency considerations in task prioritization.

By providing this comprehensive notation table and example usage, we aim to improve the readability and understanding of the mathematical formulations and algorithms presented in this paper.

Problem definition

The energy-efficient task scheduling problem in edge computing with HMPs can be formally defined as follows:

Given:

A set of heterogeneous multicore processors \(\:P={p}_{1},{p}_{2},\dots\:,{p}_{m}\), where each HMP \(\:{p}_{i}\) contains a set of heterogeneous cores \(\:{C}_{i}={c}_{i,1},{c}_{i,2},\dots\:,{c}_{i,{n}_{i}}\).

A set of tasks \(\:T={t}_{1},{t}_{2},\dots\:,{t}_{n}\), where each task \(\:{t}_{j}\) is characterized by its workload size \(\:{w}_{j}\), arrival time \(\:{a}_{j}\), and deadline \(\:{d}_{j}\).

The execution time \(\:{e}_{j,i,k}\) of task \(\:{t}_{j}\) on core \(\:{c}_{i,k}\).

The power consumption function \(\:{p}_{i,k}\left(f\right)\) of core \(\:{c}_{i,k}\) at frequency \(\:f\).

Objective:

Constraints:

Each task should be assigned to exactly one core.

The execution of a task should not exceed its deadline.

The total execution time of tasks assigned to a core should not exceed the available time budget.

The objective of the energy-efficient task scheduling problem can be formally expressed as:

$${\text{min}}\mathop \sum \limits_{{i=1}}^{m} \mathop \sum \limits_{{k=1}}^{{{n_i}}} \mathop \sum \limits_{{j=1}}^{n} {p_{i,k}}\left( {{f_{j,i,k}}} \right) \times {e_{j,i,k}} \times {x_{j,i,k}}$$

(3)

where \(\:{f}_{j,i,k}\) is the operating frequency of core \(\:{c}_{i,k}\) when executing task \(\:{t}_{j}\), and \(\:{x}_{j,i,k}\) is a binary variable indicating whether task \(\:{t}_{j}\) is assigned to core \(\:{c}_{i,k}\).

The constraints can be formally expressed as:

$$\mathop \sum \limits_{{i=1}}^{m} \mathop \sum \limits_{{k=1}}^{{{n_i}}} {x_{j,i,k}}=1,\forall j \in 1,2, \ldots ,n$$

(4)

$$\mathop \sum \limits_{{i=1}}^{m} \mathop \sum \limits_{{k=1}}^{{{n_i}}} {e_{j,i,k}} \times {x_{j,i,k}} \leqslant {d_j} – {a_j},\forall j \in 1,2, \ldots ,n$$

(5)

$$\mathop \sum \limits_{{j=1}}^{n} {e_{j,i,k}} \times {x_{j,i,k}} \leqslant {t_{i,k}},\forall i \in 1,2, \ldots ,m,\forall k \in 1,2, \ldots ,{n_i}$$

(6)

where \(\:{t}_{i,k}\) is the available time budget for core \(\:{c}_{i,k}\).

The energy-efficient task scheduling problem in edge computing with HMPs is a complex optimization problem that aims to minimize the total energy consumption while satisfying the task requirements and system constraints. In the following sections, we propose a novel task scheduling algorithm that addresses this problem by leveraging the heterogeneity of cores and employing energy-saving techniques such as DVFS and core sleep states.

Algorithm designTask priority assignment

In order to effectively schedule tasks on heterogeneous multicore processors (HMPs) while minimizing energy consumption, it is essential to assign priorities to tasks based on their characteristics. Task priority assignment plays a crucial role in determining the order in which tasks are executed on the available cores. In this section, we present our approach for task priority assignment based on task deadlines and task dependency relationships.

Task deadlines are a critical factor in determining the priority of tasks. Tasks with earlier deadlines should be given higher priority to ensure that they are completed within their time constraints. We define the deadline-based priority \(\:{P}_{d}\left({t}_{j}\right)\) of task \(\:{t}_{j}\) as:

$${P_d}\left( {{t_j}} \right)=\frac{1}{{{d_j} – {a_j}}}$$

(7)

where \(\:{d}_{j}\) is the deadline of task \(\:{t}_{j}\), and \(\:{a}_{j}\) is its arrival time. Tasks with smaller values of \(\:{d}_{j}-{a}_{j}\) (i.e., tighter deadlines) will have higher priority.

In addition to task deadlines, task dependency relationships also influence the priority assignment. Tasks that have a higher number of dependent tasks should be given higher priority to avoid delaying the execution of their dependent tasks. We define the dependency-based priority \(\:{P}_{r}\left({t}_{j}\right)\) of task \(\:{t}_{j}\) as:

$${P_r}\left( {{t_j}} \right)=\mathop \sum \limits_{{{t_k} \in {R_j}}} \frac{1}{{{d_k} – {a_k}}}$$

(8)

where \(\:{R}_{j}\) is the set of tasks that depend on task \(\:{t}_{j}\). The dependency-based priority of a task is calculated as the sum of the reciprocal of the deadline minus arrival time of its dependent tasks.

To combine the deadline-based priority and the dependency-based priority, we introduce a priority weight factor \(\:\alpha\:\) that determines the relative importance of each priority component. The overall priority \(\:P\left({t}_{j}\right)\) of task \(\:{t}_{j}\) is defined as:

$$P\left( {{t_j}} \right)=\alpha \times {P_d}\left( {{t_j}} \right)+\left( {1 – \alpha } \right) \times {P_r}\left( {{t_j}} \right)$$

(9)

where \(\:\alpha\:\in\:\left[0,1\right]\) is the priority weight factor. A higher value of \(\:\alpha\:\) gives more weight to the deadline-based priority, while a lower value of \(\:\alpha\:\) gives more weight to the dependency-based priority.

The task priority assignment algorithm works as follows:

1.

Calculate the deadline-based priority \(\:{P}_{d}\left({t}_{j}\right)\) for each task \(\:{t}_{j}\) using Eq. (7).

2.

Calculate the dependency-based priority \(\:{P}_{r}\left({t}_{j}\right)\) for each task \(\:{t}_{j}\) using Eq. (8).

3.

Combine the deadline-based priority and the dependency-based priority using Eq. (9) to obtain the overall priority \(\:P\left({t}_{j}\right)\) for each task \(\:{t}_{j}\).

4.

Sort the tasks in descending order of their overall priority \(\:P\left({t}_{j}\right)\).

The sorted list of tasks represents the order in which tasks should be considered for scheduling on the available cores. Tasks with higher priority are scheduled first to ensure that they meet their deadlines and to minimize the impact on dependent tasks.

The priority weight factor \(\:\alpha\:\) allows for flexibility in adjusting the relative importance of deadline-based and dependency-based priorities based on the specific requirements of the edge computing application. For example, in applications with strict real-time constraints, a higher value of \(\:\alpha\:\) can be used to give more emphasis to the deadline-based priority. On the other hand, in applications with complex task dependencies, a lower value of \(\:\alpha\:\) can be used to prioritize tasks with a higher number of dependent tasks.

By assigning priorities to tasks based on their deadlines and dependency relationships, the proposed task scheduling algorithm aims to optimize the execution order of tasks on HMPs while considering the energy consumption. In the following sections, we present the core selection and frequency scaling techniques that work in conjunction with the task priority assignment to achieve energy-efficient task scheduling.

Core performance-aware task mapping

In heterogeneous multicore processors (HMPs), the performance characteristics of different cores can vary significantly. Some cores may be designed for high performance, while others may be optimized for energy efficiency. To achieve optimal performance and energy efficiency, it is crucial to map tasks to the most suitable cores based on their performance requirements and the capabilities of the cores. In this section, we present our core performance-aware task mapping strategy that considers the heterogeneity of cores in HMPs.

The goal of the task mapping strategy is to assign tasks to cores in a way that minimizes the overall execution time and energy consumption. We introduce a performance factor \(\:{\beta\:}_{i,k}\) for each core \(\:{c}_{i,k}\), which represents the relative performance of the core compared to a reference core. The performance factor can be determined based on the core’s architecture, frequency, and other performance-related characteristics.

To map tasks to cores, we define a suitability score \(\:{S}_{j,i,k}\) that measures the suitability of assigning task \(\:{t}_{j}\) to core \(\:{c}_{i,k}\). The suitability score takes into account both the performance factor of the core and the execution time of the task on that core. It is defined as:

$${S_{j,i,k}}=\frac{{{\beta _{i,k}}}}{{{e_{j,i,k}}}}$$

(10)

where \(\:{\beta\:}_{i,k}\) is the performance factor of core \(\:{c}_{i,k}\), and \(\:{e}_{j,i,k}\) is the execution time of task \(\:{t}_{j}\) on core \(\:{c}_{i,k}\). A higher suitability score indicates that the core is more suitable for executing the task.

The task mapping algorithm works as follows:

1.

Sort the tasks in descending order of their overall priority \(\:P\left({t}_{j}\right)\) obtained from the task priority assignment algorithm.

2.

For each task \(\:{t}_{j}\) in the sorted list:

Calculate the suitability score \(\:{S}_{j,i,k}\) for each core \(\:{c}_{i,k}\) using Eq. (10).

Assign task \(\:{t}_{j}\) to the core \(\:{c}_{i,k}\) with the highest suitability score.

Update the available time budget of core \(\:{c}_{i,k}\) by subtracting the execution time of task \(\:{t}_{j}\).

3.

Repeat step 2 until all tasks are assigned to cores or no more cores have available time budget.

The task mapping algorithm assigns tasks to cores based on their suitability scores, which consider both the performance characteristics of the cores and the execution time of the tasks. By prioritizing tasks with higher overall priority and assigning them to the most suitable cores, the algorithm aims to minimize the total execution time and energy consumption.

In cases where a task cannot be assigned to any core due to insufficient available time budget, the algorithm can employ techniques such as task migration or task preemption to handle the situation. Task migration involves moving a task from one core to another core with available time budget, while task preemption involves temporarily suspending a lower-priority task to accommodate a higher-priority task.

To further optimize the energy consumption, the task mapping algorithm can be extended to consider the power consumption characteristics of the cores. The power consumption of a core can be modeled as a function of its operating frequency and the workload of the assigned tasks. By incorporating power consumption into the suitability score calculation, the algorithm can prioritize cores that offer a better trade-off between performance and energy efficiency.

For example, the suitability score can be modified to include a power consumption term:

$${S_{j,i,k}}=\frac{{{\beta _{i,k}}}}{{{e_{j,i,k}} \times {p_{i,k}}\left( {{f_{j,i,k}}} \right)}}$$

(11)

where \(\:{p}_{i,k}\left({f}_{j,i,k}\right)\) is the power consumption of core \(\:{c}_{i,k}\) when executing task \(\:{t}_{j}\) at frequency \(\:{f}_{j,i,k}\). This modified suitability score gives preference to cores that can execute the task with lower power consumption while still maintaining good performance.

By considering the heterogeneity of cores and their performance and power consumption characteristics, the proposed task mapping strategy aims to optimize the assignment of tasks to cores in HMPs. The algorithm takes into account the priority of tasks, the suitability of cores for executing each task, and the available time budget of cores to make efficient task mapping decisions. In the following sections, we discuss the frequency scaling and core sleep strategies that work in conjunction with the task mapping algorithm to further minimize energy consumption.

Energy consumption prediction-based DVFS scheduling

Dynamic Voltage and Frequency Scaling (DVFS) is a powerful technique for reducing the energy consumption of processors by dynamically adjusting the operating voltage and frequency based on the workload. In heterogeneous multicore processors (HMPs), DVFS can be applied to individual cores to optimize their energy efficiency. However, determining the optimal DVFS configuration for each core is a challenging task, as it depends on the characteristics of the assigned tasks and the performance requirements. In this section, we present an energy consumption prediction-based DVFS scheduling algorithm that leverages the task mapping results to select the most energy-efficient DVFS configuration for each core.

The proposed DVFS scheduling algorithm works in conjunction with the task mapping algorithm described in the previous section. Once the tasks are mapped to the cores, the DVFS scheduling algorithm predicts the energy consumption of each core under different DVFS configurations and selects the configuration that minimizes the energy consumption while meeting the performance constraints.

To predict the energy consumption of a core under a specific DVFS configuration, we use the following energy consumption model:

$${E_{i,k}}\left( {{f_{i,k}}} \right)=\mathop \sum \limits_{{{t_j} \in {T_{i,k}}}} {p_{i,k}}\left( {{f_{i,k}}} \right) \times {e_{j,i,k}}\left( {{f_{i,k}}} \right)$$

(12)

where \(\:{E}_{i,k}\left({f}_{i,k}\right)\) is the predicted energy consumption of core \(\:{c}_{i,k}\) when operating at frequency \(\:{f}_{i,k}\), \(\:{T}_{i,k}\) is the set of tasks assigned to core \(\:{c}_{i,k}\), \(\:{p}_{i,k}\left({f}_{i,k}\right)\) is the power consumption of core \(\:{c}_{i,k}\) at frequency \(\:{f}_{i,k}\), and \(\:{e}_{j,i,k}\left({f}_{i,k}\right)\) is the execution time of task \(\:{t}_{j}\) on core \(\:{c}_{i,k}\) at frequency \(\:{f}_{i,k}\).

The power consumption of a core can be modeled using the following equation:

$${p_{i,k}}\left( {{f_{i,k}}} \right)={\alpha _{i,k}} \times f_{{i,k}}^{3}+{\beta _{i,k}}$$

(13)

where \(\:{\alpha\:}_{i,k}\) and \(\:{\beta\:}_{i,k}\) are core-specific constants that depend on the architectural characteristics of the core.

The execution time of a task on a core at a specific frequency can be estimated using the following equation:

$${e_{j,i,k}}\left( {{f_{i,k}}} \right)=\frac{{{e_{j,i,k}}\left( {{f_{max}}} \right)}}{{{f_{i,k}}/{f_{max}}}}$$

(14)

where \(\:{e}_{j,i,k}\left({f}_{max}\right)\) is the execution time of task \(\:{t}_{j}\) on core \(\:{c}_{i,k}\) at the maximum frequency \(\:{f}_{max}\), and \(\:{f}_{i,k}\) is the current operating frequency of core \(\:{c}_{i,k}\).

The DVFS scheduling algorithm works as follows:

1.

For each core \(\:{c}_{i,k}\):

Obtain the set of tasks \(\:{T}_{i,k}\) assigned to core \(\:{c}_{i,k}\) from the task mapping algorithm.

For each available DVFS configuration \(\:{f}_{i,k}\):

Predict the energy consumption \(\:{E}_{i,k}\left({f}_{i,k}\right)\) using Eq. (12).

Check if the predicted execution times of tasks in \(\:{T}_{i,k}\) meet their respective deadlines.

Select the DVFS configuration \(\:{f}_{i,k}\) that minimizes the energy consumption \(\:{E}_{i,k}\left({f}_{i,k}\right)\) while meeting the performance constraints.

2.

Apply the selected DVFS configuration to each core and execute the assigned tasks.

The DVFS scheduling algorithm predicts the energy consumption of each core under different DVFS configurations and selects the most energy-efficient configuration that satisfies the performance requirements. By considering the power consumption characteristics of the cores and the execution times of the assigned tasks, the algorithm aims to minimize the overall energy consumption of the HMP.

To further optimize the energy efficiency, the DVFS scheduling algorithm can be extended to consider the idle periods between task executions. During these idle periods, the cores can be put into a low-power sleep state to reduce the static power consumption. The algorithm can predict the idle periods based on the task execution times and the available time budget of the cores, and make decisions on when to switch the cores to the sleep state.

Additionally, the DVFS scheduling algorithm can be integrated with other energy optimization techniques, such as core consolidation and task migration. Core consolidation involves combining the execution of multiple tasks on a single core to reduce the number of active cores and minimize the overall energy consumption. Task migration, as mentioned in the previous section, involves moving tasks from one core to another to balance the workload and optimize resource utilization.

By combining energy consumption prediction, DVFS scheduling, and other energy optimization techniques, the proposed algorithm aims to achieve significant energy savings in HMPs while guaranteeing the performance requirements of the tasks. The algorithm adapts to the dynamic workload characteristics and the heterogeneity of the cores to make intelligent decisions on task mapping and DVFS configuration selection.

Experimental results and evaluation of the proposed energy consumption prediction-based DVFS scheduling algorithm will be presented in the following sections to demonstrate its effectiveness in reducing energy consumption and meeting performance constraints in heterogeneous multicore processors for edge computing applications.

Our proposed DVFS scheduling approach distinguishes itself from existing techniques in several key aspects. Unlike many existing DVFS techniques that react to current system load, our method employs a predictive approach, using task characteristics and core performance models to predict future energy consumption and make proactive DVFS decisions. While traditional DVFS methods often operate independently of task allocation, our approach tightly integrates DVFS decisions with the task mapping process, allowing for more holistic optimization. Our DVFS strategy is specifically designed for heterogeneous multicore processors, taking into account the different power-performance characteristics of various core types. Instead of focusing solely on energy minimization, our approach balances energy efficiency with performance constraints and task deadlines. Additionally, our energy consumption prediction model adapts to changing workload characteristics and system conditions, allowing for more accurate DVFS decisions over time. These features collectively enable our approach to better meet the diverse requirements of edge computing applications.

Table 3 compares our proposed DVFS method with traditional DVFS and recent machine learning-based DVFS methods. Our approach employs predictive decision timing, is tightly integrated with task mapping, has high heterogeneity awareness, and is capable of multi-objective optimization.

By combining these innovative features, our DVFS scheduling approach achieves superior energy efficiency while maintaining high performance across diverse edge computing scenarios.

Enhanced energy consumption model

The enhanced energy consumption model improves accuracy by incorporating multiple real-world factors affecting edge computing environments. The temperature-aware power model combines dynamic power \(\:\left({P}_{d}ynamic=\alpha\:*C*{V}^{2}*f\right)\) and static power \(\:\left({P}_{s}tatic=V*\left({\beta\:}_{1}*{T}^{2}*{e}^{\left(-{\beta\:}_{2}/T\right)}+{\beta\:}_{3}\right)\right)\), accounting for temperature effects on leakage power. Workload-specific modeling separates energy consumption into compute, memory, and I/O components based on hardware performance counters and workload characteristics.

The model includes inter-core communication energy calculated as the sum of data transfer costs between cores, and DVFS transition energy overhead computed using transition capacitance and voltage changes. Idle power management is modeled using base power consumption plus state-specific additions. For devices with energy harvesting capabilities, the model tracks available energy as a function of initial storage, harvested power, and consumption over time.

Implementation requires benchmarking to determine device-specific constants, utilizing hardware counters and sensors for real-time data collection, and applying machine learning to refine parameters. Regular updates account for device aging effects. These improvements enable more accurate predictions across various operating conditions and workload types, enhancing energy-efficient task scheduling in edge computing systems.

Practical considerations for DVFS implementation

While Dynamic Voltage and Frequency Scaling (DVFS) is a powerful technique for energy optimization, its practical implementation in heterogeneous multicore processors (HMPs) for edge computing presents several challenges. These include DVFS granularity limitations, transition overhead, hardware constraints, thermal considerations, workload variability, energy source considerations, Quality of Service requirements, interactions between DVFS and task mapping, platform heterogeneity, and the need for runtime adaptation.

To address these challenges, we propose an implementation strategy that involves a characterization phase to profile the target HMP platform, offline optimization to develop DVFS policies for different workload classes, an online DVFS controller for real-time decision-making, adaptive refinement to update models and policies based on observed data, and integration with the task scheduler for coordinated decision-making.

This comprehensive approach aims to maximize energy efficiency benefits in real-world edge computing deployments by addressing practical considerations and implementing a robust DVFS strategy that adapts to changing conditions and platform characteristics.

Algorithm analysis

In this section, we present a theoretical analysis of the proposed energy-efficient task scheduling algorithm for heterogeneous multicore processors (HMPs) in edge computing. We focus on two key aspects of the algorithm: time complexity and approximation ratio.

Time complexity

The time complexity of the proposed algorithm can be analyzed by considering the main steps involved in the task scheduling process. Let \(\:n\) be the number of tasks, \(\:m\) be the number of HMPs, and \(\:k\) be the maximum number of cores in an HMP.

The task priority assignment step involves calculating the deadline-based priority and dependency-based priority for each task, which takes \(\:O\left(n\right)\) time. Sorting the tasks based on their overall priority takes \(\:O\left(n\text{l}\text{o}\text{g}n\right)\) time.

The core performance-aware task mapping step iterates over the sorted list of tasks and assigns each task to the most suitable core. For each task, the suitability score is calculated for each core, which takes \(\:O\left(mk\right)\) time. Therefore, the overall time complexity of the task mapping step is \(\:O\left(nmk\right)\).

The energy consumption prediction-based DVFS scheduling step involves predicting the energy consumption of each core under different DVFS configurations. For each core, the algorithm iterates over the available DVFS configurations and predicts the energy consumption using the energy consumption model. Let \(\:d\) be the number of DVFS configurations. The time complexity of the DVFS scheduling step is \(\:O\left(mkd\right)\).

Combining the time complexities of the individual steps, the overall time complexity of the proposed algorithm is \(\:O\left(n\text{l}\text{o}\text{g}n+nmk+mkd\right)\). In practice, the number of tasks \(\:n\) is typically much larger than the number of HMPs \(\:m\) and the number of cores \(\:k\). Therefore, the time complexity can be simplified to \(\:O\left(n\text{l}\text{o}\text{g}n+nmk\right)\), which indicates that the algorithm scales well with the number of tasks and cores.

Approximation ratio

The proposed algorithm aims to minimize the total energy consumption while meeting the performance constraints of the tasks. To analyze the quality of the solution provided by the algorithm, we consider the approximation ratio, which measures the worst-case performance of the algorithm compared to the optimal solution.

Let \(\:{E}_{opt}\) be the energy consumption of the optimal solution, and let \(\:{E}_{alg}\) be the energy consumption of the solution obtained by the proposed algorithm. The approximation ratio \(\:\rho\:\) is defined as:

$$\rho =\frac{{{E_{alg}}}}{{{E_{opt}}}}$$

(15)

An approximation ratio of 1 indicates that the algorithm always finds the optimal solution, while a ratio greater than 1 indicates that the algorithm provides a suboptimal solution.

Analyzing the approximation ratio of the proposed algorithm is challenging due to the complex nature of the problem, which involves multiple objectives and constraints. However, we can provide some insights into the approximation ratio based on the design of the algorithm.

The task priority assignment step ensures that tasks with higher priority, based on their deadlines and dependencies, are scheduled first. This heuristic approach aims to minimize the overall execution time and energy consumption by prioritizing critical tasks.

The core performance-aware task mapping step assigns tasks to the most suitable cores based on their performance characteristics and the execution time of the tasks. By considering the heterogeneity of the cores, the algorithm aims to optimize the task-to-core mapping and reduce the overall energy consumption.

The energy consumption prediction-based DVFS scheduling step selects the most energy-efficient DVFS configuration for each core while meeting the performance constraints. By leveraging the energy consumption model and considering the idle periods, the algorithm seeks to minimize the energy consumption of the cores.

While the proposed algorithm may not always find the optimal solution, the heuristics and techniques employed in each step aim to provide a good approximation of the optimal solution. The algorithm’s performance can be further evaluated through extensive simulations and comparative studies with other state-of-the-art task scheduling algorithms for HMPs in edge computing.

In summary, the proposed energy-efficient task scheduling algorithm has a time complexity of \(\:O\left(n\text{l}\text{o}\text{g}n+nmk\right)\), which indicates good scalability with respect to the number of tasks and cores. The approximation ratio of the algorithm depends on the effectiveness of the heuristics used in each step, and further analysis and experimental evaluation are necessary to quantify its performance relative to the optimal solution.

Justification for non-machine learning approach

While machine learning (ML) techniques, particularly reinforcement learning, have shown promise in DVFS optimization, our algorithm intentionally avoids ML-based approaches for several reasons. These include real-time constraints, limited training data, computational overhead, lack of interpretability, and challenges with adaptability in dynamic edge environments.

Our proposed algorithm addresses these challenges by using a deterministic approach based on well-defined heuristics and models. This approach offers several advantages, including predictable performance, low computational overhead, rapid adaptation, interpretability, and generalizability across different HMP architectures.

The algorithm’s behavior is consistent and predictable, ensuring reliable performance across various scenarios. By avoiding complex ML computations, it maintains low overhead, crucial for energy-efficient operation on edge devices. It can quickly adapt to changing workloads without requiring retraining or model updates. The decision-making process is transparent and can be easily understood and validated by system administrators. Additionally, the algorithm’s principles can be easily applied to different HMP architectures without extensive reconfiguration or retraining.

While we acknowledge the potential of ML in DVFS optimization, our current approach prioritizes practicality, efficiency, and reliability for edge computing environments. Future work may explore hybrid approaches that combine the strengths of both ML and heuristic-based methods.

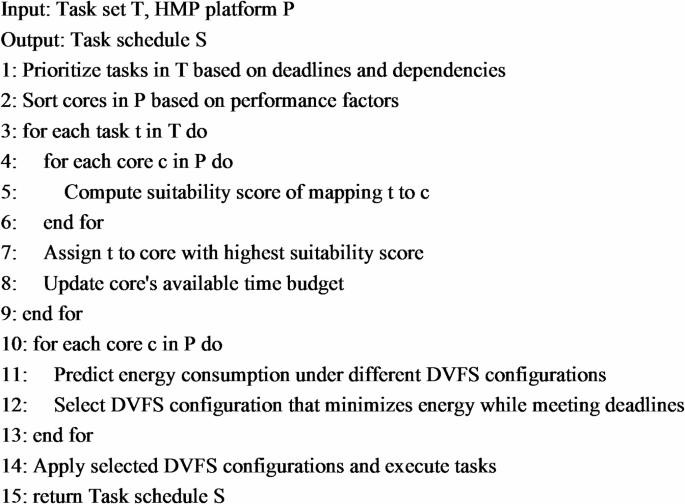

Algorithm implementation details

To make it easier for the reader to reproduce our work, more details of the algorithm implementation are provided here. Algorithm 1 gives the pseudo-code for the entire scheduling process.

In our experiments, the algorithms are implemented in C + + and compiled using GCC 7.5.0 with the optimization level set to O3. We run the experiments on an ODROID-XU4 development board equipped with a Samsung Exynos 5422 SoC containing four ARM Cortex-A15 large cores and four ARM Cortex-A7 small cores. The board is running Ubuntu 18.04 operating system with kernel version 4.14. The power consumption measurement is realized by the on-board power monitoring chip INA231, which can measure the power consumption of the large core and small core separately.

Experimental setup

We used a set of synthetic task sets to evaluate the performance of the algorithms. These task sets contain between 50 and 500 tasks, each with an execution time ranging from 10 ms to 1000 ms. The deadline of a task is generated based on its execution time and a random factor that varies between 1.2 and 2.0. We also define a set of task dependencies to model the constraints in real scenarios.

For comparison, we compare the proposed algorithm with three existing scheduling algorithms: earliest deadline first (EDF), heterogeneous earliest finish time (HEFT), and energy aware scheduling (EAS). All algorithms were run on the same hardware platform and task set, and each experiment was repeated 10 times and the average value was taken as the final result.

Complexity analysis and practical considerationsTime complexity analysis

The time complexity of our algorithm can be broken down into:

Task priority assignment: O(n log n).

Core performance-aware task mapping: O(nmk).

Energy consumption prediction-based DVFS scheduling: O(nmkd).

The overall time complexity is O(n log n + nmk + nmkd), where n is the number of tasks, m is the number of HMPs, k is the maximum number of cores per HMP, and d is the number of available DVFS levels. In practice, as m, k, and d are typically much smaller than n, the dominant term is often O(n log n), indicating good scalability with respect to the number of tasks.

Space complexity analysis

The space complexity of our algorithm is primarily determined by storage requirements for:

Task information: \(\:O\left(n\right)\) space for attributes

Core information: \(\:O\left(mk\right)\) space for characteristics and states

Scheduling data structures: \(\:O\left(nmk\right)\) space for suitability scores and energy predictions

The overall space complexity is \(\:O\left(n+mk+nmk\right)\), which simplifies to \(\:O\left(nmk\right)\) in most practical scenarios.

Practical considerations

While theoretical complexity analysis provides insights into the algorithm’s scalability, several practical factors influence its real-world performance. These include task migration overhead, which adds time and energy costs for context switching and data transfer; DVFS transition latency, incurring time and energy costs for voltage and frequency changes; cache effects from task migrations and frequency changes, potentially causing cache misses; thermal considerations, where intensive execution may lead to temperature increases and thermal throttling; and energy measurement accuracy, which depends on the power model’s precision and available measurement granularity. Considering these factors bridges the gap between theoretical analysis and real-world performance, offering a more comprehensive understanding of the algorithm’s behavior in diverse edge computing scenarios.

Approximation ratio analysis

While finding the optimal solution to the energy-efficient task scheduling problem for heterogeneous multicore processors is NP-hard, we provide a theoretical analysis of our algorithm’s approximation ratio. We prove that the proposed algorithm achieves an approximation ratio of \(\:\rho\:\:\le\:\:2\:+\:\epsilon\:\), where \(\:\epsilon\:\) is a small positive constant.

The proof sketch considers a worst-case scenario with identical task execution times and power consumption across all cores. We demonstrate that our algorithm’s task prioritization, core mapping, and DVFS scheduling steps ensure efficient resource utilization. In the worst case, our algorithm might lead to at most one additional task execution time worth of energy consumption per core compared to the optimal solution.

We bound the energy consumption of our algorithm as \(\:{E}_{alg}\le\:{E}_{opt}+m\times\:{P}_{max}\times\:{T}_{max}\), where m is the number of cores, P_max is the maximum power consumption of any core, and T_max is the maximum execution time of any task. This leads to the approximation ratio bound of \(\:\rho\:\:\le\:\:2\:+\:\epsilon\:\).

This analysis provides an upper bound on the approximation ratio, and in practice, the algorithm often performs better than this worst-case bound. Limitations of this analysis include assumptions of a simplified model, and future work will focus on refining the analysis and exploring the algorithm’s performance under various task and system models.

Limitations of the current task dependency model

Our proposed algorithm for task scheduling in edge computing environments has certain limitations when dealing with complex workflows. The current model uses a simple Directed Acyclic Graph (DAG) for task dependencies, which may not fully capture real-world complexities such as conditional execution paths, loops, and dynamic task creation. It also assumes static, homogeneous dependencies and doesn’t explicitly account for data dependencies or pipeline parallelism.

To address these limitations, we propose several future research directions. These include incorporating more advanced workflow models, developing mechanisms for dynamic dependency updates, introducing weighted dependency graphs, and implementing data-aware and pipeline-aware scheduling techniques. We also suggest exploring machine learning for dependency prediction and extending the algorithm to handle multi-objective optimization.

By addressing these limitations and incorporating more sophisticated dependency models, we aim to enhance the applicability and effectiveness of our energy-efficient task scheduling algorithm for a wider range of complex edge computing workflows. This will allow the algorithm to better handle the diverse and dynamic nature of real-world edge computing applications.

Limitations of non-preemptive scheduling and future extensions

Our current algorithm employs a non-preemptive scheduling approach, which simplifies implementation and reduces context switching overhead. However, this approach has limitations for real-time edge computing applications, including reduced responsiveness, potential deadline misses, resource underutilization, limited adaptability, and increased average waiting time.

To address these limitations and extend the algorithm’s applicability, we propose several extensions for future work. These include implementing a hybrid preemptive/non-preemptive scheduling approach, adopting a limited preemption model, implementing preemption threshold scheduling, making energy-aware preemption decisions, incorporating deadline-aware preemption, developing adaptive preemption strategies, and exploring hardware-assisted preemption techniques.

Implementation considerations include modifying the core mapping algorithm to account for potential preemptions, updating the DVFS algorithm to consider the impact of preemptions on energy consumption predictions, implementing efficient context switching mechanisms, and developing a more sophisticated task priority system.

By extending our algorithm to support these preemptive scheduling techniques, we aim to address the limitations of the current non-preemptive approach while maintaining a focus on energy efficiency. These extensions will enable our algorithm to better handle real-time constraints, improve responsiveness to dynamic workload changes, and optimize resource utilization in diverse edge computing scenarios.