A Carbondale woman was recently charged with possession of child sexual abuse material after police said they found images of young children engaged in sex acts on her cellphone.

And although the three third-degree felony charges are very real, the images weren’t.

Instead, police say, they were generated by artificial intelligence, which draws on existing images and detailed text descriptions to create new ones. Many such images look very real.

The case is the first of its kind to be brought in Lackawanna County, facilitated by a law passed several months ago.

In October, Gov. Josh Shapiro signed a bill into law, after it passed the state House and Senate unanimously, expanding the definition of child sex abuse materials to include AI-generated images of children, identifiable or not.

Act 125 of 2024 also makes changes to the language of the law, replacing the term “child pornography” with “child sexual abuse material,” more accurately describing chargeable offenses, lawmakers argued.

The recent case reflects law enforcement’s response to ever-changing technology — and a growing problem.

In 2024, the National Center for Missing & Exploited Children’s CyberTipline saw a 1,325% increase in reports involving generative AI, jumping from 4,700 in 2023 to 67,000 reports last year.

The use of artificial intelligence to create illegal images has frequently been in the news.

For example, psychiatrist David Tatum was found guilty of possessing child sexual abuse material in May 2023 in federal court in the Western District of North Carolina.

He used AI to turn innocent images of children, including photos of classmates taken decades earlier, into pornographic images.

In court, some of those classmates gave victim impact statements, saying treasured memories captured in photos had been turned into images that elicit fear and distrust. Some spoke of photos taken on the first day of school or at sporting events, saying those memories were forever tarnished.

Tatum was sentenced to 40 years behind bars.

Investigators were able to bring the case because they could identify people depicted in the generated photos. They made phone calls. Talked to victims. Heard stories. And made their case.

But law enforcement officers had a problem. What if the people in the images couldn’t be identified? What if they were an unidentifiable compilation?

Law enforcement officers would often hit an impasse: they knew a predator intended to possess the illegal image, but they could not trace it to a real person, and so could not call it illegal.

Over the course of the last several months, however, both the state and federal governments have answered that concern, making it illegal to possess computer-generated child sexual abuse images, even if the image could not be traced back to a specific person.

![]() Lackawanna County District Attorney Brian Gallagher

Lackawanna County District Attorney Brian Gallagher

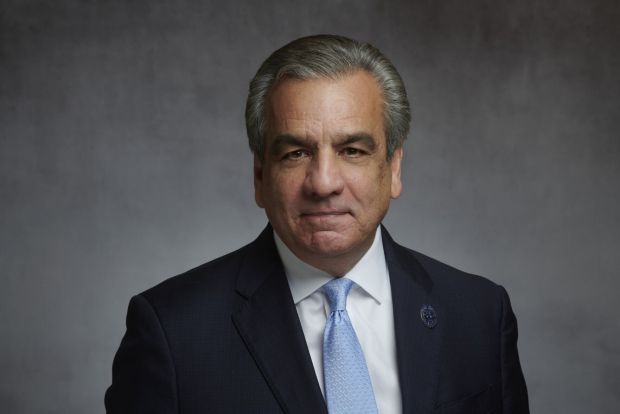

Wyoming County District Attorney Joe Peters

Wyoming County District Attorney Joe Peters

Ismail Onat, Associate Professor University of Scranton (SUBMITTED)

Ismail Onat, Associate Professor University of Scranton (SUBMITTED)

Lackawanna County District Attorney Brian Gallagher expects more cases involving artificial intelligence to be brought in the county.

“This is the first AI-generated child pornography case that we’ve prosecuted in Lackawanna County, but unfortunately, it will not be the last,” he said. “Lackawanna County law enforcement will continue to be proactive and aggressively investigate, arrest and prosecute child predators and those who manufacture child sexual abuse material.”

Sandra Rogers, 35, the defendant in the Carbondale case, was charged on May 12 and waived her preliminary hearing on May 27, sending the case to county court. If she is found guilty, each third-degree felony charge carries a maximum sentence of seven years in prison and a $15,000 fine.

A new tool

Wyoming County District Attorney Joe Peters said the new law provides an additional tool for law enforcement to identify and prosecute predators.

“You can now bring charges, even when there was never a real person involved,” he said. “Before the changes in the law there would have been challenges to that sort of child pornography. In fact that term has been redone to more accurately reflect what’s going on. Because, in some cases there is no child and the pornography didn’t actually happen — it’s AI generated, we can still charge it.”

Peters said the law also extends law enforcement’s reach in response to technology that is weaponized against innocent, nonconsenting people to extort money, humiliate them, turn a profit or provide sexual gratification.

It covers instances where criminals use technology to superimpose an innocent person’s face onto someone else’s body.

Human traffickers often use such images to extort young women, Peters said, first grooming them with flirtatious conversation and then asking for a photo.

“Once you send that photo, they own you,” he said.

State Sen. Tracy Pennycuick, a Republican representing parts of Berks and Montgomery counties and one of the sponsors of the state legislation, said the use of artificial intelligence to produce child sexual abuse images “shocks the conscience.”

“We need to do everything we can to prevent individuals from using AI for these insidious purposes,” said Pennycuick, who chairs the Senate Communications and Technology Committee. “By working in a bipartisan manner, the General Assembly is making it clear that Pennsylvania is not the place for this depraved activity.”

‘Take It Down’ includes AI-generated images

Ismail Onat, associate professor of criminal justice, cybersecurity and sociology at the University of Scranton, said that just as state law is adapting to emerging technology, so is federal law.

The Take It Down Act, signed into law by President Donald Trump on May 19, makes it a federal crime to post sexually explicit images of a person online without consent, including images generated by artificial intelligence.

First lady Melania Trump, who helped usher the legislation through Congress, called it a “national victory” helping to protect children from online exploitation and deterring the use of artificial intelligence to make fake images.

“AI and social media are the digital candy for the next generation, sweet, addictive and engineered to have an impact on the cognitive development of our children,” she said. “But unlike sugar, these new technologies can be weaponized, shape beliefs and, sadly, affect emotions and even be deadly.”

Onat said that in a world where artificial intelligence can generate realistic images of nonexistent children in sexual scenarios, known as “deepfakes,” the legislation is definitely a step in the right direction.

Onat, who also leads the university’s Center for the Prevention & Analysis of Crime, said law enforcement has always had to adapt to new inventions and technology, and must do so ever faster as the pace of innovation quickens.

When cars were invented, for example, they let people travel from one place to another more quickly. But they also opened the door to numerous infractions, from speeding to operating unsafe vehicles.

Onat pointed out that law enforcement first had to understand the nature of the new invention before laws governing it could be put in place.

The same goes for technology, he said. As artificial intelligence evolves and becomes more and more refined, government and law enforcement agencies must react quickly.

Because law enforcement didn’t create the new technology, it takes some time for them to understand it, he said.

Onat credits state and federal law enforcement agencies with consistency in identifying and addressing new technologies as they unfold.

The FBI, for example, investigates cases involving artificial intelligence, particularly those that fall under the category of child sexual abuse material.

Higher education is also evolving in response to the need for oversight of new technology, he said.

The university offers a cybercrime and homeland security major, designed to address the need to investigate and protect information in cyberspace.

“The goal of the program is to form the cybercrime investigators, digital forensic examiners, information security analysts, and national security analysts of tomorrow,” according to the university’s website.

The program now includes an internship at the Lackawanna County district attorney’s office, where students hone their cybersecurity skills by extracting data from cellphones, analyzing it, evaluating it with detectives and presenting it to the prosecution team before trial.

The program prepares students to help law enforcement keep up with ever-evolving technology, which Onat said is helpful to most people but also often misused by criminals.

Originally Published: June 8, 2025 at 12:00 AM EDT