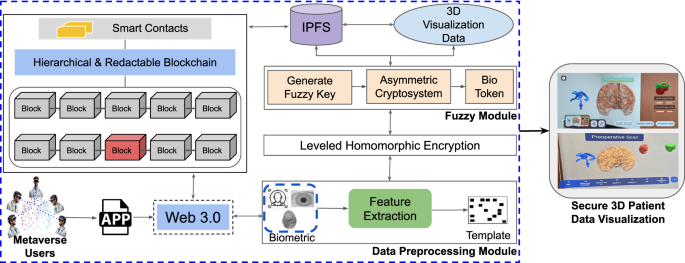

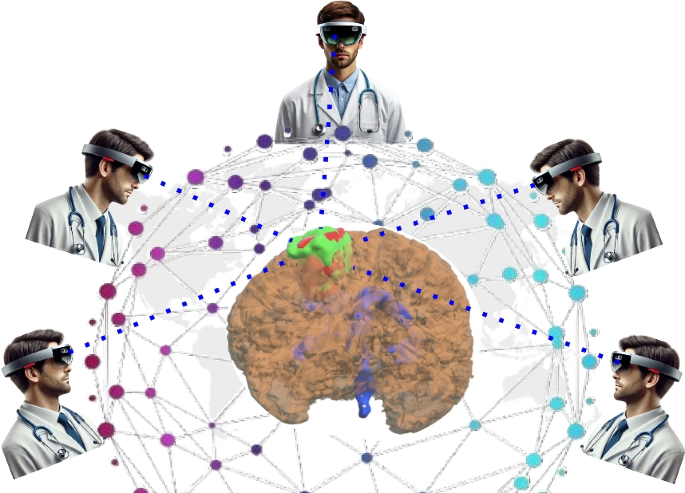

The architecture of the proposed framework is illustrated in Fig. 3. The process begins with a designated set of Metaverse users (medical experts) collaboratively accessing the system through a Web 3.0-enabled application. This interface serves as a gateway to the decentralized visualization platform, which verifies each user through biometric authentication. The user’s biometric input is processed by a data preprocessing module, where key features are extracted and transformed into a unique biometric template. This template is then passed to a fuzzy module, which generates a fuzzy key used for encryption and identification. Note that the framework grants access to the visualization if and only if all the designated experts authenticate and authorize simultaneously, as shown in Fig. 3.

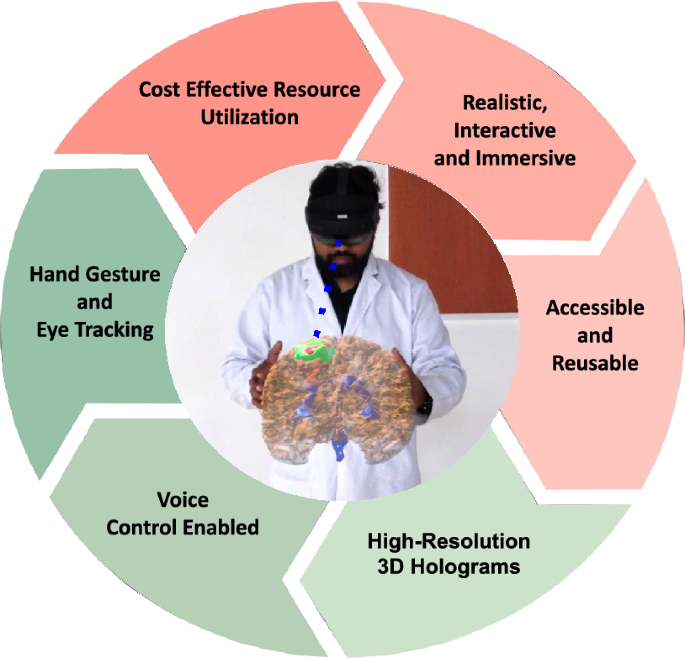

Proposed framework for Biometric-Based Secure 3D Patient Data Visualization, which integrates advanced biometric authentication with a hierarchical redactable blockchain and a hybrid encryption approach by combining leveled HE with a modified FV scheme to enable secure, efficient, and immersive XR-based visualization.

Next, the fuzzy module produces a secure bio token using an asymmetric cryptosystem, which ensures that each session and user is uniquely verified. The generated bio token is then used within the leveled HE module, which performs secure computations on encrypted patient data without the need for decryption. This approach preserves data privacy while allowing computations and visualization to occur securely. The encrypted data is then logged on a hierarchical and redactable blockchain that supports smart contracts, as shown in Fig. 3. This blockchain infrastructure provides secure, tamper-resistant storage with the added capability of redaction under authorized conditions, while also enabling remote collaborative investigation and surgical planning. The patient data is ultimately stored in a decentralized manner using IPFS, ensuring that the data is distributed, immutable, and available across nodes, supporting the integrity and scalability of the system. Upon successful authentication and decryption, the relevant 3D patient data is retrieved and rendered within the XR environment. This immersive visualization allows medical practitioners to interact with secure, high-fidelity medical data models, enhancing both understanding and collaboration. The proposed architecture is implemented in multiple phases, as described below.

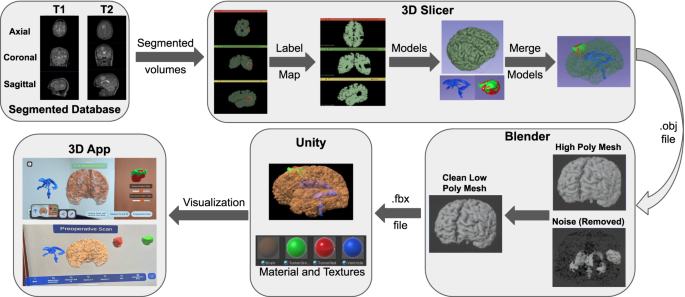

The process of XR-based 3D visualization: segmented MRI volumes from T1 and T2 scans are converted to label maps and 3D models using 3D Slicer. These models are merged, cleaned, and optimized in Blender to reduce polygon complexity. Final models are imported into Unity with textures for real-time visualization. The resulting application enables detailed AR/MR-based interactive exploration of brain anatomy and tumor data.

Phase 1: XR-based 3D visualization system

This paper presents a novel framework for 3D visualization of patient data through XR-based interactive technology for brain MRI, as depicted in Fig. 4. The framework requires a high-resolution brain MRI scan from the patient. The segmented structures and regions of interest are then rendered in 3D using visualization techniques such as volume rendering and surface rendering. Subsequently, an XR application for 3D visualization is developed so that the 3D renderings can be superimposed on a real-world environment, using marker-less tracking methods for registration and alignment. Interactivity features including rotation, zooming, and measurement are implemented in the XR environment through an intuitive user interface with ‘pinch and stretch’ gestures. A view collaboration feature is also provided to increase the clinical utility of the system.

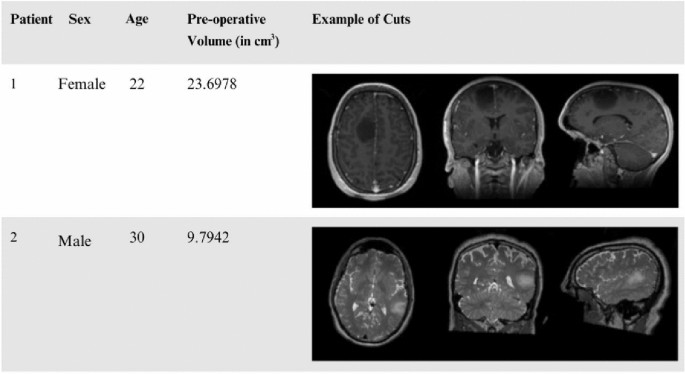

Sample demographic and imaging data from the ReMIND brain tumor MRI dataset [20] used in the XR application.

Database description

All brain tumors require maximal safe surgical resection. Neuronavigation augments the surgeon’s ability to achieve this; however, surgery is an evolving process, and the preoperative information becomes outdated as brain shift develops. More importantly, high-grade gliomas are usually indistinguishable from the surrounding healthy brain tissue. Here we used ReMIND, the largest publicly available database [20]. It was compiled from 123 consecutive patients treated surgically with image-guided tumor resection in the AMIGO Suite at Brigham and Women’s Hospital (Boston, USA) between November 2018 and August 2022, with both iUS and iMRI. Nine of these cases were excluded because of corrupted or poor-quality data, leaving 114 cases. The reasons for exclusion were large iMRI artifacts (N = 1), 3D iMRI corruption (N = 1), and poor quality of the intra-operative iUS before the opening of the dura (N = 7). The database therefore contains preoperative MRIs for 114 consecutive patients who were surgically treated for brain tumors: 92 patients with gliomas, 11 with metastases, and 11 with other brain tumors. This increases the potential for research in brain shift and, in particular, image analysis, as well as neurosurgical training in the interpretation of iMRI. A sample of the data demographics is reported in Fig. 5, which includes patient ID, gender, age, preoperative tumor volume, and sample 2D DICOM slices.

Development workflow

The complete development workflow is presented in Fig. 4, including all the steps described below to obtain the required system.

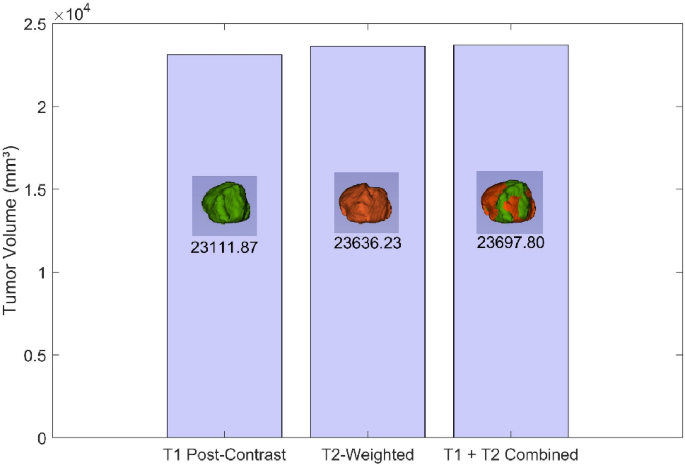

(a) Data preparation: The initial step involved acquiring segmented files in Nearly Raw Raster Data (NRRD) format. These files were obtained from the ReMIND database and included information on brain structures such as the cerebrum, the ventricles, and the tumor. Each patient had two types of scans: pre-operation and intra-operation. The pre-operation scans used two methods: T1-weighted post-contrast imaging and T2-weighted imaging, as shown in Figs. 4 and 6. T1 post-contrast imaging enhances the visibility of the tumor by using a contrast agent, making it easier to delineate the tumor boundaries. In contrast, T2-weighted imaging is more sensitive to fluid content and generally shows a larger tumor volume because it also captures the edema surrounding the tumor.

(b) 3D visualization: The segmented files were then imported into the 3D Slicer software [21], a comprehensive platform for medical image informatics. In 3D Slicer, the segmented files were loaded as volumetric data. A volume in this context refers to a 3D dataset where each voxel (3D pixel) holds intensity information, representing the scanned brain and its components. For each patient, this process was done separately for the pre-operation scans (both T1 post-contrast and T2-weighted) and the intra-operation scans showing the residual tumor.

Volume to label map conversion: Within 3D Slicer, the volumetric data was converted into label maps, a type of image in which each voxel is assigned a specific integer label representing an anatomical structure, such as the cerebrum, ventricles, or tumor. This conversion is crucial because it simplifies the delineation of the regions of interest for subsequent modelling.
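To make the idea of a label map concrete, the following minimal Python sketch merges per-structure binary masks into a single integer-labelled volume. It is an illustration only and not the 3D Slicer implementation: the integer values in `LABELS`, the function name `to_label_map`, and the rule that later structures overwrite earlier ones on overlap are all assumptions.

```python
from typing import Dict, List

Volume = List[List[List[int]]]  # indexed as [z][y][x]

# Hypothetical label values; 3D Slicer assigns its own integers per segment.
LABELS: Dict[str, int] = {"cerebrum": 1, "ventricles": 2, "tumor": 3}


def to_label_map(masks: Dict[str, Volume]) -> Volume:
    """Merge binary masks into one label map (0 = background).

    Assumption: structures listed later in LABELS overwrite earlier ones
    wherever masks overlap, so the tumor wins over the cerebrum.
    """
    first = next(iter(masks.values()))
    depth, height, width = len(first), len(first[0]), len(first[0][0])
    label_map = [[[0] * width for _ in range(height)] for _ in range(depth)]
    for name, label in LABELS.items():
        mask = masks.get(name)
        if mask is None:
            continue  # structure not segmented for this scan
        for z in range(depth):
            for y in range(height):
                for x in range(width):
                    if mask[z][y][x]:
                        label_map[z][y][x] = label
    return label_map
```

Each voxel of the result carries exactly one integer, which is what lets the later modelling step treat every structure as a separate region.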

3D model creation: Every label map was processed in 3D Slicer to produce a matching 3D surface model. In this step, the voxel-based label maps were translated into polygonal meshes that faithfully capture the 3D structure of each anatomical component. The brain, ventricles, and tumor were all depicted in detail in the final models, as shown in Fig. 4. Three distinct 3D models were made for each patient: the T2-weighted pre-operation scan, the T1 post-contrast pre-operation scan, and the intra-operation scan that revealed the residual tumor, as illustrated in Figs. 4 and 6.
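The translation from labelled voxels to a polygonal surface can be pictured with a deliberately simple boundary-face ("cuberille") extraction, sketched below. This is a swapped-in technique chosen for brevity: 3D Slicer generates smoother triangle meshes with its own surface pipeline, and the function name `boundary_faces` and the face representation are assumptions made for this example.

```python
from typing import List, Tuple

Volume = List[List[List[int]]]  # indexed as [z][y][x]
# A face is identified by the voxel it belongs to and its outward normal.
Face = Tuple[Tuple[int, int, int], Tuple[int, int, int]]

NEIGHBORS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def boundary_faces(label_map: Volume, label: int) -> List[Face]:
    """Return every voxel face that separates `label` from anything else."""
    depth, height, width = len(label_map), len(label_map[0]), len(label_map[0][0])
    faces: List[Face] = []
    for z in range(depth):
        for y in range(height):
            for x in range(width):
                if label_map[z][y][x] != label:
                    continue
                for dx, dy, dz in NEIGHBORS:
                    nx, ny, nz = x + dx, y + dy, z + dz
                    inside = 0 <= nx < width and 0 <= ny < height and 0 <= nz < depth
                    # A face lies on the surface if its neighbor is outside
                    # the volume or carries a different label.
                    if not inside or label_map[nz][ny][nx] != label:
                        faces.append(((x, y, z), (dx, dy, dz)))
    return faces
```

Each returned face could be emitted as two triangles to obtain a closed, if blocky, mesh of the labelled structure.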

(c) Model integration: The next stage was to combine the separate 3D models to create a complete brain model that featured the ventricles and tumor. Because this integration was carried out within 3D Slicer, the spatial relationships between the different structures were preserved. To enable in-depth comparison and analysis, distinct models were kept for each scan variation (T1 and T2 pre-operation, and intra-operation).

(d) Model optimization: This stage focuses on transforming the high-fidelity segmented anatomical models into lightweight, real-time compatible assets suitable for interactive XR rendering, while preserving anatomical accuracy and visual quality.

Polygon reduction: The combined 3D model was exported from 3D Slicer in the OBJ file format. The OBJ file was then processed in Instant Meshes, a software tool for mesh simplification, to make it efficient for real-time use. This step lowered the polygon count, reducing both the size and the complexity of the mesh, which is essential for optimizing the model for AR.
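The effect of polygon reduction can be illustrated with vertex clustering, one of the simplest decimation schemes. The sketch below is not what Instant Meshes does (that tool performs field-aligned remeshing); it is a stand-in chosen because it fits in a few lines. The function name `cluster_decimate` and the `cell` parameter are assumptions for this example.

```python
from typing import Dict, List, Sequence, Tuple

Vertex = Tuple[float, float, float]
Triangle = Tuple[int, int, int]


def cluster_decimate(
    vertices: Sequence[Vertex], triangles: Sequence[Triangle], cell: float
) -> Tuple[List[Vertex], List[Triangle]]:
    """Merge all vertices that fall in the same cubic cell of edge `cell`."""
    cell_to_new: Dict[Tuple[int, int, int], int] = {}
    sums: List[List[float]] = []  # per new vertex: [sum_x, sum_y, sum_z, count]
    remap: List[int] = []         # old vertex index -> new vertex index
    for x, y, z in vertices:
        key = (int(x // cell), int(y // cell), int(z // cell))
        idx = cell_to_new.setdefault(key, len(sums))
        if idx == len(sums):      # first vertex seen in this cell
            sums.append([0.0, 0.0, 0.0, 0])
        acc = sums[idx]
        acc[0] += x
        acc[1] += y
        acc[2] += z
        acc[3] += 1
        remap.append(idx)
    # Each merged vertex sits at the centroid of the vertices it replaces.
    new_vertices = [(s[0] / s[3], s[1] / s[3], s[2] / s[3]) for s in sums]
    new_triangles: List[Triangle] = []
    seen = set()
    for a, b, c in triangles:
        tri = (remap[a], remap[b], remap[c])
        if len(set(tri)) < 3:
            continue              # triangle collapsed to an edge or point
        key = frozenset(tri)
        if key in seen:
            continue              # duplicate created by merging
        seen.add(key)
        new_triangles.append(tri)
    return new_vertices, new_triangles
```

A larger `cell` merges more vertices and therefore removes more triangles, at the cost of anatomical detail; this is the same trade-off that governs the choice of target polygon count in any simplification tool.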

Mesh refinement: The low-poly model was then saved and processed in Blender [22], an open-source 3D modelling software. The model was refined in Blender to keep only the meshes necessary for the 3D object representation while ensuring that it remained anatomically accurate and visually faithful. The model was then exported in the FBX file format, which is convenient for use with a graphics engine.

In the final rendering stage, the FBX models are imported into Unity, where physically-based materials, real-time shaders, and texture mapping are applied to achieve photorealistic visualization of brain structures, enhancing spatial perception and clinical interpretation in AR/MR environments.

Phase 2: Redactable blockchain for data management

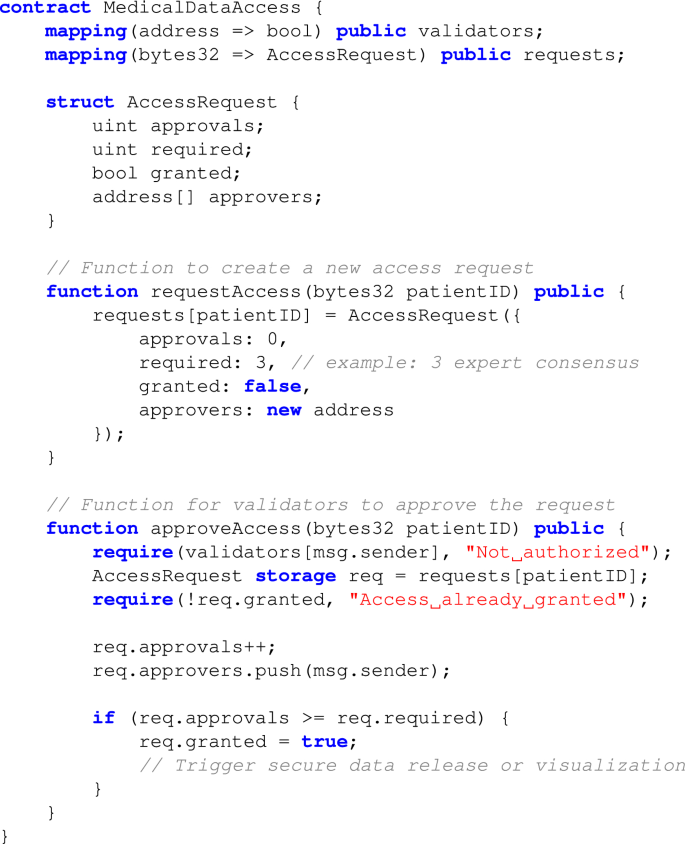

The proposed system utilizes a redactable blockchain architecture to meet the dual requirements of data integrity and compliance with data privacy regulations such as GDPR. Unlike traditional immutable blockchains, this system employs a stateful Chameleon Hash with Revocable Subkey (sCHRS) [23] mechanism, enabling controlled modification or redaction of data blocks. This feature is gated by consensus-based collaborative access through smart contracts, i.e., modification or redaction is enabled if and only if all the designated experts approve simultaneously. This allows selective data updates while preserving the blockchain’s cryptographic properties and overall structural integrity. The statefulness of sCHRS ensures that each modification remains authorized and traceable, preventing malicious activities and supporting regulatory compliance [23]. The hierarchical blockchain consists of a parent layer for user identity and a child layer for medical data access. Redactions are enabled only when all designated experts provide authorization, enforced through smart contracts and validated using Chameleon Hash functions.

Redaction Mechanism: Redactions are initiated by authorized users possessing revocable subkeys after receiving authorization from all the designated experts. The Chameleon Hash function verifies the legitimacy of these modification requests and cryptographically replaces the targeted data block while leaving the block’s hash value unchanged. This ensures that the blockchain remains consistent and tamper-proof, even after modifications.
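The property that makes redaction possible, namely that a trapdoor holder can change the message without changing the hash, can be demonstrated with a textbook discrete-log chameleon hash. The sketch below is not sCHRS: it has no state and no revocable subkeys, and the tiny parameters `P`, `Q`, `G` as well as all function names are assumptions chosen so that the arithmetic is easy to follow. It must not be used for real security.

```python
import hashlib
import secrets

# Toy group: P = 2*Q + 1 with P, Q prime; G = 4 generates the order-Q subgroup.
P, Q, G = 2039, 1019, 4


def keygen():
    """Return (trapdoor x, public key h = G^x mod P)."""
    x = secrets.randbelow(Q - 1) + 1  # x in [1, Q-1], so x is invertible mod Q
    return x, pow(G, x, P)


def digest(message: bytes) -> int:
    """Map a message to an exponent in Z_Q."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % Q


def chameleon_hash(h: int, message: bytes, r: int) -> int:
    """CH(m, r) = G^m * h^r mod P."""
    return (pow(G, digest(message), P) * pow(h, r, P)) % P


def find_collision(x: int, message: bytes, r: int, new_message: bytes) -> int:
    """Use the trapdoor to find r' with CH(new_message, r') == CH(message, r).

    Solves m + x*r = m' + x*r' (mod Q) for r'.
    """
    delta = (digest(message) - digest(new_message)) % Q
    return (r + delta * pow(x, -1, Q)) % Q
```

Without the trapdoor `x`, finding such an `r'` is as hard as computing discrete logarithms in the group, which is why ordinary participants still see the chain as tamper-resistant.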

Audit and Compliance: Each redaction operation is logged as a transaction that records the authorized entity, type of change, and cryptographic proof. This mechanism maintains transparency and trust in the system, allowing independent nodes to verify modifications while supporting regulatory requirements such as GDPR’s “right to be forgotten.”

Permission Management: Smart contracts automate access control by embedding permission rules that check user credentials and validate biometric authentication before allowing data modifications. This reduces the risk of unauthorized access and strengthens compliance with privacy laws.

Smart Contract Pseudocode for Access Control

To illustrate the collaborative authorization process in our redactable blockchain, the following pseudocode outlines the structure and logic of the smart contract used for validator-based access control.
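The unanimous-approval logic described in this phase can be approximated in Python as follows. This is a minimal sketch rather than the deployed contract: the class `AccessContract`, its method names, and the boolean `biometric_ok` flag (standing in for the outcome of biometric verification) are hypothetical, and on-chain concerns such as signatures and transaction fees are omitted.

```python
from dataclasses import dataclass, field
from typing import Dict, FrozenSet, List, Set


@dataclass
class AccessContract:
    validators: FrozenSet[str]  # the designated experts
    approvals: Dict[str, Set[str]] = field(default_factory=dict)
    audit_log: List[dict] = field(default_factory=list)

    def approve(self, request_id: str, validator: str, biometric_ok: bool) -> bool:
        """Record one expert's approval; return True once all have approved."""
        if validator not in self.validators:
            raise PermissionError(f"{validator} is not a designated expert")
        if biometric_ok:  # approvals count only after biometric verification
            self.approvals.setdefault(request_id, set()).add(validator)
        return self.is_authorized(request_id)

    def is_authorized(self, request_id: str) -> bool:
        """Unanimity rule: every designated expert must have approved."""
        return self.approvals.get(request_id, set()) >= self.validators

    def redact(self, request_id: str, block_id: str, requester: str) -> None:
        """Allow a redaction only for fully approved requests, and log it."""
        if not self.is_authorized(request_id):
            raise PermissionError("redaction requires approval from all experts")
        self.audit_log.append(
            {"request": request_id, "block": block_id, "by": requester, "type": "redaction"}
        )
```

For example, with experts `{"dr_a", "dr_b"}` (placeholder names), a call to `redact` raises `PermissionError` until both have called `approve` for the same request.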

Redaction Transparency and Monitoring: Every redaction is logged on the blockchain, providing an immutable audit trail. Smart contracts ensure that all authorized changes include cryptographic proofs, which can be reviewed by stakeholders to maintain transparency and traceability.

Phase 3: HBC based authentication for XR

The proposed XR system incorporates a hybrid multimodal biometric authentication framework to ensure secure and reliable user identification while optimizing for real-time efficiency and simplicity. This phase combines leveled HE for critical operations and lightweight FV mechanisms for storage, achieving robust privacy, security, and computational feasibility. Blockchain technology is used for decentralized storage, ensuring irreversibility, unlinkability, and regulatory compliance. Multimodal biometrics are utilized in HBC for XR authentication to overcome the limitations of a single modality, i.e., to provide robustness and security against malicious attacks. The HBC framework generates encrypted biometric tokens that are stored in IPFS, with their locations committed to the blockchain. During authentication, smart contracts verify user permissions and retrieve vaults securely, linking biometric verification with decentralized access control. Furthermore, the modalities used for AR and MR are chosen specifically for the requirements of each immersive environment while ensuring user convenience and security. These choices were guided by clinical practicality: mobile-supported face and fingerprint recognition are suitable for AR settings, while gaze and PIN provide the contactless, hygienic, and hands-free interaction required in sterile MR environments. Further details are explained in the following sections.

Authentication for AR

An Android application is developed to facilitate seamless interaction with the AR visualization system, as shown in Fig. 4. For secure access to this application, face and fingerprint biometrics are selected as the two modalities to achieve an efficient and user-friendly authentication process. Specifically, face recognition is chosen for its non-intrusive nature and ease of use in clinical and mobile settings, whereas fingerprint recognition is integrated as a supplementary biometric for its reliability and compatibility with existing mobile hardware. Building on prior work using these modalities [16], the hybrid system proposed here integrates FV mechanisms and leveled HE for secure multimodal biometric authentication. To present the hybrid system for AR in detail, the following notations are introduced:

Notations: Let \(B_1\) (Biometric 1) and \(B_2\) (Biometric 2) denote the fingerprint and facial image biometrics, respectively. The following functions are used in the methodology: \(n\_random(p, q)\) generates n random integers in the range [p, q]; \(f_{DL}(B)\) extracts features from B using a Deep Learning (DL) model; \(f_{GLCM}^{MRG}(B)\) extracts features from B using Gray Level Co-occurrence Matrix (GLCM) and Minutiae Ridge Gabor (MRG) methods; \(n\_encode(E)\) encodes the encrypted weights E to RS codes; \(grid(p, q)\) generates a \(p \times q\) grid; \(encrypt_{LHE}(X, K)\) performs leveled HE of sensitive data X using key K; \(decrypt_{LHE}(X, K)\) performs homomorphic decryption of sensitive data X using key K; \(generate\_key(C_1, C_2)\) generates a fuzzy key \(F_k\) using RS codes \(C_1\) and \(C_2\); and \(encrypt_{FV}(G_r, R, F_k)\) performs FV encryption of grid \(G_r\), random numbers R, and fuzzy key \(F_k\). Let \(r_i\) and \(c_i\) denote the \(i^{th}\) row and column of \(G_r\), respectively. FV and \(\varnothing\) represent the FV and the null vector, respectively. \(dist(a, b)\) denotes the Euclidean distance between a and b, and \(s_0>0\) is the decision threshold.

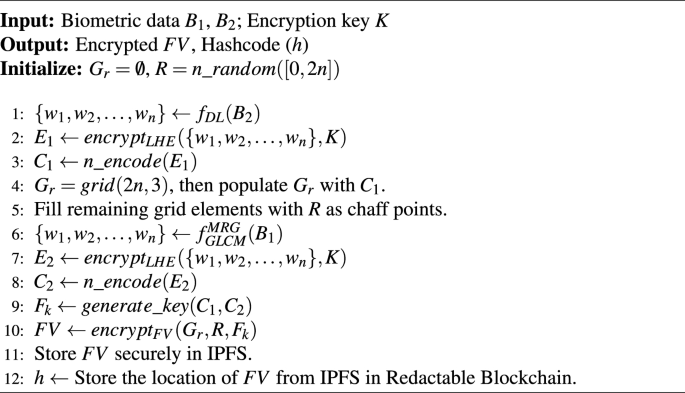

Proposed algorithm 1: user registration for AR

This algorithm integrates leveled HE and the FV mechanism: leveled HE is used for feature encryption, ensuring privacy during biometric authentication, while the FV provides robust storage and retrieval of biometric data. First, a set of n integers, \(R = n\_random(0, 2n)\), is randomly selected within the range [0, 2n]. DL techniques, such as CNNs, are employed for feature extraction from the facial image \(B_2\), defined as \(f_{DL}(B_2)\), resulting in feature weights \(\{w_1, w_2, \ldots , w_n\}\). These extracted features are then encrypted via leveled HE using encryption key K, generating \(E_1 = encrypt_{LHE}(\{w_1, w_2, \ldots , w_n\}, K)\). The encrypted weights are further encoded into RS codes to obtain \(C_1 = n\_encode(E_1)\). Next, a \(2n \times 3\) grid, \(G_r = grid(2n, 3)\), is generated and populated with \(C_1\), with the remaining elements filled using randomly generated chaff rows for additional security. Similarly, the GLCM and MRG feature extraction methods are applied to the fingerprint data \(B_1\), represented as \(f_{GLCM}^{MRG}(B_1)\), to derive another set of feature weights \(\{w_1, w_2, \ldots , w_n\}\). These are encrypted via leveled HE using the same encryption key K, producing \(E_2 = encrypt_{LHE}(\{w_1, w_2, \ldots , w_n\}, K)\). The encrypted weights are subsequently encoded into RS codes to obtain \(C_2 = n\_encode(E_2)\). Using \(C_1\) and \(C_2\), a fuzzy key \(F_k = generate\_key(C_1, C_2)\) is generated. The FV is then constructed by encrypting the grid \(G_r\) with the generated key and the randomly selected integers, yielding \(FV = encrypt_{FV}(G_r, R, F_k)\). This FV is stored in IPFS to ensure distributed and secure storage. Furthermore, the IPFS address of the FV is recorded in the redactable blockchain network to provide security through a decentralized architecture.
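The grid-with-chaff step can be sketched in isolation, leaving out the leveled HE, RS encoding, and FV encryption stages, which need dedicated libraries. The sketch makes several assumptions that the text does not state: each element of \(C_1\) is treated as a three-value row, R is interpreted as the n distinct row indices (drawn from 0 to 2n - 1) that hold the genuine rows, and chaff values are uniform integers up to a hypothetical `max_value`. The function name `build_grid` is likewise illustrative.

```python
import secrets
from typing import List, Sequence, Tuple


def build_grid(
    codes: Sequence[Sequence[int]], max_value: int = 255
) -> Tuple[List[List[int]], List[int]]:
    """Hide n genuine code rows among n chaff rows in a 2n-row grid.

    Returns (grid, rows), where `rows` plays the role of R: the secret
    positions of the genuine rows (an assumption of this sketch).
    """
    rng = secrets.SystemRandom()  # cryptographically strong randomness
    n = len(codes)
    width = len(codes[0])         # 3 columns in the text
    rows = sorted(rng.sample(range(2 * n), n))
    # Start from an all-chaff grid, then overwrite the secret positions.
    grid = [[rng.randint(0, max_value) for _ in range(width)] for _ in range(2 * n)]
    for row_index, code in zip(rows, codes):
        grid[row_index] = list(code)
    return grid, rows
```

An observer who sees only the grid cannot tell genuine rows from chaff without R, which is why R is protected together with the grid under the fuzzy key.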

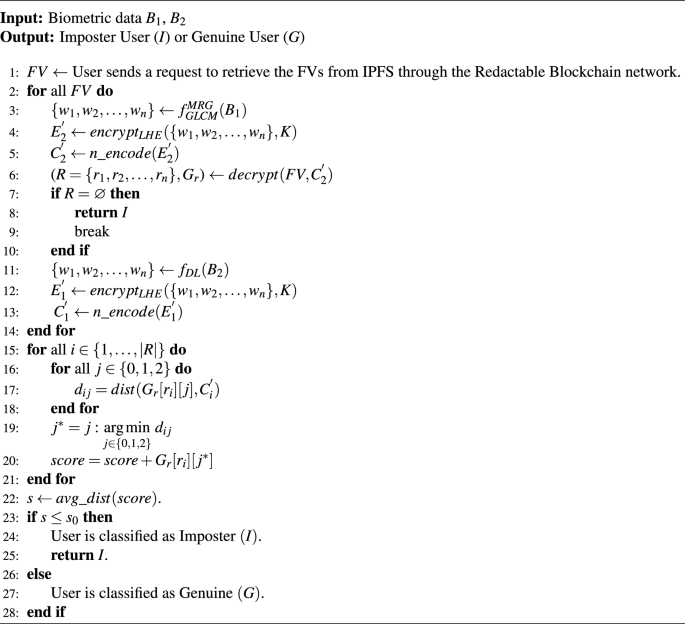

Proposed algorithm 2: user recognition for AR

The user recognition framework for accessing the AR application is presented in Algorithm 2. The system first retrieves the stored FVs from IPFS through the redactable blockchain network. For each retrieved FV, the input biometric \(B_1\) is processed using the function \(f_{GLCM}^{MRG}\) to generate the weights \(\{w_1, w_2, \ldots , w_n\}\). These weights are encrypted into \(E_2^{\prime} \leftarrow encrypt_{LHE}(\{w_1, w_2, \ldots , w_n\}, K)\) via leveled HE using encryption key K. Subsequently, \(E_2^{\prime}\) is encoded to obtain the RS codes (\(C_2^{\prime}\)), which are then used to decrypt the FV to produce R. If R is null after decryption, the user is immediately classified as an Imposter (I), and the algorithm terminates. Otherwise, the process continues to the second biometric \(B_2\), which undergoes feature extraction through the function \(f_{DL}\) to produce another feature vector \(\{w_1, w_2, \ldots , w_n\}\). These weights are then encrypted into \(E_1^{\prime} = encrypt_{LHE}(\{w_1, w_2, \ldots , w_n\}, K)\) via leveled HE using K, and \(E_1^{\prime}\) is subsequently encoded into RS codes (\(C_1^{\prime}\)). In the comparison phase, the algorithm iterates over each row \(r_i\) in \(G_r\) and computes the distances (\(d_{ij}\)) between the reconstructed grid entries \(G_r[r_i][j]\) and \(C_1^{\prime}\), for \(j \in \{0, 1, 2\}\). The index \(j^*\) of the minimum distance for each row \(r_i\) is determined, and the corresponding grid scores \(G_r[r_i][j^*]\) are aggregated to compute the total matching score. Once all rows are processed, the algorithm evaluates whether the aggregated score exceeds a predefined threshold \(s_0\). If the score does not exceed the threshold, the user is classified as an Imposter (I). Otherwise, the user is authenticated and classified as Genuine (G).
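The comparison phase can be sketched as a short scoring loop. The sketch assumes that the genuine rows have already been recovered using R, that grid entries and probe codes are scalars (so the Euclidean distance reduces to an absolute difference), and that the i-th probe code is compared against the i-th recovered row; none of these details is fixed by the text. The function name `classify` is hypothetical.

```python
from typing import Sequence


def classify(rows: Sequence[Sequence[float]], probe: Sequence[float], s0: float) -> str:
    """Return "G" (Genuine) if the aggregated score exceeds s0, else "I" (Imposter)."""
    score = 0.0
    for row, code in zip(rows, probe):
        # d_ij: distance between each of the three row entries and the probe code.
        distances = [abs(entry - code) for entry in row]
        # j*: column index with the minimum distance.
        j_star = min(range(len(row)), key=distances.__getitem__)
        # Aggregate the grid value at the best-matching column.
        score += row[j_star]
    return "G" if score > s0 else "I"
```

With rows `[[1, 5, 9], [2, 4, 8]]` and probe `[5, 8]`, the best-matching columns are 1 and 2, giving a score of 5 + 8 = 13, so the user is accepted for any threshold below 13.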

Authentication for MR

The proposed HBC-based MR authentication combines gaze-based behavioral biometrics and PIN authentication as its two modalities. Gaze-based biometrics provide a contactless and hygienic authentication solution ideally suited for sterile medical environments, such as operating rooms. By eliminating the need for physical contact, it aligns naturally with clinical workflows and reduces the risk of contamination. Additionally, the integration of gaze biometrics within the proposed MR-based framework leverages the built-in eye-tracking capabilities of the HoloLens 2, eliminating the need for additional hardware and simplifying deployment.

In particular, the gaze-based behavioral biometric leverages eye-tracking data such as fixation duration, saccades, and gaze trajectory, while PIN ensures an additional layer of security. In combination, these biometric modalities enable a non-intrusive, secure, and user-friendly authentication process. To present these authentication algorithms for MR in detail, we introduce the following notations in addition to those in the Sect. “Notations”:

Notations: Let \(I_g\) denote the gaze biometric captured from the eye-tracking system of HoloLens 2, and P denote the PIN input from the user. The function \(f_{hash}(P)\) yields a 128-bit output using SHA-256 for P, which is used as the feature set; and \(f_{DL}(I_g)\) extracts gaze features using a DL model.

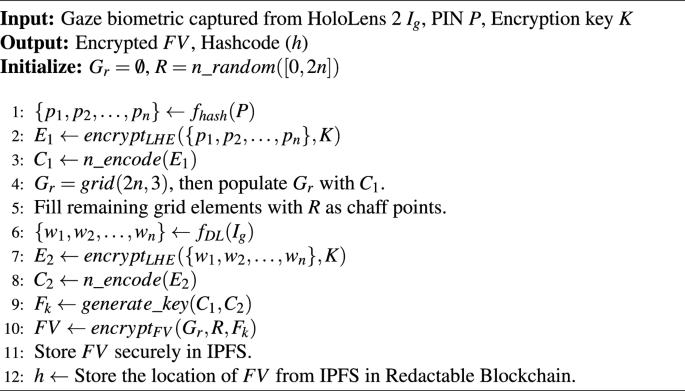

Proposed algorithm 3: user registration for MR

The MR-based system employs a user-set PIN P and the gaze biometric \(I_g\) captured from the eye-tracking system of HoloLens 2. First, a random set \(R = n\_random(0, 2n)\) containing n integers randomly selected in [0, 2n] is generated. The feature weights \(\{p_1, p_2, \ldots , p_n\}\) are extracted from the user-set PIN P using the function \(f_{hash}(P)\), which yields a 128-bit output via SHA-256. These feature weights are encrypted via leveled HE using encryption key K, resulting in \(E_1 \leftarrow encrypt_{LHE}(\{p_1, p_2, \ldots , p_n\}, K)\). The encrypted weights \(E_1\) are then encoded into RS codes \(C_1 \leftarrow n\_encode(E_1)\). Subsequently, gaze features \(\{w_1, w_2, \ldots , w_n\}=f_{DL}(I_g)\) are extracted using deep learning techniques and encrypted via leveled HE into \(E_2 = encrypt_{LHE}(\{w_1, w_2, \ldots , w_n\}, K)\). The encrypted gaze weights are encoded into RS codes \(C_2 \leftarrow n\_encode(E_2)\). Then, a \(2n \times 3\) grid \(G_r = grid(2n, 3)\) is generated and populated with the elements of \(C_1\), with the remaining elements filled with random integers to form chaff rows. A fuzzy key \(F_k\) is generated as \(F_k = generate\_key(C_1, C_2)\). The FV is then obtained as \(FV=encrypt_{FV}(G_r, R, F_k)\) and stored in IPFS for distributed, efficient, and secure storage. Finally, the location of the FV in IPFS is stored in the redactable blockchain network. This algorithm for MR therefore integrates leveled HE and the FV mechanism, as shown in Algorithm 3.
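The PIN feature extraction \(f_{hash}(P)\) can be sketched with the Python standard library. Because a SHA-256 digest is 256 bits long, obtaining a 128-bit output requires some reduction step; the sketch below assumes truncation to the first 16 bytes and treats each byte as one feature weight (n = 16). Both choices, and the function name `pin_features`, are assumptions not specified in the text.

```python
import hashlib
from typing import List


def pin_features(pin: str, n_bits: int = 128) -> List[int]:
    """Derive byte-valued feature weights from a PIN.

    Assumption: the 256-bit SHA-256 digest is truncated to its first
    n_bits, and each resulting byte (0-255) is one feature weight.
    """
    digest = hashlib.sha256(pin.encode("utf-8")).digest()  # 32 bytes
    return list(digest[: n_bits // 8])
```

The same PIN always yields the same 16 weights, which is what allows the registration and recognition phases to derive matching codes from it.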

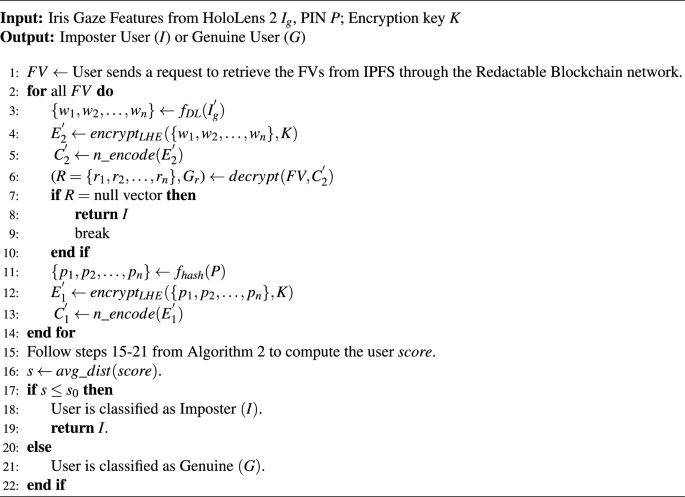

Proposed algorithm 4: user recognition for MR

Algorithm 4 presents the user recognition framework for accessing the MR visualization. First, the stored FVs are retrieved from IPFS through the redactable blockchain network. For each retrieved FV, the weights \(\{w_1, w_2, \ldots , w_n\}=f_{DL}(I_g^{\prime})\) are derived using DL techniques from the gaze-based behavioral biometric \(I_g^{\prime}\) of the user, captured via the eye-tracking system of HoloLens 2. These weights are encrypted into \(E_2^{\prime} \leftarrow encrypt_{LHE}(\{w_1, w_2, \ldots , w_n\}, K)\) via leveled HE using encryption key K. \(E_2^{\prime}\) is subsequently encoded to RS codes (\(C_2^{\prime}\)) and used to decrypt the FV to generate R. If R is null after decryption, the user is deemed an Imposter (I), and the algorithm terminates. Otherwise, the algorithm proceeds to the second modality, which is the PIN authentication for MR. The user enters their PIN (P) using a virtual gaze-controlled keypad. Another set of weights \(\{w_1, w_2, \ldots , w_n\}\) is then derived from P through the function \(f_{hash}(P)\), which yields a 128-bit output using SHA-256. These weights are then encrypted into \(E_1^{\prime} = encrypt_{LHE}(\{w_1, w_2, \ldots , w_n\}, K)\) via leveled HE using K, and \(E_1^{\prime}\) is subsequently encoded into RS codes (\(C_1^{\prime}\)). In the comparison phase, the algorithm iterates over each row \(r_i\) in \(G_r\) and computes the distances (\(d_{ij}\)) between the reconstructed grid entries \(G_r[r_i][j]\) and \(C_1^{\prime}\), for \(j \in \{0, 1, 2\}\). The index \(j^*\) of the minimum distance for each row \(r_i\) is determined, and the corresponding grid scores \(G_r[r_i][j^*]\) are aggregated to compute the total matching score. Once all rows are processed, the algorithm evaluates whether the aggregated score exceeds a predefined threshold \(s_0\). If the score is above the threshold, the user is authenticated and classified as Genuine (G). Otherwise, the user is deemed an Imposter (I).

In summary, the proposed multimodal authentication for MR combines gaze tracking with knowledge-based PIN authentication while leveraging leveled HE and FV mechanisms for privacy preservation. The virtual PIN keypad also offers resistance to shoulder surfing, because its layout is randomized to prevent observation attacks. Additionally, Algorithms 3 and 4 use the built-in HoloLens 2 sensors, maintaining high usability and security without requiring external hardware. Algorithms 3 and 4 therefore provide secure access to MR visualization of medical data for users in different geo-locations, as shown in Fig. 7.
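A randomized keypad layout of the kind mentioned above can be generated in a few lines. This is a minimal sketch: the three-column layout and the function name `shuffled_keypad` are assumptions, and rendering the keypad and mapping gaze to keys on the headset are outside its scope.

```python
import secrets
from typing import List


def shuffled_keypad(columns: int = 3) -> List[List[str]]:
    """Return the digits 0-9 in a fresh random order, split into rows."""
    digits = [str(d) for d in range(10)]
    # SystemRandom draws from the OS entropy source, so layouts are
    # not predictable from earlier ones.
    secrets.SystemRandom().shuffle(digits)
    return [digits[i : i + columns] for i in range(0, len(digits), columns)]
```

Generating a new layout for every login attempt means that an observer who watches only the direction of the user’s gaze learns key positions, not digits.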