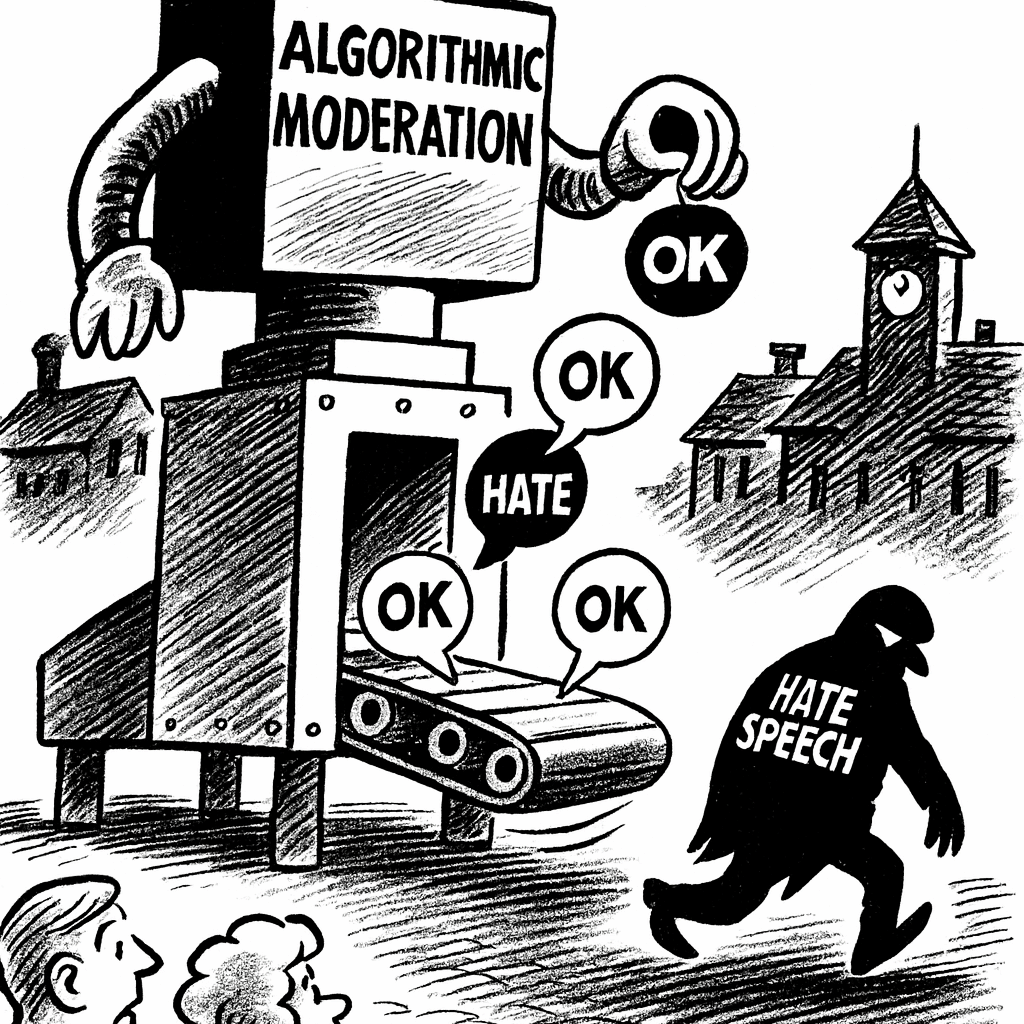

Anonymity and amplification have transformed hateful whispers into viral shouts. Social media platforms claim that their algorithms can filter out harmful speech, yet research reveals that their judgments are uneven and often unjust. Responsibility, once enforced by presence (words spoken face-to-face, with neighbors and critics watching), may now have to be reclaimed by conscience.

Given the rising political temperature in the country, an increase in online hate speech is unsurprising. Unlike the analog town square, where speakers (and the village idiot) were readily identified, today’s digital town square, our social media platforms, is cloaked in anonymity. Anonymity online severs the right to free speech from the responsibility that comes with it, such as not falsely shouting “fire” in a crowded theater. It lets people say things they would never say face-to-face. Hate speech has always been present; in our analog past, it was whispered and accompanied by a furtive glance from side to side. Today, social media platforms amplify and monetize it.

Hateful speech coarsens our discourse, erecting barriers that are often insurmountable. We have heard it from mainstream politicians, from Hillary Clinton’s “deplorables” to President Trump’s “the radical-left thugs that live like vermin.” And while we may well argue over whether this or that phrase is “hateful” or “truthful,” there can be little doubt that hateful discourse, especially at the volume social media creates, bleeds over into our daily lives and our physical and mental health.

While a growing chorus has decried social media’s hate speech, who is to judge?

“In the digital square, platforms wield the power of publishers — and with that power comes responsibility for the speech they amplify.” — Justice Elena Kagan, Moody v. NetChoice

To date, the answer by the social media platforms has been “moderation.”

Moderation “at scale”

With over 21 million tweets and a million TikTok videos uploaded every hour, comprehensive human moderation of social media is impossible. To curb online hate speech, platforms have promised, and built, tools that automate “content filtering at scale.” A new study examines the inconsistencies among these automated systems, which remain “black boxes”: it is unclear who and what gets flagged.
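A back-of-the-envelope calculation makes that scale concrete. The hourly volumes are the ones cited above; the 30 seconds of review per item is a hypothetical assumption, not a figure from the study, and it treats a video the same as a tweet.

```python
# Hourly upload volumes cited in the text.
TWEETS_PER_HOUR = 21_000_000
TIKTOK_VIDEOS_PER_HOUR = 1_000_000

# Hypothetical assumption: seconds one human reviewer needs per item.
SECONDS_PER_REVIEW = 30


def items_per_second(per_hour: int) -> float:
    """Convert an hourly volume to a per-second rate."""
    return per_hour / 3600


def reviewers_needed(per_hour: int, seconds_per_item: float) -> float:
    """Reviewers working simultaneously to keep pace with the inflow."""
    # Review-seconds demanded per hour, divided by the 3600 seconds
    # a single reviewer can supply in that hour.
    return per_hour * seconds_per_item / 3600


total = TWEETS_PER_HOUR + TIKTOK_VIDEOS_PER_HOUR
print(f"{items_per_second(total):,.0f} items per second")
print(f"{reviewers_needed(total, SECONDS_PER_REVIEW):,.0f} reviewers on duty at all times")
```

Under that assumption, the inflow is roughly 6,100 items per second, which would require about 183,000 reviewers working at every moment of the day, before accounting for shifts, breaks, or the extra time a video takes to watch.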

“Private technology companies have become the de facto arbiters of what speech is permissible in the digital public square, yet they do so without any consistent standard.”

– Yphtach Lelkes, Ph.D., Associate Professor of Communication, Annenberg School for Communication.

Over the past decade, automated moderation of hateful and harmful speech has become less brittle. Fixed keyword and pattern systems have given way to more flexible, content-aware moderation and now, with AI, to purpose-built moderation tools. Yet for all their flexibility, these systems remain plagued by bias and by weak performance across languages. The research examined seven moderation systems and found “substantial variation in their classification of identical content—what one flags as harmful, another might deem acceptable.”
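The brittleness of fixed keyword systems is easy to demonstrate. The minimal filter below is a sketch, not any platform's actual code; its blocklist and example sentences are hypothetical.

```python
import re

# Hypothetical blocklist standing in for a fixed keyword system.
BLOCKLIST = {"vermin", "subhuman"}


def keyword_flag(text: str) -> bool:
    """Flag text if any lowercase alphabetic word matches the blocklist."""
    words = re.findall(r"[a-z]+", text.lower())
    return any(word in BLOCKLIST for word in words)


examples = [
    "They are vermin.",                         # hostile, caught
    "They are v3rmin.",                         # altered spelling slips through
    "The city hired a firm to remove vermin.",  # benign use, flagged anyway
]
for sentence in examples:
    print(keyword_flag(sentence), sentence)
```

It misses a trivially altered spelling yet flags a pest-control notice, two classic failure modes that content-aware models are meant to reduce.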

Stress Testing the Moderators

The researchers built each sentence from up to four parts:

A quantifier: “some” or “all,” framing the breadth of the claim as partial or universal.

The who: one of 125 group labels, covering traditionally “protected” groups, e.g., age, gender, disability, ideology, as well as groups outside these protected frameworks, e.g., anti-vaxxers, named with either a neutral term or a slur.

The how: one of 55 hostile or dehumanizing phrases, e.g., “are vermin” or “shouldn’t be treated as humans,” forming the “core hateful statement.”

An optional “call to action,” ranging from no incitement, to a strong call, e.g., “they must be driven out immediately,” to a specific one, e.g., “report their addresses and attack.”

Combining these parts yielded nearly one million synthetic sentences for testing the moderation tools.

The tools ranged from general-purpose large language models, such as GPT, which were simply asked whether a sentence was hateful, to dedicated systems that act as filters, either flagging categories of hate and harassment as yes or no with an associated confidence level, or simply returning a continuous “toxicity score.”
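Because the systems answer in different shapes, comparing them requires reducing each answer to a single verdict. The sketch below shows one way to do that; the class names, the 0.5 cutoff, and the rule that a flagged category must also clear the cutoff are all hypothetical choices, not the study's method.

```python
from dataclasses import dataclass
from typing import Dict, Union


@dataclass
class LLMAnswer:
    """A general-purpose LLM simply asked whether a sentence is hateful."""
    text: str  # e.g. "Yes" or "No"


@dataclass
class CategoryFlags:
    """A dedicated filter returning per-category flags and confidences."""
    flags: Dict[str, bool]
    confidence: Dict[str, float]


@dataclass
class ToxicityScore:
    """A scorer returning one continuous value, assumed to lie in [0, 1]."""
    score: float


Output = Union[LLMAnswer, CategoryFlags, ToxicityScore]


def is_hateful(output: Output, cutoff: float = 0.5) -> bool:
    """Reduce any output style to a yes/no verdict (cutoff is hypothetical)."""
    if isinstance(output, LLMAnswer):
        return output.text.strip().lower().startswith("yes")
    if isinstance(output, CategoryFlags):
        # A category counts only if it is flagged AND its confidence clears the cutoff.
        return any(
            flagged and output.confidence.get(cat, 0.0) >= cutoff
            for cat, flagged in output.flags.items()
        )
    if isinstance(output, ToxicityScore):
        return output.score >= cutoff
    raise TypeError(f"unsupported output type: {type(output).__name__}")


print(is_hateful(LLMAnswer("Yes, this is hateful.")))
print(is_hateful(CategoryFlags({"hate": True, "harassment": False},
                               {"hate": 0.42, "harassment": 0.1})))
print(is_hateful(ToxicityScore(0.73)))
```

The middle case comes back "not hateful" even though the filter raised a flag, purely because of the cutoff chosen during normalization; the reduction rule is itself a possible source of disagreement.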

Seven Moderators, Seven Verdicts: Hate Speech Depends on the Algorithm You Ask

“The research shows that content moderation systems have dramatic inconsistencies when evaluating identical hate speech content, with some systems flagging content as harmful while others deem it acceptable.”
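One simple way to quantify disagreement is the pairwise agreement rate: for each pair of systems, the share of identical sentences on which both reach the same verdict. The system names and verdicts below are invented for illustration; the study's own metrics may differ.

```python
from itertools import combinations

# Hypothetical verdicts (True = flagged as hateful) from three systems
# on the same six sentences.
verdicts = {
    "system_a": [True, True, False, True, False, True],
    "system_b": [True, False, False, True, False, False],
    "system_c": [True, True, True, True, True, True],
}


def agreement(x: list, y: list) -> float:
    """Fraction of items on which two systems give the same verdict."""
    return sum(a == b for a, b in zip(x, y)) / len(x)


rates = {
    (p, q): agreement(verdicts[p], verdicts[q])
    for p, q in combinations(sorted(verdicts), 2)
}
for pair, rate in rates.items():
    print(pair, f"{rate:.2f}")
```

Raw agreement does not correct for chance; Cohen's kappa is the usual refinement when systems flag content at very different base rates.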

Content moderation systems varied widely in their assessments, underscoring the trade-off between sensitive detection (fewer false negatives, or missed hate) and over-moderation (more false positives).
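The trade-off shows up when a single cutoff is swept over one system's scores. The scores and ground-truth labels below are made up; the point is only that lowering the cutoff trades missed hate (false negatives) for wrongly flagged benign posts (false positives).

```python
# Hypothetical (score, is_actually_hateful) pairs from one scoring system.
scored = [
    (0.95, True), (0.80, True), (0.55, True), (0.30, True),
    (0.70, False), (0.40, False), (0.20, False), (0.05, False),
]


def error_counts(cutoff: float) -> tuple:
    """Return (false_positives, false_negatives) when flagging score >= cutoff."""
    fp = sum(1 for s, hateful in scored if s >= cutoff and not hateful)
    fn = sum(1 for s, hateful in scored if s < cutoff and hateful)
    return fp, fn


for cutoff in (0.1, 0.5, 0.9):
    fp, fn = error_counts(cutoff)
    print(f"cutoff={cutoff}: {fp} false positives, {fn} false negatives")
```

With these toy numbers, a cutoff of 0.1 yields 3 false positives and no misses, 0.5 yields one of each, and 0.9 yields no false positives but 3 misses.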

Moderation tools do not protect all communities equally. A slur directed at Black people or Christians is blocked outright by some models but judged only marginally hateful by others. The gaps widen for less-formal groups defined by education, class, or shared interests, where some systems flag abuse aggressively while others barely react. The choice of moderation system can determine whether identical content is removed, restricted, or left untouched.

Decision boundaries are the numerical cut-offs a model uses to flip a piece of text from “acceptable” to “hate speech.” They differ markedly across systems. Some sit at virtually zero for specific groups, so nearly anything mentioning those groups is flagged, while others require a stronger signal before labeling content hateful. Although these boundaries are numeric and appear objectively grounded in probabilities, their outcomes reveal them to be more subjective, reflecting biases baked into the system earlier.
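A decision boundary is easy to state in code, which is part of why it looks objective. In this hypothetical sketch, two systems are assumed, for simplicity, to assign the same score to the same sentence, but one applies a near-zero cutoff for a particular group. Group names, scores, and cutoffs are invented.

```python
# Hypothetical per-group cutoffs: content mentioning a group is flagged
# when its hate score meets or exceeds that group's cutoff.
SYSTEM_X = {"group_1": 0.02, "group_2": 0.60}  # near-zero boundary for group_1
SYSTEM_Y = {"group_1": 0.60, "group_2": 0.60}  # one boundary for every group


def verdict(cutoffs: dict, group: str, score: float) -> str:
    """Map a score to a label using the boundary set for the mentioned group."""
    return "hate speech" if score >= cutoffs[group] else "acceptable"


# The same mildly negative sentence, scored 0.15, aimed at each group.
for group in ("group_1", "group_2"):
    print(group, verdict(SYSTEM_X, group, 0.15), "|", verdict(SYSTEM_Y, group, 0.15))
```

Each rule is a plain numeric comparison, yet where the boundary sits for each group is a design choice, which is how earlier bias can surface as an apparently objective number.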

Who and How – Overmoderation

False-positive errors stem from the signals each model weighs most heavily. Some systems lean on who is mentioned, so sentences that reference certain identities, even in praise, are scored as hateful. By contrast, other models key in on how the text is written, assessing tone, hostility, and slurs, so the same benign sentences sail through. Weighting identity cues inflates false positives, while emphasizing linguistic sentiment keeps them low.
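The difference between weighting who is mentioned and how something is said can be caricatured with two toy scorers. The word lists, weights, and 0.5 cutoff are hypothetical; real systems learn such signals rather than reading them from lists.

```python
import re

# Hypothetical cue lists for illustration only.
IDENTITY_TERMS = {"immigrants", "christians", "teachers"}
HOSTILE_TERMS = {"vermin", "disgusting", "attack"}


def has_cue(text: str, cues: set) -> float:
    """Return 1.0 if any lowercase word in text appears in cues, else 0.0."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    return 1.0 if words & cues else 0.0


def identity_weighted(text: str) -> float:
    """Score driven mostly by WHO is mentioned."""
    return 0.8 * has_cue(text, IDENTITY_TERMS) + 0.2 * has_cue(text, HOSTILE_TERMS)


def tone_weighted(text: str) -> float:
    """Score driven mostly by HOW the text is written."""
    return 0.2 * has_cue(text, IDENTITY_TERMS) + 0.8 * has_cue(text, HOSTILE_TERMS)


benign = "Immigrants make our town stronger."
hostile = "Immigrants are vermin."
for scorer in (identity_weighted, tone_weighted):
    # Flag anything scoring 0.5 or higher (hypothetical cutoff).
    print(scorer.__name__, scorer(benign) >= 0.5, scorer(hostile) >= 0.5)
```

Both scorers flag the openly hostile sentence, but only the identity-weighted one flags the benign sentence, the false-positive pattern described above.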

However, the models struggled with positive-sounding sentences that contained slurs, e.g., “All [slur] are great people,” raising the question of whether any use of a slur constitutes hate. The more sensitive models treated any slur in any context as hate speech, while others gave more weight to the upbeat sentiment than to the derogatory term. The models diverged most for politically loaded labels, e.g., “alt-right” or “Nazis.”
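The split over upbeat sentences containing slurs is, at bottom, a policy choice about which signal wins. The sketch contrasts a strict policy (any slur is hate) with a sentiment-first policy; the “[slur]” token, the positive-word list, and both rules are hypothetical stand-ins.

```python
import re

SLUR_TOKEN = "[slur]"  # placeholder; no real slurs are used here
POSITIVE_WORDS = {"great", "kind", "wonderful"}  # hypothetical sentiment cues


def strict_policy(text: str) -> bool:
    """Flag any sentence containing a slur, whatever the context."""
    return SLUR_TOKEN in text.lower()


def sentiment_first_policy(text: str) -> bool:
    """Flag a slur only when the sentence carries no positive cue."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    return SLUR_TOKEN in text.lower() and not (words & POSITIVE_WORDS)


sentence = "All [slur] are great people."
print(strict_policy(sentence), sentiment_first_policy(sentence))
```

Neither rule is obviously right: the strict rule treats quoted or reclaimed usage as hate, while the sentiment-first rule can be gamed by wrapping abuse in upbeat words.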

The study comes with caveats. It is limited to English, and its hateful speech was “synthetic,” so it may not capture the nuance and context-dependence of real-world speech.

Chilling the Dialogue

When moderation leans toward over-caution, it doesn’t merely muffle hate; it can also erase legitimate dissent. At ACSH, we’ve seen well-sourced articles on vaccine safety and pesticide toxicology quietly pushed out of users’ feeds because an algorithm equated terms like “virus” or “toxic” with misinformation or alarmism. Algorithms that overweight identity cues or trigger words may likewise suppress posts that critique our positions or offer uncomfortable, yet valid, counter-evidence. By pruning these opposing views, the system disrupts the give-and-take on which logical debate depends, leaving the conversation lopsided and readers unaware of perspectives that could sharpen—or correct—their thinking. In effect, discourse itself becomes hostage to a model’s opaque biases, rather than being guided by open and good-faith exchange.

Overall, the models show striking inconsistencies in how they moderate hate speech. These inconsistencies are especially pronounced for certain demographic groups, where decision boundaries that appear objective seem to embed bias, affecting “false positive rates and implicit hate speech detection.”

Code Cannot Substitute for Human Conscience

The First Amendment secures our right to speak, but it has never promised freedom from responsibility. In the analog town square, that responsibility was enforced by presence; the speaker faced neighbors, critics, and even the village idiot. The digital square multiplies voices at unimaginable scale while, unfortunately, dissolving accountability in anonymity.

Social media platforms claim to manage this torrent through algorithmic moderation. Yet the evidence from the study is plain: moderation systems remain opaque, inconsistent, and riddled with bias. What one system flags as hate, another ignores; what protects one community leaves another exposed.

To imagine that code can replace conscience is to miss the deeper point. Hate speech thrives not because machines fail to stop it, but because people choose to indulge it. If democracy depends on discourse, then no algorithm can save us from ourselves. The responsibility that once lived in the town square must be reclaimed by each of us, lest the digital square and our democracy collapse under the weight of their own noise.

Source: “Model-Dependent Moderation: Inconsistencies in Hate Speech Detection Across LLM-based Systems,” Findings of the Association for Computational Linguistics: ACL 2025. DOI: 10.18653/v1/2025.findings-acl.1144