Corporate compliance is undergoing a seismic shift due to the transformative effect of digitalization and, in particular, AI. Once a futuristic concept, AI is rapidly becoming a mainstream, even business-critical, technology for legal and compliance functions globally. As organizations grapple with an increasingly complex regulatory environment, exponential data growth and relentless pressure to operate more efficiently and effectively, AI presents both unprecedented opportunities and novel challenges.

Our findings reveal a period of transition—one where early adopters are realizing tangible benefits, while also running up against growing pains such as implementation challenges, gaps in policy development and the inherent risks of deploying this transformative technology.

AI adoption trends

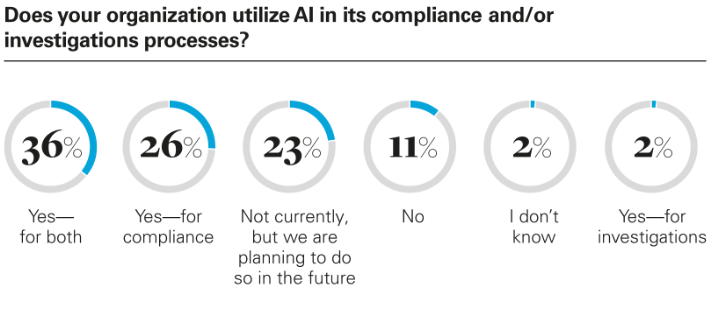

At the most fundamental level, AI is no longer a niche tool, but a technology gaining serious traction, albeit with adoption levels varying considerably across different organization types and sizes. Overall, 36 percent of respondents report using AI in both their compliance and investigations processes, with a further 26 percent using it for compliance tasks only.

This adoption is notably higher among certain segments. Respondents that are publicly listed companies are almost twice as likely (44 percent) to use AI for both compliance and investigations compared with their private sector counterparts (23 percent). This disparity likely reflects the larger data volumes and potentially higher investment capacity often associated with public entities, as well as the correspondingly greater expectations that regulators may have regarding the use and deployment of data analytics in their compliance programs. Similarly, corporates show significantly higher adoption of AI (43 percent) compared with private equity firms (10 percent), suggesting differences in operational scale, risk appetite and/or the immediate perceived need for AI-driven compliance between these different types of businesses.

Organizational size and revenue generation show a strong positive correlation with AI adoption. Nearly six out of every ten (59 percent) of the highest revenue-generating respondents already leverage AI for both compliance and investigations, a stark contrast to the 14 percent adoption rate among the lowest revenue-generating respondents. This finding highlights a resource gap, where larger organizations possess the financial means, technical expertise and the necessary data infrastructure to invest in and deploy AI more readily.

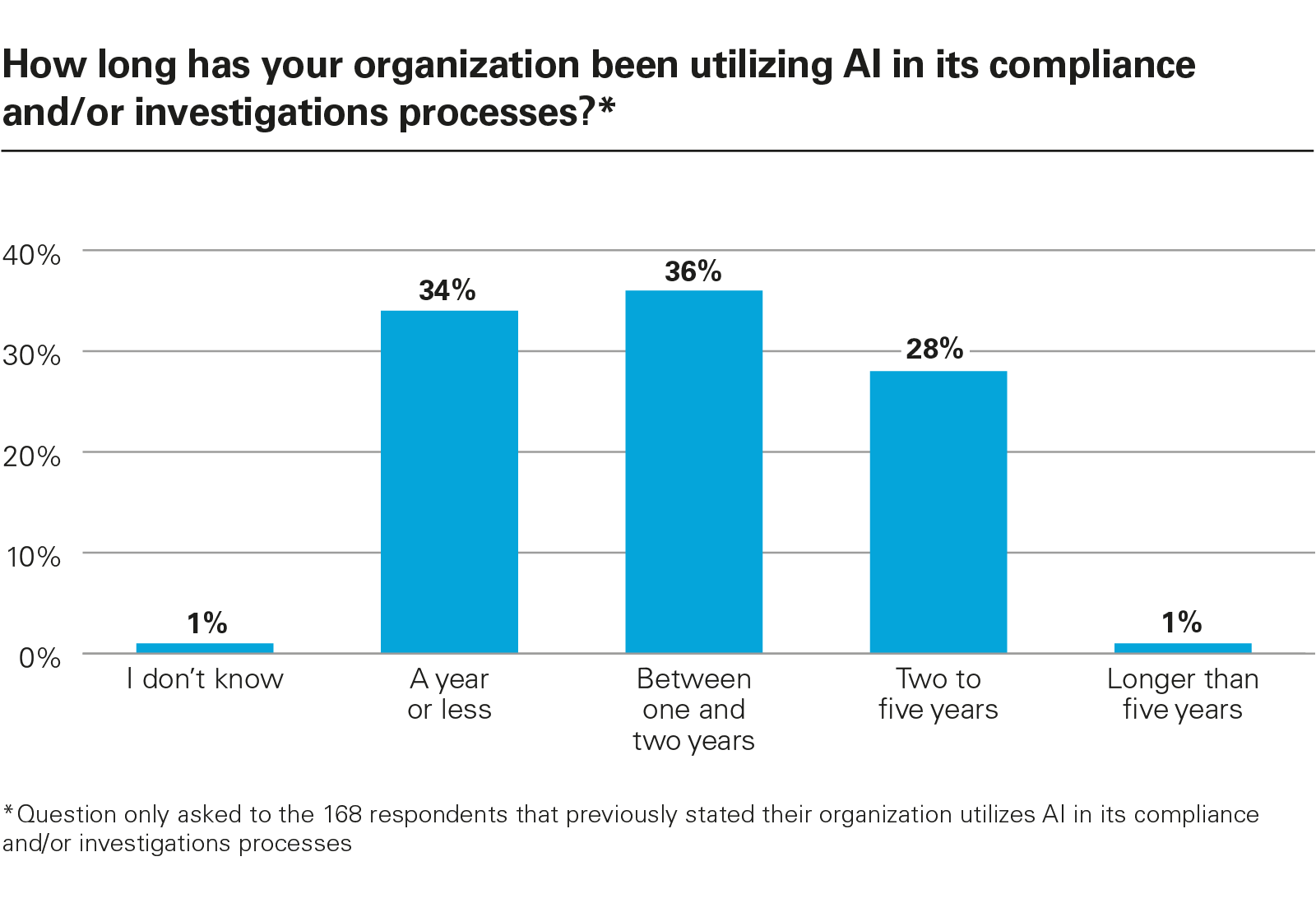

The tenure of AI usage reveals that while adoption is growing, it is still a relatively recent phenomenon for many organizations. Among respondents currently using AI, the largest cohort (36 percent) has been using it for one to two years, closely followed by those using it for a year or less (34 percent). A wave of adoption has occurred within the past two years, driven in part by pandemic-era digitalization trends, but even more so by the rapid mainstreaming of generative AI models and other scalable tools that have made the technology newly accessible and applicable to legal and compliance teams.

Again, respondents that are larger organizations demonstrate longer-term engagement with AI. Almost half (46 percent) of the highest-revenue respondents have been using AI for two to five years, compared with just 11 percent of the lowest-revenue respondents. Organizations that have used AI longer also tend to perceive the value of the technology as higher and to have developed more sophisticated use cases.

Motivations driving AI adoption

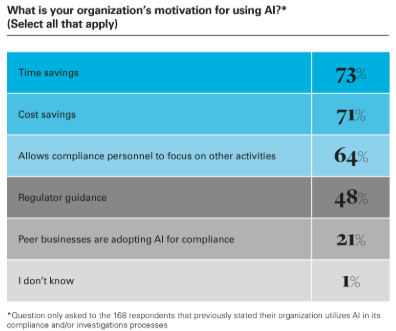

The rationale behind implementing AI for compliance and investigations is overwhelmingly pragmatic, focusing on efficiency and resource optimization. For respondents using these tools, the primary drivers are time savings (cited by 73 percent) and cost savings (71 percent). This finding underscores the increasing pressure on compliance functions to “do more with less”—managing escalating risks and data volumes without commensurate increases in headcount or budget. As one member of the ethics and compliance function of a US company said: “We use AI for compliance and investigations to lower the amount of manual work. Manual work has become time consuming due to the changing regulations and the complexity of the process. So, the use of AI became inevitable at a certain point.”

AI is viewed as a critical tool to automate repetitive tasks, accelerate analysis and free up compliance professionals for higher-value strategic work.

View full image: What is your organization’s motivation for using AI?* (Select all that apply) (PDF)

How AI is being applied

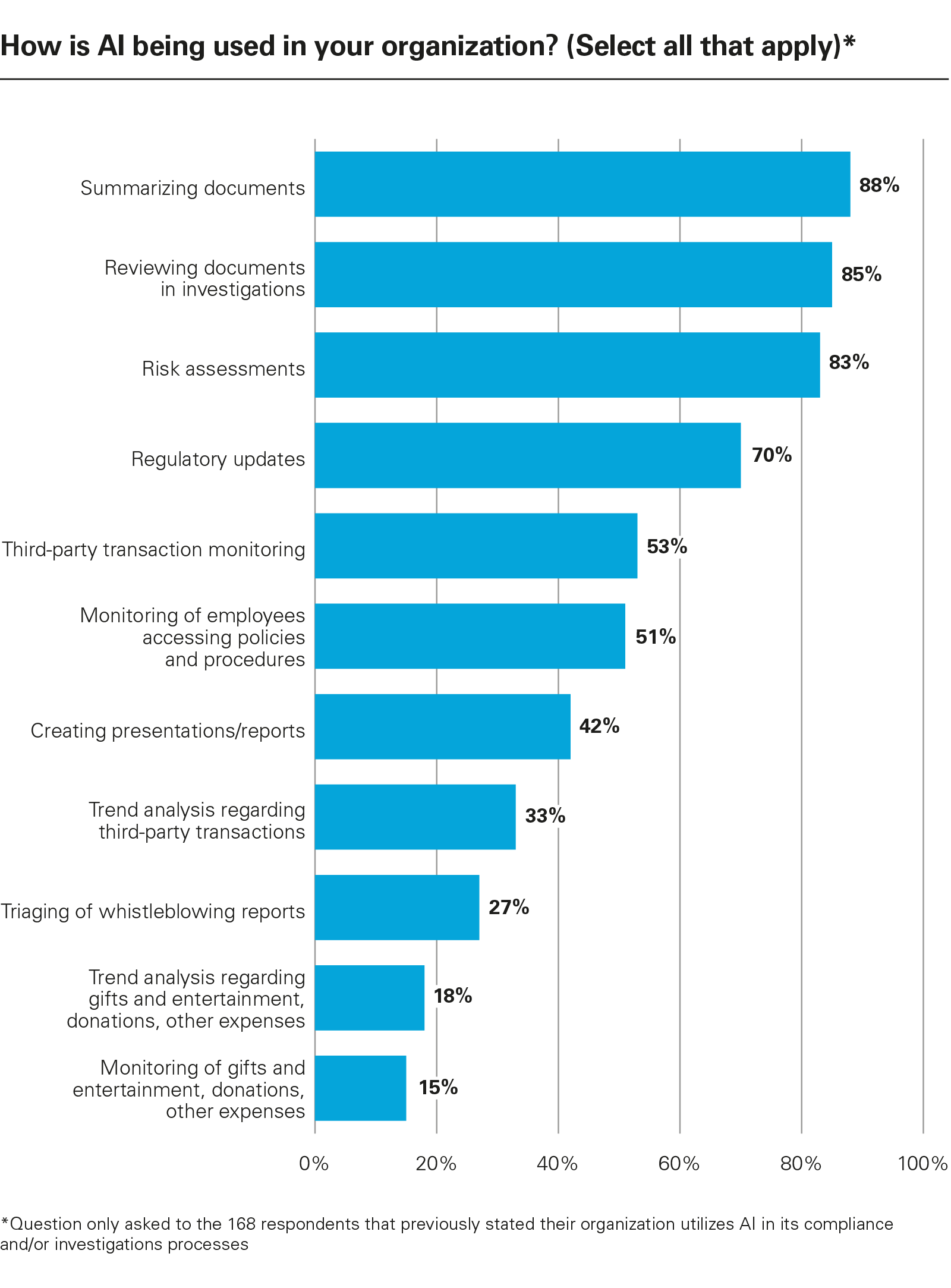

When examining the specific uses of AI among users in compliance and investigations, a clear focus emerges on the use of AI for tasks involving large-scale text analysis. The top use cases identified are summarizing documents (88 percent) and reviewing documents during investigations (85 percent). This aligns with the strengths of current Natural Language Processing (NLP) and Large Language Model (LLM) technologies, which excel at processing and extracting information from vast amounts of unstructured text data—a commonly shared challenge in compliance monitoring, due diligence and internal investigations.

In particular, the advent of generative AI marks a notable inflection point. Unlike earlier rule-based systems or machine learning algorithms designed for discrete tasks, generative models can summarize, compare, rephrase or even prepare first drafts of compliance documentation in a fraction of the time. This versatility, while powerful, also brings a new class of risks, including potentially opaque decision-making, unexpected outputs and uncertainty around the reliability of AI-generated content. Organizations are still grappling with where to draw the line between helpful automation on the one hand and risky overreliance and potential liability exposure on the other.

While current uses of AI deliver on efficiency and cost savings, they represent a relatively narrow band of the technology’s capabilities. More sophisticated applications, such as advanced anomaly detection in transactional data or intelligent training personalization, are less prevalent, based on the top responses, suggesting many organizations are still in the early stages of leveraging AI’s full potential.

Some organizations are already seeing benefits beyond basic review, however, as noted by a member of the legal function of a Mexico-based company: “We noticed how contextual information is captured and processed by utilizing AI, so we are using it for both compliance and investigations processes. There is a better understanding of everyday and uncommon risks in our activities.”

View full image: How is AI being used in your organization? (Select all that apply)* (PDF)


View full image: Do you personally use AI tools within your role?* (PDF)


User experience: High engagement and perceived value

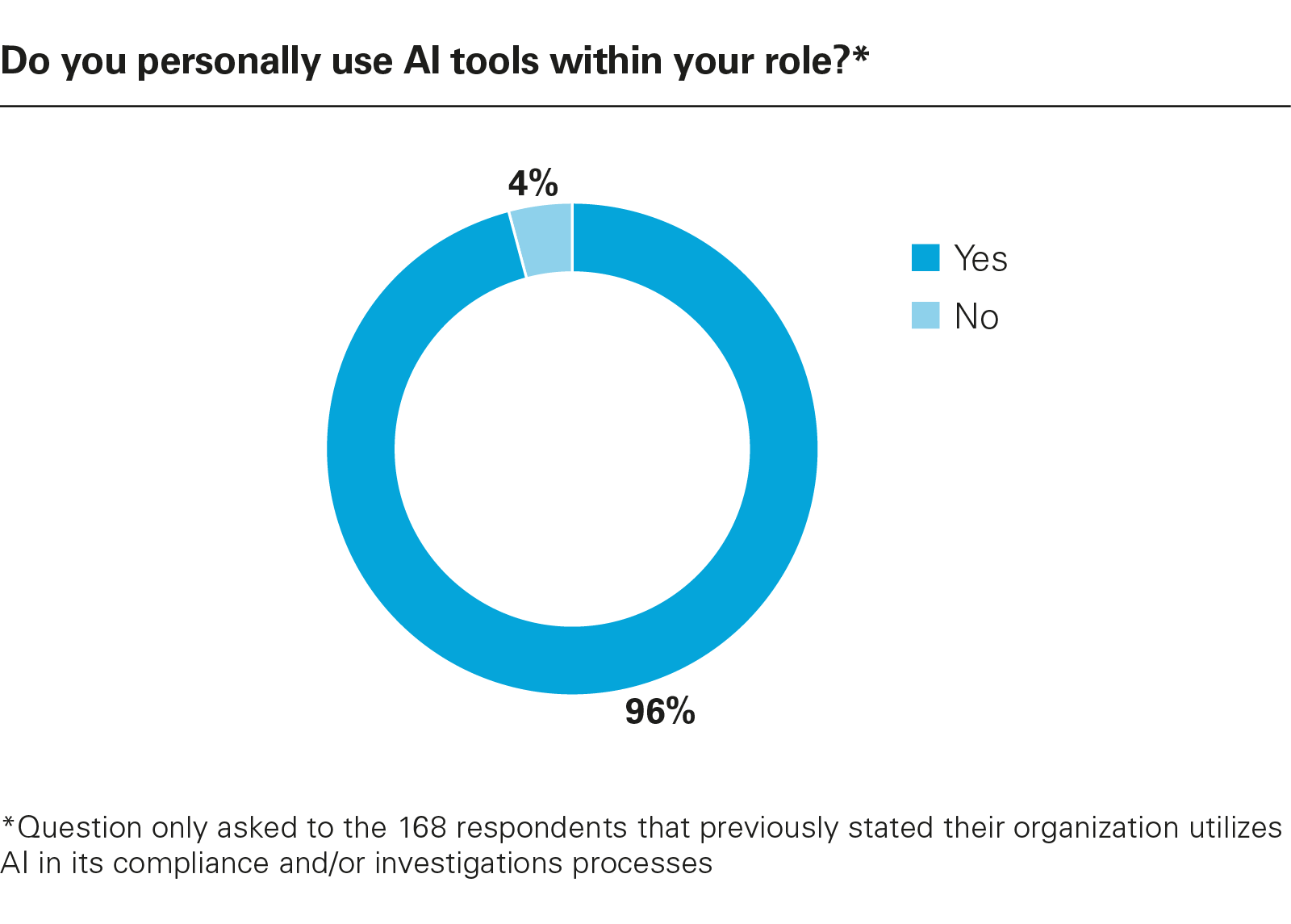

Encouragingly, where AI is implemented, user engagement and satisfaction appear to be high. Among respondents in organizations using AI, almost all (96 percent) report personally using AI tools within their role. This level of use indicates that AI is not just running in the background, but is being integrated into the daily workflows of legal and compliance professionals.

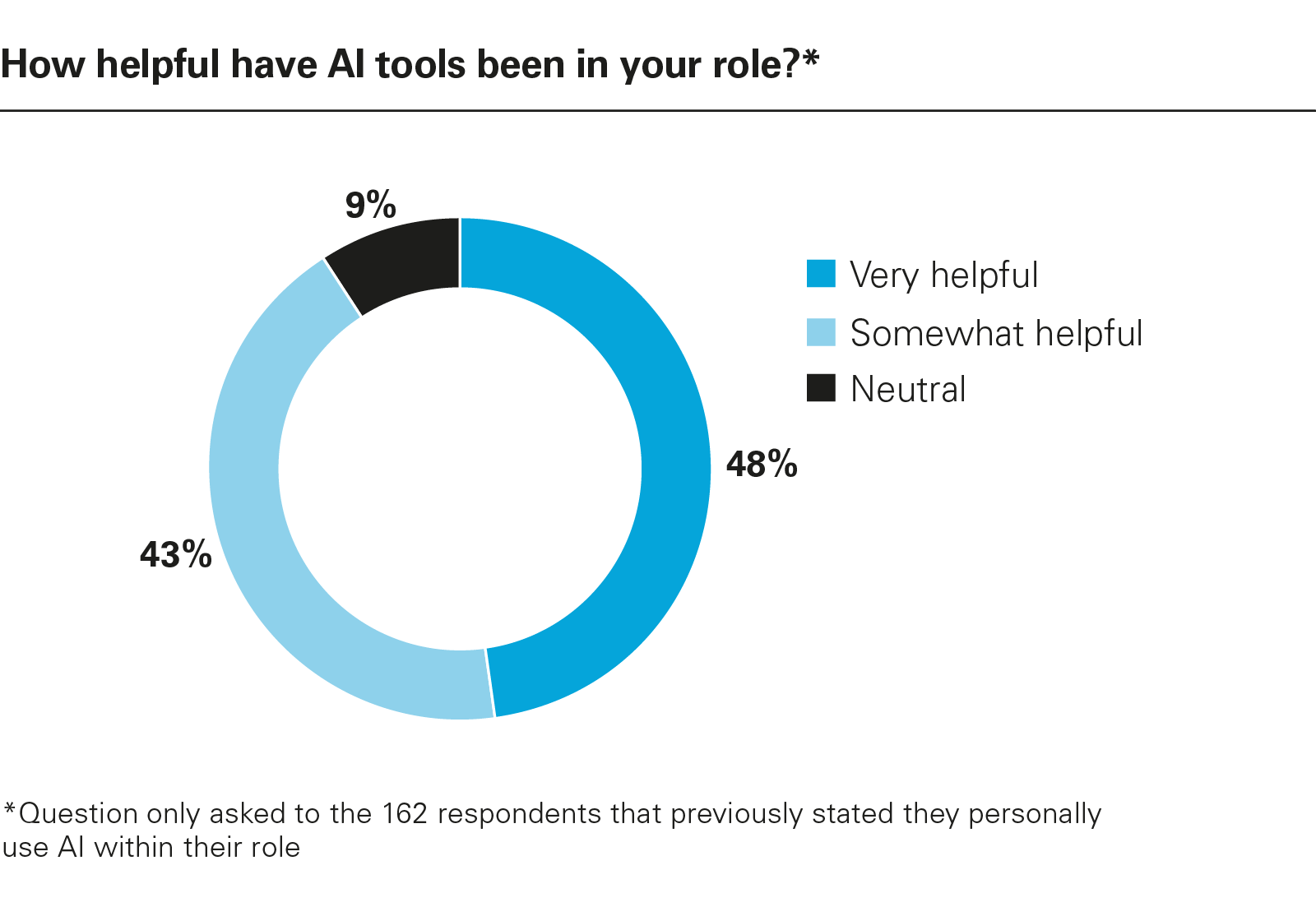

Furthermore, the perceived utility is overwhelmingly positive. None of the respondents who personally use AI tools found them unhelpful. Instead, 48 percent rate them as “very helpful,” while 43 percent find them “somewhat helpful.”

This strong endorsement suggests that once deployed, these tools are meeting user needs and delivering tangible benefits in their day-to-day tasks.

This perceived utility correlates strongly with organizational size and resources. Nearly three-quarters (73 percent) of users at the highest-revenue-generating respondents find AI tools “very helpful,” compared with only 37 percent at the lowest-revenue-generating respondents. This disparity is likely attributable to the maturity of AI implementation in larger firms, better integration with existing systems, more comprehensive training, and/or access to more sophisticated, tailored tools, which is consistent with the findings on longer tenure.

View full image: How helpful have AI tools been in your role?* (PDF)


AI challenges and concerns

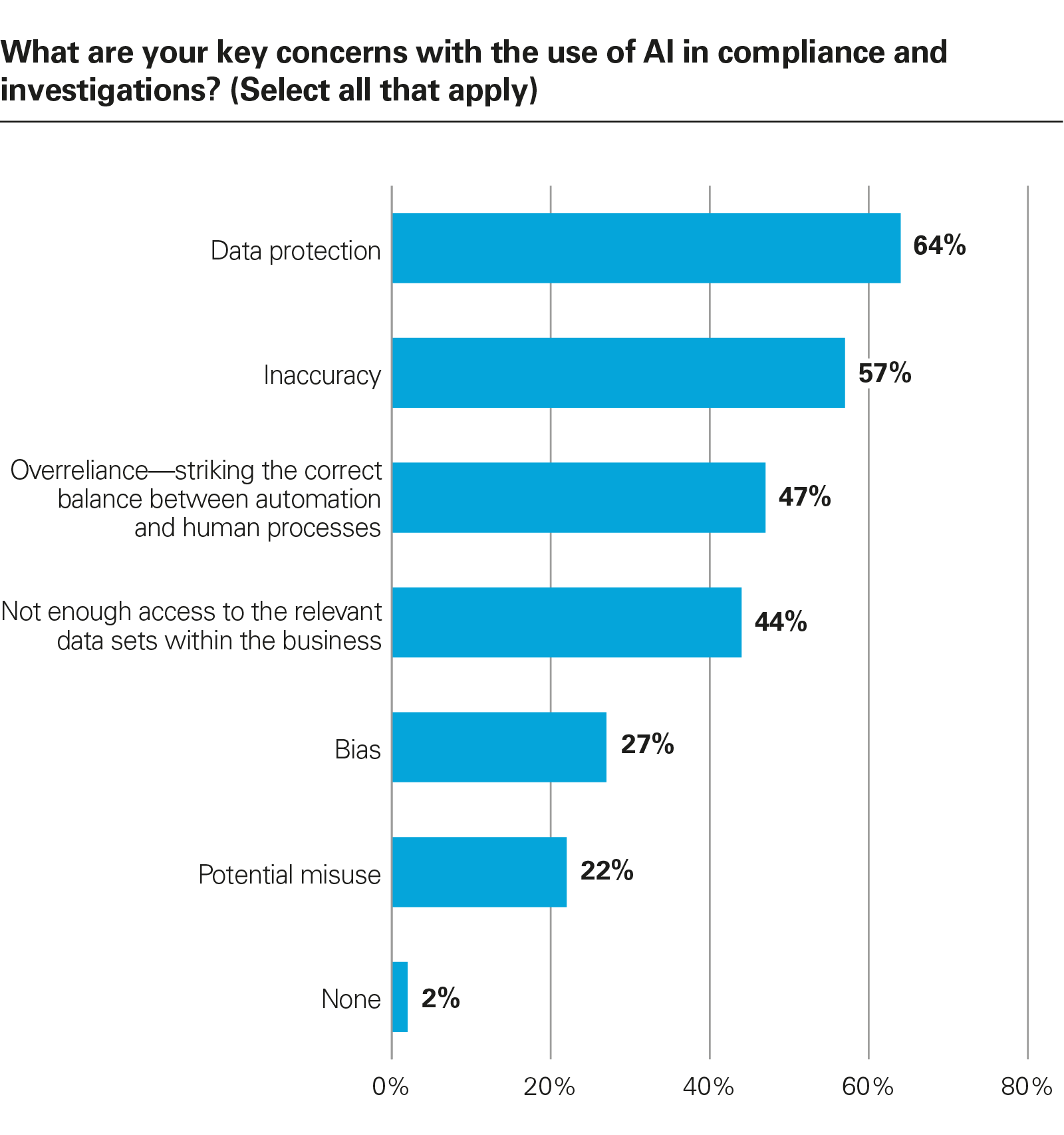

Despite positive user experiences, significant concerns remain regarding the deployment of AI. The key concerns center on data security and reliability. Data protection emerges as the top concern (64 percent), reflecting respondents’ anxieties about handling sensitive personal or corporate data within AI systems, ensuring compliance with privacy regulations such as the European Union’s General Data Protection Regulation (GDPR) and safeguarding against costly breaches. Inaccuracy (57 percent) is the second major concern, highlighting the risks associated with potential biases in algorithms, “hallucinations” in generative AI outputs, and the consequences of making legal and compliance decisions based on potentially flawed AI analysis.

Concerns about overreliance on AI are more pronounced in respondents that are publicly listed companies (55 percent) than in private organizations (35 percent). This finding may indicate a greater awareness in public companies of stricter governance expectations and a keener sense of the reputational and regulatory risks associated with inadequately supervised AI systems.

One often underestimated hurdle is cultural. Some legal and compliance teams remain skeptical of AI, fearing that automation could either dilute their influence or introduce errors for which they will be held responsible.

Others face institutional silos, where the data required for AI analysis is sequestered in legacy systems or resides in departments that do not coordinate effectively with legal and compliance teams. Without cross-functional alignment, even the most advanced AI models will struggle to reach their potential. Given this reality, it is perhaps not surprising that one of the questions that US DOJ prosecutors are encouraged to ask in the Evaluation of Corporate Compliance Programs (ECCP) guidance when assessing a company’s compliance program is whether compliance teams have sufficient access to relevant data sources for timely testing and monitoring of a company’s policies, controls and transactions.

View full image: Does your organization have a policy governing employee use of AI? (PDF)


Policies and frameworks: Catching up to technology

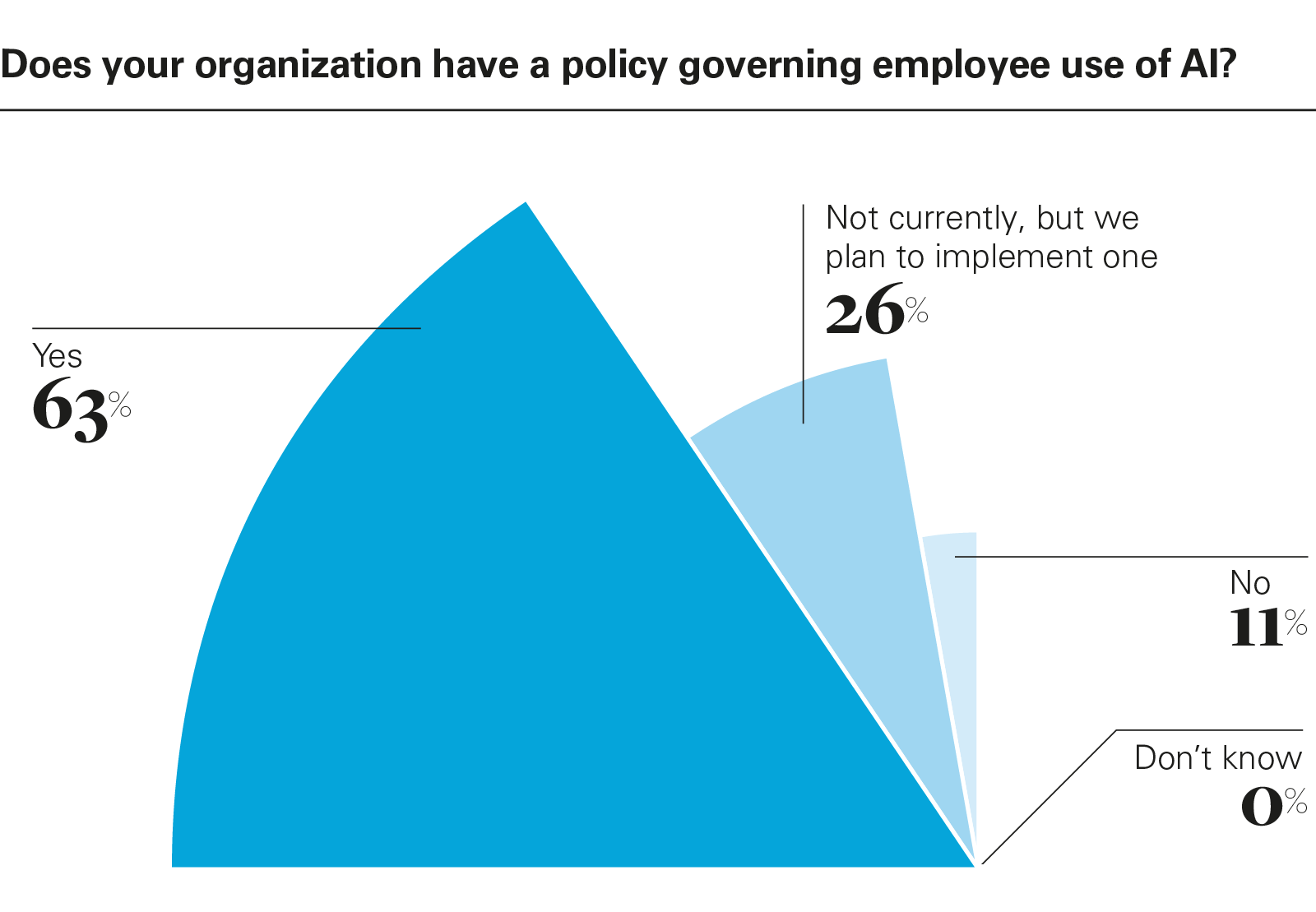

As AI adoption grows, organizations are stepping up by developing governance frameworks, although progress varies. Almost two-thirds (63 percent) of respondents report having a policy governing employee use of AI. A significant gap remains, however, with 26 percent stating they do not currently have a policy but plan to implement one. Policy implementation shows disparities similar to adoption rates: 79 percent of the highest-revenue respondents have an AI use policy compared with only 34 percent of the lowest-revenue respondents. Likewise, publicly listed respondents (75 percent) and corporates (68 percent) are ahead of private companies (44 percent) and private equity firms (30 percent) in establishing these guidelines.

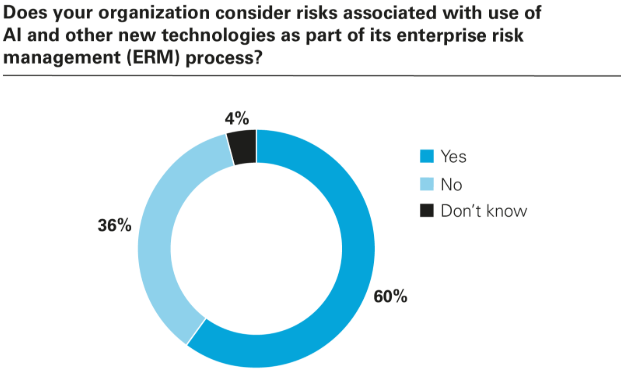

Beyond usage policies, integrating AI considerations into broader risk frameworks is crucial. Currently, 60 percent of respondents consider risks associated with the use of AI and other new technologies as part of their enterprise risk management (ERM) process. AI is also proving to be valuable in navigating the complexities of the regulatory environment. “When there are regulatory updates, we need to make sure that we remain adaptive to these changes,” explains a member of the ethics and compliance function of a US company. “AI has transformed the way we adapt to regulatory changes. Existing compliance procedures are altered without many issues. There is more confidence in our compliance management ability overall.”

Integrating AI into ERM is again more prevalent among larger and public respondents than among smaller and private entities. For example, 71 percent of respondents that are public companies incorporate AI into ERM versus 44 percent of private companies, and 79 percent of the highest-revenue respondents do so compared with 30 percent of the lowest-revenue respondents.

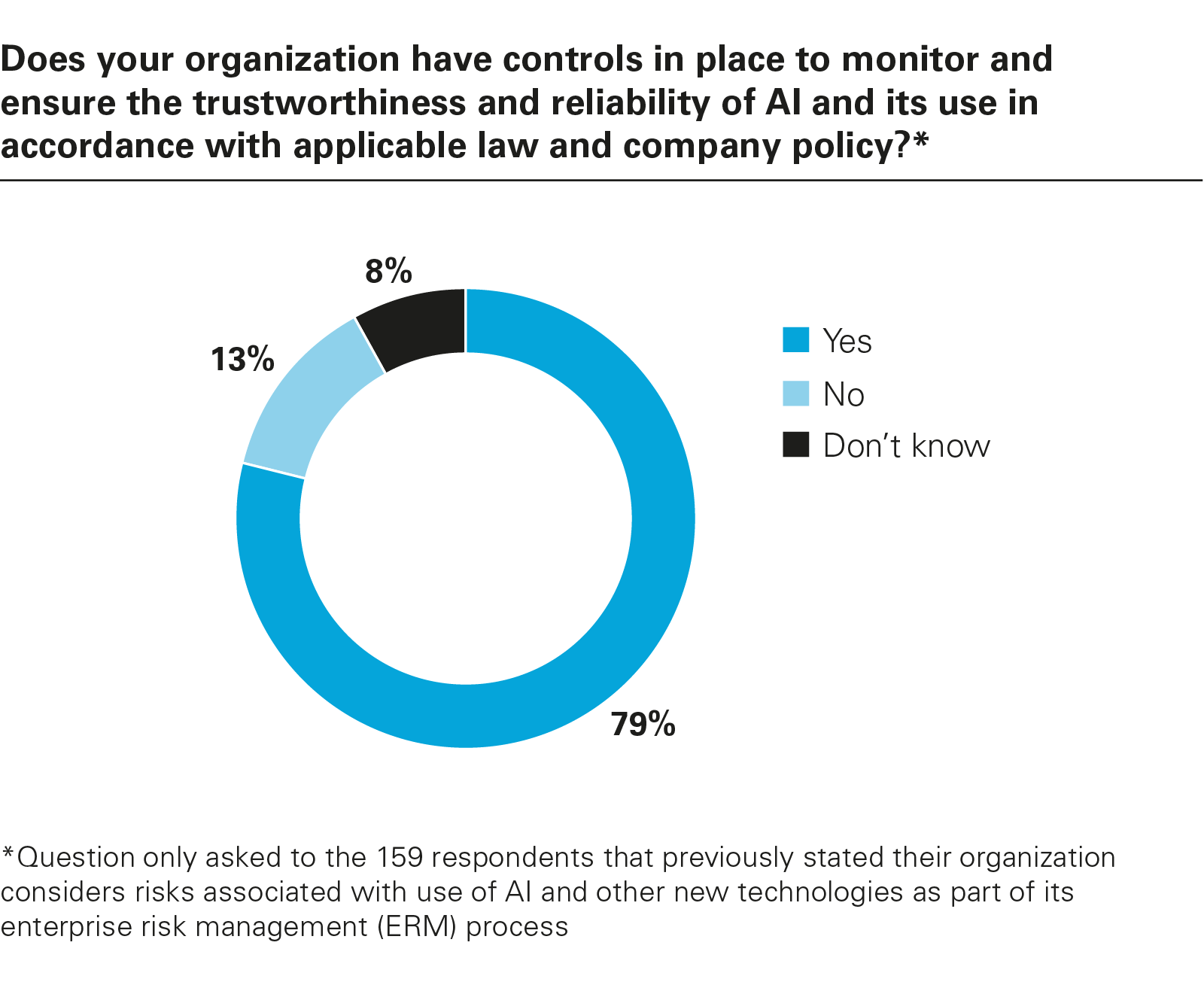

Encouragingly, among the 60 percent of respondents that consider these risks in ERM, a strong majority (79 percent) state they have controls in place to monitor and ensure the trustworthiness and reliability of AI and its use in accordance with applicable law and company policy. This finding suggests that organizations formally addressing AI risks are also actively implementing mitigation measures.

Looking ahead, several forces may accelerate AI integration in compliance. Regulatory bodies are starting to experiment with AI for enforcement and oversight, raising the stakes for regulated entities. At the same time, the emergence of AI-specific audit frameworks and ethical guidelines along with innovation programs run by governments and regulators may help hesitant organizations gain confidence. In the UK, the Financial Conduct Authority (FCA) publishes regular updates on the work it is doing to support the government’s “pro-innovation strategy” on AI (this work has included co-authoring a discussion paper on how AI may affect regulatory objectives for the prudential and conduct supervision of financial institutions).

As tools evolve, we may also see a shift from task automation toward decision augmentation—where AI is not just doing the work but helping to shape how compliance professionals think about risk.
