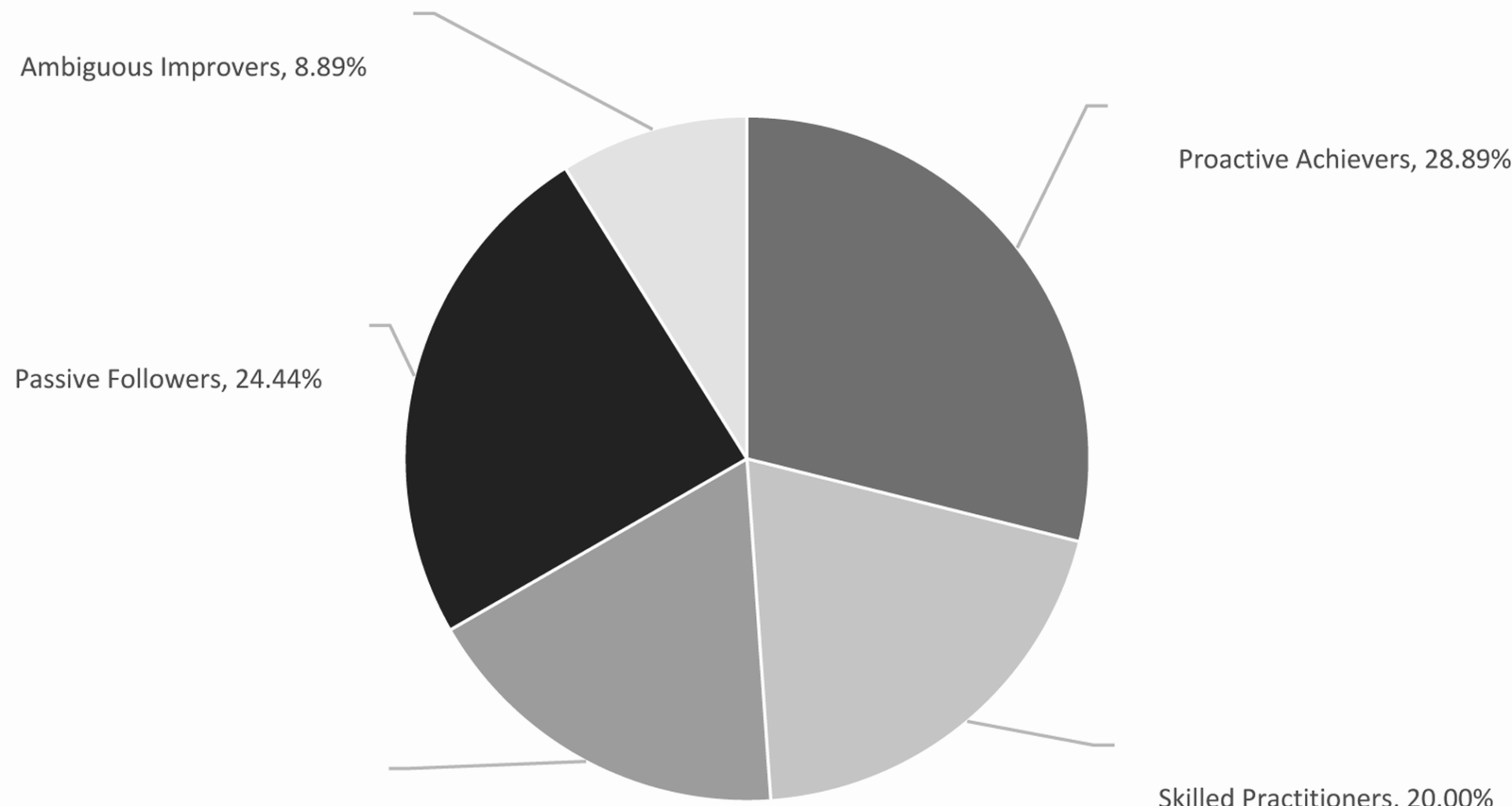

The five identified personas (Proactive Achievers, Skilled Practitioners, Social Collaborators, Passive Followers, and Ambiguous Improvers) exhibit distinct patterns in EPA-assessed competencies and social sensing-derived behaviors. Proactive Achievers (28.89%) demonstrated superior performance in diagnostic reasoning (9.2 ± 0.4) and treatment planning (8.9 ± 0.5), consistent with Mador et al.’s (2021) description of “high-achieving phenotypes” in CBME [3]. This study extends that work by integrating real-time social sensing data (e.g., 3+ hours daily on learning platforms), revealing how online knowledge sharing correlates with clinical competence [13]. Conversely, Passive Followers (24.44%) showed consistent underperformance in basic EPAs (history taking: 5.5 ± 0.6), which may reflect gaps in self-directed learning, a recognized limitation of traditional EPA assessments [14]. The small Ambiguous Improvers subgroup (8.89%, n = 4) scored low across all EPA dimensions (mean = 4.8 ± 0.5), suggesting foundational skill deficits that call for targeted, personalized support. Because the subgroup is so small, however, this estimate has limited statistical power and may not generalize; future research should use targeted sampling to characterize this learner type more robustly [15, 16].
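The subgroup percentages are shares of the single-center cohort of n = 45 learners. As a quick consistency check, the Python sketch below back-calculates the implied subgroup sizes. Only the total (n = 45) and the Ambiguous Improvers count (n = 4) are stated in the text; the other counts are inferred here by rounding and should be read as an assumption.

```python
# Back-calculate persona counts from the reported shares of the n = 45 cohort.
# Only the total and the Ambiguous Improvers count (n = 4) are stated in the
# text; the other counts are inferred (assumption) by rounding share * total.
TOTAL = 45

reported_shares = {
    "Proactive Achievers": 28.89,
    "Skilled Practitioners": 20.00,
    "Social Collaborators": 17.78,
    "Passive Followers": 24.44,
    "Ambiguous Improvers": 8.89,
}


def infer_counts(shares: dict[str, float], total: int) -> dict[str, int]:
    """Convert each reported percentage back to a whole number of learners."""
    return {name: round(pct / 100 * total) for name, pct in shares.items()}


counts = infer_counts(reported_shares, TOTAL)
for name, k in counts.items():
    # Recompute the percentage to two decimals to compare with the report.
    print(f"{name}: {k}/{TOTAL} = {100 * k / TOTAL:.2f}%")

# The inferred subgroups should account for every learner exactly once.
assert sum(counts.values()) == TOTAL
```

Each recomputed percentage matches the reported value to two decimals, which supports reading the shares as whole-learner counts.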

Theoretical implications for EPA-integrated profiling

This study bridges two theoretical strands: (1) ten Cate’s EPA framework, which provides objective competency benchmarks, and (2) the social sensing literature, which captures contextual learning behaviors. The integration addresses a critical gap: while EPAs evaluate “what learners can do,” social sensing reveals “how they do it” [17, 18]. For example, Skilled Practitioners (20.00%) achieved high procedural EPA scores (physical examination: 8.8 ± 0.3) but showed little theoretical engagement, a pattern that traditional EPA reviews alone would not detect [19]. From a design thinking perspective, these personas function as “goal-directed archetypes” that help educators anticipate learner needs. Social Collaborators (17.78%), for instance, excel in team communication EPAs (8.7 ± 0.4) but require structured knowledge consolidation to meet competency standards. This aligns with recent calls to merge EPA frameworks with learner-centered design [20–23]. The framework also builds on two earlier lines of work. Holmboe et al. (2017) noted that traditional EPA assessments often focus on summative outcomes and lack granular data on learning processes, a gap this study addresses by integrating social sensing [6]. Mador et al. (2021) identified learner phenotypes from static performance data, whereas our framework links dynamic behaviors, captured through social sensing, to EPA outcomes [3].
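To make the “what” versus “how” distinction concrete, the following Python sketch pairs EPA scores with social-sensing indicators in one hypothetical record and flags the Skilled Practitioner pattern of high procedural scores with low theoretical engagement. The class, the indicator name `theory_study_hours_per_week`, and both cut-offs are illustrative assumptions; this is not the study's persona-construction method.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class LearnerProfile:
    """Hypothetical record pairing EPA outcomes with social-sensing indicators."""
    learner_id: str
    epa_scores: dict[str, float]  # EPA name -> score on the study's 1-10 global scale
    behaviors: dict[str, float] = field(default_factory=dict)  # indicator -> value


def procedural_without_theory(profile: LearnerProfile,
                              epa_cutoff: float = 8.0,
                              theory_hours_cutoff: float = 1.0) -> bool:
    """Flag strong procedural EPA performance combined with low theoretical engagement.

    The EPA key, the behavioral indicator name, and both cut-offs are
    illustrative assumptions. Without behavioral data the flag cannot fire,
    mirroring the point that EPA scores alone miss this pattern.
    """
    theory_hours: Optional[float] = profile.behaviors.get("theory_study_hours_per_week")
    if theory_hours is None:
        return False  # no social-sensing data: the pattern is undetectable
    high_procedural = profile.epa_scores.get("physical_examination", 0.0) >= epa_cutoff
    return high_procedural and theory_hours < theory_hours_cutoff
```

For example, a profile with a physical examination score of 8.8 and 0.5 weekly theory hours is flagged, whereas the same EPA score with no behavioral record is not, because the rule deliberately requires both data sources.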

Practical applications for medical education

The persona framework suggests concrete educational strategies tailored to each learner type [24]. For Proactive Achievers, advanced EPA challenges such as complex case simulations are recommended to match their self-directed learning tendencies, while Passive Followers may benefit from EPA-based gamification, such as badge systems for completing core EPAs, to increase engagement [25–27]. Ambiguous Improvers, meanwhile, may benefit from mentorship tied to specific EPAs, such as weekly diagnostic reasoning workshops, to build foundational competencies [28]. These recommendations align with BMC Education’s focus on evidence-based interventions. For example, integrating social sensing data into EPA feedback systems can enable real-time monitoring of learner engagement, an approach supported by Liu et al.’s (2025) work on social media profiling for academic support [12]. This integration can both personalize medical education and link behavioral analytics with competency assessment to foster targeted skill development [29–31].
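One way to operationalize these recommendations in a feedback system is a persona-to-strategy lookup. The sketch below is hypothetical: the names `STRATEGIES`, `FALLBACK`, and `recommend` are illustrative, the entries paraphrase the strategies stated in this discussion, and it assumes persona labels have already been assigned upstream.

```python
# Hypothetical persona -> strategy lookup; entries paraphrase the strategies
# stated in this discussion. Persona assignment is assumed to happen upstream.
STRATEGIES: dict[str, str] = {
    "Proactive Achievers": "Advanced EPA challenges, e.g. complex case simulations",
    "Passive Followers": "EPA-based gamification, e.g. badges for completing core EPAs",
    "Ambiguous Improvers": "EPA-specific mentorship, e.g. weekly diagnostic reasoning workshops",
    "Social Collaborators": "Structured knowledge consolidation aligned with competency standards",
}

# Returned for personas without a strategy stated in the text
# (e.g. Skilled Practitioners) or for unrecognized labels.
FALLBACK = "No persona-specific strategy specified"


def recommend(persona: str) -> str:
    """Return the suggested strategy for a persona label, or the fallback."""
    return STRATEGIES.get(persona, FALLBACK)
```

Keeping the mapping as data rather than branching logic makes it easy for educators to revise strategies as intervention studies report results.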

Generalizability of the framework

The centralized platform used in this study simplified data collection, but many educational environments rely on fragmented systems. Possible adaptations include:

Multi-platform integration: Using APIs or standardized formats (e.g., xAPI) to aggregate data from learning management systems (LMS), clinical apps, and communication tools.

Alternative data sources: Supplementing with learning logs or instructor records if platform data are unavailable.

Simplified framework: Prioritizing EPA scores and key behavioral indicators (e.g., weekly study hours) in resource-limited settings.
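The adaptations above can be sketched in Python, assuming each source exports xAPI-style statements. The sketch uses the statement `id`, `actor.mbox`, `timestamp`, and `result.duration` fields from the xAPI statement format, drops statements forwarded by more than one system, and sums weekly study hours per learner as a simplified behavioral indicator. It assumes learners are identified by `mbox` (xAPI also allows other identifiers), ignores verbs, and parses only hour/minute/second durations; the function names are hypothetical.

```python
import re
from collections import defaultdict
from datetime import datetime

# Matches simple ISO 8601 time-only durations such as "PT1H30M" or "PT45M".
# Day, month, and year components are not handled in this sketch.
_DURATION = re.compile(
    r"^PT(?:(\d+(?:\.\d+)?)H)?(?:(\d+(?:\.\d+)?)M)?(?:(\d+(?:\.\d+)?)S)?$"
)


def duration_hours(iso: str) -> float:
    """Convert a time-only ISO 8601 duration to hours."""
    m = _DURATION.match(iso)
    if m is None:
        raise ValueError(f"unsupported duration: {iso}")
    h, mins, secs = (float(g) if g else 0.0 for g in m.groups())
    return h + mins / 60 + secs / 3600


def weekly_study_hours(*sources: list[dict]) -> dict[tuple[str, int, int], float]:
    """Merge xAPI-style statements from several sources; sum hours per learner per ISO week."""
    seen: set[str] = set()
    totals: dict[tuple[str, int, int], float] = defaultdict(float)
    for statements in sources:
        for st in statements:
            if st["id"] in seen:  # same statement forwarded by more than one system
                continue
            seen.add(st["id"])
            duration = st.get("result", {}).get("duration")
            if duration is None:
                continue  # no time-on-task information in this statement
            ts = datetime.fromisoformat(st["timestamp"].replace("Z", "+00:00"))
            year, week, _ = ts.isocalendar()
            totals[(st["actor"]["mbox"], year, week)] += duration_hours(duration)
    return dict(totals)
```

A statement that appears in both an LMS export and a clinical-app export is counted once, and the result is keyed by (learner, ISO year, ISO week), which suits resource-limited settings that track only weekly study hours alongside EPA scores.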

Study limitations

Several limitations should be acknowledged to contextualize the findings. First, the single-center sample (n = 45) may limit generalizability to other medical education systems. In particular, the Ambiguous Improvers subgroup (n = 4, 8.89%) showed marked deficits across all EPA dimensions (mean = 4.8 ± 0.5), but this finding should be interpreted with caution given the very small subgroup size [32]. Estimates from so few learners may be unstable, and future studies with larger samples (e.g., n ≥ 15) are needed to determine whether these performance gaps reflect true skill deficits or sampling variation. Second, the 6-month internship period constrains longitudinal analysis of EPA skill development. Third, although six core EPAs were assessed to capture clinical competencies, future studies should incorporate the full set of EPAs defined in ten Cate’s framework for more comprehensive profiling. Fourth, the EPA assessment used a global 1–10 scale rather than entrustment decision levels (e.g., the 5-level entrustment scale proposed by ten Cate, 2007), which may restrict generalizability to programs that use detailed milestone-based EPA evaluations; future studies should integrate milestone mapping to improve alignment with international EPA standards [35]. Finally, the personas have not yet been validated through educational interventions, which limits inferences about their practical utility in real-world training contexts [33, 34]. Recognizing these constraints highlights the need for multi-center, longitudinal research to extend the framework’s applicability and evaluate its impact on medical education.
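To illustrate why a subgroup of n = 4 yields unstable estimates, the Python sketch below computes the half-width of a 95% t-interval for the subgroup mean. It assumes the reported ± 0.5 is a standard deviation (the text does not specify) and, for the n = 15 comparison, hypothetically assumes the same SD; the critical t values are hard-coded to four decimals to avoid a SciPy dependency.

```python
import math

# Two-sided 95% critical values of Student's t (0.975 quantile), rounded to
# four decimals, keyed by degrees of freedom (df = n - 1).
T_975 = {3: 3.1824, 14: 2.1448}


def ci_half_width(sd: float, n: int) -> float:
    """Half-width of a 95% t-interval for a mean, given the sample SD and size n."""
    return T_975[n - 1] * sd / math.sqrt(n)


MEAN, SD = 4.8, 0.5  # assumption: the reported "± 0.5" is a standard deviation
for n in (4, 15):
    hw = ci_half_width(SD, n)
    print(f"n = {n:2d}: {MEAN} ± {hw:.2f} -> [{MEAN - hw:.2f}, {MEAN + hw:.2f}]")
```

Under these assumptions the interval spans roughly 4.0 to 5.6 for n = 4 but narrows to about 4.5 to 5.1 for n = 15, which is why a larger subgroup would help separate true deficits from sampling variation.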

Future directions

Future research should prioritize several key directions to build on these findings. Multi-center studies are essential to validate the generalizability of the identified personas across diverse medical education systems, while longitudinal designs will enable tracking of EPA performance trajectories across internship stages. Additionally, developing integrated tools that merge EPA assessments with social sensing data could facilitate real-time learner feedback and make educational interventions more adaptable. Finally, testing persona-driven strategies, such as tailored EPA training modules, will be critical for measuring their effect on educational outcomes and confirming the framework’s practical value in training competent medical professionals. Together, these steps would strengthen the scientific rigor of the approach and help bridge the gap between theoretical profiling and real-world educational application.
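For the longitudinal designs mentioned above, one simple way to summarize an individual EPA trajectory is an ordinary least-squares slope of score against time. The Python sketch below is an illustration only: the function name and the monthly assessment schedule are assumptions, and a full analysis would more likely use models that account for repeated measures across learners.

```python
from statistics import fmean


def epa_trajectory_slope(months: list[float], scores: list[float]) -> float:
    """Ordinary least-squares slope of EPA score against time (points per month)."""
    if len(months) != len(scores) or len(months) < 2:
        raise ValueError("need at least two paired observations")
    mx, my = fmean(months), fmean(scores)
    sxx = sum((x - mx) ** 2 for x in months)
    if sxx == 0:
        raise ValueError("time points must not all be equal")
    sxy = sum((x - mx) * (y - my) for x, y in zip(months, scores))
    return sxy / sxx
```

For instance, hypothetical monthly scores of 5.0, 5.5, 6.0, 6.5, 7.0, and 7.5 over a 6-month internship give a slope of 0.5 points per month; comparing such slopes across personas is one way to test whether, say, Ambiguous Improvers catch up over time.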

Conclusion

By merging social sensing data with EPA assessments, this study constructs robust learner personas that capture both competency outcomes and contextual behaviors. The framework addresses critical gaps in traditional EPA evaluations, offering a data-driven approach to personalized medical education. While validation in broader contexts is needed, these findings lay the groundwork for integrating dynamic behavioral analytics into competency-based training systems [–].