Digital health fatigue, in this article, refers to the diminishing returns and growing burden experienced by users bombarded with continuous streams of health metrics and nudges from monitoring technologies. Terms like ‘cyberchondria’ have also emerged, referring to health anxiety fueled by excessive digital self-tracking5. Recent research suggests that for select populations, interactive digital health tools and continuous health monitoring may impose significant cognitive and emotional burdens, including heightened anxiety from misinterpreting normal physiological fluctuations and the emergence of maladaptive tracking behaviors that transform well-intentioned health management into rigid, distressing preoccupations with metrics and targets6,7. For instance, users may misinterpret smartwatch electrocardiogram (ECG) alerts as signs of cardiac disease or become anxious when wearable sleep metrics suggest poor rest despite feeling well. Recent studies have suggested that information overload from digital health tools can lead to user fatigue and skepticism, ultimately resulting in reduced engagement, tool abandonment, and poorer health outcomes as users disengage from both the technology and, often, the healthy behaviors these platforms were designed to encourage6,7,8,9.

This phenomenon in part parallels concerns in traditional healthcare regarding medical (i.e., healthcare practitioner-initiated) ‘overtesting’ or low-value testing – where unnecessary diagnostic procedures generate false positives, incidental findings, and patient stress10. Some alerts or abnormal readings from wearables can serve as analogous “false alarms,” where benign variations trigger unnecessary concern or follow-up. In the digital health context, this issue potentially operates at a larger scale and frequency, with consumer-oriented tools continuously generating metrics and alerts that often involve interpretation without clinical or expert oversight11.

Navigating health information overload

Current approaches

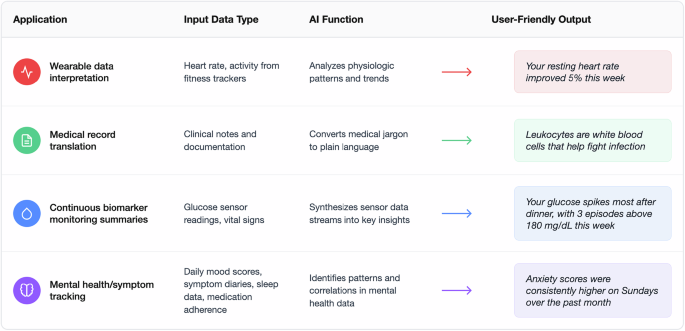

Current digital health technologies employ several strategies to manage the overwhelming volume of health data generated by modern monitoring systems. These information management strategies include reducing data volume through filtering, improving data presentation through consolidation and visualization, and, more recently, AI-mediated interpretation (Fig. 1)4,12,13.

Filtering often involves personalized threshold systems that allow users to customize when and how they receive health-related notifications. For instance, Fitbit allows users to set personalized heart rate alerts based on their individual norms rather than using average population-based thresholds14, theoretically reducing alert fatigue while maintaining sensitivity to physiologic changes.
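The idea of personalized thresholds can be illustrated with a minimal sketch. It is not Fitbit's method, which is not described here; it simply assumes that alert bounds are set at a fixed number of standard deviations around a user's own resting heart-rate history. The function names, the multiplier `k`, and the sample values are all hypothetical.

```python
from statistics import mean, stdev

def personal_thresholds(baseline_bpm, k=2.0):
    """Return (low, high) alert bounds derived from the user's own history.

    Assumption: bounds are mean +/- k sample standard deviations.
    """
    mu = mean(baseline_bpm)
    sigma = stdev(baseline_bpm)
    return mu - k * sigma, mu + k * sigma

def filter_alerts(readings_bpm, bounds):
    """Keep only readings that fall outside the personalized bounds."""
    low, high = bounds
    return [r for r in readings_bpm if r < low or r > high]

# Hypothetical resting heart-rate history for one user (beats per minute).
baseline = [58, 60, 62, 59, 61, 60, 63, 57]
bounds = personal_thresholds(baseline)

# Only readings outside this user's individual range would trigger a notification.
alerts = filter_alerts([59, 64, 72, 61, 50], bounds)
```

With this illustrative baseline, readings inside the user's usual range are suppressed and only the two outlying values are surfaced. A wider `k` trades sensitivity for fewer notifications.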

Data consolidation and visualization strategies, exemplified by platforms like Apple HealthKit, have also tried to address the cognitive burden of monitoring disparate health parameters15. These systems can synthesize health metrics from various devices or sensors, such as heart rate data from a watch, sleep patterns from wearables, and glucose readings from continuous monitors, into unified, intuitive dashboards that allow users to track their health holistically rather than through fragmented applications.
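As a rough illustration of consolidation, the sketch below merges readings from several hypothetical sources into a single per-day summary. It does not use or represent any real platform's API; the record layout, source names, metric names, and the choice of a daily mean are assumptions made only for this example.

```python
from collections import defaultdict
from statistics import mean

def consolidate(records):
    """Merge (day, source, metric, value) records into one summary per day.

    Assumption: each metric is summarized by its daily mean, regardless of
    which device produced the reading.
    """
    grouped = defaultdict(lambda: defaultdict(list))
    for day, _source, metric, value in records:
        grouped[day][metric].append(value)
    return {
        day: {metric: round(mean(values), 1) for metric, values in metrics.items()}
        for day, metrics in grouped.items()
    }

# Hypothetical readings from three separate devices on the same day.
records = [
    ("2024-05-01", "watch", "heart_rate_bpm", 62),
    ("2024-05-01", "watch", "heart_rate_bpm", 66),
    ("2024-05-01", "ring", "sleep_hours", 7.5),
    ("2024-05-01", "cgm", "glucose_mg_dl", 98),
    ("2024-05-01", "cgm", "glucose_mg_dl", 104),
]

dashboard = consolidate(records)
```

The result is one dictionary per day with a single value per metric, which is the kind of unified view a dashboard would render. Note that the summary reduces the number of figures shown but does not tell the user what they mean.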

However, amongst other challenges, these strategies still place the burden on users to interpret what their health data actually means and determine appropriate responses to concerning patterns.

The promise of AI health companions

Artificial intelligence-driven health companions have been proposed as a potential solution to the digital health information dilemma. Proponents have suggested that these assistive AI systems could serve as intelligent mediators between users and their health information, helping to filter, contextualize, and personalize health data in ways that promote understanding without overwhelming users with every data point. They could notify individuals about health concerns and suggest interventions at a level of detail and based on thresholds predetermined by that individual or, perhaps, determined by the system to be appropriate for that individual.

Several promising technologies may help enable these AI health companions. Large language models (LLMs) adapted for healthcare applications represent one such technology. Google’s Personal Health LLM (PH-LLM), for example, shows how such models can be fine-tuned to interpret raw sensor data from wearables and generate personalized health insights16. By distilling complex data into actionable recommendations, these systems aim to support better sleep and fitness behaviors, and early evaluations report near expert-level performance. Across 857 expert-curated cases constructed from real-world wearable data, PH-LLM’s recommendations were rated comparable to human experts for fitness and only slightly below expert ratings for sleep, though still receiving the top score 73% of the time in the sleep domain. By summarizing dense streams of wearable metrics into clear, prioritized guidance, such systems may also help users navigate information overload and reduce the digital health fatigue that often arises from a constant influx of uninterpreted data. Similarly, Google’s recent Personal Health Agent (PHA) has introduced a multi-agent health companion framework composed of a data science agent (analyzing personal and population health data), a domain expert agent (contextualizing findings with medical knowledge), and a health-coach agent (e.g., supporting behavior change through evidence-based and motivational strategies), illustrating how layered generative architectures can operationalize the filtering and personalization functions envisioned for AI health companions17,18,19.

In parallel, recent research demonstrates that LLMs can be fine-tuned to help patients navigate and comprehend their medical records, translating complex medical terminology into accessible explanations and reducing information overload20. For instance, a patient reviewing their oncology clinic note could be presented with a list of unfamiliar but clinically important terms, such as ‘rituximab’ or ‘large cell lymphoma’, each linked to plain-language explanations, helping them focus on the key elements of their care plan rather than being overwhelmed by dense jargon.

In clinical practice, LLMs have demonstrated the ability to translate continuous glucose sensor data into a concise, plain-language two-week overview; clinicians rated these generated summaries highly for accuracy, completeness, and safety21.

Taken together, early evidence suggests LLM-powered companions can convert and triage high volumes of incoming data into patient-specific guidance, potentially reducing cognitive load while still surfacing clinically important insights – provided they meet standards for safety validation, privacy and security, and ongoing user and clinical oversight.