November 26, 2025

By Karan Singh

The global map of FSD just got a little bit larger. Following several months of speculation and an announcement earlier this month, Tesla has officially flipped the switch in South Korea, making it the seventh global market to receive FSD (Supervised).

The rollout has commenced with Tesla’s usual modus operandi for FSD releases, landing in the hands of key influencers and early adopters first. While the sample size is currently small, the first reports from the streets suggest that FSD is remarkably well adapted to driving in one of Asia’s busiest and most challenging driving environments.

$TSLA 🇰🇷 FSD is now live in Korea, and the way it navigates those shared streets is impressive 🔥

On this tight road, the system appears to have a lower, more appropriate sensitivity to pedestrians 🔥

This eliminates the problem of sudden, unnecessary braking 🔥

Amazing 🔥 pic.twitter.com/Bpk93twt9O

— Ming (@tslaming) November 22, 2025

Notably, this is also the first overseas rollout of FSD v14. Other countries like China, Australia, and New Zealand remain on FSD v13.2.9 for the time being. North America, including Puerto Rico, Canada, and Mexico, is on the most current builds of FSD v14.

The Vanguard Fleet: AI4 Model S and X

This isn’t a larger wave covering all of Tesla’s models like it was in Australia and New Zealand. Instead, the initial wave is strictly limited to Model S and Model X vehicles equipped with AI4 (HW4) computers.

There is a geopolitical reason for this exclusivity. The rollout leverages the Korea-U.S. Free Trade Agreement (FTA), which allows a specific quota of U.S.-manufactured vehicles (like the Fremont-built Model S and X) to be imported into Korea under U.S. safety standards, bypassing some of the domestic certification hurdles that currently block the Chinese-made Model 3 and Model Y fleets.

Once Tesla completes the additional safety certifications required to deploy FSD on the Model 3 and Model Y, we expect those AI4-equipped vehicles to quickly follow along with the rest of the fleet.

Local Validation

The star of this initial rollout has been the performance of FSD in Seoul’s notoriously dense and aggressive traffic. The first comprehensive looks at the software highlighted its ability to handle challenging cut-ins, complex intersections, and narrow lanes throughout Korea’s capital city.

$TSLA 🇰🇷

BREAKING : I just experienced FSD (Supervised) for the first time in Korea.

The vehicle is Model X and the FSD version is *V14.1.4.

We have yet to see any official cases of V14 being applied outside of the United States and Canada.

I’ve just completed my first FSD run… pic.twitter.com/521P7Ckgn5

— Tsla Chan (@Tslachan) November 21, 2025

What’s Next?

With the software now live on the initial few vehicles, Tesla will be closely monitoring reports from Korean influencers and early access users. If reports trend well, which they seem to be, we can expect FSD v14.1.4 (or potentially v14.1.7) to roll out to a larger set of AI4 Model S and Model X vehicles across South Korea.

For the vast majority of Korean owners driving the ever-popular Model 3 and Model Y, the wait will continue. As these vehicles fall under a different safety regulatory framework, they’ll need additional certification before they can join the FSD fleet. However, the deployment of the software on Tesla’s flagship vehicles will also help to serve as a critical proof-of-concept: the software works.

The next hurdles for South Korean owners will be legal, not technical.


November 26, 2025

By Karan Singh

For years, the critique of Tesla’s FSD approach was that it lacks common sense. It excels in the mechanics of driving, including lane keeping, braking, and turning. While it has improved significantly, especially in FSD v13 and v14, it can still struggle with the high-level reasoning required for complex scenarios.

A car might stop for a construction worker holding a “Slow” sign, but would it understand that the worker is looking away and chatting, while waving traffic through? Would it understand that the faster-moving lane to the right is not the one to be in at the moment, because of upcoming lane closures?

These are real scenarios FSD struggles with today, and Tesla is now actively hiring an AI engineer to solve this exact problem. This new job posting, for an “AI Engineer, Reinforcement Learning and Distillation,” is a role explicitly tasked with developing smarter, more contained models.

Instinct vs Reason

To understand why this matters, we can examine the way Andrej Karpathy, Tesla’s former Director of AI, discussed System 1 (fast and intuitive) versus System 2 (slow and reasoning) thinking. This framework defines how human thinking works, and in short, we need to apply that same framework to how an AI model works.

System 1 thinking is fast, instinctive, and emotional. It is what you use when you drive a familiar route to work. System 2, meanwhile, is slower, deliberative, and logical. It’s the type you use when navigating through a construction zone or series of highway interchanges.

Current end-to-end FSD is effectively a perfectly accurate System 1. It maps pixels to controls with incredible reaction time, but it lacks a dedicated System 2: a parallel reasoning layer that can think through a problem before acting.

In fact, Tesla’s job posting admits this: “These models continue to struggle in real-world physical reasoning, often struggling to tell left from right.”

Distillation

The second half of the job title, Distillation, is the key to how this System 2 thinking will actually reach your driveway. Reasoning models are massive. They require immense amounts of compute and thinking time that a moving car simply doesn’t have. You can’t wait 10 seconds for your car to ponder whether to go or stop.

That’s where distillation comes in. Tesla plans to train these massive System 2 reasoning models in its own data centers, where they will act as “teachers,” generating perfectly reasoned solutions to millions of complex driving edge cases.

Those solutions can then be distilled into smaller, faster “student” models that can run side-by-side with FSD on your car’s local inference chip. The result: a car that has the instincts of a reasoning genius but maintains its perfect pixel-to-control reaction time.
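For readers curious what the teacher-and-student idea looks like in practice, here is a minimal sketch of the classic knowledge distillation loss from the general machine learning literature. It is not Tesla's method, which has not been published. The three maneuver scores and the function names are made up for illustration.

```python
import math

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a higher temperature softens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the teacher's softened output to the student's."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    # Scaling by T^2 is the usual convention so gradients keep a
    # comparable magnitude across temperatures.
    return kl * temperature ** 2

# Hypothetical scores for three maneuvers: [go, yield, stop]
teacher = [0.5, 3.0, 1.0]        # large "reasoning" model prefers yield
good_student = [0.4, 2.8, 1.1]   # small model that mostly agrees
poor_student = [3.0, 0.5, 1.0]   # small model that would rather go

print(distillation_loss(teacher, good_student))
print(distillation_loss(teacher, poor_student))
```

The student that agrees with the teacher gets a much smaller loss than the one that disagrees. Training pushes that loss down, which is how a small model can inherit the judgment of a far larger one without needing its compute at drive time.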

Distillation isn’t just optimization; for HW3 owners, it is the only bridge to the v14 era.

What About HW3?

When you look at it broadly, distillation is exactly the means that Tesla used to build FSD v12.6.4, which is a distilled, simplified version of FSD v13.2.9. Once reasoning is solved, these engineers will likely move on to distilling the simplified FSD v14-lite that was mentioned at the Q3 2025 Earnings Call.

Reasoning is Key

This hiring push hints at Tesla moving beyond simply throwing more and more data at FSD to train it better. Now, they are looking at changing the way the car thinks ahead, moving from pure imitation to a genuine physical understanding of the world around it.

For the edge cases that currently cause FSD to hesitate or throw up red hands, this reasoning layer is likely the final bridge from Supervised to Unsupervised.

There’s a lot to look forward to. Reasoning is a space Tesla hasn’t really stepped into yet, but it is one where we’ll see some of the greatest and most visible progress, compared with the more granular, iterative steps between v13 and v14.

November 26, 2025

By Karan Singh

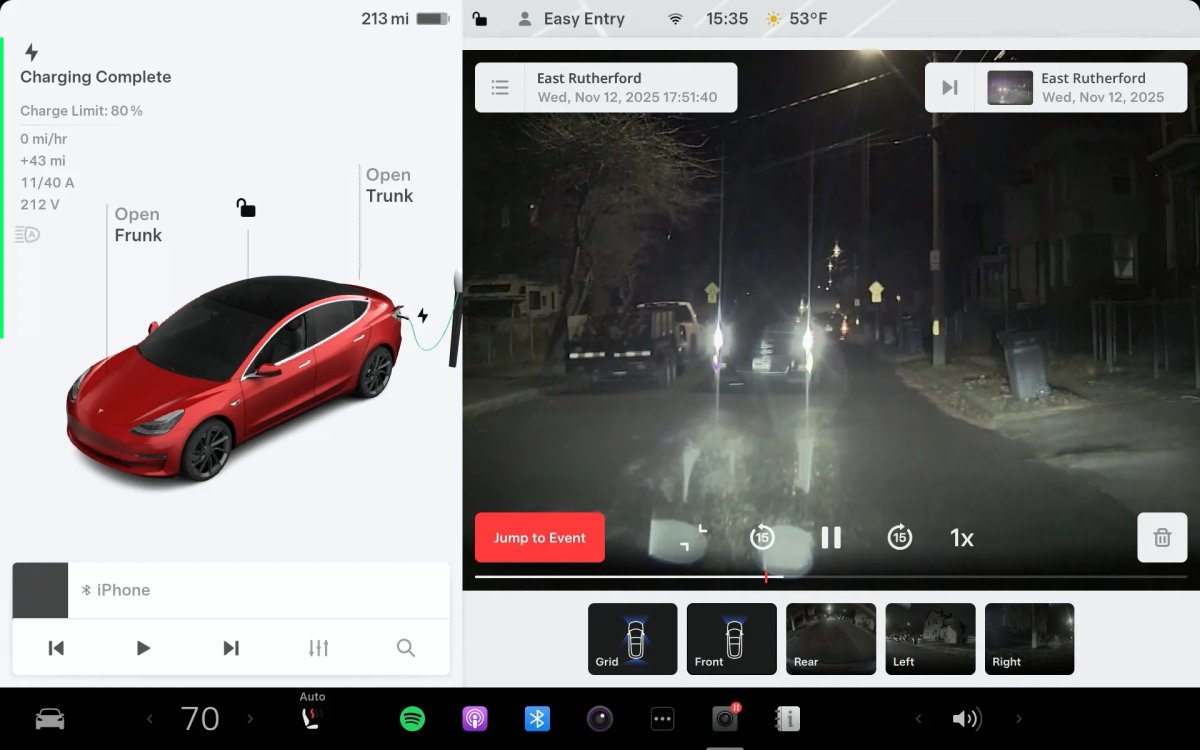

In a surprising and welcome move for the legacy fleet, Tesla’s baseline for the upcoming Holiday Update, 2025.44, updates the Dashcam Viewer for vehicles on the older Intel Atom MCU2 processor.

Since the 2025 Spring Update, the improved viewer that featured streamlined controls and a new layout was exclusive to AMD Ryzen vehicles, as the older Intel chips were too slow to handle the simultaneous video streams and UI overlays. With this update, Tesla has achieved significant optimization, bringing feature parity to millions of older vehicles with the Intel infotainment processor.

What’s New?

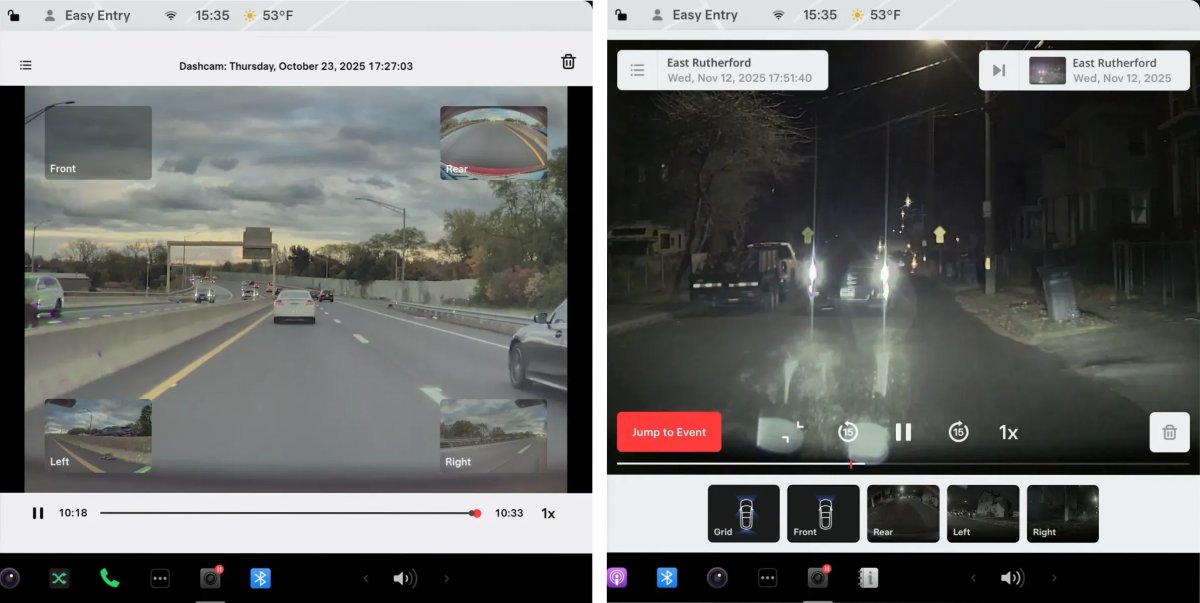

The difference is immediately noticeable. The old interface, which displayed small camera feeds in the four corners of the screen and obscured the main video, has been removed.

The new Intel Dashcam Viewer matches the Ryzen experience. All the cameras are neatly laid out at the bottom of the UI, which leaves a larger main playback area and makes it easier to see details.

However, this isn’t just an improved UI. It also includes additional features. The scrubber bar now includes the Jump to Event button, which immediately brings you to the event that triggered the Dashcam to save the video – whether it be a Sentry event or a horn honk. You can also now jump forwards and backwards in 15-second increments using the dedicated on-screen buttons, replacing the clumsy manual scrubbing of the past.

There are other additions too. Tesla also added an “Up Next” button that lets you easily jump to the next video clip, a new “uncropped” button that lets you view the full, uncropped video, and a new Grid view.

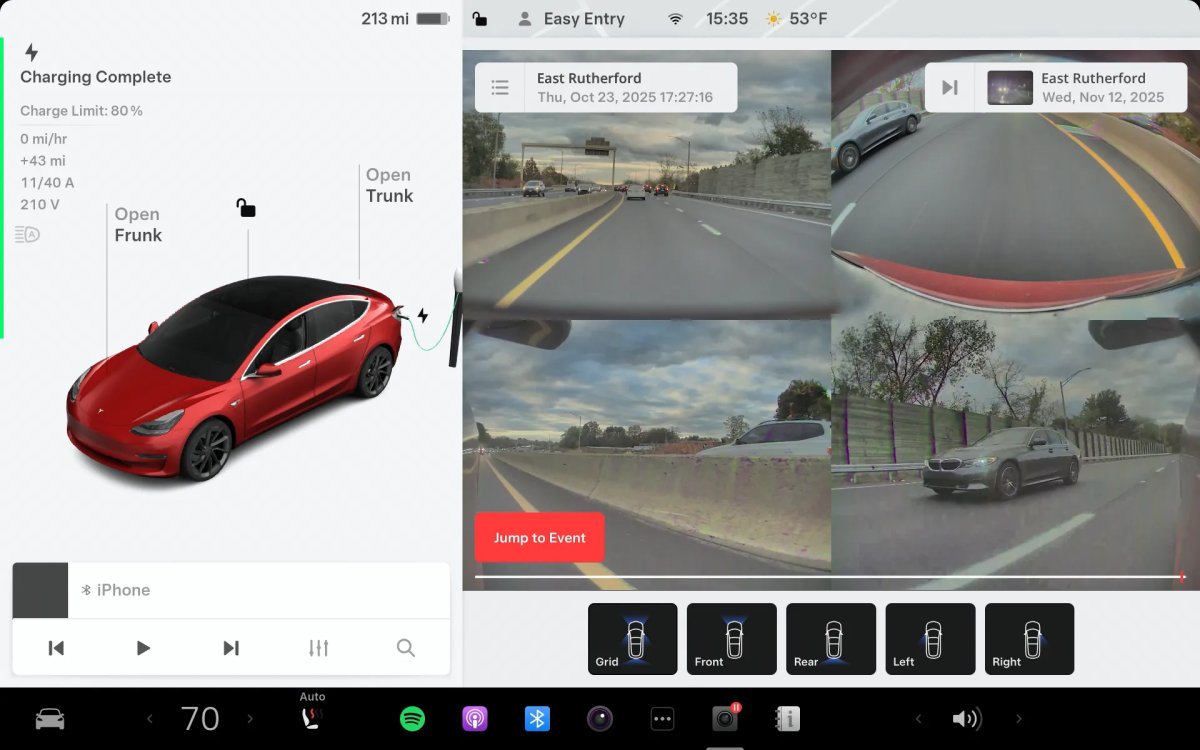

New Grid View

One of the biggest improvements in this update is the new grid view. Much like the Tesla app, which lets you view multiple camera feeds at once, the new Dashcam Viewer can show the four Dashcam cameras in a grid.

You’re able to view the front, rear and two repeater cameras at once when selecting ‘Grid’ at the bottom. While it’s not immediately obvious, the black box at the bottom denotes the cameras that are currently being viewed. The new grid layout makes it much easier to find the clip you’re looking for or to get a good idea of whether anything happened to the vehicle.

The ability to adjust the playback speed is still there, as are the play/pause buttons, delete and other functions.

Optimization Wins

This update removes another one of the major UI discrepancies between the Intel and Ryzen generations, with the last remaining one being the full-screen parked vehicle UI, which we hope eventually arrives on Intel vehicles too.

Historically, the Intel Atom processor has struggled with the graphical load of decoding multiple video streams while rendering the UI overlay. The arrival of this feature in 2025.44 suggests Tesla’s software team has been hard at work finding tricks to optimize the video decoding pipeline. Ryzen-based vehicles still feature two additional cameras for Dashcam and Sentry Mode, but the vehicles now at least share the same UI.

This improvement to the Dashcam Viewer is the lone improvement in update 2025.44 before the Holiday Update arrives, but it shows that Tesla still cares about the millions of older vehicles on the road today.