On Christmas morning, a deceptively simple X post from Elon Musk set the tech world buzzing — not for a flashy product announcement, but for a video demonstration of something quietly spreading through Tesla’s software updates: real-world AI navigation. “This actually works in Teslas right now,” Musk wrote in a terse caption beneath a clip that has since racked up tens of millions of views.

The video, originally shared on X by the popular meme account Planet of Memes on December 25, shows a Tesla Cybertruck owner issuing a single natural-language command to the vehicle’s onboard AI: find a Home Depot, then a nearby Supercharger, then a local coffee shop that isn’t a chain.

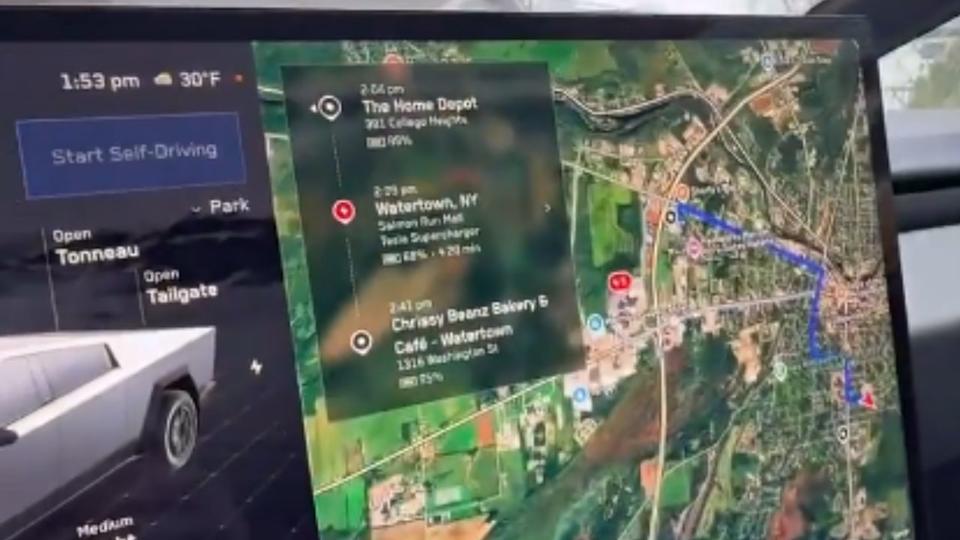

With no button-tapping or map fiddling, the truck’s assistant parses the request and produces a full multi-stop route, changing destinations on the fly and setting up navigation while the driver keeps their hands on the wheel. The software handles the whole sequence from a single voice request. The clip’s popularity reflects a moment that feels, for many drivers, like science fiction finally becoming everyday reality.

From Beta to Your Dash

Image Credit: Elon Musk/X.

The engine behind this capability is Grok, the conversational AI developed by Elon Musk’s xAI. Originally rolled out to Teslas earlier this year as a fun in-car assistant, more chatbot companion than functional tool, Grok has seen its role expand steadily through software updates.

In the major 2025 Holiday Update (build 2025.44.25.x and beyond), Tesla enabled Grok to go beyond casual chat and interact meaningfully with vehicle navigation: adding, editing, and chaining destinations, discovering points of interest, and setting up routes without manual input.

Previously, Grok in Tesla cars was confined to text-based queries or simple replies; voice activation existed, but it didn’t control vehicle functions. The new integration, which Tesla describes as “navigation commands” in beta, bridges that gap, letting the AI actually shape how the car guides you. Drivers can long-press the steering wheel’s voice button and speak naturally, as they would to a co-pilot. Grok then interprets the request, locates places, sequences the stops, and feeds them into the navigation system.

A Real-World Test Case

The specific demonstration that went viral makes the feature feel tangible. It’s not a staged demo reel from Tesla’s engineers or an animation. It’s a Cybertruck on a normal errand run: navigate to Home Depot, then find a Supercharger close to a coffee shop that isn’t a big chain. That last bit is crucial. Drivers have long complained that spoken navigation systems are too literal: ask for a “Starbucks,” and you get a list of nearby chain locations.

Giving the AI context (“not a chain”) and seeing it follow that instruction into a coherent route is exactly the sort of nuance voice recognition has struggled with historically, and a huge part of why viewers found the clip compelling.

The implications go beyond viral internet fame. If Tesla’s Grok can reliably build and modify multi-stop routes from conversational prompts, and not just single destination commands, we may be witnessing a turning point in how humans interact with vehicles.

Traditional voice systems often require precise, structured phrases (“Navigate to X”), and even then, can misinterpret things. Grok’s AI approach aims for intent understanding, not keyword matching, which opens the door for assistants that cope with the messy, real-life way people actually speak.
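To make that contrast concrete, here is a minimal Python sketch. It is not Tesla’s or xAI’s implementation, and every name in it (`COMMAND_PATTERN`, `parse_structured_command`, `Stop`, `viral_request`) is hypothetical. The first half shows a rigid “Navigate to X” grammar that rejects the free-form request from the clip; the second half is a hand-written illustration of the kind of structured multi-stop plan an intent-based assistant would need to produce, including the “not a chain” constraint.

```python
import re
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical rigid grammar: only "navigate to <place>" is understood.
COMMAND_PATTERN = re.compile(r"^navigate to (?P<place>.+)$", re.IGNORECASE)


def parse_structured_command(utterance: str) -> Optional[str]:
    """Return the destination if the utterance fits the fixed pattern, else None."""
    match = COMMAND_PATTERN.match(utterance.strip())
    return match.group("place") if match else None


@dataclass
class Stop:
    """One stop in a multi-stop plan (illustrative structure only)."""
    category: str                                      # what the driver asked for
    exclude: List[str] = field(default_factory=list)   # constraints such as "chain"
    nearby: bool = False                               # should be close to the route


# Hand-written target for the request in the clip. A real assistant would have
# to derive this from speech; this sketch does not attempt that step.
viral_request = [
    Stop("Home Depot"),
    Stop("Supercharger", nearby=True),
    Stop("coffee shop", exclude=["chain"]),
]

free_form = "Find a Home Depot, then a nearby Supercharger, then a coffee shop that isn't a chain"
print(parse_structured_command("Navigate to Home Depot"))  # fits the fixed pattern
print(parse_structured_command(free_form))                 # does not fit, so None
```

The fixed pattern handles exactly one destination and nothing else, which is why the chained, constraint-laden request falls through. Turning that sentence into something like `viral_request` is the hard part, and it is the step the article attributes to Grok.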

However, the rollout is not without kinks. Early users are reporting variability in how smoothly Grok embeds routes into Tesla’s navigation. In some cases, you still have to confirm options or tap the screen if the system misreads a location or offers multiple matches. That’s typical for beta-tier tech, and Tesla’s over-the-air updates suggest improvements are continuous rather than instantaneous.

Beyond Navigation

Grok’s expanded role is part of a broader trend at Tesla: turning its vehicles into AI-enhanced digital platforms. Alongside navigation, the technology already handles chat, information lookup, and conversational tasks that once required a phone. This loosens the grip of touch-centric interaction and moves toward a future where speaking to your car feels more like talking to a savvy passenger than commanding a cold interface.

The viral clip means millions of people are seeing what the next generation of car tech feels like. Whether you’re a Tesla skeptic or a self-driving enthusiast, the idea that an AI can plan an efficient, context-aware route through our chaotic world of errands, chargers, and caffeine stops is undeniably captivating.