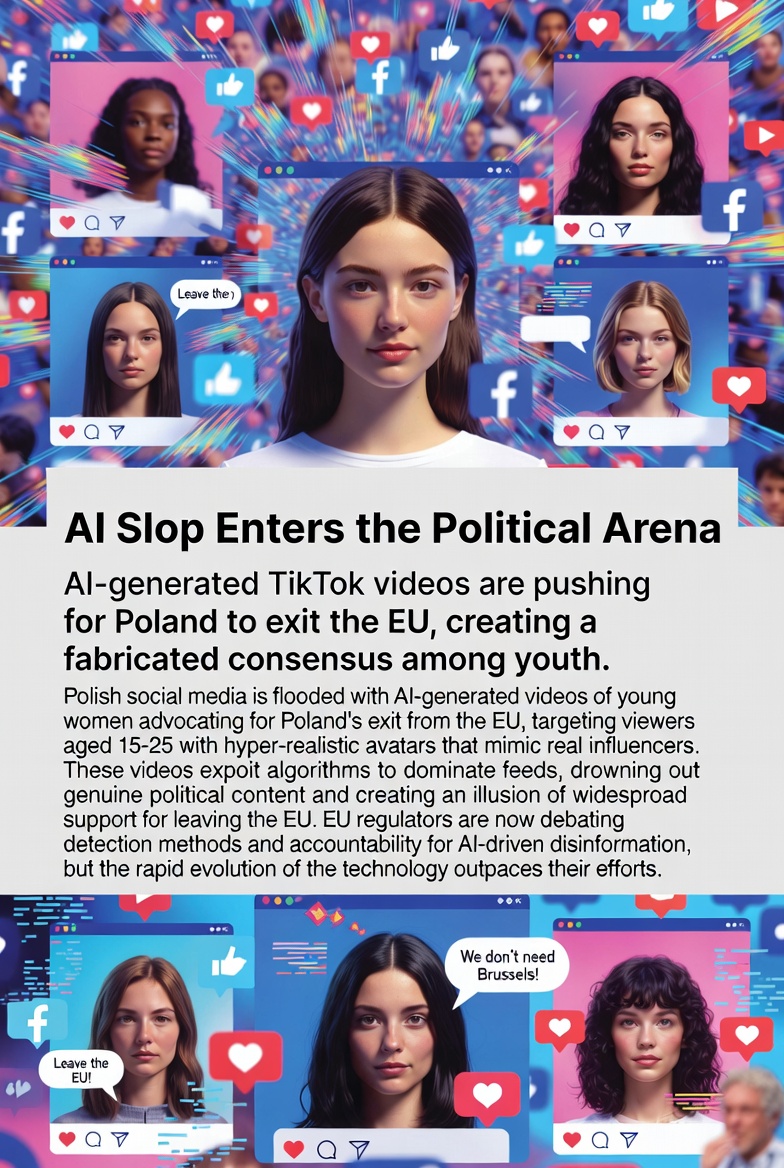

In late 2025, a wave of seemingly authentic TikTok videos featuring young, attractive Polish women clad in national symbols began circulating, passionately advocating for “Polexit” – Poland’s withdrawal from the European Union. These clips, tailored for viewers aged 15-25, included messages like: “I want Polexit because I want freedom of choice, even if it will be more expensive. I don’t remember Poland before the European Union, but I feel it was more Polish then.”

But these women don’t exist. They are entirely AI-generated, complete with realistic faces, voices, and gestures. The account behind them, “Prawilne Polki,” had been dormant or posting unrelated English content since 2023 before suddenly rebranding in mid-December 2025 to flood the platform with pro-Polexit propaganda.

Polish authorities quickly labeled this a coordinated disinformation campaign, almost certainly orchestrated from Russia. Deputy Digital Affairs Minister Dariusz Standerski formally asked the European Commission to open an investigation into TikTok under the Digital Services Act (DSA), arguing that the platform had failed to adequately moderate synthetic content and mitigate systemic risks to democratic processes.

This incident marks a pivotal moment: low-quality, mass-produced AI-generated content (“AI slop”) is no longer just clogging feeds with nonsense. It is now being weaponized to influence real-world political decisions, amplifying divisive narratives at scale.

The Mechanics of Modern Disinformation

What makes this campaign particularly insidious is how effortlessly generative AI enables it:

Realistic avatars: Tools can create convincing “talking heads” that appear human, building instant trust, especially among younger audiences who consume short-form video voraciously.

Rapid iteration: Scripts can be tweaked and new videos produced in minutes, allowing operators to test messages and adapt to algorithms.

Precision targeting: Platforms like TikTok excel at delivering content to specific demographics—here, tech-savvy Polish youth who get much of their news from the app (43.7% of 18-25-year-olds cited it as a primary source in recent surveys).

Automatic scaling: One person (or a small team) can generate hundreds of variations, creating the illusion of grassroots opinion. Combined with bots or paid promotion, it snowballs into viral “organic” trends.

The result? A fabricated consensus that Poland should abandon the EU, echoing slogans from far-right figures like Grzegorz Braun. Linguistic clues, such as Russian-influenced syntax in scripts, further pointed to foreign interference.

TikTok responded by removing the account and offending videos after user reports, stating that it was “in contact with Polish authorities” and had acted where rules were violated. Yet critics argue this reactive approach is insufficient against proactive, AI-fueled floods.

Broader Questions for Europe and Beyond

The Polexit videos have sparked urgent debates across the EU:

Detection challenges: How do we reliably distinguish real humans from AI creations? Current tools lag behind rapid advances in generative models.

Accountability: Who bears responsibility — the AI tool providers, the content creators, the platforms hosting it, or all of the above? Under the DSA, very large platforms like TikTok must assess and mitigate risks from AI, including disinformation.

Platform obligations: Should social media preemptively scan for synthetic media? Require watermarking? Or impose stricter transparency on political ads and viral content?
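
On the detection question, one signal that does not depend on spotting a synthetic face is repetition: campaigns that mass-produce script variations tend to leave near-identical wording across many videos. The following is a minimal Python sketch of that idea, not a description of how TikTok or any regulator actually works. It assumes video transcripts are already available as text, and the function names (`shingles`, `jaccard`, `near_duplicate_pairs`), the video IDs, and the 0.5 threshold are all illustrative choices.

```python
import re
from itertools import combinations


def shingles(text: str, k: int = 3) -> set:
    """Return the set of k-word sequences (shingles) in a lowercased text."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Jaccard similarity: shared shingles divided by all distinct shingles."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def near_duplicate_pairs(transcripts: dict, threshold: float = 0.5):
    """Yield (id_a, id_b, score) for every pair scoring at or above threshold."""
    sets = {vid: shingles(text) for vid, text in transcripts.items()}
    for a, b in combinations(sorted(sets), 2):
        score = jaccard(sets[a], sets[b])
        if score >= threshold:
            yield a, b, score


if __name__ == "__main__":
    # "v1" quotes the article; "v2" and "v3" are made-up examples.
    sample = {
        "v1": "I want Polexit because I want freedom of choice, "
              "even if it will be more expensive.",
        "v2": "I want Polexit because I want freedom of choice, "
              "even if it costs more.",
        "v3": "Here is my recipe for apple cake with cinnamon.",
    }
    for a, b, score in near_duplicate_pairs(sample):
        print(f"{a} and {b} look like script variations (score {score:.2f})")
```

A check like this only flags suspiciously similar wording for human review. It cannot say whether a speaker is real, and genuine users repeating a popular slogan would trip it too, which is part of why detection remains an open problem.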

The EU’s DSA, fully applicable since February 2024, empowers the Commission to fine non-compliant platforms up to 6% of their global annual turnover. Complementary transparency rules in the AI Act, scheduled to apply from 2026, will mandate labeling of deepfakes and certain synthetic content. But as this case shows, threats are evolving faster than regulations.

This isn’t isolated. Similar AI-driven tactics have appeared in elections worldwide, from deepfake audio in Slovakia to fabricated news in the U.S. In Europe, the Commission has already probed TikTok over election risks in Romania.

As AI democratizes content creation, it also democratizes manipulation. Bad actors no longer need armies of trolls, just a laptop and access to video-generation models. The Poland incident underscores a harsh reality: AI is reshaping public discourse, eroding trust, and testing democratic resilience.

If unchecked, such campaigns could sway referendums, elections, or policy debates — not through superior arguments, but through sheer volume of convincing fakes. Regulators, platforms, and society must adapt quickly, or risk politics drowning in AI slop.

Thank you!