20 November 2025

Authors: Sukhanjeet Singh (DNB), Andreas Schupbach (Bundesbank), Antti Asiala (ECB) and Daniel Adam Siwecki (ECB)

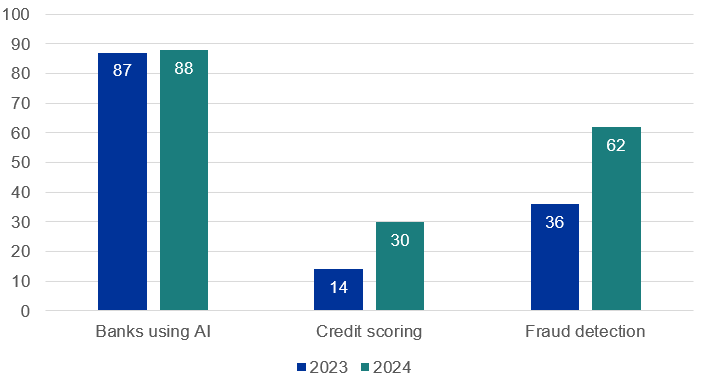

Artificial intelligence (AI) is progressively reshaping the future of banking. Banks now use AI for credit scoring and fraud detection, among other purposes, to boost efficiency and sharpen decision-making. ECB Banking Supervision’s annual data collection on the use of innovative technologies highlights a strong increase in AI use cases among European banks between 2023 and 2024, including the use of AI for credit scoring and fraud detection (Chart 1), which are relevant to micro-prudential supervision. In addition, on-site inspections took place for the fourth consecutive year to assess specific institutions’ digital transformation strategies, their execution, impact and supporting technologies (including AI). As part of our 2025 priorities, we are investigating this trend further together with national banking supervisors and have carried out a series of workshops with 13 banks selected from those that reported using AI for the use cases in scope. The workshops focused on general AI developments, including governance and compliance, and on specific AI applications. The takeaways from the workshops described below are based on high-level information from a small sample of banks and should not be generalised to the entire sector.

Chart 1: AI use for credit scoring and fraud detection across all significant institutions

Percentage share of significant institutions

Source: ECB Banking Supervision.

Notes: This chart shows the percentage of banks using AI in total, the percentage using AI for credit scoring and the percentage using AI for fraud detection, for 2023 and for 2024, indicating an increase. Based on the STE data from supervisory reporting of 107 significant institutions (SIs) in 2023 and 110 SIs in 2024.

AI governance and compliance

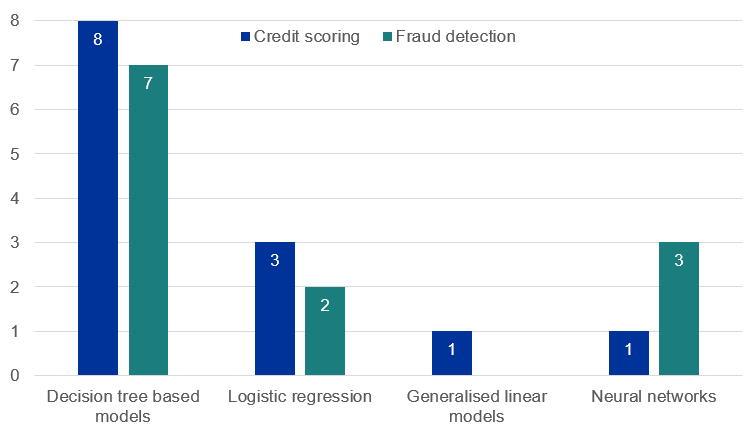

Banks have reported that they are ready to use AI models more effectively. They use various types of AI models: decision tree-based models are mainly used for both credit scoring and fraud detection, while neural networks are mainly used for fraud detection (Chart 2). Banks using AI see clear business benefits from improved model performance, including greater process efficiency and better customer service. In credit scoring, AI models enhance accuracy through advanced predictive analytics and a greater ability to tailor offers to individuals. This enables extended lending, more effective risk assessments, better risk avoidance and lower default rates, ultimately contributing to improved profitability. In fraud detection, AI models enhance real-time monitoring and pattern recognition, allowing banks to identify suspicious activity and stop fraud before it happens. This minimises the need for manual reviews, improves operational efficiency and reduces financial losses. However, the financial quantification of realised benefits remains a challenge, echoing repeated findings from on-site inspections on banks’ ability to monitor the financial impact of strategic digital initiatives. As these benefits also come with risks, banks have had to update their governance and compliance frameworks.

A number of banks are integrating AI governance into their current risk frameworks. About half of the banks in the sample have introduced dedicated policies or committees to oversee AI. This development reflects a broader alignment of AI initiatives with digitalisation strategies and business needs. Banks are preparing for the requirements of the EU AI Act to apply, despite some uncertainty about the steps to take in terms of compliance. Some banks conduct self-assessments to spot high-risk use cases, map the use of AI models across the organisation and align internal processes with expected regulatory standards. These steps help them to become compliant and better prepared to influence the broader regulatory dialogue. One emerging practice is to appoint Chief AI Officers to reinforce accountability. Banks are also working to make sure that the second and third lines of defence adequately oversee the use of AI. This is an area where further development may be needed.

Chart 2: AI model use for credit scoring and fraud detection in the sample of 13 banks

x-axis: AI methodology used by banks for credit scoring and fraud detection

y-axis: number of participant banks using each type of methodology

Source: ECB Banking Supervision.

Notes: This chart shows the AI methodology deployed by number of banks for credit scoring and fraud detection. Please note that there is no clarity yet on whether logistic regression is considered AI in the context of the AI Act. For a description of AI methodologies, please see NBB Financial Market Infrastructures and Payment Services Report, June 2019: specific theme report “Detecting payment fraud with Artificial intelligence”, pages 64 and 65.

Risk management and changing technology

The use of AI also means banks must update their risk management frameworks, for example by ensuring explainability and model governance. The AI models analysed are generally developed in-house but often hosted in external cloud-based environments. Most banks in the sample use explainability tools to monitor models’ performance. We observed centralised dashboards offering visibility into model behaviour. In addition, external experts are sometimes consulted to quantify how the model input variables affect the outcomes. The higher the risk, the more human validation banks aim to involve. To ensure models remain stable and auditable, none of the banks in the sample permits self-learning after deployment. Banks reported that human oversight is in place to enable intervention when necessary, especially for high-risk decisions and real-time fraud alerts. Banks’ model reviewers maintain feedback loops with AI systems to enhance accuracy and reliability. Other practices include appointing dual-role Chief Data and AI Officers to bridge governance gaps, using “golden” (authoritative) data sources[1] within centralised AI model records and applying robust data quality checks to AI models. To address the risks associated with using external providers, some banks rely on EU-based companies, carry out comprehensive compliance checks and ensure back-up options are in place to avoid business disruptions. As reliance on external providers grows, banks are increasingly mindful of related risks, including data privacy, operational resilience and regulatory compliance. We are therefore focusing on deficiencies in operational resilience frameworks regarding cybersecurity and third-party risk management capabilities as a prioritised vulnerability.

A few banks seem to lack full transparency regarding the internal processes by which some AI models arrive at their results, noting that models inherently operate with a degree of autonomy, a characteristic that could introduce the risk of black box behaviour. Banks also understand explainability in different ways, leading to varied definitions and approaches. While several banks are considering applying their data governance frameworks to AI models to ensure the data used is of high quality (particularly for high data volumes and unstructured data, often fed through a complex IT architecture), only a few banks reported effectively applying data management standards in practice and adjusting them to the specific requirements of AI models. This is critical, as poor data inputs will inevitably lead to unreliable results. It also reinforces the need to continue following up on our work on risk data aggregation and risk reporting (RDARR).

Digitalisation as a supervisory priority

The many takeaways from this analysis (set out in further detail in the annex) form part of the ECB’s broader effort to understand the digital transformation of banking. This work started with the Report on Digitalisation published last year, and has recently been complemented by other publications and assessment criteria on related topics.[2] The application of existing supervisory expectations to AI models is the focus of dedicated supervisory work within European banking supervision, reflecting the importance of these models in shaping a resilient and well-integrated digital transformation. In this context, an SSM Conference on Digitalisation held in October brought together supervisors and banks to exchange views on digital strategies, their implementation in specific business lines like retail and payments, and AI developments and related risks.[3] The discussions reaffirmed that digitalisation is not a destination but a journey. While the opportunities are substantial and tangible, the challenges are equally significant and must be addressed.

ECB Banking Supervision’s supervisory priorities for 2026-2028 will continue to focus on AI. The aim is to keep monitoring how banks are using AI, with a focus on strategies, governance and risk management. To this end, we plan to continue engaging with the industry, to consult external experts and to further extend our internal capabilities to ensure emerging risks are appropriately identified, monitored and mitigated.