Filter Bubbles Under Fire: Seoul Eyes Algorithm Regulation to Curb Online Polarization (Image supported by ChatGPT)

SEOUL, July 26 (Korea Bizwire) — YouTube continues to dominate the global internet landscape, with more than 20 billion videos uploaded and some 20 million more added daily, and its influence in South Korea has become particularly pronounced.

More than half of South Koreans (51%) now consume news via YouTube, significantly outpacing the global average of 31%, according to the Korea Press Foundation’s Digital News Report 2024.

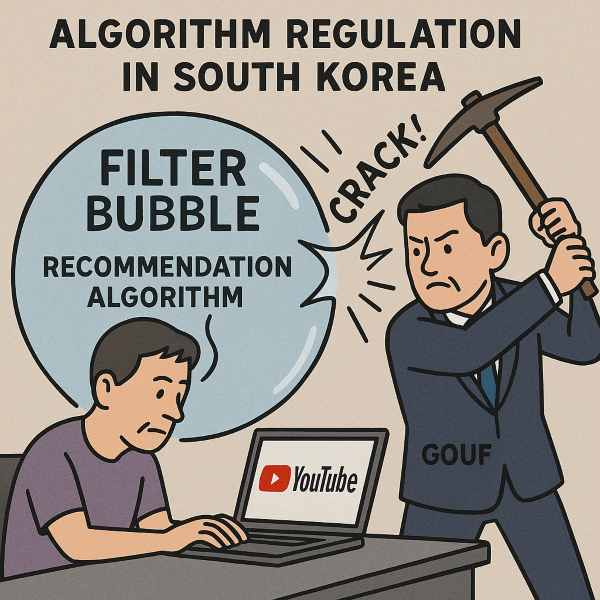

Yet amid this ubiquity, growing concerns are emerging over the platform’s powerful recommendation algorithm, which critics say fosters political polarization and entrenches ideological echo chambers.

A recent report from the National Assembly Research Service warns that YouTube’s algorithmic suggestions are narrowing users’ exposure to diverse viewpoints, fueling “filter bubbles” and “echo chambers” that reinforce existing beliefs while shutting out opposing perspectives.

The report, authored by legislative researcher Choi Jin-eung, argues that this algorithm-driven content curation intensifies confirmation bias and may exacerbate political divisions.

Choi specifically singled out YouTube’s practice of promoting content from non-subscribed channels, suggesting it heightens the risk of extreme partisanship by continually reinforcing users’ preexisting political leanings.

As a potential remedy, the report holds up the European Union’s Digital Services Act (DSA), enacted in 2022, as a model. The DSA requires online platforms to disclose the main parameters of their recommendation systems in their terms of service and to give users meaningful options to modify or opt out of those algorithms.

Large platforms are also mandated to assess and mitigate systemic risks associated with their algorithmic designs and report those findings to EU regulators.

Legislative momentum is already building in South Korea. Earlier this year, Rep. Cho In-chul of the Democratic Party introduced a bill to amend the Information and Communications Network Act that would require platforms to clearly inform users of their rights regarding algorithmic recommendation systems and to let them opt out.

The bill warns that unchecked algorithmic bias could damage democratic discourse and intensify social conflict.

The issue also surfaced during the confirmation hearing for Science and ICT Minister Bae Kyung-hoon, where lawmakers raised concerns about the social ramifications of AI-driven content filtering. Bae acknowledged the complexities and pledged to consider ethical dimensions in shaping South Korea’s forthcoming AI legislation.

In the meantime, media literacy efforts are gaining traction. The BBC’s educational platform “Bitesize” encourages students to break free from algorithmic silos by following accounts with opposing views, avoiding reliance on a single platform, and engaging in offline discussions with family and friends—offering a reminder that in a hyperpersonalized digital age, human connection remains a vital counterbalance.

M. H. Lee (mhlee@koreabizwire.com)