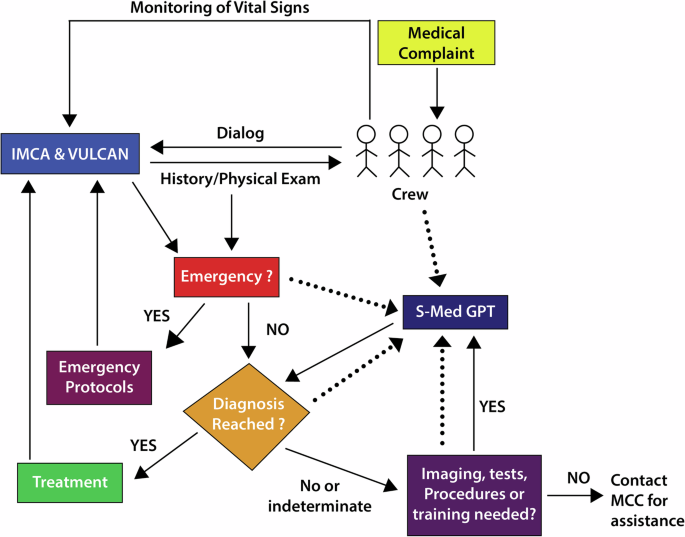

Our team has been developing tools for NASA to meet the needs of EIMO and help astronauts independently manage the inevitable medical problems arising on exploration-class missions. The technology behind AI-based Large Language Models (LLMs) holds the potential for true onboard medical expertise that emulates current ground-based capabilities. To this end, we are in the early stages of developing Space Medicine GPT (SGPT). This is a localized LLM, a Generative Pretrained Transformer (GPT), designed to meet needs #1–7 and #9–13 above. Unlike other chatbots that provide comprehensive answers to specific questions, SGPT employs novel prompt engineering to provide an interactive question-and-answer environment for reaching a medical diagnosis. To function without internet connectivity, this system uses a smaller LLM “distilled” from a larger model. Because it concentrates only on medical information, its targeted size of fewer than 50 billion parameters is suitable for deployment on laptops, smartphones, and tablets. This should help meet the mass, volume, power, and data constraints described in need #3. “Vector databases”7 that provide rapid search and retrieval of evidence-based medical resources are built on top of the foundational LLM. Actual conversations recorded during physician-patient encounters can be used to train the model to respond as an expert medical consultant. Once SGPT is fully developed, we hope the dialog it directs will mimic the thought process and data gathering that a clinician would use to manage a crewmember who presents with a symptom such as chest pain (Fig. 1). Through a series of focused questions, SGPT will incorporate well-established Bayesian logic techniques8 to arrive at the most appropriate follow-up question, test, or procedure. When sufficient information has been gleaned from the symptom history, the CMO will be asked to perform a physical exam and further tests, such as imaging and laboratory examinations, as needed.
The highest priority will be to rule out an emergent condition such as acute coronary syndrome or tension pneumothorax (need #7) requiring immediate action.
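The Bayesian step described above can be illustrated with a minimal sketch. It is not SGPT's implementation: the diagnoses, questions, and every probability below are hypothetical placeholders chosen for illustration, not clinical data. The sketch updates a probability distribution over candidate diagnoses after each yes/no answer, then picks the next question as the one expected to reduce uncertainty (entropy) the most.

```python
import math

# Hypothetical priors over candidate diagnoses (illustrative, not clinical data).
PRIORS = {
    "acute coronary syndrome": 0.10,
    "tension pneumothorax": 0.05,
    "muscle strain": 0.85,
}

# Hypothetical P(answer is "yes" | diagnosis) for each candidate question.
LIKELIHOODS = {
    "pain radiates to arm or jaw": {
        "acute coronary syndrome": 0.70,
        "tension pneumothorax": 0.10,
        "muscle strain": 0.05,
    },
    "pain worsens with movement": {
        "acute coronary syndrome": 0.10,
        "tension pneumothorax": 0.30,
        "muscle strain": 0.90,
    },
    "sudden shortness of breath": {
        "acute coronary syndrome": 0.40,
        "tension pneumothorax": 0.90,
        "muscle strain": 0.05,
    },
}


def update(prior, question, answer_yes):
    """Bayes' rule: posterior is proportional to prior times likelihood."""
    unnormalized = {}
    for dx, p in prior.items():
        p_yes = LIKELIHOODS[question][dx]
        unnormalized[dx] = p * (p_yes if answer_yes else 1.0 - p_yes)
    total = sum(unnormalized.values())
    return {dx: v / total for dx, v in unnormalized.items()}


def entropy(dist):
    """Shannon entropy in bits; lower means a more certain differential."""
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)


def expected_entropy(prior, question):
    """Average posterior entropy, weighted by how likely each answer is."""
    p_yes = sum(prior[dx] * LIKELIHOODS[question][dx] for dx in prior)
    h_yes = entropy(update(prior, question, True))
    h_no = entropy(update(prior, question, False))
    return p_yes * h_yes + (1.0 - p_yes) * h_no


def next_question(prior, asked=()):
    """Choose the unasked question with the lowest expected posterior entropy."""
    candidates = [q for q in LIKELIHOODS if q not in asked]
    return min(candidates, key=lambda q: expected_entropy(prior, q))
```

In use, a caller would alternate `next_question` and `update` until one diagnosis dominates or an emergent condition cannot be excluded. A real system would also need to weight questions by clinical urgency, which this sketch ignores.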

Once the need for urgent intervention is recognized, SGPT will direct the crew through whatever lifesaving measures are required, even if the diagnostic process is incomplete. Per need #9, SGPT will know the knowledge and skills base of the CMO or non-CMO crewmember and speak at a level commensurate with his or her expertise. Likewise, the complete medical history of each crewmember will be stored in memory and available to the diagnostic process, along with vital signs read automatically from wearables. SGPT will be an always-on, instantly available resource that can also continuously process and monitor data from crew-worn sensors and alert the crew when dangerous readings or trends appear (need #10). Finally, it can act as a conventional medical information source for routine requests or questions that the crew may have (need #11).
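As a rough illustration of the monitoring described for need #10, the sketch below flags both dangerous single readings and dangerous trends in a stream of sensor values. The class name, thresholds, and window size are assumptions made for this example and are not taken from SGPT. A reading is flagged when it falls outside fixed limits, and a trend is flagged when the least-squares slope over a full sliding window exceeds a limit.

```python
from collections import deque


class VitalMonitor:
    """Hypothetical monitor for one vital sign (all limits are illustrative)."""

    def __init__(self, low, high, max_slope, window=5):
        self.low = low              # lowest acceptable reading
        self.high = high            # highest acceptable reading
        self.max_slope = max_slope  # largest acceptable change per sample
        self.readings = deque(maxlen=window)

    def _slope(self):
        """Least-squares slope of the readings against their sample index."""
        n = len(self.readings)
        if n < 2:
            return 0.0
        mean_x = (n - 1) / 2
        mean_y = sum(self.readings) / n
        num = sum((x - mean_x) * (y - mean_y)
                  for x, y in enumerate(self.readings))
        den = sum((x - mean_x) ** 2 for x in range(n))
        return num / den

    def add(self, value):
        """Record a reading and return the list of alerts it triggers."""
        self.readings.append(value)
        alerts = []
        if value < self.low or value > self.high:
            alerts.append("out_of_range")
        window_full = len(self.readings) == self.readings.maxlen
        if window_full and abs(self._slope()) > self.max_slope:
            alerts.append("trend")
        return alerts
```

For example, a heart-rate monitor created as `VitalMonitor(low=40, high=120, max_slope=5)` stays silent for stable readings but raises a trend alert when the rate climbs steadily, even before any single reading crosses the upper limit.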

How can AI be used if a CMO needs additional guidance for a recommended medical procedure (e.g., placing an IV or performing an ultrasound examination) or wants refresher training on a particular medical topic (need #8)? For this purpose, another AI-based tool, the Intelligent Medical Crew Agent (IMCA), has been developed. IMCA uses augmented reality (AR) to provide step-by-step guidance for the performance of medical procedures. Content such as imaging, diagrams, checklists, and videos can be projected through crew-worn AR devices alongside views of the real-world environment. AR scripts for procedures such as pericardiocentesis, chest tube placement, and deep abscess drainage have been developed and can be tailored to the level of an expert or a novice. For new procedures or just-in-time training, scripts can be rapidly developed using systems modeling language tools9 and then uplinked to the crew.

A key component of IMCA is the Visual Ultrasound Learning, Control and Analysis Network (VULCAN). Currently, the primary imaging tool available to crews in LEO is ultrasound. VULCAN is designed to monitor operator execution of ultrasound scans and ultrasound-guided procedures and provide real-time, corrective feedback to ensure proper execution and outcomes in situ. It uses AI/machine learning procedural guidance as a 3-dimensional mentor, guiding operators through complex medical procedures in near real-time. The VULCAN system fuses computer vision and telemetry (e.g., magnetic GPS for spatial precision) to create “closed loop” procedural guidance with corrective feedback (300–400 msec) to assist in proper performance of procedures. VULCAN not only shows operators what to do but also monitors execution through machine learning, providing instant corrective feedback.
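The “closed loop” idea can be sketched in a few lines. This is a simplified illustration under stated assumptions, not VULCAN's algorithm: it assumes the tracking system already supplies a measured probe position and a target position in millimetres, and the function name and tolerance value are hypothetical. On each cycle, the measured position is compared with the target, and a correction cue is issued only when the error exceeds the tolerance.

```python
import math


def corrective_feedback(target, measured, tolerance_mm=5.0):
    """Return None when the probe is within tolerance of the target,
    otherwise a cue describing the correction along the worst axis.

    target, measured: (x, y, z) positions in millimetres (assumed inputs).
    tolerance_mm: hypothetical acceptance radius around the target.
    """
    axes = ("x", "y", "z")
    errors = [t - m for t, m in zip(target, measured)]
    distance = math.sqrt(sum(e * e for e in errors))
    if distance <= tolerance_mm:
        return None  # on target: no correction needed
    worst = max(range(3), key=lambda k: abs(errors[k]))
    sign = "+" if errors[worst] > 0 else "-"
    return f"move {abs(errors[worst]):.1f} mm along {sign}{axes[worst]}"


def guidance_loop(target, pose_stream, tolerance_mm=5.0):
    """Yield one cue (or None) per tracked pose, closing the loop each cycle."""
    for measured in pose_stream:
        yield corrective_feedback(target, measured, tolerance_mm)
```

A real system would work with full probe orientation as well as position and would fuse several sensor sources before computing the error; the sketch shows only the compare-and-correct cycle.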

Challenges and Aspirations

With the assistance of AI-based tools such as SGPT, IMCA, and VULCAN, our CMO on the way to Mars can expertly manage his crewmate’s condition. Whether the etiology of the chest pain is as serious as a myocardial infarction or as benign as muscle strain, these tools act as omnipresent, well-informed “experts” that take the place of ground-based medical consultants. This is the goal, but significant challenges lie ahead before all the needs listed above can be met. Not least of these is the immaturity of the hardware and software required to make these tools a practical reality during exploration missions. The deductive process that physicians use to arrive at a diagnosis from a patient complaint is extraordinarily complex. No two clinicians have precisely the same style or approach, and determining the “optimal” method for emulating experts is difficult. It is imperative to train our model on generally recognized “best medical practices” to keep it from going rogue. Almost all LLMs in use today are designed to be internet-based, while our tools must work offline. Large medical databases must be distilled into a usable size that conforms to spaceflight constraints while still providing a comprehensive medical knowledge base for both rare and common conditions. Systems such as those we describe will need to be rigorously tested in Earth-based analogs such as Antarctica, wilderness medicine, military deployments, and even routine medical practice. Finally, it is important to note that these tools are only one component of a comprehensive space-based clinical decision support system. Other features such as pharmacy and supply management, health maintenance, disease prevention, and environmental control are needed, but AI will certainly be an integral part of these.

We are hopeful that, despite the challenges, a model such as the one described will be developed for exploration-class missions. Hardware advances in storage and speed will allow AI-based systems such as SGPT to reside on a tablet or cellphone. Imagine the possibilities if the same kind of onboard expertise that is being developed for exploration of our solar system were available at low cost to local healthcare paraprofessionals in very remote terrestrial settings, in disaster situations, or in war zones where internet access, or any communication at all, is non-existent. Artificial intelligence-based tools such as those described have the potential to save lives, reduce human suffering, and improve the health of all, not only in outer space but, importantly, here on Earth.