Report Overview

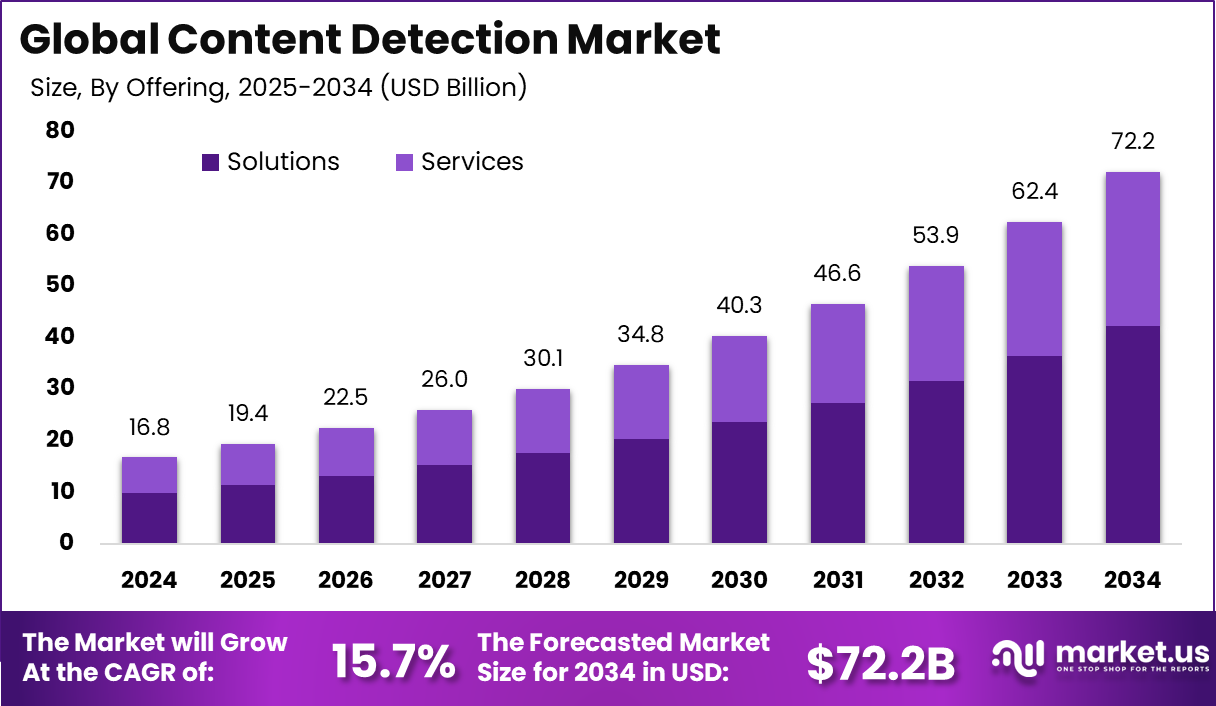

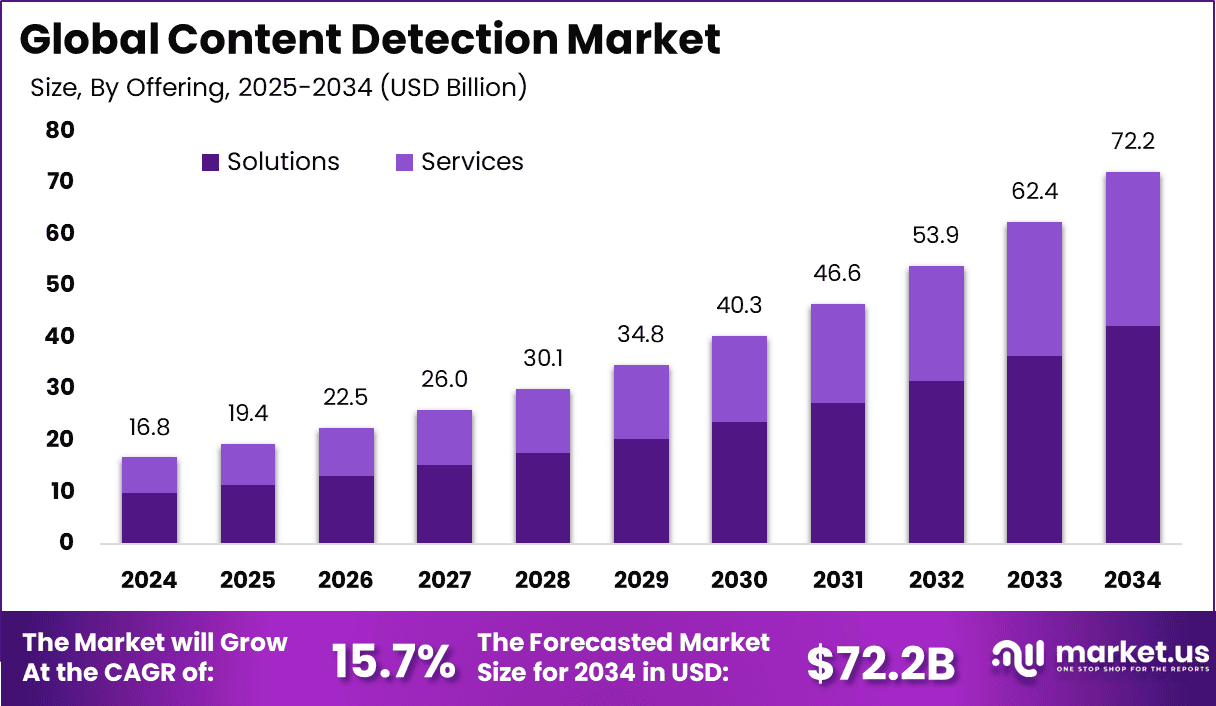

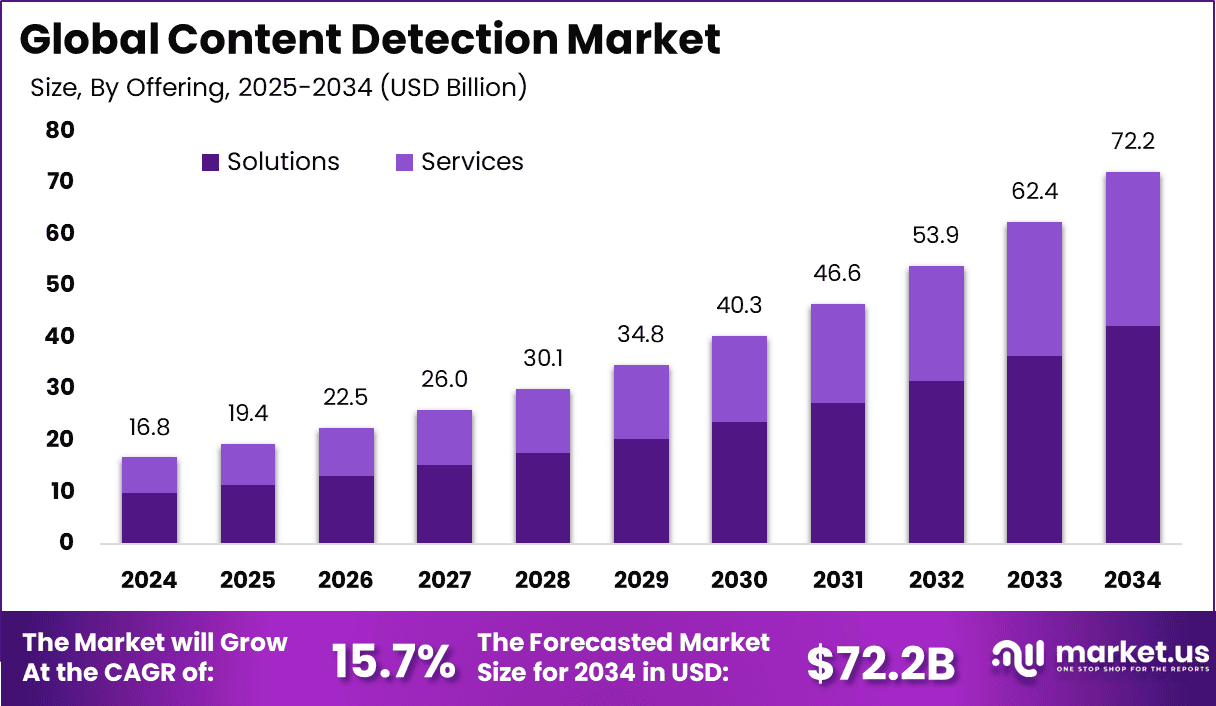

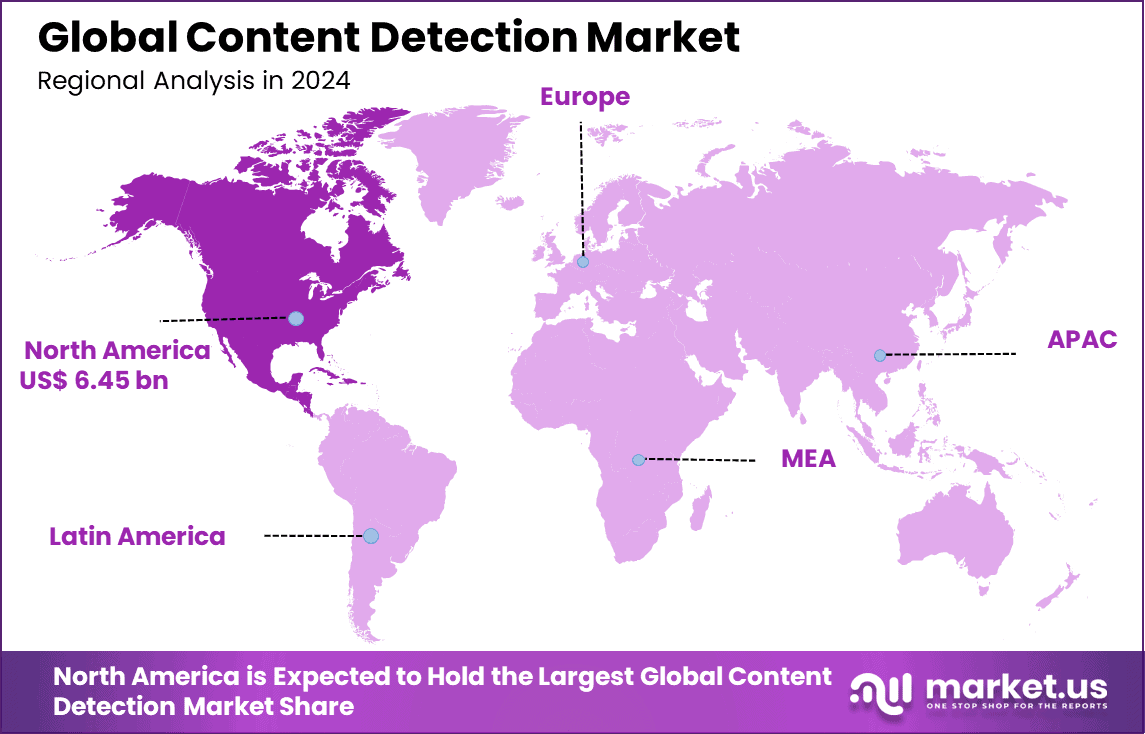

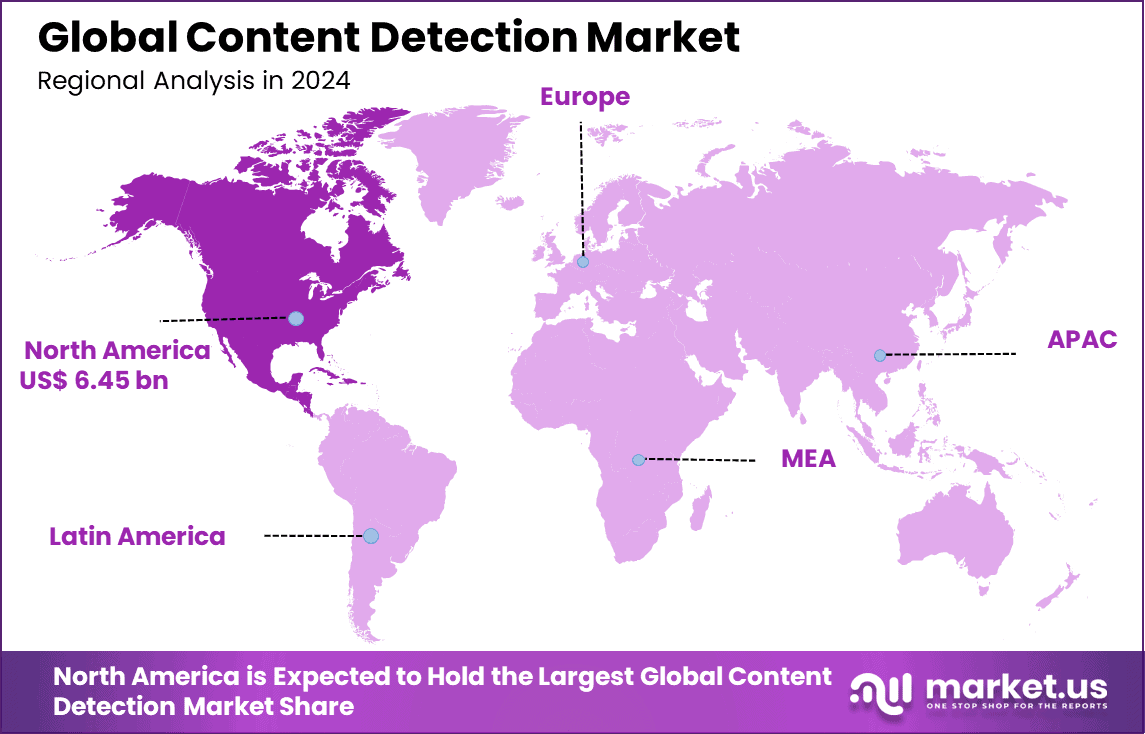

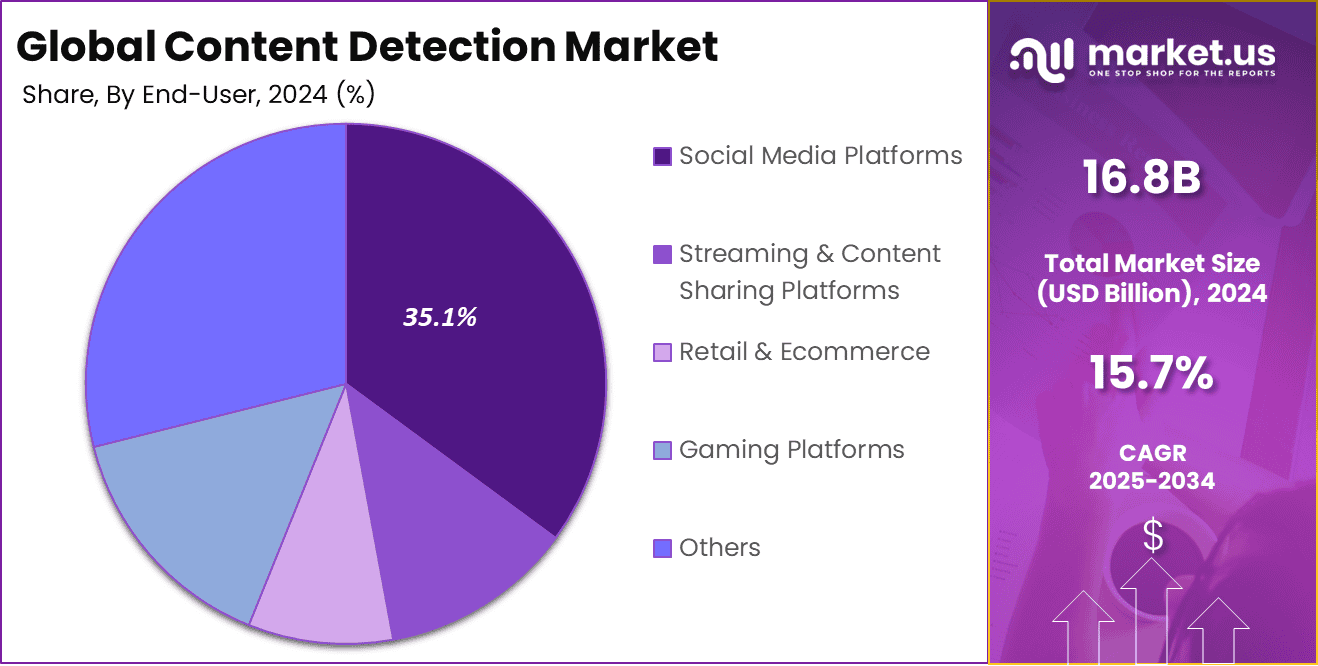

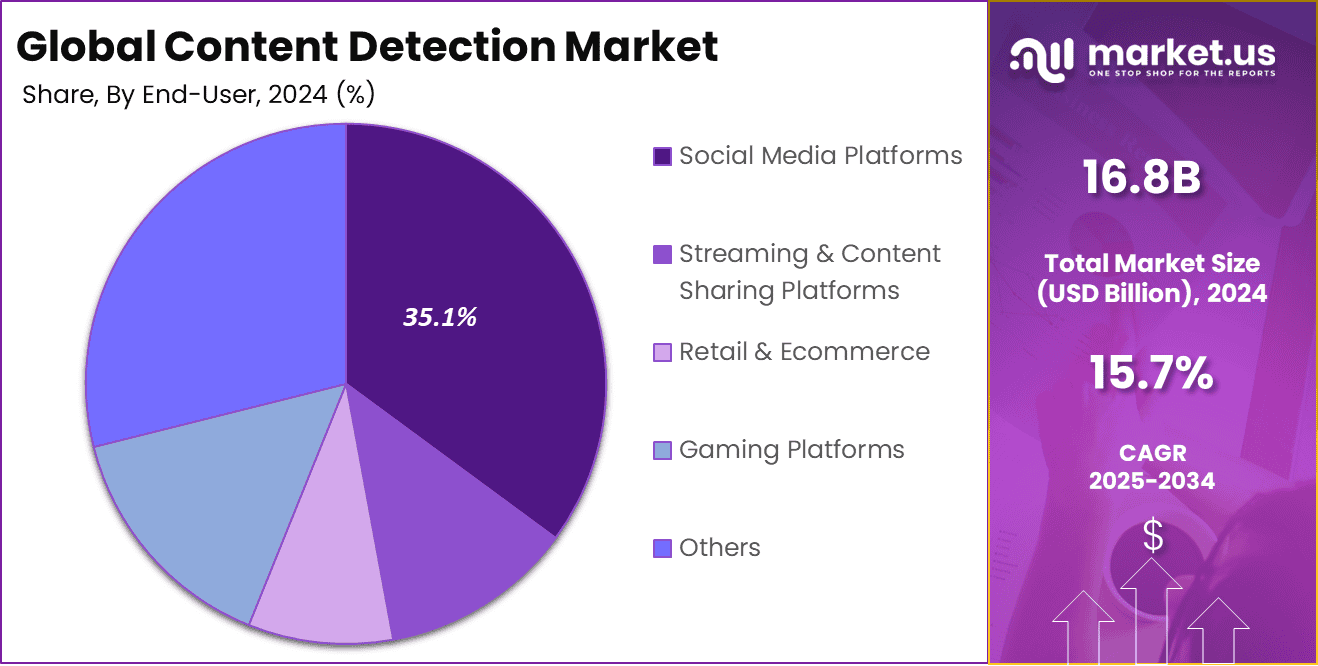

The Global Content Detection Market is expected to reach around USD 72.2 billion by 2034, up from USD 16.8 billion in 2024, growing at a CAGR of 15.7% over the forecast period from 2025 to 2034. In 2024, North America held the dominant market position, capturing more than a 38.4% share and generating USD 6.45 billion in revenue.
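The headline figures above can be cross-checked with basic compound-growth arithmetic. The sketch below is illustrative only: it assumes ten compounding periods between the 2024 base and 2034, and the function names `cagr` and `project` are introduced here for the example rather than taken from the report. Under that assumption, the quoted values agree with one another to within rounding.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.157 means 15.7%)."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value reached after compounding `rate` once per year for `years` years."""
    return start_value * (1 + rate) ** years


# Figures quoted in the report, in USD billion.
MARKET_2024 = 16.8
MARKET_2034 = 72.2
QUOTED_CAGR = 0.157
NORTH_AMERICA_SHARE = 0.384

# Assumption: ten compounding periods separate the 2024 base from 2034.
implied_rate = cagr(MARKET_2024, MARKET_2034, years=10)
projected_2034 = project(MARKET_2024, QUOTED_CAGR, years=10)

# Regional revenue implied by applying the quoted share to the 2024 base.
north_america_2024 = MARKET_2024 * NORTH_AMERICA_SHARE

print(f"Implied CAGR 2024-2034: {implied_rate:.1%}")
print(f"2034 value at the quoted CAGR: USD {projected_2034:.1f} billion")
print(f"North America 2024 revenue: USD {north_america_2024:.2f} billion")
```

The same two helpers can be reused for any other base value and growth rate, provided the number of compounding periods is stated explicitly.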

The Content Detection Market encompasses technologies that identify, verify, and moderate digital content across text, images, audio, and video. These solutions safeguard platforms against misinformation, deepfakes, inappropriate or copyrighted content, and other authenticity threats. They combine AI, machine learning, watermarking protocols, metadata analysis, and forensic tools to ensure content integrity.

Key Takeaways

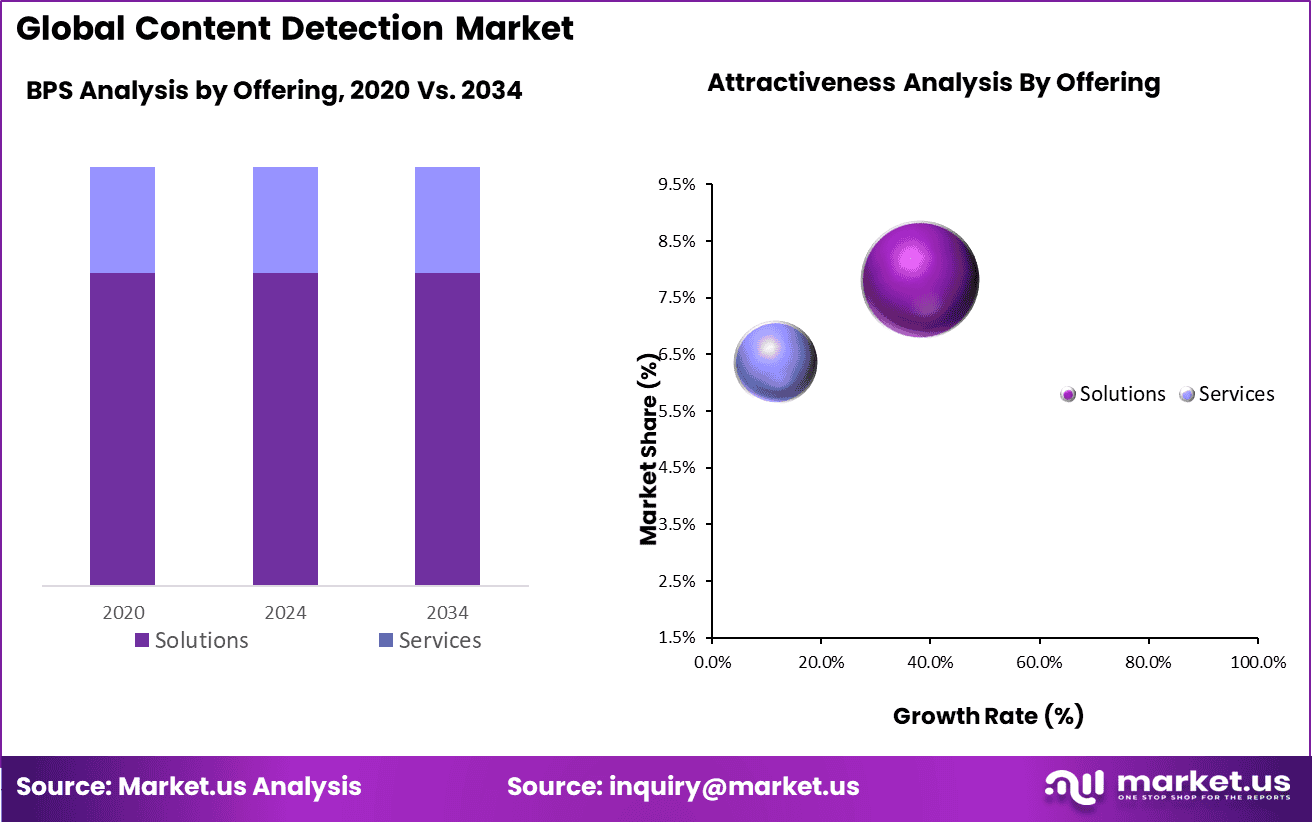

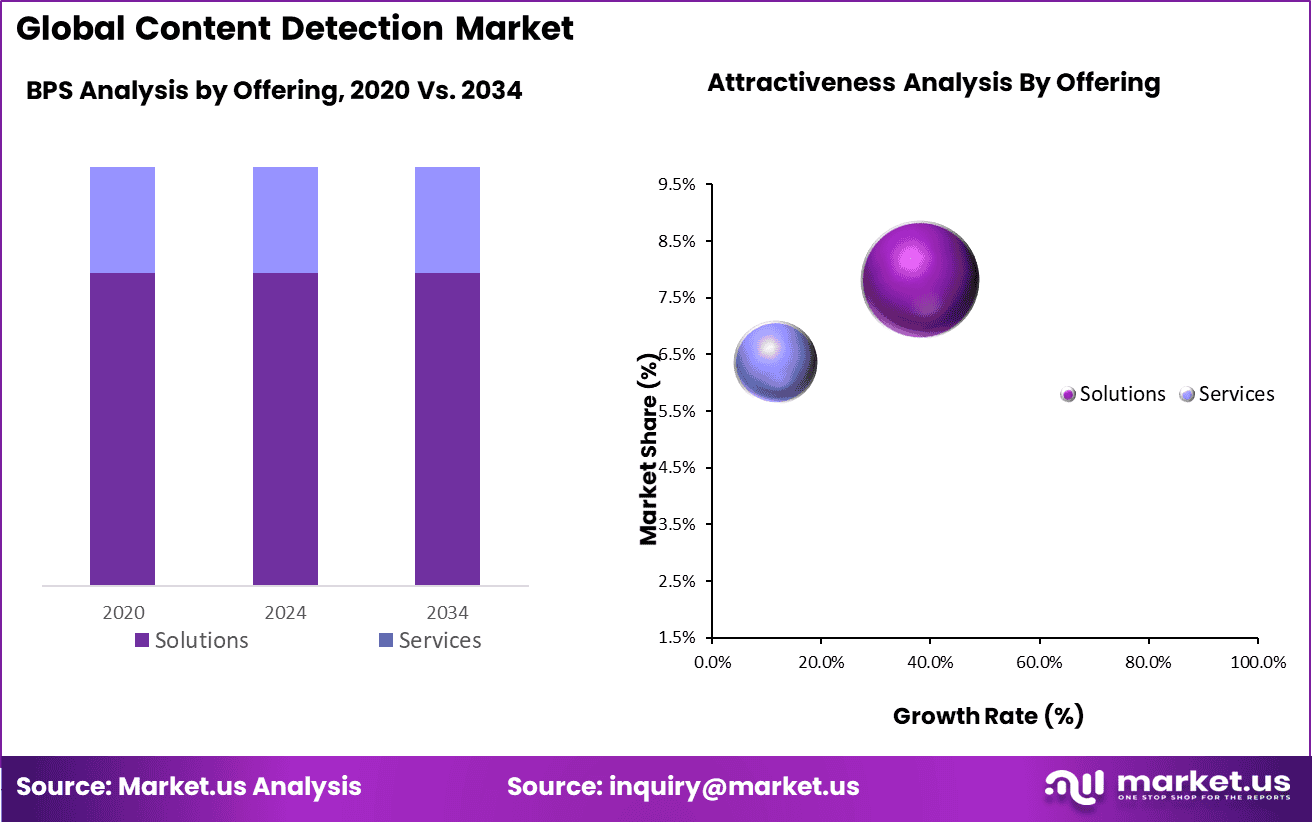

Solutions dominated the offering segment with 58.6%, driven by the demand for AI-powered detection tools that provide scalable, automated, and real-time monitoring capabilities.

Content moderation led in detection type with 45%, reflecting the critical role of identifying and filtering harmful, inappropriate, or non-compliant content to ensure safe digital environments.

Image content detection accounted for 40.3%, supported by the rising need to scan and verify visual media across social platforms, e-commerce, and streaming services.

Social media platforms represented the largest end-user segment at 35.1%, leveraging content detection to maintain brand reputation, comply with regulations, and enhance user trust.

North America held a 38.4% market share, showcasing advanced adoption of AI moderation technologies and strong regulatory focus on online safety.

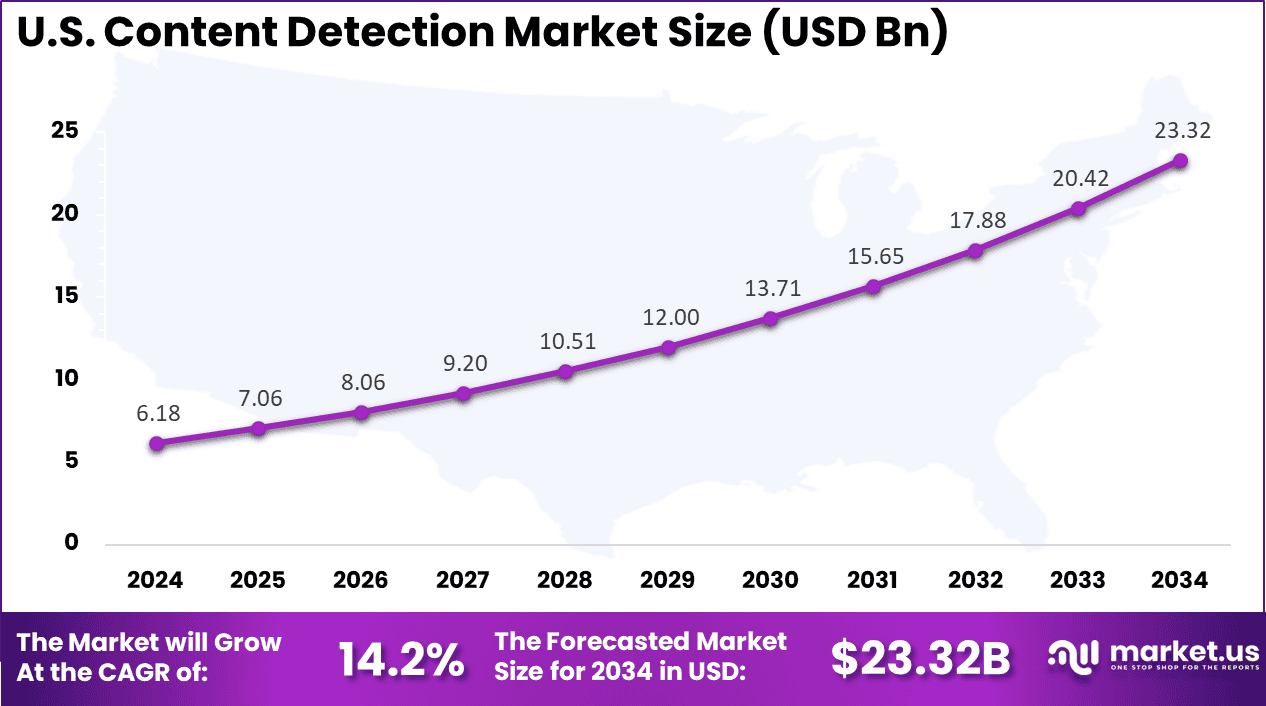

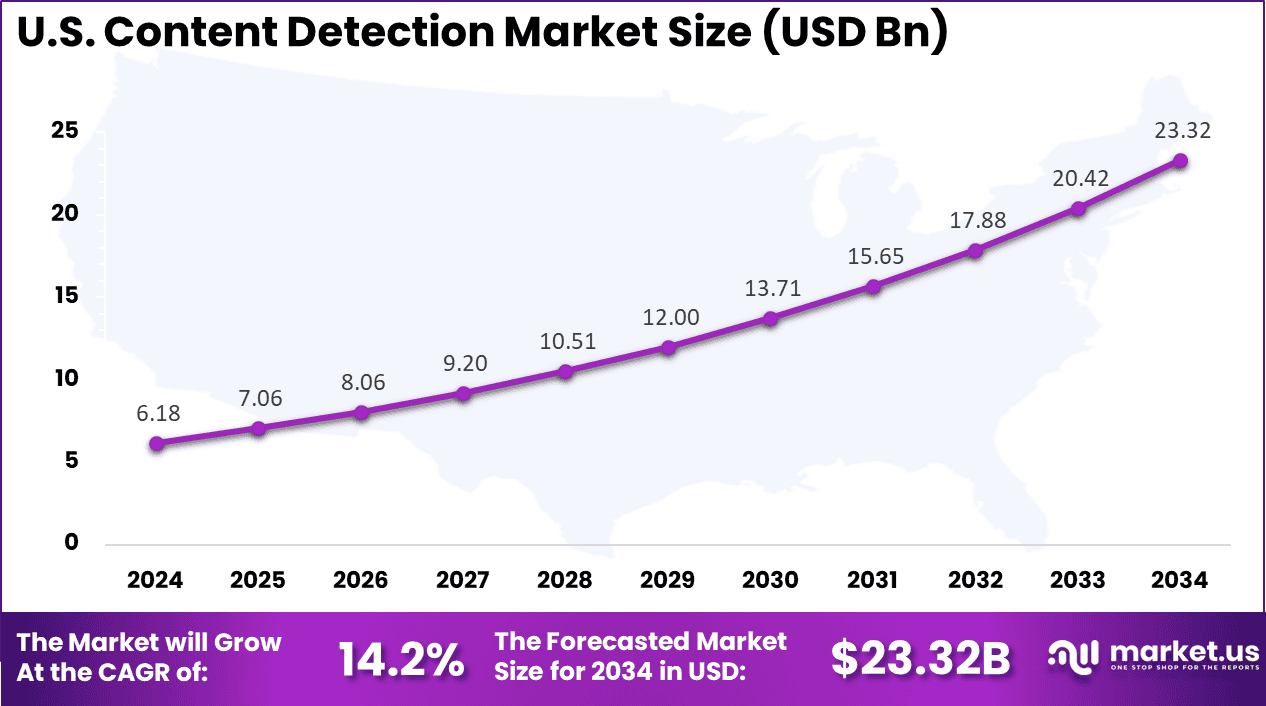

The U.S. market reached USD 6.18 billion and is projected to grow at a CAGR of 14.2%, reflecting continued investment in automated content governance solutions.

The top driving factors for this market include the exponential rise of digital content and user-generated material, along with heightened awareness of online safety and brand reputation management. As digital platforms expand, there is an urgent need to moderate content efficiently to avoid legal repercussions and reputational damage.

Additionally, stringent regulatory frameworks such as the European Union’s Digital Services Act and various national data protection laws compel organizations to adopt robust automated content detection technologies. The rapid digital transformation across industries, combined with the integration of AI-powered tools, is fueling demand for scalable, accurate, and real-time content moderation solutions.

For instance, in May 2024, IBM expanded its partnership with Salesforce to join the Zero Copy Partner Network, enabling seamless data integration between IBM’s watsonx and Salesforce Data Cloud. This collaboration aims to enhance AI and data ecosystems, facilitating more efficient and secure content detection solutions.

Role of AI

U.S. Market Size

The U.S. content detection market is currently valued at USD 6.18 billion and has a projected CAGR of 14.2%. It is expanding rapidly due to the country’s robust digital infrastructure, widespread social media use, and increasing cybersecurity threats.

The rise of digital content across various industries, along with the growing issues of misinformation, deepfakes, and fake content, has fueled demand for advanced detection tools. Stricter regulations on digital safety and data protection are compelling U.S. companies to adopt these technologies to meet legal requirements.

For instance, in May 2025, Google introduced the SynthID Detector, an AI content detection tool designed to identify synthetic media generated using Google’s AI systems. This tool enhances transparency in digital content, helping users distinguish between real and AI-generated images and videos. The U.S. remains dominant in the content detection market, with companies like Google leading the way in technological advancements.

In 2024, North America held a dominant position in the Global Content Detection Market, capturing more than a 38.4% share and generating USD 6.45 billion in revenue. The regional market is growing due to advanced technological infrastructure, high adoption of digital platforms, and a proactive regulatory environment.

The region’s robust cybersecurity frameworks and increasing concerns over misinformation, deepfakes, and harmful content have driven the demand for advanced content detection solutions. Additionally, the presence of major tech companies and rapid innovation in AI-driven tools has further strengthened North America’s leadership in the market.

For instance, in May 2024, OpenAI launched a tool to detect images created by its DALL·E 3 AI model, highlighting North America’s dominance in the content detection market. Driven by AI and machine learning advancements, North American tech companies like OpenAI lead in developing tools to address concerns over synthetic media, ensuring content authenticity and reducing misinformation risks.

Offering Analysis

In 2024, the Solutions segment held a dominant market position, capturing a 58.6% share of the Global Content Detection Market. This lead is driven by the rising need for advanced content detection tools to tackle issues like misinformation, deepfakes, and harmful content on digital platforms.

Both businesses and governments are increasingly adopting solutions that offer real-time moderation, regulatory compliance, and improved security. As digital content continues to grow, the demand for effective detection technologies remains a major driver. Additionally, the rapid pace of product innovations and technological advancements has further strengthened the leadership of this segment in the market.

For instance, in March 2024, Microsoft introduced Community Sift, a generative AI-powered content moderation solution designed to scale and tailor policies for online communities in real time. This tool aims to enhance the safety and inclusivity of digital spaces by efficiently identifying and managing harmful content.

Detection Type Analysis

In 2024, the Content Moderation segment held a dominant market position, capturing a 45% share of the Global Content Detection Market. This dominance is driven by the growing need for businesses and platforms to effectively manage and filter user-generated content, ensuring compliance with legal and community standards.

The rapid expansion of user-generated content on platforms like social media, e-commerce, and forums has intensified this demand. Advancements in AI and machine learning have improved the efficiency and accuracy of moderation tools, making them vital for maintaining platform integrity and user safety. Additionally, regulatory pressures are compelling companies to implement robust content moderation solutions.

For instance, in April 2025, a global content moderation alliance was formed to address growing concerns over the effectiveness and fairness of content detection on digital platforms. The alliance, which includes tech companies, unions, and advocacy groups, aims to improve moderation practices by ensuring fair labor conditions for content moderators, advocating for transparent algorithms, and promoting ethical practices.

Content Type Analysis

In 2024, the Image segment held a dominant market position, capturing a 40.3% share of the Global Content Detection Market. The dominance is due to the growing adoption of advanced AI technologies that improve the accuracy and scalability of image detection systems.

The rapid expansion of visual content on platforms like social media, e-commerce, and advertising has increased the need for such tools. As images become central to marketing and user engagement, there is a heightened demand for image detection solutions to identify harmful or manipulated content, ensuring platform safety and regulatory compliance.

For instance, in January 2025, Tencent introduced AI-powered content detection tools to identify AI-generated images and text, addressing the risks of deepfakes and synthetic media. These tools leverage advanced machine learning models to enhance content moderation accuracy and efficiency, ensuring authenticity and offering stronger safeguards against misinformation and harmful content in digital spaces.

End-User Analysis

In 2024, the Social Media Platforms segment held a dominant market position, capturing a 35.1% share of the Global Content Detection Market. This dominance is due to the vast amount of user-generated content shared daily on platforms like Facebook, Instagram, and Twitter.

The need to monitor and moderate this content for harmful material, misinformation, and inappropriate behavior has increased. Additionally, regulatory requirements and the need to maintain platform integrity and user safety have pushed social media platforms to invest in advanced content detection solutions.

For instance, in February 2024, Meta announced the introduction of AI-generated content labeling across Facebook, Instagram, and Threads. This initiative aims to provide transparency by clearly marking images and content created with AI tools. By labeling AI-generated images, Meta enhances user trust and helps combat misinformation and deepfakes.

Key Trends & Innovations

Key Market Segments

By Offering

- Solutions
- Services
  - Professional Services
  - Managed Services

By Detection Type

- Content Moderation
- AI-Generated Content Detection
- Plagiarism Detection
- Others

By Content Type

By End-User

- Social Media Platforms
- Streaming & Content Sharing Platforms
- Retail & Ecommerce
- Gaming Platforms
- Others

Drivers

Stringent Regulatory Compliance

As governments and regulatory bodies enforce more stringent laws around digital content, businesses are increasingly adopting content detection solutions to ensure compliance with these legal standards. These regulations often mandate the removal or flagging of harmful, illegal, or inappropriate content within specific timeframes.

Content detection technologies help organizations maintain compliance by automatically identifying and moderating harmful content, thereby avoiding legal penalties and maintaining the integrity of their platforms.

For instance, in June 2025, Thailand introduced new content moderation regulations aimed at ensuring digital platforms comply with stricter content policies. Governments worldwide, including in regions like the EU and Southeast Asia, are enforcing regulations to curb harmful content, such as hate speech, misinformation, and violent material.

Restraint

High Computational Requirements and Regulatory Complexity

Despite growth, the content detection market faces significant restraints due to the immense computational power needed to process and analyze enormous volumes of real-time content accurately. Deploying AI and ML models at scale requires costly infrastructure, including powerful GPUs and optimized cloud or edge computing resources.

This challenge is compounded by the need to minimize false positives and negatives in moderation to avoid censorship or unchecked harmful content, demanding sophisticated, resource-intensive model training and tuning.

Moreover, regulatory complexity poses formidable hurdles. Content detection systems must navigate diverse, constantly evolving laws that vary by region, including data privacy, user rights, and content moderation standards.

For instance, in June 2025, AI content detection tools, such as watermarking, were scrutinized for potentially compromising user privacy. While these tools are designed to identify AI-generated content, they may inadvertently access personal communications or sensitive media. This raises concerns about surveillance and the violation of privacy rights, as users might not always be aware of the data being analyzed.
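The trade-off between false positives and false negatives noted in this section can be made concrete with a small calculation. The sketch below is a minimal illustration, not a description of any vendor's method: the `ModerationCounts` class and the counts passed to it are hypothetical values chosen for the example, not data from the report.

```python
from dataclasses import dataclass


@dataclass
class ModerationCounts:
    """Outcome counts for one evaluation batch of moderated items."""
    true_positive: int   # harmful items correctly flagged
    false_positive: int  # benign items wrongly flagged (over-removal)
    true_negative: int   # benign items correctly allowed
    false_negative: int  # harmful items missed


def false_positive_rate(c: ModerationCounts) -> float:
    """Share of benign items that were wrongly flagged."""
    return c.false_positive / (c.false_positive + c.true_negative)


def false_negative_rate(c: ModerationCounts) -> float:
    """Share of harmful items that slipped through."""
    return c.false_negative / (c.false_negative + c.true_positive)


def precision(c: ModerationCounts) -> float:
    """Share of flagged items that were actually harmful."""
    return c.true_positive / (c.true_positive + c.false_positive)


# Hypothetical evaluation batch of 1,000 items (illustrative numbers only).
counts = ModerationCounts(
    true_positive=90, false_positive=30, true_negative=870, false_negative=10
)

fpr = false_positive_rate(counts)
fnr = false_negative_rate(counts)
prec = precision(counts)

print(f"False positive rate: {fpr:.1%}")
print(f"False negative rate: {fnr:.1%}")
print(f"Precision: {prec:.1%}")
```

Lowering one error rate typically raises the other for a fixed model, which is why tuning and retraining at scale add to the computational cost described above.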

Opportunities

Expansion in Emerging Markets and Multilingual AI Capabilities

The content detection market holds substantial opportunities in emerging regions such as Asia-Pacific, Latin America, and Africa, where rapid internet adoption and mobile-first usage are driving a surge in digital content creation. Governments and enterprises in these regions are increasingly focused on managing misinformation, online safety, and digital platform governance, creating demand for scalable content detection solutions tailored to local languages and cultural contexts.

Multilingual and dialect-sensitive AI models present a significant growth avenue as they enable more effective moderation in diverse linguistic environments. Additionally, integrating blockchain technology for content authenticity verification and expanding real-time moderation into new verticals like e-commerce, education, and healthcare offer further expansion possibilities.

The increasing adoption of AI-powered hybrid human-machine content review processes opens opportunities for cost-effective, high-accuracy solutions. Market players investing in customizable, cloud-based, and edge-enabled content detection platforms stand to capture new customers by addressing complex regulatory requirements and user needs in fast-growing digital ecosystems.
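One common way to structure a hybrid human-machine review process is to let the model act alone only when it is confident and to queue everything else for a human moderator. The sketch below assumes a model that outputs a harm score between 0 and 1. The `Route` enum, the `route` function, and both threshold values are hypothetical choices made for illustration and would need tuning against real data.

```python
from enum import Enum


class Route(Enum):
    AUTO_REMOVE = "auto_remove"
    HUMAN_REVIEW = "human_review"
    AUTO_ALLOW = "auto_allow"


def route(harm_score: float, remove_at: float = 0.95, allow_below: float = 0.20) -> Route:
    """Decide how to handle an item given the model's harm score in [0, 1].

    Both thresholds are illustrative assumptions, not recommended values.
    """
    if not 0.0 <= harm_score <= 1.0:
        raise ValueError("harm_score must be between 0 and 1")
    if harm_score >= remove_at:
        return Route.AUTO_REMOVE   # model is confident the item is harmful
    if harm_score < allow_below:
        return Route.AUTO_ALLOW    # model is confident the item is benign
    return Route.HUMAN_REVIEW      # uncertain band goes to a moderator


# Hypothetical scores for three items.
for score in (0.99, 0.50, 0.05):
    print(f"score={score:.2f} -> {route(score).value}")
```

Widening the band between the two thresholds sends more items to human reviewers, which raises cost but reduces automated errors. Narrowing it does the opposite.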

Challenges

Ensuring Data Privacy, Transparency, and Building Trust

A central challenge for the content detection market is safeguarding data privacy and building trust among users, regulators, and stakeholders. Content detection involves processing sensitive user data, which raises concerns about surveillance, misuse, and compliance with privacy laws such as GDPR and CCPA.

Maintaining transparency around how content is moderated and how data is handled is critical to user confidence, yet complex AI systems often operate as “black boxes,” limiting explainability. Furthermore, establishing fair, unbiased, and context-aware moderation standards remains difficult, as AI models can inadvertently reflect biases or misinterpret cultural nuances.

Achieving consistency and accuracy at scale requires continuous model refinement, human oversight, and clear policy frameworks. Overcoming skepticism from both content creators and consumers about censorship and free speech protection is essential for wider adoption. Market participants need to prioritize ethical AI practices, transparent governance, and stakeholder collaboration to address these challenges effectively.

Key Players Analysis

Microsoft, IBM Corporation, and Alibaba Cloud have established themselves as leading providers in the content detection market through advanced AI algorithms, cloud infrastructure, and scalable data processing capabilities. These companies invest heavily in natural language processing, computer vision, and real-time analytics to detect manipulated or harmful content across digital platforms.

HCL Technologies, Wipro, Huawei Cloud, and Accenture contribute significantly through AI-driven service offerings, cloud-based deployment models, and tailored enterprise solutions. These players focus on integrating content detection into broader cybersecurity and compliance frameworks, ensuring regulatory adherence while mitigating reputational risks.

Specialized providers such as ActiveFence, Clarifai, Cogito Tech, Hive, Sensity, and others, along with global technology giants like Amazon Web Services, Google, and Meta, drive innovation with niche technologies and AI-powered moderation tools. These companies excel in detecting deepfakes, synthetic media, and emerging content threats using machine learning and human-in-the-loop systems.

Top Key Players in the Market

- Microsoft
- IBM Corporation
- Alibaba Cloud
- HCL Technologies
- Wipro
- Huawei Cloud
- Accenture
- ActiveFence
- Amazon Web Services, Inc.
- Clarifai, Inc.
- Cogito Tech
- Google LLC
- Hive
- Meta
- Sensity
- Others

Recent Developments

In May 2025, Google launched the SynthID Detector at Google I/O, a tool designed to identify AI-generated content created with Google’s AI tools. The SynthID Detector enhances transparency in generative media by offering comprehensive detection capabilities across various content modalities.

In July 2024, ActiveFence introduced Explainable AI in its ActiveScore AI models, providing transparency in content moderation decisions. This feature allows moderators to understand the rationale behind content assessments, improving trust and compliance in content detection processes.

In November 2024, Clarifai became a member of the Berkeley AI Research (BAIR) Open Research Commons to collaborate on the advancement of large-scale AI models and multimodal learning. This partnership strengthens Clarifai’s initiatives in applied AI, with a focus on enhancing capabilities in content moderation, computer vision, and the development of ethical AI frameworks.

Report Scope