Parkland students and teachers have found that Google’s Gemini can craft a thesis statement, generate a study guide and produce surprisingly effective 20th-century poetry.

The Parkland School District piloted the use of the Gemini chatbot in a small group of classrooms last spring and is now rolling out the artificial intelligence tool to teachers who complete a set of training modules.

As schools across the country navigate the use of AI, Parkland is among the Lehigh Valley districts turning to the technology to meet student needs. Other examples include Northern Lehigh’s chatbot that assists English learners and Catasauqua Area’s updated Digital Citizenship curriculum.

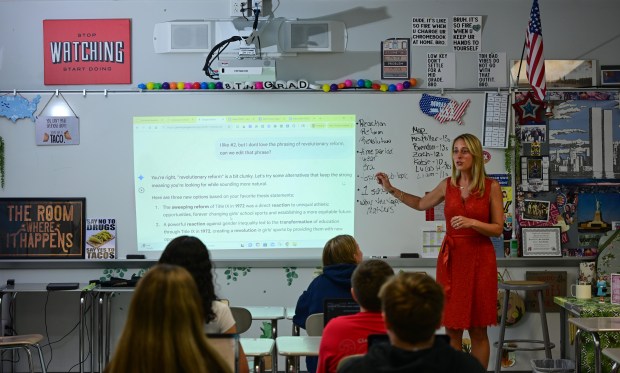

“I know some people are anti [AI], they think it’s just another crutch for kids — I just don’t think so,” said eighth grade history teacher Amy Miller.

As Miller’s students developed thesis statements for their National History Day research projects, she instructed them to talk to the chatbot “like it was a person.”

Students prompted Gemini to make suggested thesis statements more concise or to provide background materials on unfamiliar topics.

The chatbot’s assistance allowed students to do much more on their own and sped up a feedback process that would have previously taken Miller days to complete.

Although Gemini has been the focus of Parkland’s AI pilot, Miller has also experimented with other tools in the Google suite.

She had her students feed their history study guides into Google NotebookLM, which turned the material into podcasts the students could review on the bus on the way to school.

“It is so natural,” Miller said. “You’re literally studying for your test as you’re on the bus in the morning.”

Miller sees her work with AI as one piece of her efforts to ensure her eighth graders are ready for high school.

“That’s just part of our job, preparing them for their next level of education,” Miller said.

Meeting teachers where they are

Miller completed her own AI-themed education this summer, working her way through the district’s six-hour bank of courses that discuss AI ethics and teach skills such as how to effectively prompt a chatbot.

“They didn’t just flip a switch and say, ‘This is now turned on for everyone,’” Miller said. “You as the teacher had to do some work.”

Parkland teachers who completed summer courses or who are working their way through the fall catalog are the second wave of AI adopters in the district. Four high school teachers piloted Gemini use in their classrooms last spring, including English teacher Jarrod Neff.

Informal polls of his 150 students found that about 80% were already using AI on a weekly basis before the classroom pilot started, Neff said.

“I think it was probably happening far, far more than teachers realized,” Neff said.

His No. 1 rule is, “you are the creator and AI is your assistant.”

To reinforce that dynamic, Neff modeled how to use Gemini as a support rather than a starting point. For example, students would first brainstorm on their own and then ask the chatbot for more ideas, or they would peer edit a piece of writing and then use AI for additional review.

This fall Neff has added a metacognition step, asking students to reflect on their AI use and articulate what was effective and what was not.

When his students studied 20th-century poetic movements, they imitated the form in their own poems before asking AI to write in that style. Students then compared the two results and reflected on which worked better and why.

“I’m still trying to engage them in the thinking of the process rather than having AI do the thinking for them,” Neff said.

Neff has also engaged students in conversations about the ethics behind AI use, seeking to establish boundaries about which uses are in line with district policy and which would be considered academic dishonesty.

Open and honest conversations about ethical lines have decreased dishonest behavior like plagiarism, Neff said. That dialogue is necessary because students who use AI regularly might not realize what teachers consider a violation, he added.

“The gray areas are even grayer now,” Neff said.

AI use can look very different in different content areas, Neff said, so it’s essential to empower teachers to decide the limits of AI use in their own classrooms.

Students weigh in on AI

Parkland students who participated in the Gemini pilot ranked the chatbot’s ability to generate study tools among its most useful features.

Junior Wallace Morris creates outlines and practice quizzes. Sophomore Gabby Luczejko asks Gemini for step-by-step guides to math problems and tricks on how to more easily reach solutions. Senior Stacie Papageorgiou anticipates employing the chatbot to schedule her time at college next year.

The chatbots can also be useful writing coaches, the students reported, especially as their ability to offer detailed feedback has improved.

When Papageorgiou was using Gemini in her AP Language course last spring, she found that feedback often seemed incomplete. The chatbot would tell her to add more detail or use more complex sentences without identifying where edits should be made.

Now that she’s using the tool to edit college essays, she’s found that feedback is more specific and better tailored to her work.

Students who want specific feedback need to learn how to write detailed instructions for the chatbot, Papageorgiou said.

Students should also keep in mind that chatbots tend to emphasize formal writing, which might conflict with a teacher’s instructions to be creative and use their own voice, Papageorgiou said.

“It really wants you to be formal and really wants you to have a strict writing style,” Papageorgiou said, noting that Gemini sometimes suggested vocabulary that was too advanced.

Knowing how to respond to chatbot feedback is just one example of how students must use common sense to decide how much they want to depend on AI.

All three students cautioned against overuse of AI.

“Don’t rely on it,” Luczejko said, “just use it for some help and not for everything.”

Morris said getting started on a project is often the most difficult part, and noted that chatbots can be helpful in the brainstorming stage. Beyond that, he advocated for students using their own brains.

“They stop being able to use critical thinking as much,” Morris said of those who overuse AI.

Papageorgiou suggested that AI training start in sixth grade. That is early enough to familiarize students with artificial intelligence tools and also allow time to wean them off those “training wheels” as they learn to tackle academic skills on their own, she argued.

Access to AI should be limited, Papageorgiou said, to avoid encouraging young students to turn off their brain.

“We’re just going to have mindless zombies walking about not knowing their multiplication or addition and subtraction,” Papageorgiou said.

Reporter Elizabeth DeOrnellas can be reached at edeornellas@mcall.com.