As the power consumed by machine learning technologies rises, so does demand for AI devices that combine low power consumption with high computational performance. “Physical reservoirs” are AI devices that perform reservoir computing, an efficient, brain-inspired form of information processing, and they attract interest because of their low computational load and low power consumption. However, their computational performance has so far lagged behind that of software processing, which has been their main drawback.

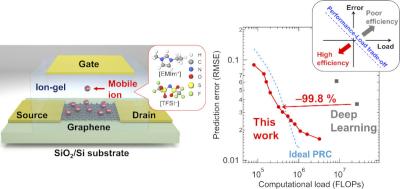

A research team from NIMS, Tokyo University of Science, and Kobe University recently developed an ion-gating reservoir (IGR) based on an ion-gel/graphene electric double layer (EDL) transistor. The IGR achieved computational performance comparable to that of deep learning while reducing the computational load by orders of magnitude. Graphene has high electron mobility and ambipolar behavior (it conducts through both electrons and holes). When it is combined with an ion gel, complex interactions between the two produce a variety of responses with different speeds, as ions and electrons move in different ways. As a result, the device responds to input signals with time constants (rates of change) spanning an extremely wide range.
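Reservoir computing keeps the computational load low because the reservoir itself is left untrained; only a linear readout on its states is fitted, typically by ridge regression. The toy Python model below is a minimal sketch of why responses spanning many time constants matter. It is not a model of the IGR: the leaky-node dynamics, the `tanh` response, the chosen time constants, and the delay-recall task are all hypothetical choices made for illustration. A bank with a single fast time constant keeps no memory of past inputs, while a bank whose time constants span a wide range lets the linear readout reconstruct the previous input.

```python
import math
import random


def reservoir_states(inputs, time_constants, dt=1.0):
    """Drive a bank of leaky (first-order) nodes with a scalar input signal.

    Each node relaxes toward tanh(u) with its own time constant tau, a crude,
    hypothetical stand-in for device responses that evolve at different speeds.
    Requires tau >= dt so the per-step update stays stable.
    """
    states = [0.0] * len(time_constants)
    history = []
    for u in inputs:
        target = math.tanh(u)  # hypothetical static nonlinearity
        for i, tau in enumerate(time_constants):
            alpha = dt / tau  # fraction of the remaining gap closed per step
            states[i] += alpha * (target - states[i])
        history.append(states + [1.0])  # copy of the states plus a bias term
    return history


def ridge_fit(X, y, lam=1e-6):
    """Train the linear readout: solve (X^T X + lam*I) w = X^T y."""
    n = len(X[0])
    A = [[sum(row[i] * row[j] for row in X) + (lam if i == j else 0.0)
          for j in range(n)] for i in range(n)]
    b = [sum(row[i] * t for row, t in zip(X, y)) for i in range(n)]
    # Gaussian elimination with partial pivoting.
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(A[r][col]))
        A[col], A[piv] = A[piv], A[col]
        b[col], b[piv] = b[piv], b[col]
        for r in range(col + 1, n):
            f = A[r][col] / A[col][col]
            for c in range(col, n):
                A[r][c] -= f * A[col][c]
            b[r] -= f * b[col]
    # Back substitution.
    w = [0.0] * n
    for r in range(n - 1, -1, -1):
        w[r] = (b[r] - sum(A[r][c] * w[c] for c in range(r + 1, n))) / A[r][r]
    return w


def nmse(pred, target):
    """Normalized mean squared error: 0 is perfect, about 1 means no skill."""
    mean = sum(target) / len(target)
    var = sum((t - mean) ** 2 for t in target)
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / var


def run(time_constants, steps=1000, washout=100, delay=1, seed=0):
    """Fit the readout to recall the input from `delay` steps ago; return NMSE."""
    rng = random.Random(seed)
    u = [rng.uniform(-1.0, 1.0) for _ in range(steps)]
    states = reservoir_states(u, time_constants)
    X = states[washout:]  # discard the initial transient
    y = [u[t - delay] for t in range(washout, steps)]
    w = ridge_fit(X, y)
    pred = [sum(wi * xi for wi, xi in zip(w, row)) for row in X]
    return nmse(pred, y)


if __name__ == "__main__":
    print("single time constant:", run([1.0]))
    print("wide range of time constants:", run([1.0, 2.0, 4.0, 8.0, 16.0, 32.0]))
```

When run, the single-time-constant bank should give an NMSE close to 1 (no better than predicting the mean), while the multi-timescale bank should come out well below that. Only the readout weights (seven numbers here: six nodes plus a bias) are trained; the states themselves come from fixed dynamics, which in a physical reservoir the device computes on its own.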

The device reportedly achieved the highest computational performance among existing physical reservoirs, comparable to that of deep learning run in software, while cutting the computational load to about 1/100. Because this high-performance system is built on an ion-gel/graphene transistor, it is also highly compatible with flexible electronics, a technology expected to underpin the next generation of edge devices.