Yao, P. et al. Fully hardware-implemented memristor convolutional neural network. Nature 577, 641–646 (2020).

Wan, W. et al. A compute-in-memory chip based on resistive random-access memory. Nature 608, 504–512 (2022).

Ambrogio, S. et al. An analog-AI chip for energy-efficient speech recognition and transcription. Nature 620, 768–775 (2023).

Pi, S. et al. Memristor crossbar arrays with 6-nm half-pitch and 2-nm critical dimension. Nat. Nanotechnol. 14, 35–39 (2019).

Huo, Q. et al. A computing-in-memory macro based on three-dimensional resistive random-access memory. Nat. Electron. 5, 469–477 (2022).

Li, Y. et al. Monolithic three-dimensional integration of RRAM-based hybrid memory architecture for one-shot learning. Nat. Commun. 14, 7140 (2023).

Lin, P. et al. Three-dimensional memristor circuits as complex neural networks. Nat. Electron. 3, 225–232 (2020).

Xue, C.-X. et al. 24.1 A 1Mb multibit ReRAM computing-in-memory macro with 14.6ns parallel MAC computing time for CNN based AI edge processors. In Proc. 2019 IEEE International Solid-State Circuits Conference (ISSCC) 388–390 (IEEE, 2019).

Wan, W. et al. 33.1 A 74 TMACS/W CMOS-RRAM neurosynaptic core with dynamically reconfigurable dataflow and in-situ transposable weights for probabilistic graphical models. In Proc. 2020 IEEE International Solid-State Circuits Conference (ISSCC) 498–500 (IEEE, 2020).

Mochida, R. et al. A 4M synapses integrated analog ReRAM based 66.5 TOPS/W neural-network processor with cell current controlled writing and flexible network architecture. In Proc. 2018 IEEE Symposium on VLSI Technology 175–176 (IEEE, 2018).

Wen, T.-H. et al. A 28 nm nonvolatile AI edge processor using 4 Mb analog-based near-memory-compute ReRAM with 27.2 TOPS/W for tiny AI edge devices. In Proc. 2023 IEEE Symposium on VLSI Technology and Circuits (VLSI Technology and Circuits) 1–2 (IEEE, 2023).

Le Gallo, M. et al. A 64-core mixed-signal in-memory compute chip based on phase-change memory for deep neural network inference. Nat. Electron. 6, 680–693 (2023).

Xue, C.-X. et al. A CMOS-integrated compute-in-memory macro based on resistive random-access memory for AI edge devices. Nat. Electron. 4, 81–90 (2021).

Jung, S. et al. A crossbar array of magnetoresistive memory devices for in-memory computing. Nature 601, 211–216 (2022).

Hung, J.-M. et al. A four-megabit compute-in-memory macro with eight-bit precision based on CMOS and resistive random-access memory for AI edge devices. Nat. Electron. 4, 921–930 (2021).

Cai, F. et al. A fully integrated reprogrammable memristor–CMOS system for efficient multiply–accumulate operations. Nat. Electron. 2, 290–299 (2019).

Zhong, Y. et al. A memristor-based analogue reservoir computing system for real-time and power-efficient signal processing. Nat. Electron. 5, 672–681 (2022).

Harabi, K.-E. et al. A memristor-based Bayesian machine. Nat. Electron. 6, 52–63 (2023).

Turck, C. et al. Bayesian in-memory computing with resistive memories. In Proc. 2023 International Electron Devices Meeting (IEDM) 1–4 (IEEE, 2023).

Yu, S. et al. Binary neural network with 16 Mb RRAM macro chip for classification and online training. In Proc. 2016 IEEE International Electron Devices Meeting (IEDM) 16.2.1–16.2.4 (IEEE, 2016).

Chen, W.-H. et al. CMOS-integrated memristive non-volatile computing-in-memory for AI edge processors. Nat. Electron. 2, 420–428 (2019).

Wang, W. et al. Computing of temporal information in spiking neural networks with ReRAM synapses. Faraday Discuss. 213, 453–469 (2019).

Ueyoshi, K. et al. DIANA: an end-to-end energy-efficient digital and analog hybrid neural network SoC. In Proc. 2022 IEEE International Solid-State Circuits Conference (ISSCC) 1–3 (IEEE, 2022).

Zhang, W. et al. Edge learning using a fully integrated neuro-inspired memristor chip. Science 381, 1205–1211 (2023).

Yao, P. et al. Face classification using electronic synapses. Nat. Commun. 8, 15199 (2017).

Khaddam-Aljameh, R. et al. HERMES core – a 14nm CMOS and PCM-based in-memory compute core using an array of 300ps/LSB linearized CCO-based ADCs and local digital processing. In Proc. 2021 Symposium on VLSI Circuits 1–2 (IEEE, 2021).

Khaddam-Aljameh, R. et al. HERMES-core—A 1.59-TOPS/mm² PCM on 14-nm CMOS in-memory compute core using 300-ps/LSB linearized CCO-based ADCs. IEEE J. Solid-State Circuits 57, 1027–1038 (2022).

Milano, G. et al. In materia reservoir computing with a fully memristive architecture based on self-organizing nanowire networks. Nat. Mater. 21, 195–202 (2022).

Bocquet, M. et al. In-memory and error-immune differential RRAM implementation of binarized deep neural networks. In Proc. 2018 IEEE International Electron Devices Meeting (IEDM) 20.6.1–20.6.4 (IEEE, 2018).

Li, C. et al. Long short-term memory networks in memristor crossbar arrays. Nat. Mach. Intell. 1, 49–57 (2019).

Gao, B. et al. Memristor-based analogue computing for brain-inspired sound localization with in situ training. Nat. Commun. 13, 2026 (2022).

Hu, M. et al. Memristor‐based analog computation and neural network classification with a dot product engine. Adv. Mater. 30, 1705914 (2018).

Jebali, F. et al. Powering AI at the edge: a robust, memristor-based binarized neural network with near-memory computing and miniaturized solar cell. Nat. Commun. 15, 741 (2024).

Yan, B. et al. RRAM-based spiking nonvolatile computing-in-memory processing engine with precision-configurable in situ nonlinear activation. In Proc. 2019 Symposium on VLSI Technology T86–T87 (IEEE, 2019).

Jia, H. et al. Scalable and programmable neural network inference accelerator based on in-memory computing. IEEE J. Solid-State Circuits 57, 198–211 (2022).

Wang, Z. et al. Toward a generalized Bienenstock-Cooper-Munro rule for spatiotemporal learning via triplet-STDP in memristive devices. Nat. Commun. 11, 1510 (2020).

Lin, Y. et al. Uncertainty quantification via a memristor Bayesian deep neural network for risk-sensitive reinforcement learning. Nat. Mach. Intell. 5, 714–723 (2023).

Jain, S. et al. A heterogeneous and programmable compute-in-memory accelerator architecture for analog-AI using dense 2-D mesh. IEEE Trans. Very Large Scale Integr. VLSI Syst. 31, 114–127 (2023).

Rasch, M. J. et al. Hardware-aware training for large-scale and diverse deep learning inference workloads using in-memory computing-based accelerators. Nat. Commun. 14, 5282 (2023).

Chiang, Y.-H. et al. Hardware-robust in-RRAM-computing for object detection. IEEE J. Emerg. Sel. Top. Circuits Syst. 12, 547–556 (2022).

Wang, J. et al. 14.2 A compute SRAM with bit-serial integer/floating-point operations for programmable in-memory vector acceleration. In Proc. 2019 IEEE International Solid-State Circuits Conference (ISSCC) 224–226 (IEEE, 2019).

Jacob, B. et al. Quantization and training of neural networks for efficient integer-arithmetic-only inference. In Proc. 2018 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2704–2713 (IEEE, 2018).

Du, C.-Y. et al. A 28nm 11.2TOPS/W hardware-utilization-aware neural-network accelerator with dynamic dataflow. In Proc. 2023 IEEE International Solid-State Circuits Conference (ISSCC) 1–3 (IEEE, 2023).

Park, J.-S. et al. A multi-mode 8k-MAC HW-utilization-aware neural processing unit with a unified multi-precision datapath in 4-nm flagship mobile SoC. IEEE J. Solid-State Circuits 58, 189–202 (2023).

Kim, M. & Seo, J.-S. An energy-efficient deep convolutional neural network accelerator featuring conditional computing and low external memory access. IEEE J. Solid-State Circuits 56, 803–813 (2021).

Jouppi, N. P. et al. In-datacenter performance analysis of a tensor processing unit. In Proc. 44th Annual International Symposium on Computer Architecture 1–12 (ACM, 2017).

Jouppi, N. P. et al. TPU v4: an optically reconfigurable supercomputer for machine learning with hardware support for embeddings. In Proc. 50th Annual International Symposium on Computer Architecture 1–14 (ACM, 2023).

Asanović, K. et al. The Rocket Chip generator. UC Berkeley Electrical Engineering & Computer Sciences http://www2.eecs.berkeley.edu/Pubs/TechRpts/2016/EECS-2016-17.html (2016).

Khwa, W.-S. et al. A 40-nm, 2M-cell, 8b-precision, hybrid SLC-MLC PCM computing-in-memory macro with 20.5–65.0 TOPS/W for tiny-AI edge devices. In Proc. 2022 IEEE International Solid-State Circuits Conference (ISSCC) 1–3 (IEEE, 2022).

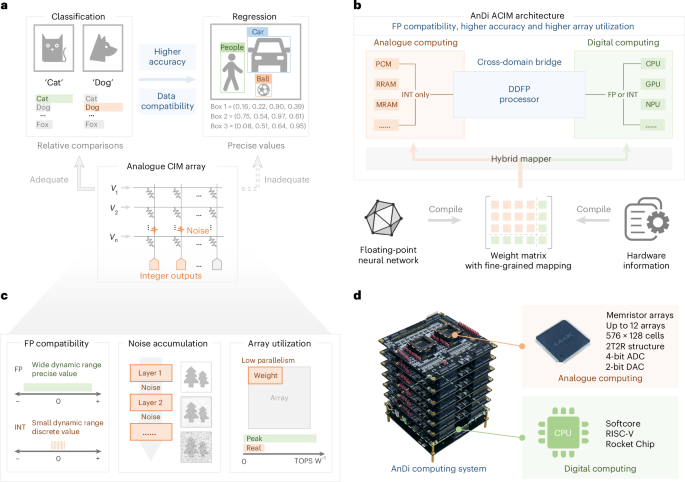

Wen, T.-H. et al. Fusion of memristor and digital compute-in-memory processing for energy-efficient edge computing. Science 384, 325–332 (2024).