Anyone who looks at a social media feed with any regularity is likely familiar with the deluge of fabricated images and videos now circulating online. Some are harmless curiosities, apart from the resources they consume. Others are more troubling. Among the most consequential are AI-generated depictions of wildlife, which, as reporting by Sean Mowbray highlights, are beginning to distort how people understand animals, their behavior, and the risks they pose.

The problem is not that fakery is new. Wildlife imagery has long been embellished, staged, or misrepresented, sometimes for effect, sometimes for attention. What has changed is speed, scale, plausibility, and the ease with which all three now combine. Artificial intelligence allows convincing scenes to be produced quickly, cheaply, and without specialist skill, often by people with no connection to wildlife at all. A lion appears where no lions live. A leopard stalks a shopping mall. An eagle carries off a child. To an expert, the errors are visible. To most viewers, they are not.

This matters because wildlife conservation rests heavily on public perception. Fear, admiration, tolerance, and indifference all shape how societies respond to animals that live alongside people. When AI-generated videos exaggerate danger or invent attacks, they can inflame anxieties that already exist. In places where farmers contend with real predators, false sightings can provoke retaliation against species that were never involved. The conflict exists only in perception, but its consequences are real.

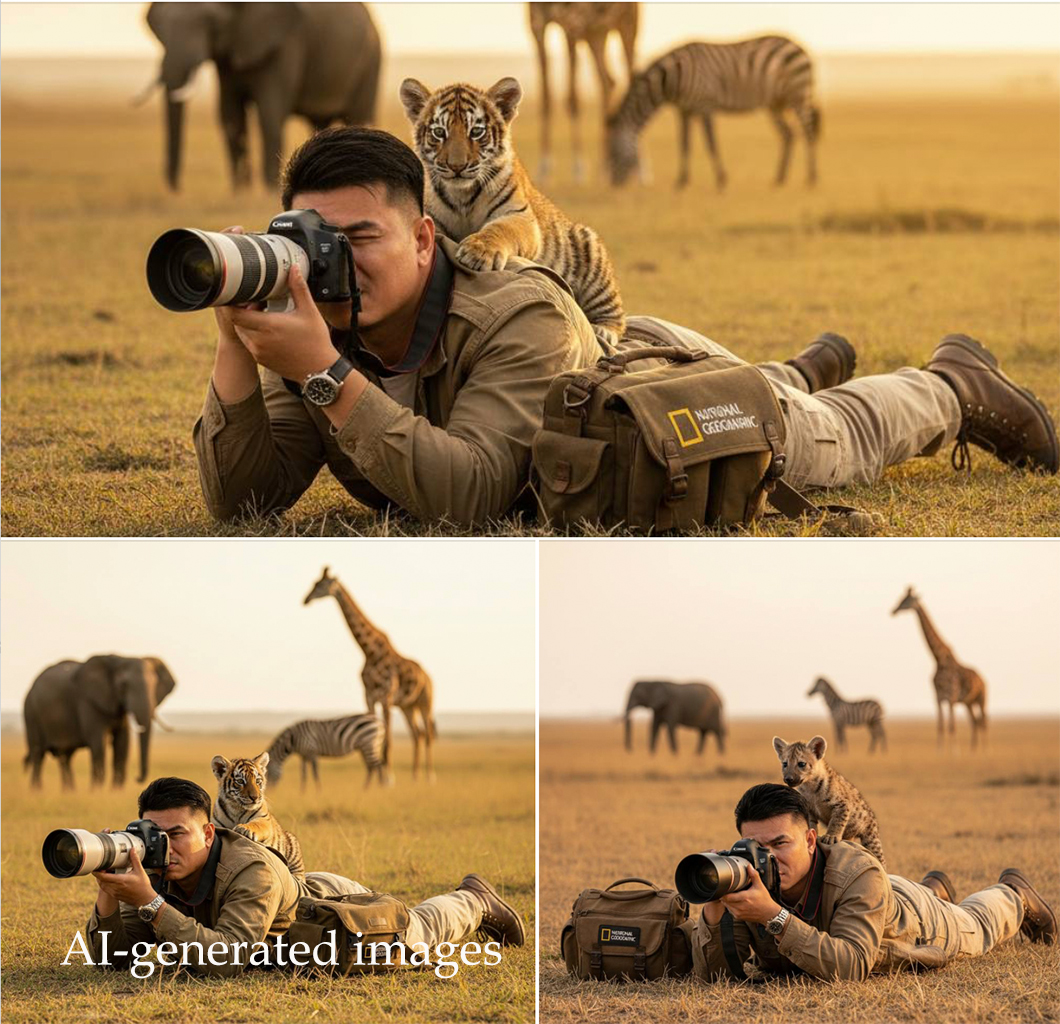

Other fabrications pull in the opposite direction. Videos showing wild animals behaving like pets or companions encourage sentimental interpretations of species that are neither domesticated nor safe. This risks normalizing close contact with wildlife and feeding demand for exotic pets, a trade that already threatens many species. What looks charming on a screen can translate into suffering off it.

There are institutional costs as well. Government agencies and conservation groups are forced to divert time and resources to debunking viral content, responding to public alarm, or investigating events that never occurred, often under pressure and in public view. Trust also erodes. As manipulated imagery becomes more common, genuine evidence—from camera traps to field photographs and documented encounters—may be met with skepticism. The tools that once strengthened conservation science are dulled by doubt.

The irony is that artificial intelligence is also becoming indispensable to conservation. It helps process vast volumes of camera-trap data, detect illegal activity, and monitor ecosystems in ways that were previously out of reach. The technology itself is not the adversary. The problem lies in how easily fabricated content moves through platforms designed to reward engagement more than careful scrutiny.

Addressing this will require restraint as much as regulation. Platforms could label AI-generated visuals more consistently. Organizations that work with nature can set standards for what they publish and promote. Individuals, especially those with large followings, can pause before sharing material that seems improbable, however compelling it appears.

Wildlife conservation depends on a shared understanding of reality: where animals live, how they behave, what risks they do and do not pose, and how people interpret those risks. As artificial images blur that reality, the task becomes harder. The danger is not only that people are misled, but that the fragile trust on which conservation depends is quietly worn away.