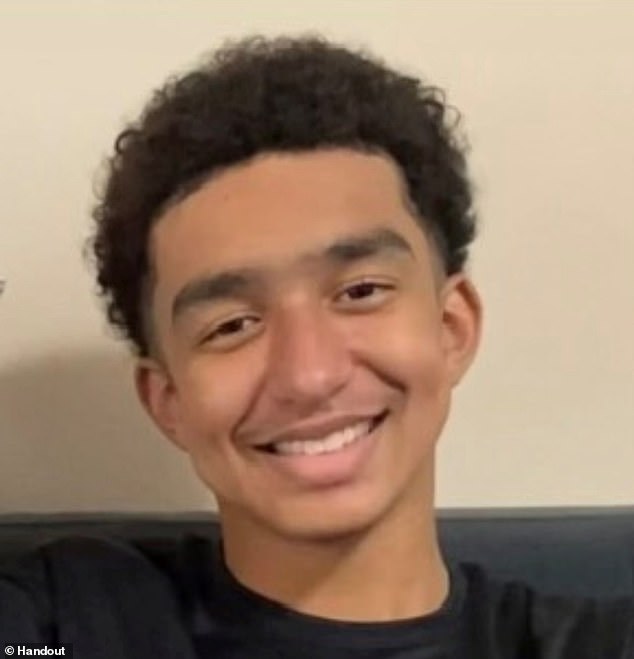

A Florida mother who claims her 14-year-old son was sexually abused and driven to suicide by an AI chatbot has secured a major victory in her ongoing legal case.

Sewell Setzer III fatally shot himself in February 2024 after a chatbot sent him sexual messages telling him to ‘please come home.’

According to a lawsuit filed by his heartbroken mother Megan Garcia, Setzer spent the last weeks of his life texting an AI character named after Daenerys Targaryen, a character on ‘Game of Thrones,’ on the role-playing app Character.AI.

Garcia, who herself works as a lawyer, has blamed Character.AI for her son’s death and accused the founders, Noam Shazeer and Daniel de Freitas, of knowing that their product could be dangerous for underage customers.

On Wednesday, U.S. Senior District Judge Anne Conway rejected arguments made by the AI company, which claimed its chatbots were protected under the First Amendment.

Character Technologies, the developer behind Character.AI, and Google are named as defendants in the legal filing. They are pushing to have the case dismissed.

The teen’s chats ranged from romantic to sexually charged, though at times they also resembled two friends chatting about life.

The chatbot, which was created on the role-playing app Character.AI, was designed to always text back and always answer in character.


On Wednesday, U.S. Senior District Judge Anne Conway rejected arguments made by the AI company, which claimed its chatbots were protected under the First Amendment. (Pictured: Sewell and his mom Megan Garcia)

It’s not known whether Sewell knew ‘Dany,’ as he called the chatbot, wasn’t a real person – despite the app having a disclaimer at the bottom of all the chats that reads, ‘Remember: Everything Characters say is made up!’

But he did tell Dany how he ‘hated’ himself and how he felt empty and exhausted.

When he eventually confessed his suicidal thoughts to the chatbot, it was the beginning of the end, The New York Times reported.

In Sewell’s case, the lawsuit alleged the boy was targeted with ‘hypersexualized’ and ‘frighteningly realistic experiences.’

It accused Character.AI of misrepresenting itself as ‘a real person, a licensed psychotherapist, and an adult lover, ultimately resulting in Sewell’s desire to no longer live outside of C.AI.’

Garcia is being represented by the Social Media Victims Law Center, a Seattle-based firm known for bringing high-profile suits against Meta, TikTok, Snap, Discord and Roblox.

Attorney Matthew Bergman previously told DailyMail.com he founded the Social Media Victims Law Center two and a half years ago to represent families ‘like Megan’s.’

He noted that Garcia is ‘singularly focused’ on her goal to prevent harm.

In the case of Sewell, the lawsuit alleged the boy was targeted with ‘hypersexualized’ and ‘frighteningly realistic experiences.’ (Pictured: Messages between the teen and chatbot)

It’s not known whether Sewell (middle) knew ‘Dany,’ as he called the chatbot, wasn’t a real person – despite the app having a disclaimer at the bottom of all the chats that reads, ‘Remember: Everything Characters say is made up!’

‘She’s singularly focused on trying to prevent other families from going through what her family has gone through, and other moms from having to bury their kid,’ Bergman said.

‘It takes a significant personal toll. But I think the benefit for her is that she knows that the more families know about this, the more parents are aware of this danger, the fewer cases there’ll be,’ he added.

As explained in the lawsuit, Sewell’s parents and friends noticed the boy getting more attached to his phone and withdrawing from the world as early as May or June 2023.

Garcia later revealed she confiscated the device from him after she realized just how addicted he was.

‘He had been punished five days before, and I took away his phone. Because of the addictive nature of the way this product works, it encourages children to spend large amounts of time,’ Garcia told CBS Mornings.

‘For him particularly, the day that he died, he found his phone where I had hidden it and started chatting with this particular bot again.’

She said her son, whom she described as a former honor roll student and athlete, changed as he used the program, and that she noticed differences in his behavior.


‘I became concerned for my son when he started to behave differently than before. He started to withdraw socially, wanting to spend most of his time in his room. It became particularly concerning when he stopped wanting to do things like play sports,’ Garcia said.

‘We would go on vacation, and he didn’t want to do things that he loved, like fishing and hiking. Those things to me, because I know my child, were particularly concerning to me.’

In his final messages to Dany, the 14-year-old boy said he loved her and would come home to her.

‘Please come home to me as soon as possible, my love,’ Dany replied.

‘What if I told you I could come home right now?’ Sewell asked.

‘… please do, my sweet king,’ Dany replied.

That’s when Sewell put down his phone, picked up his stepfather’s .45 caliber handgun and pulled the trigger.

Following Garcia’s victory Wednesday, one of her attorneys, Meetali Jain of the Tech Justice Law Project, said the judge’s ruling sends a clear message that the company ‘needs to stop and think and impose guardrails before it launches products to market.’

In response, Character.AI said it had added several safety features to its technology, including guardrails for children and suicide prevention resources.


‘We care deeply about the safety of our users and our goal is to provide a space that is engaging and safe,’ the company said.

The developer’s attorneys have argued that if the case is not dismissed it could have a ‘chilling effect’ on the AI industry as a whole.

Although Conway did not find that the chatbots’ output is protected by the First Amendment, she did rule that Character Technologies can assert the First Amendment rights of its users, who have a right to receive the bots’ ‘speech.’

She also said Garcia can move forward with claims that Google can be held liable for its alleged role in developing Character.AI because it was ‘aware of the risks’ the technology could bring.

A Google spokesperson said the company ‘strongly disagree[s] with this decision.’

‘Google and Character AI are entirely separate, and Google did not create, design, or manage Character AI’s app or any component part of it,’ the billion-dollar company added.