NVIDIA’s Blackwell GPUs have taken the lead in AI inference performance, giving the companies that deploy them higher profit margins than those running competing hardware.

NVIDIA’s Full-Stack AI Software & Optimizations Deliver Superb Inference Performance On Blackwell GPU Architecture, AMD Still Needs To Catch Up

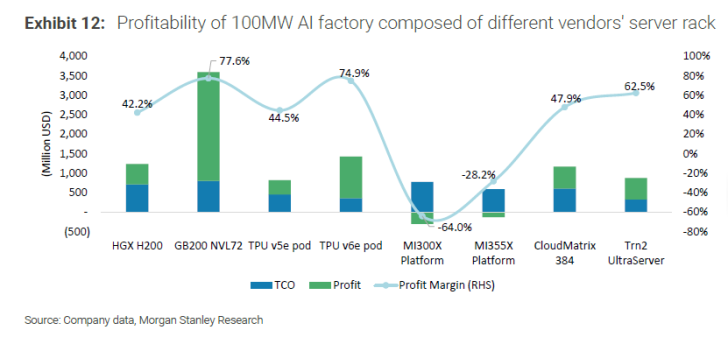

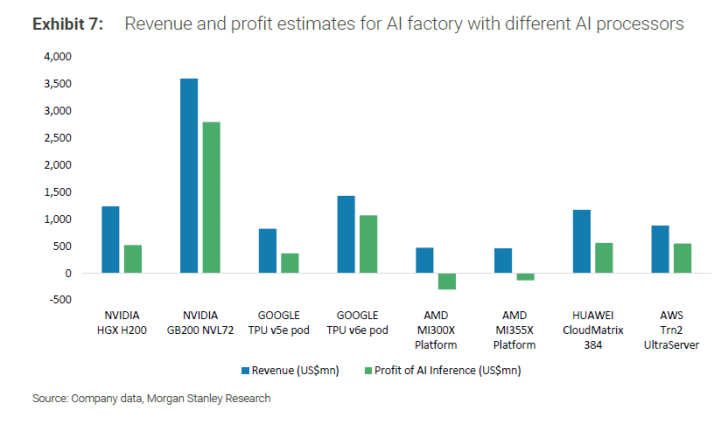

In new data, Morgan Stanley Research compares the operational costs and profit margins of NVIDIA’s AI solutions against competing platforms in inference workloads. The data show that most AI inference “factories” (firms running large numbers of chips for AI inference) enjoy profit margins above 50%, with NVIDIA leading the pack.

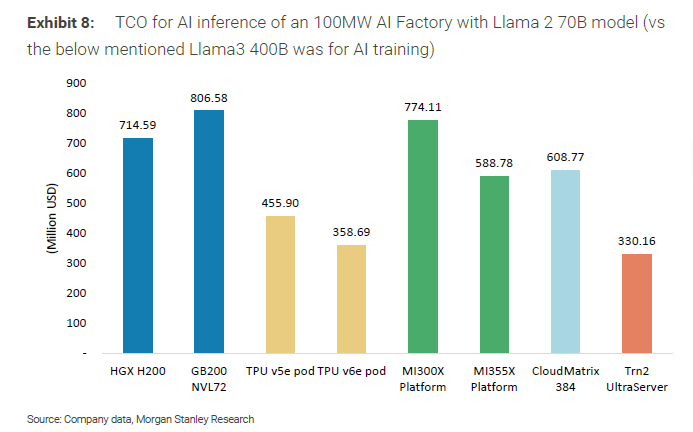

For the evaluation, the firm compared a set of 100 MW AI factories, each built from a different vendor’s server racks and covering NVIDIA, Google, AMD, AWS, and Huawei platforms. Among these, NVIDIA’s GB200 NVL72 “Blackwell” GPU platform delivers the highest profit margin at 77.6%, with an estimated profit of around $3.5 billion.

Google comes in second with its TPU v6e pod at a 74.9% profit margin, and AWS’s Trn2 UltraServer takes third at 62.5%. Most of the remaining platforms land at around 40-50%, but the most striking result is AMD’s, which shows the company has a lot of work to do.

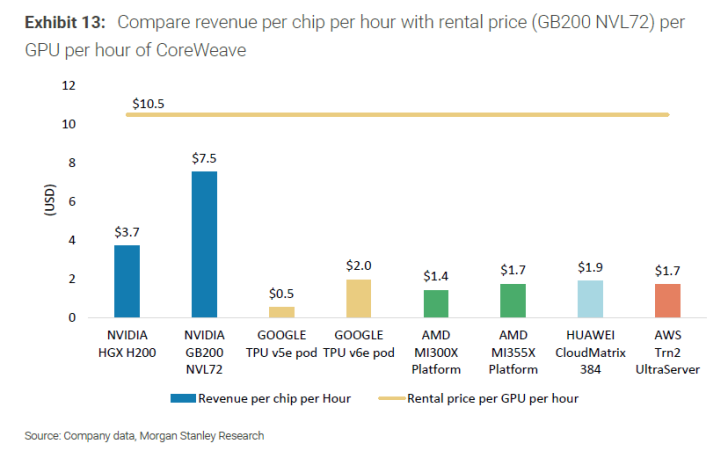

AMD’s latest MI355X platform posts a -28.2% profit margin in AI inference, while the older MI300X platform fares even worse at -64.0%. The firm also breaks down revenue per chip per hour alongside rental prices (averaged at $10.5).
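Morgan Stanley’s exact methodology isn’t spelled out here, but under the usual definition a profit margin is profit divided by revenue, which is how a margin can go negative: whenever a platform’s costs exceed the revenue it earns, the result drops below zero. The following is a minimal sketch assuming that standard definition; the function name `profit_margin` and all input figures are hypothetical and illustrative, not taken from the report.

```python
def profit_margin(revenue: float, cost: float) -> float:
    """Return profit margin as a percentage: (revenue - cost) / revenue * 100.

    Assumes the standard textbook definition; Morgan Stanley's model
    may treat costs (e.g. depreciation of TCO) differently.
    """
    if revenue <= 0:
        raise ValueError("revenue must be positive")
    # Multiply before dividing to keep these round example numbers exact.
    return (revenue - cost) * 100.0 / revenue


if __name__ == "__main__":
    # Hypothetical figures in millions of US dollars, NOT from the report.
    profitable = profit_margin(revenue=1000.0, cost=250.0)
    loss_making = profit_margin(revenue=1000.0, cost=1300.0)
    print(f"profitable factory: {profitable:.1f}%")
    print(f"loss-making factory: {loss_making:.1f}%")
```

With these made-up inputs, the first factory keeps 75.0% of its revenue as profit, while the second spends more than it earns and lands at -30.0%, the same kind of result reported for AMD’s platforms.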

NVIDIA’s GB200 NVL72 yields $7.5 in revenue per chip per hour, followed by NVIDIA’s HGX H200 at $3.7 per hour, while AMD’s MI355X platform yields $1.7 per hour. Most of the other chips yield $0.5-$2.0 per hour, putting NVIDIA in a league of its own.

NVIDIA’s massive lead in AI inference is attributed to its FP4 support and continued optimizations to its CUDA AI stack. The company has given its GPUs the “Fine Wine” treatment, with both the older Hopper and the current Blackwell generation continuing to see incremental performance uplifts every quarter.

AMD’s MI300 and MI350 platforms are strong on the hardware side, and the company has invested heavily in software optimization, but AI inference remains one of the areas where AMD still needs to improve.

Morgan Stanley also highlights that the TCO (total cost of ownership) of the MI300X platform is as high as $744 million, close to NVIDIA’s GB200 platform at around $800 million. In other words, cost does not appear to work in AMD’s favor either.

The newer MI355X servers have an estimated TCO of $588 million, on par with Huawei’s CloudMatrix 384. This may explain NVIDIA’s popularity: although its platforms carry a higher upfront cost, that cost is broadly comparable to AMD’s, while delivering far higher AI inference performance. Inference is said to account for 85% of the AI market in the years ahead.

Both NVIDIA and AMD have moved to an annual product cadence to stay competitive with each other. NVIDIA will launch its Blackwell Ultra GPU platform this year, offering a 50% uplift over Blackwell GB200, followed by Rubin, which is slated to enter production in the first half of 2026. Rubin Ultra and Feynman will come after that. AMD, for its part, will launch MI400 next year to take on Rubin, and MI400 is expected to bring several AI inference optimizations, so it should be an interesting year for the AI segment.

News Sources: WallStreetCN, Jukanlosreve