In a landmark milestone, IBM and Japan’s RIKEN Center have simulated the energy states of a complex molecule using 77 qubits—more than ever used before in a real-world quantum chemistry problem. And they didn’t do it with quantum hardware alone.

By combining the strengths of quantum processors with the brute-force power of classical supercomputers, the team has demonstrated that quantum-centric supercomputing, where CPUs, GPUs, and QPUs operate in concert, can already solve problems once thought to require fully fault-tolerant quantum computers.

This hybrid approach isn’t just a stepping stone; it may be the model that drives quantum’s most valuable near-term breakthroughs.

The quantum-classical hybrid approach

A quantum-classical hybrid approach could be the key to reaping the benefits of quantum computing in its early stages. The IBM and RIKEN teams ran part of the calculation on high-performance classical computers and combined it with quantum algorithms running on quantum hardware.

“Quantum-centric supercomputing is a key and immediate part of our vision for the future of computing,” Antonio Mezzacapo, Principal Research Scientist for Quantum-centric Supercomputing and Applied Quantum Science at IBM, told Interesting Engineering in an interview.

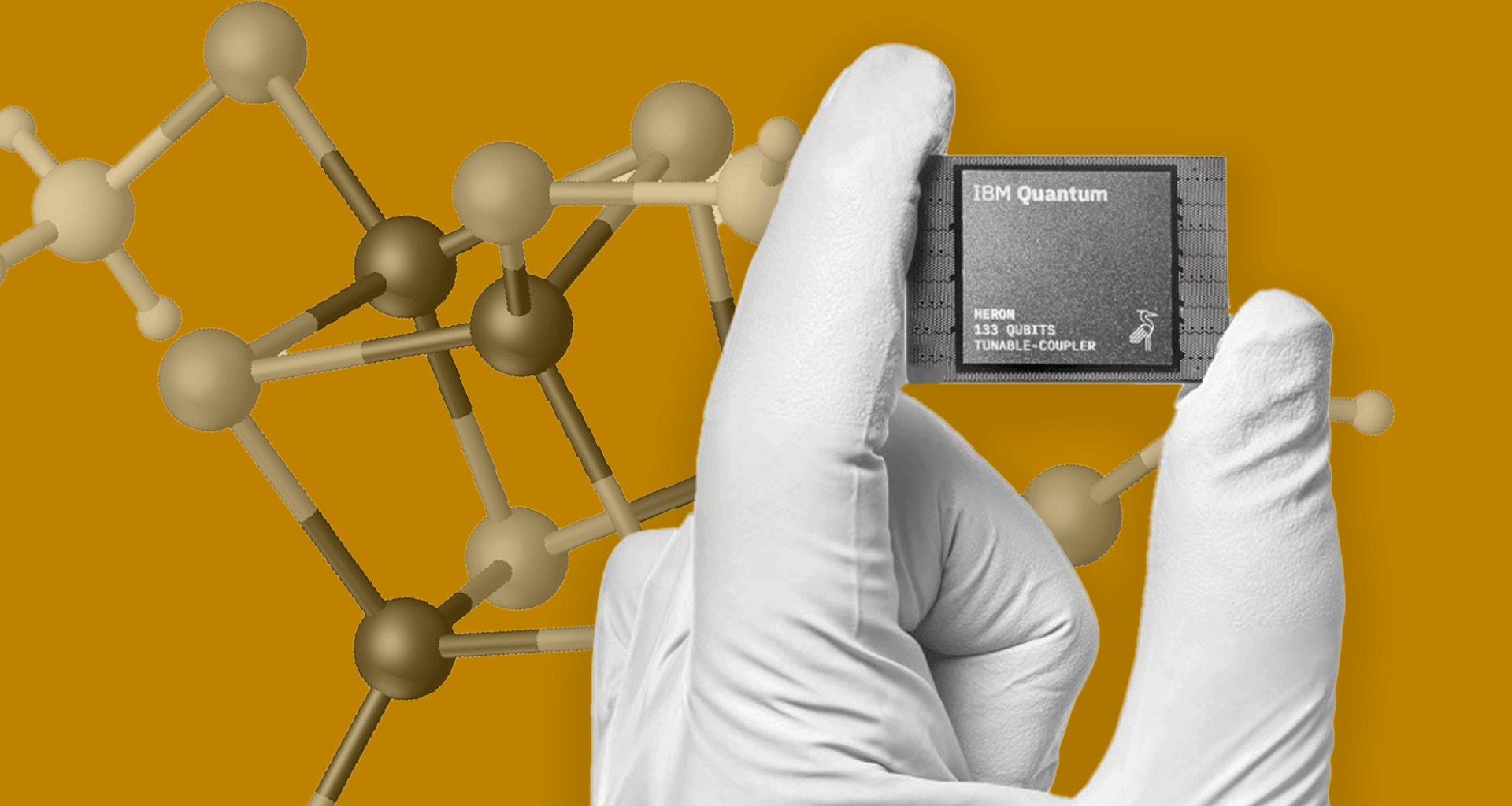

“This is where quantum processor units or QPUs, like the IBM Quantum Heron — the world’s most performant quantum chip — work alongside classical CPUs and GPUs as peers in solving problems,” Mezzacapo continued. “Quantum systems don’t replace classical machines in this setup but rather augment them.”

To be precise, the teams used an IBM quantum device powered by a Heron quantum processor to simplify the mathematics, and RIKEN’s Fugaku supercomputer, the world’s fastest until May 2022, then solved the simplified problem. The researchers used 77 qubits (quantum bits) in the process, a new record. Their study was published in a recent issue of Science Advances.
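The division of labor described above can be illustrated with a toy example. The sketch below is not the IBM and RIKEN workflow, which this article does not detail. It assumes a small random symmetric matrix as a stand-in for a molecular Hamiltonian, and it uses a classical random number generator (the hypothetical `sample_bitstrings` function) in place of real QPU measurements. The idea it shows is only this: the "quantum" step proposes a small set of basis states, and the classical step diagonalizes the Hamiltonian restricted to that set.

```python
import numpy as np


def random_hamiltonian(n_qubits, seed=0):
    """Toy stand-in for a molecular Hamiltonian: a random real symmetric matrix."""
    rng = np.random.default_rng(seed)
    dim = 2 ** n_qubits
    a = rng.normal(size=(dim, dim))
    return (a + a.T) / 2


def sample_bitstrings(n_qubits, n_samples, seed=1):
    """Hypothetical placeholder for QPU output.

    A real device would return measured bitstrings; here we draw
    basis-state indices uniformly at random and keep the distinct ones.
    """
    rng = np.random.default_rng(seed)
    dim = 2 ** n_qubits
    return np.unique(rng.integers(0, dim, size=n_samples))


def subspace_ground_energy(h, indices):
    """Classical step: diagonalize H restricted to the sampled basis states."""
    h_sub = h[np.ix_(indices, indices)]
    # eigvalsh returns eigenvalues in ascending order for symmetric matrices.
    return np.linalg.eigvalsh(h_sub)[0]


n_qubits = 8  # 256-dimensional toy problem, far smaller than 77 qubits
h = random_hamiltonian(n_qubits)
exact = np.linalg.eigvalsh(h)[0]

samples = sample_bitstrings(n_qubits, n_samples=64)
approx = subspace_ground_energy(h, samples)

print(f"basis states kept: {len(samples)} of {2 ** n_qubits}")
print(f"exact ground energy:    {exact:.4f}")
print(f"subspace ground energy: {approx:.4f}")
```

The subspace estimate can never fall below the exact ground energy, so adding better-chosen basis states can only improve it. With uniform random samples, as here, the estimate is poor; the point of using a quantum processor in such a scheme would be to propose states that matter for the molecule.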

The quantum-classical hybrid approach isn’t just a stopgap. Quantum computing may, in fact, never fully extricate itself from the framework of classical computing methods.

IBM’s Quantum System Two at RIKEN. Credit: IBM

As Kenneth Merz, a researcher at the Center for Computational Life Sciences at the Cleveland Clinic, who wasn’t involved in the IBM and RIKEN study, points out, “quantum computers are good for some problems — especially ones that scale exponentially — while classical computers are good for other applications.”

Hybrid computing: Where classical methods still have the edge

One of the more abstract promises attached to quantum computing over the years is that it will replace classical systems with far more powerful hardware.

However, it’s important to note that classical computing systems are incredibly efficient and better-suited to certain jobs. For example, classical central processing units (CPUs) are more efficient for data entry and accessing memory than quantum computers. Meanwhile, graphics processing units (GPUs) have been improved over the decades to render incredibly impressive graphics.

“Much of the computational sciences on classical devices, and the associated software, has been developed over roughly 50 years to reach the point we are at today,” Merz explained. “The quantum computing software stack will take time to develop, maybe not 50 years, to maximize its utility.”

Beyond that, quantum computing also has limitations, meaning it must work with classical computing hardware.

“Quantum computers, or QCs, will likely never be used for word processing or data input, for example — this will be handled by classical devices,” Merz continued. “The key for effective use of QC devices is to identify the parts of a problem where the QC can afford the most benefit. Some applications might have exponential improvements and others might only have polynomial speed-ups, but choosing the right aspect of the problem and the quantum algorithm will be key.”

Tapping into quantum chemistry

Quantum chemistry on quantum computers aims to simulate electron interactions within molecules. This requires an immense amount of computing power. According to a report from Quantum Insider, simulating insulin requires tracking more than 33,000 molecular orbitals, which is beyond the reach of today’s supercomputers.

Tapping into the potential of quantum computing is all about leveraging the exponential scaling Merz mentioned. However, “this will take a lot of time and effort to develop algorithms and the associated software,” Merz explained. “Currently, the hardware stack, in my opinion, is far ahead of the software stack.”

In a July study, Merz and a team at the Cleveland Clinic demonstrated how quantum computers paired with supercomputing hardware can simulate molecules with unprecedented accuracy. The team tested their hybrid computing method on a hydrogen ring of 18 atoms and cyclohexane. Their model correctly predicted the stability of the molecules. It used fewer qubits than would be required on a quantum computer alone.

Japan’s Fugaku supercomputer was paired with IBM’s Heron quantum processor to simulate complex iron–sulfur clusters. Credit: Riken

“Quantum computing has a bit of a bad rap since much has been promised for many years,” Merz told IE. “But we are just getting to an interesting stage in the hardware space that allows us to start trying out quantum algorithms to determine their strengths and weaknesses for future ‘noiseless’ hardware stacks that many companies are planning to deliver in the next 3-5 years. To me, it is an exciting time in this field where the landscape will change very rapidly with new hardware and software innovations.”

Modeling iron–sulfur clusters shows hybrid computing’s potential

Researchers are increasingly finding that some of the early promises linked to fault-tolerant quantum computers are achievable using this quantum-classical hybrid approach.

“At IBM, we’re starting to directly link quantum computers like our IBM Quantum System Two to classical high-performance computers like RIKEN’s Fugaku supercomputer in Kobe, Japan,” Mezzacapo said, describing his team’s approach. “This allows us to approach complex computational problems in new and exciting ways, where a resource orchestration management system can break down computational problems across CPUs, GPUs, and QPUs, with each technology tackling workloads it’s best-suited for.”

“Within this quantum-centric supercomputing framework, we are addressing, for the first time, chemistry problems that were believed to require fault-tolerant quantum computers,” he continued. “Much of our latest and leading research explores methods that can take advantage of this hybrid model as we push toward achieving quantum advantage with our clients.”

In their study, the IBM and RIKEN team used quantum computing to investigate an iron-sulfur system. The system, the [4Fe-4S] molecular cluster, is a key component of many biological reactions. One example is its role in the enzyme nitrogenase, which converts atmospheric nitrogen gas into ammonia, making it possible for plants to grow.

“Our hybrid approach with RIKEN has already enabled detailed modeling of complex iron-sulfur clusters, which are critical to understanding biological and industrial chemistry,” Mezzacapo explained.

Overcoming quantum computing’s biggest challenges

While IBM pushes the boundaries of what’s possible with quantum computing, obstacles remain. “Using 77 qubits to simulate realistic chemistry is a significant milestone, but it’s not without challenges,” Mezzacapo told IE. “The biggest limitation today, as with all approaches to quantum computing, is noise: Quantum hardware still has errors that need to be mitigated through performant hardware, well-designed algorithms, and post-processing with classical computers.”

“This limits how long and deep quantum circuits can be before errors accumulate, which in turn puts bounds on computational space you can explore and results you can achieve,” he continued. “Our goal is to correct such errors at scale with Starling and deploy it for use by clients in 2029.”

IBM says Starling will run 20,000 times more operations than today’s quantum computers, thanks largely to its fault-tolerant architecture. The company expects it to reduce error correction overhead by roughly 90%, requiring fewer physical qubits for each robust logical qubit. Overall, Starling is planned to have 200 logical qubits, each made of many physical qubits. Mezzacapo says it will “reliably run hundreds of millions to billions of quantum operations.”

Ultimately, such hardware advances will allow researchers to push the combination of classical and quantum hardware further. “Such collaborative work might eventually help accelerate the discovery of new catalysts, materials for energy storage, or molecules that improve crop efficiency, to name a few examples,” Mezzacapo explained. “In short, the combination of quantum and classical compute is allowing us to model the behavior of molecules with greater accuracy than ever before, build large-scale fault-tolerant quantum computers like IBM Quantum Starling, and explore more complex problems across agriculture, pharmaceuticals, energy, and beyond.”