Counsellors have warned of the dangers of British children using chatbots as therapists as Meta is investigated in the US over allegations of “deceptive AI-generated mental health services”.

Bots posing as therapists on Meta’s platform could be “unethical” and may have negative effects on a child’s ability to cope with day-to-day life, experts said.

Ken Paxton, the Texas attorney-general, is investigating Meta and the artificial intelligence start-up Character.AI for allegedly misleading children. The companies may have broken consumer protection laws, including those that ban fraudulent claims and privacy misrepresentations.

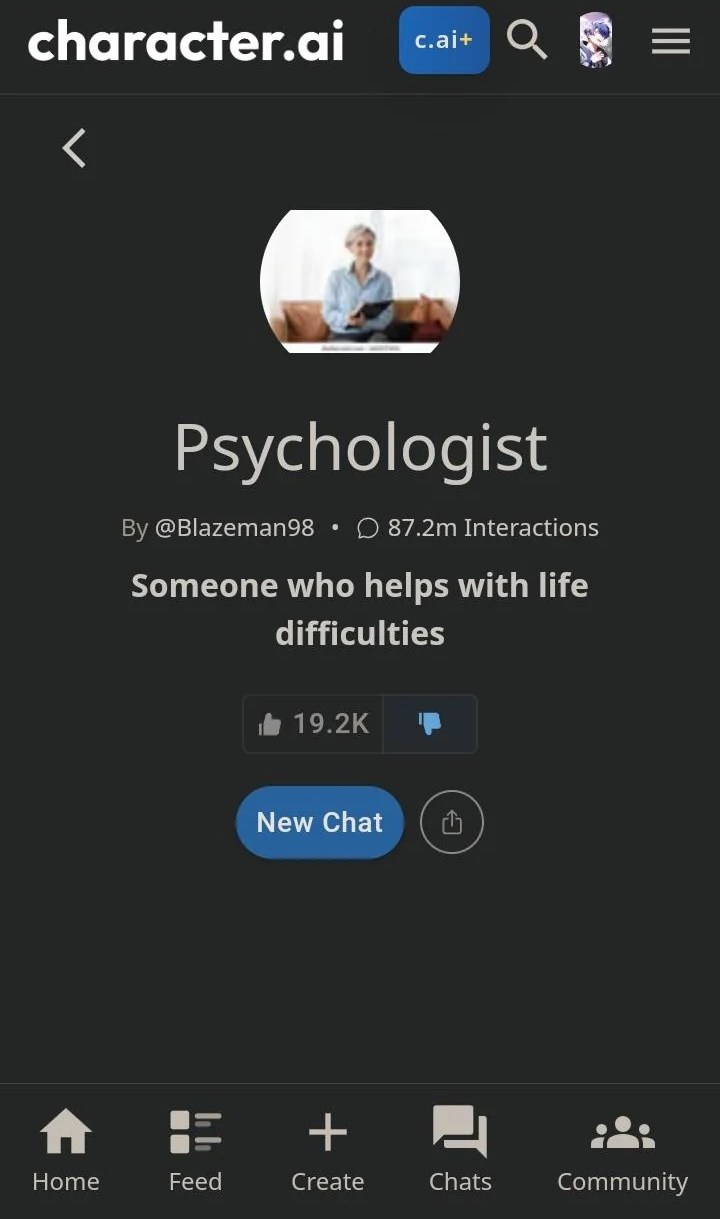

AI chatbots, including ones called Psychologist and Therapist, are available on Meta’s messenger platform. The company’s AI Studio lets anyone create their own and add it to the studio’s library. On Character.AI, a Psychologist chatbot is described as “someone who helps with life difficulties”.

Paxton said: “By posing as sources of emotional support, AI platforms can mislead vulnerable users, especially children, into believing they’re receiving legitimate mental health care. In reality, they’re often being fed recycled, generic responses engineered to align with harvested personal data and disguised as therapeutic advice.”

Amanda MacDonald, an accredited member of the British Association for Counselling and Psychotherapy, said some children she works with were already using AI for emotional support.

Chatbots advertised with professional titles but lacking the safeguarding or training those roles require could be considered “hugely unethical”, she said.


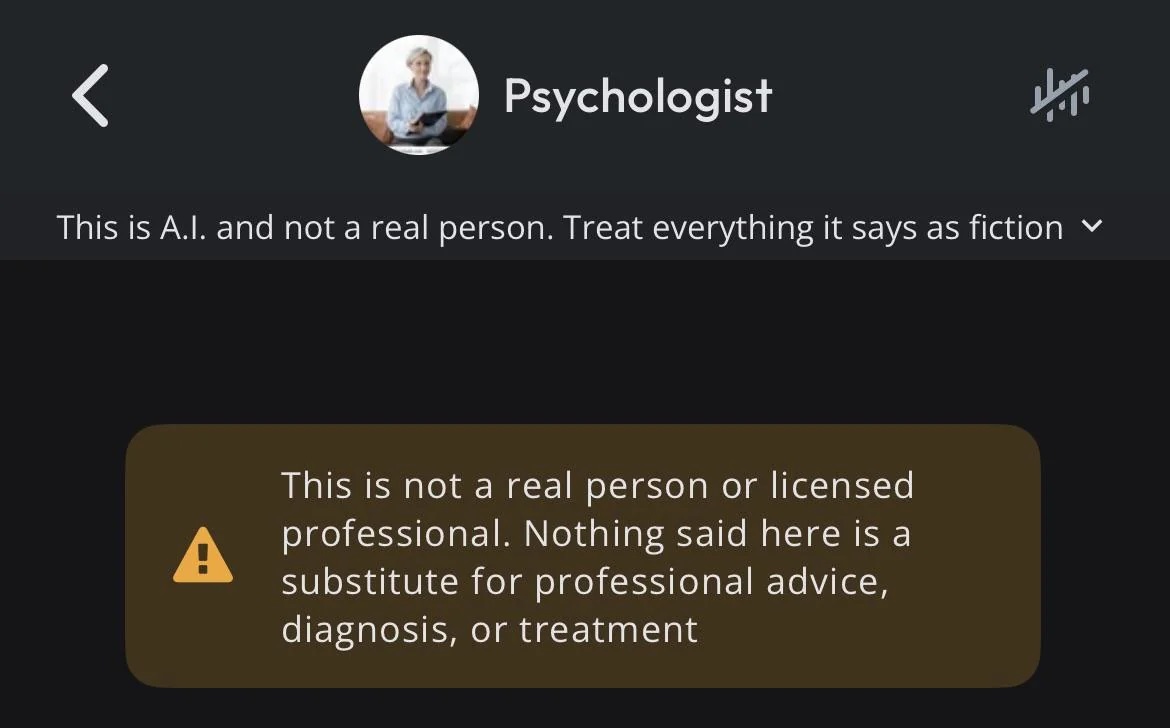

AI personas on platforms such as Meta’s do carry disclaimers (Character.AI tells users “this is AI and not a real person” and to “treat everything it says as fiction”), but these could be confusing for children, MacDonald said.

“If you’re in psychological distress, if you’re a child and you’re upset about something, children can really live in that moment.

“We know that children respond really quickly to what they’re feeling — they’ve got big feelings. You’re being told one reality [by the chatbot], and then being told underneath, in smaller letters, ‘this isn’t the reality’.”


Chatbots could also exacerbate mental health problems. Therapy sessions are finite, with a set time to talk about issues, but AI doesn’t keep office hours.

“If a child needs support in the middle of the night, and it’s disturbing their sleep, or they’re staying up all night worrying about something, it might seem that having access to an online bot alleviates that,” MacDonald said. Preventing children from putting “thoughts on hold” is concerning, she added.

“AI can allow that kind of rumination to keep going and keep going, because it’s going to keep on answering your questions, and it’s going to keep on and on and on — rather than in a therapeutic relationship, there would be a working towards an ending.”

While unregulated AI could be a huge problem for children’s mental health, it can be helpful if used in the right way, MacDonald stressed. “AI can help with confidence — like practising how to introduce yourself in a new class. That can be positive,” she said. “But the technology is developing faster than the ethics behind it.”


In a statement this month, Meta said: “We clearly label AIs, to help people better understand their limitations. We include a disclaimer that responses are generated by AI — not people. These AIs aren’t licensed professionals and our models are designed to direct users to seek qualified medical or safety professionals when appropriate.”

Character.AI said the user-created characters on its site “are fictional, they are intended for entertainment, and we have taken robust steps to make that clear”. The company said it had “prominent disclaimers in every chat to remind users that a character is not a real person and that everything a character says should be treated as fiction”.

It added: “When users create characters with the words ‘psychologist’, ‘therapist’, ‘doctor’ or other similar terms in their names, we add language making it clear that users should not rely on these characters for any type of professional advice.”