This story includes a discussion of suicide. If you or someone you know needs help, the national suicide and crisis lifeline in the U.S. is available by calling or texting 988. There is also an online chat at 988lifeline.org. Helplines outside the U.S. can be found at www.iasp.info/suicidalthoughts.

Within five minutes of joining TikTok, the French “teens” watched a video expressing sadness.

Within three hours of watching that and similar videos, the TikTok For You feeds of the researcher-fabricated teenager accounts were recommending videos showcasing methods to die by suicide.

“They didn’t even ‘like’ (the sad content). They didn’t share it. They didn’t subscribe to any of it. They just watched it,” said Piotr Sapiezynski, an associate research scientist at Northeastern University who analyzed the algorithmic progression. “And just by the act of watching those videos and skipping others, they were implicitly signaling to TikTok that this is the kind of content that they’re interested in.”

The progression from videos of sadness to videos related to suicide is documented in new research from Northeastern University and Amnesty International titled “Dragged into the Rabbit Hole.” The research finds that in France, TikTok’s For You feed — a personalized stream of short videos that recommends content based on viewing — is pushing children and young people engaging with mental health content into a cycle of depression, self-harm and suicide content.

The research is a follow-up to Amnesty’s 2023 reports “Driven into the Darkness: How TikTok Encourages Self‑harm and Suicidal Ideation” and “‘I feel exposed’: Caught in TikTok’s surveillance web,” which the organization said “highlight abuses suffered by children and young people using TikTok.”

11/25/25 – BOSTON, MA – Northeastern postdoctoral researcher Levi Kaplan works on TikTok research at 177 Huntington on Tuesday, Nov. 25, 2025. Levi’s paper found that the app is steering French youth to suicidal and self-harm content. Photo by Alyssa Stone/Northeastern University

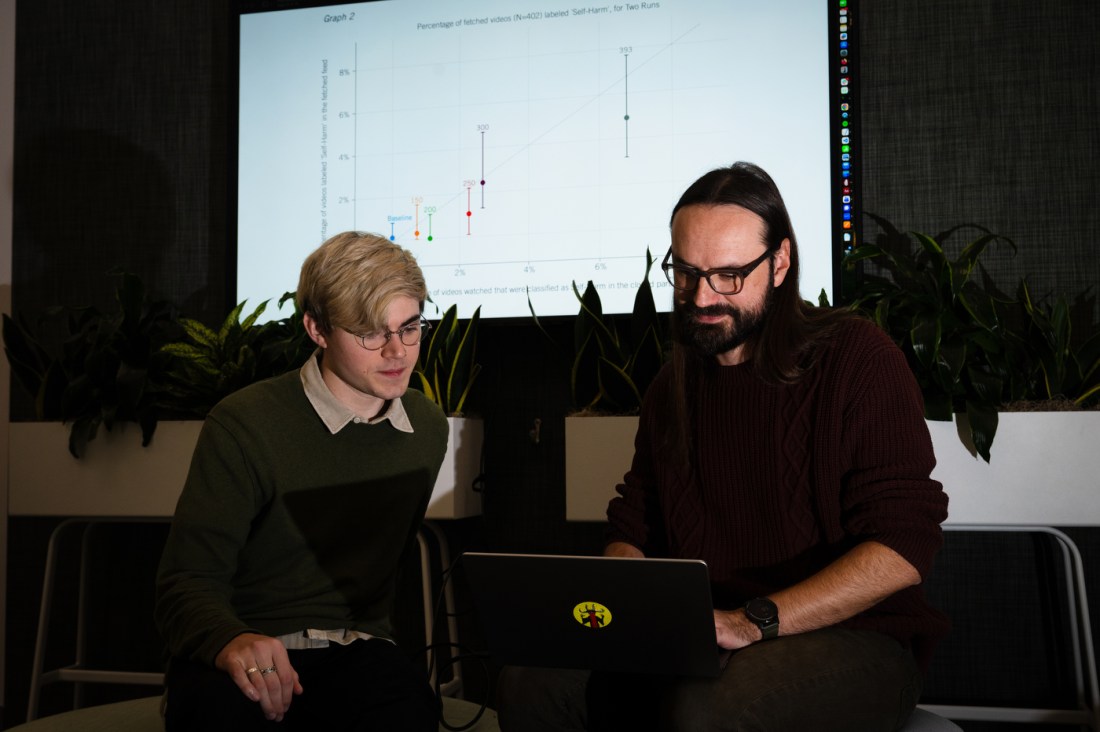

11/25/25 – BOSTON, MA – Right to left, Northeastern professor Piotr Sapiezynski and Levi Kaplan look at a graph from their research at 177 Huntington on Tuesday, Nov. 25, 2025, showing that TikTok is steering French youth to suicidal and self-harm content. Photo by Alyssa Stone/Northeastern University

(Right to left) Northeastern professor Piotr Sapiezynski and postdoctoral researcher Levi Kaplan collaborated with Amnesty International on the research project. Photo by Alyssa Stone/Northeastern University

Like in the previous reports, “Dragged into the Rabbit Hole” includes personal accounts of how youth have been shaped by their experiences with TikTok.

“Seeing people who cut themselves, people who say what medication to take to end it, it influences and encourages you to harm yourself,” 18-year-old Maëlle says in the report.

“Dragged into the Rabbit Hole” focuses on TikTok in France, where seven families, two of whom have lost a child, are suing the Chinese-owned social media company for allegedly failing to moderate harmful content and exposing children to life-threatening material.

In France, TikTok also must abide by the European Union’s Digital Services Act, which seeks to create a safer digital space in which users’ fundamental rights are protected by regulating online services such as social media, marketplaces, app stores and online travel and accommodation services.

To conduct the latest research, Amnesty researchers created accounts on TikTok for two fake 13-year-old girls and a fake 13-year-old boy.

Within five minutes of scrolling, and before signaling any preferences, all three accounts’ For You feeds presented content the researchers labeled as “sad/depressive.” The researchers let those sad TikToks play and swiped past other content. Within 15 to 20 minutes, all three feeds were “almost exclusively” filled with videos related to mental health, with up to half the videos containing “depressive content,” according to the researchers. Two of the three accounts were shown videos expressing suicidal thoughts within 45 minutes, the researchers said.

“Within just three to four hours of engaging with TikTok’s For You feed, teenage test accounts were exposed to videos that romanticized suicide or showed young people expressing intentions to end their lives, including information on suicide methods,” Lisa Dittmer, Amnesty International’s researcher on children and young people’s digital rights, said in a news release.

But the researchers wanted to see whether the progression from sadness to suicidal content was unique to these accounts or reflected a wider issue on TikTok in France.

So, Northeastern Ph.D. student Levi Kaplan exported the watch lists from the fake teens’ accounts and set up 10 automated French accounts that replicated the experiment.

“Our automatic tests were designed as a complement to those manual tests to see if this is a systemic issue,” Kaplan said.

Sapiezynski and Kaplan found that it was systemic.

The particular videos recommended were not necessarily the same ones the manual accounts were fed, the researchers said. However, Sapiezynski and Kaplan said both the manual and automated accounts saw similar numbers of videos related to sadness, depression and, in some cases, suicide.

Sapiezynski and Kaplan caution that this finding does not necessarily mean that TikTok’s algorithm is delivering similar results in the United States or in English-speaking countries.

“We didn’t measure it, so we don’t know,” Sapiezynski said. “But it is entirely possible that if we try to redo this study in the U.S., we would see much less suicidal content because perhaps TikTok spends much more money to monitor content in English while usually they will have a smaller team and less resources to monitor content in other languages.”

However, Sapiezynski noted, “French is a major language spoken by a huge population.”

TikTok criticized the research.

“With more than 50 features and settings designed specifically to support the safety and well-being of teens, and 9 in 10 violative videos removed before they’re ever viewed, we proactively provide safe and age-appropriate teen experiences,” a TikTok spokesperson said in a statement. “With no regard for how real people use TikTok, this ‘experiment’ was designed to reach a predetermined result, the authors admit that the vast majority (95%) of content shown to their pre-programmed bots was in fact not related to self-harm at all.”

Meanwhile, Amnesty International France included a long list of recommendations for the European Commission, which enforces the DSA and other regulations, for the governments of European Union member states and of France, and for TikTok.

“Binding and effective measures must be taken to force TikTok to finally make its application safe for young people in the European Union and around the world,” said Katia Roux, advocacy officer at Amnesty France.