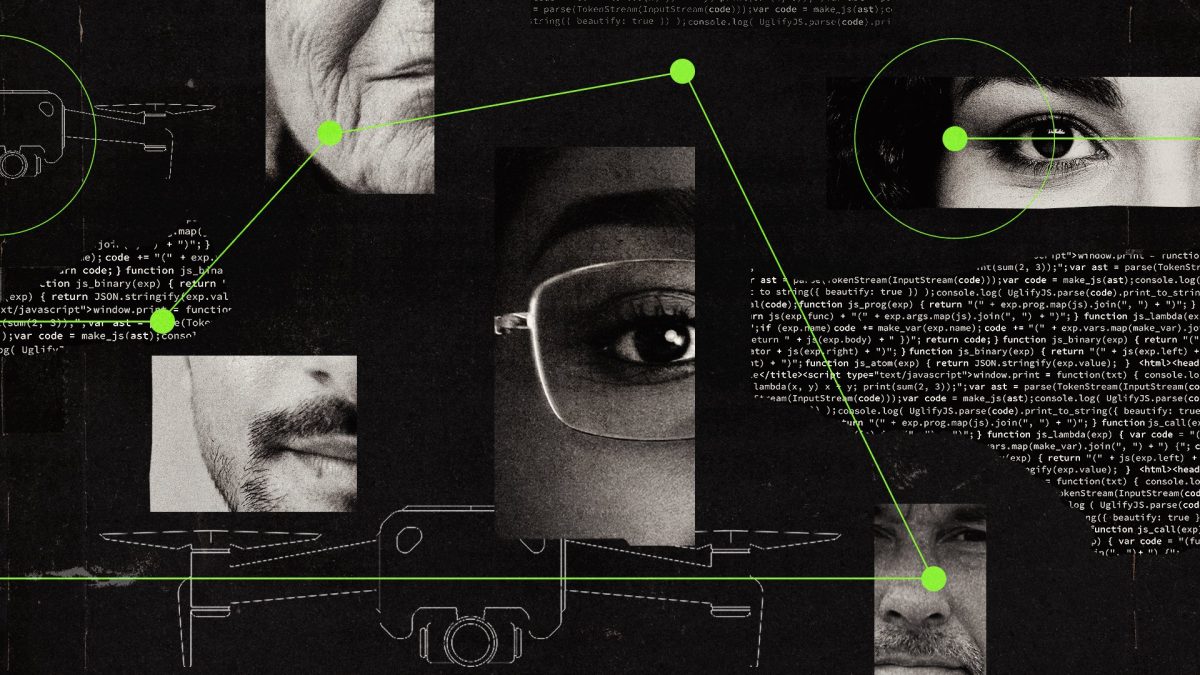

Large language models like ChatGPT could soon drastically lower the informational barriers for planning and executing biological attacks

The use of artificial intelligence to enhance biological weapons could destroy the human race in a worst-case scenario, and governments must do more to counter the threat, a former top general has warned.

Gen Sir Richard Barrons, who co-authored a root-and-branch review of Britain’s defence capabilities, said “scary” advances in AI could help hostile states or actors more easily develop pathogens that could even target people according to their characteristics like race or sex.

Barrons also warned that drones, which are becoming the hallmark of 21st-century warfare, could be used to deliver bioweapons to enemy populations in a future conflict.

Biological weapons have existed for centuries, although their use in conflict has been outlawed by international treaties for 100 years.

Rogue states or terrorists could use AI to streamline the development of bioweapons, some military experts fear.

But scientists with expertise in biological warfare and security said that while the potential “ceiling of harm” from such weapons was high, their use to devastating effect was still unlikely and remained a far-off prospect, due to the uncontrollable nature of the agents and the risk of self-harm to perpetrators.

However, there is a consensus that governments and the AI industry need to be better prepared to stop a nightmare scenario at some point in the future.

Popular large language models (LLMs), such as ChatGPT, “could soon drastically lower the informational barriers for planning and executing biological attacks”, by inadvertently “helping novices develop and acquire bioweapons by providing critical information and step-by-step guidance”, a report by the authoritative Center for Strategic and International Studies (CSIS) warned earlier this year.

OpenAI, which owns ChatGPT, and other tech firms have stepped up their safeguards in response to the threat, the CSIS report found. The i Paper has approached the tech firms for further comment.

Assessments from leading AI labs “demonstrate that LLMs are rapidly approaching or even exceeding critical security thresholds for providing users key bioweapons development information – with some models already demonstrating capabilities that surpass expert-level knowledge”, the report said.

It added: “While some of the leading companies are voluntarily imposing safeguards, the overall trajectory nevertheless points toward a near-term future in which policymakers must confront bioterrorism risks not just from sophisticated state and terrorist organisations, but potentially from individuals with little technical background but access to popular LLMs.”

‘Pandemic-scale pathogens’

Future AI biological design tools, or BDTs, could “assist actors in developing more harmful or even novel epidemic or pandemic-scale pathogens”, which could evade existing safeguards, the report also found.

Under one scenario raised by the CSIS report, an existing pathogen such as a strain of avian influenza – which has a high fatality rate in humans but cannot currently transmit between people, meaning it is less of a threat – could be adapted to spread more easily.

A safety assessment of Deep Research, one of OpenAI’s advanced AI capabilities, published in February 2025 and cited in the report, found that its models “are on the cusp of being able to meaningfully help novices create known biological threats”.

OpenAI said at the time it expected “current trends of rapidly increasing [biological] capability to continue, and for models to cross this [high-risk] threshold in the near future” – meaning that the models could potentially allow misuse.

In preparation, it said, the company is “intensifying our investments in safeguards”.

Another biological design tool cited in the CSIS report, Evo 2 from AI chip designer Nvidia, has been trained on 128,000 genomes from humans, animals, plants, bacteria and some viruses, and allows scientists to identify novel patterns between different DNA sequences.

The research model has already identified, with 90 per cent accuracy, whether different mutations of a breast cancer-related gene are benign or not.

‘We need to think about our existence’

Barrons told The i Paper that great progress was being made in medicine using AI to tailor diagnosis and treatment for individuals.

But he added: “The most terrifying thing is the same technology that makes individually targeted medicines could make individually or targeted pathogens.

“And one scary side of that is a state does it – so a bio-war. So essentially, this pathogen targets a characteristic of the people they don’t like.

“And that might be what you can imagine – it can be race, it can be colour, whatever that’s horrible.

“Or scary, scary AI, which drives these things for whatever reason, produces and releases a pathogen that’s designed to kill all humans, and to which there is no antidote, because, in its own sweet way, the AI has worked out we control the off button.

“None of that is imminently likely, but what it does say is … we need to think about the risk to our security – indeed – our existence.”

Covid-19 ‘would look like the common cold’

Indeed, the CSIS report warned “if BDTs such as Evo 2 can generate good outputs, then presumably more capable future tools could create bad ones, too”.

It continued: “Imagine, for instance, bird flu: Some strains have a reported human mortality rate of more than 50 per cent, meaning that approximately one out of every two people that contract the disease will die.

“There are other versions of flu that are not as lethal but are more contagious. Someone who contracts the more common seasonal flu, for instance, will spread it to 1-2 other people on average.

“Malicious actors, however, might use a more advanced BDT with generative capabilities to create a new version of bird flu that is both highly lethal and highly contagious.

“Such a scenario would likely make the Covid-19 pandemic – which has claimed more than 27 million lives and shaken the world economy – look like the common cold.”

Threat is ‘marginal’ but ‘high ceiling of harm’

However, experts have urged caution over what might be possible in the near future.

Caleb Withers, research associate for the Technology and National Security Programme at the Center for a New American Security, said: “There’s not some distinct class of AI-enabled biological weapons that you might see – ultimately, DNA is DNA and RNA is RNA.

“And so it’s more about how people get to discovering or weaponising certain agents. And the caveat is that largely most of what I’m describing is in the future tense here, and I don’t think there’s necessarily an acute threat.”

AI models are “increasingly useful at helping people be more effective at various tasks”, which also applies to biological science, Withers said, but this was “marginal rather than transformative at the moment”.

He added: “The concern here is that if you consider the task of trying to develop a bioweapon … you can better predict or model what changes to organisms might lead to these effects.

“That’s obviously going to potentially result in people being able to more effectively make more dangerous biological agents.”

He added: “At the limit, bioweapons can be pretty devastating.

“The ceiling of harm is pretty high. The caveat there is that it’s not that straightforward to just discover and develop such a biological agent.

“The current effect of AI is pretty marginal, but AI is getting better rapidly, faster at relevant tasks.”

Withers added it could pose a “pretty acute challenge” over the next few decades.

“I wouldn’t want to overstate the current threat. But I wouldn’t want to understate how fast AI is improving or understate how much damage could be caused at the limit, through pathogens, and… the importance of preparedness for this sort of thing.

“And so I think it just behoves thoughtful monitoring by governments about how fast are the relevant capabilities actually developing… it’s about having well-resourced coordinated efforts to stay safe.”

AI could help bad actors ‘hide’ activity

Dr Alexander Ghionis, research fellow in chemical and biological security at the University of Sussex Business School, said AI does not “design” a biological weapon in a “single decisive step” and would always require some form of human involvement that is labour-intensive and involves compromises.

He said: “The familiar idea of AI enabling population-specific or geographically targeted biological weapons is misleading: even if such science were available – and it is not – someone would still have to solve all the labour-intensive problems in between.”

AI can, however, accelerate certain forms of labour, including straightforward scientific tasks such as finding papers, drafting protocols and troubleshooting, as well as non-scientific tasks such as planning, procurement and logistics.

It could also help actors “hide” their activity, which could make oversight by industry or governments more challenging, Ghionis said.

“The genuine effects of AI tend to sit around the edges – in how actors imagine, plan, troubleshoot, coordinate, conceal, or experiment – rather than in any radical change to what biology allows.

“AI can reshape parts of the labour of harm – altering speed, sequencing, and perceived feasibility – but it never removes the biological, organisational, or practical constraints that make these weapons so difficult to acquire and use, whether the intended effects are lethal or merely incapacitating.

“The biological danger itself does not change because AI is involved. Biological agents remain what they are: fragile, unpredictable, and constrained by the environments in which they are expected to thrive.

“AI does not suddenly unlock new categories of pathogens, nor does it make biology behave with the precision that is sometimes imagined.”

Risks outweigh benefits for hostile actors

Ghionis said that there were still many risks for anyone wanting to develop a bioweapon, and while AI might streamline certain things in the process, biological agents were still difficult to control.

He added: “The moment an actor cannot reliably predict who will be affected, for how long, and under what conditions, the strategic value of an incapacitating agent quickly falls away. And nothing about AI resolves the fragility or unpredictability that undercuts biological controllability…

“Once you look closely at intent and utility, the number of actors for whom an uncontrollable, highly lethal biological agent makes strategic sense is very small.

“The risks – blowback, loss of control, political isolation, economic self-harm – far outweigh any plausible benefit for most states, and for many non-state groups as well.

“Even incapacitating agents, which seem more realistic on paper, quickly run into problems of controllability and reliability when you try to imagine their use in real-world conditions.”

In fact, the greatest biological risks the human race faces still come from natural outbreaks rather than engineered ones, Ghionis said.

“And the measures that protect societies from natural threats – strong surveillance, resilient health systems, capable laboratories, well-trained first responders, and effective coordination across agencies – also form the best defence against deliberate misuse.

“A biological weapon becomes far less attractive if its expected effects can be blunted or rapidly contained.

“So I would say the UK is moving in a sensible direction, but sustained investment is essential: in local and national health resilience, in scientific capacity, in international engagement, and in the steady diplomatic work that reinforces the norm against biological weapons.”

Sir Keir Starmer’s government published a new UK Biological Security Strategy in 2025 which acknowledged that “rapid developments in AI-enabled scientific tools and engineering biology offer significant opportunities for drug discovery and vaccine development, but also have the potential to introduce new risks of misuse”.

The government has set up a review to evaluate “how advanced AI could assist chemical and biological misuse, and worked with companies to address risks and develop their own thresholds”.