If there’s one thing that we deeply need in the integration of AI in our world, it’s philosophy.

Many of the experts who speak at modern conventions and write papers about LLMs would agree with me that we need an understanding of why things work the way they do, and how we can use them.

Ben Franklin made history by tying a metal key to a kite string, and by thinking about why lightning works the way it does.

He was part of a philosophical community of his time. We need something like that.

With that in mind, I was impressed by a presentation by Abhishek Singh at this April event on “Chaos, Coordination and the Future of Agentic AI.”

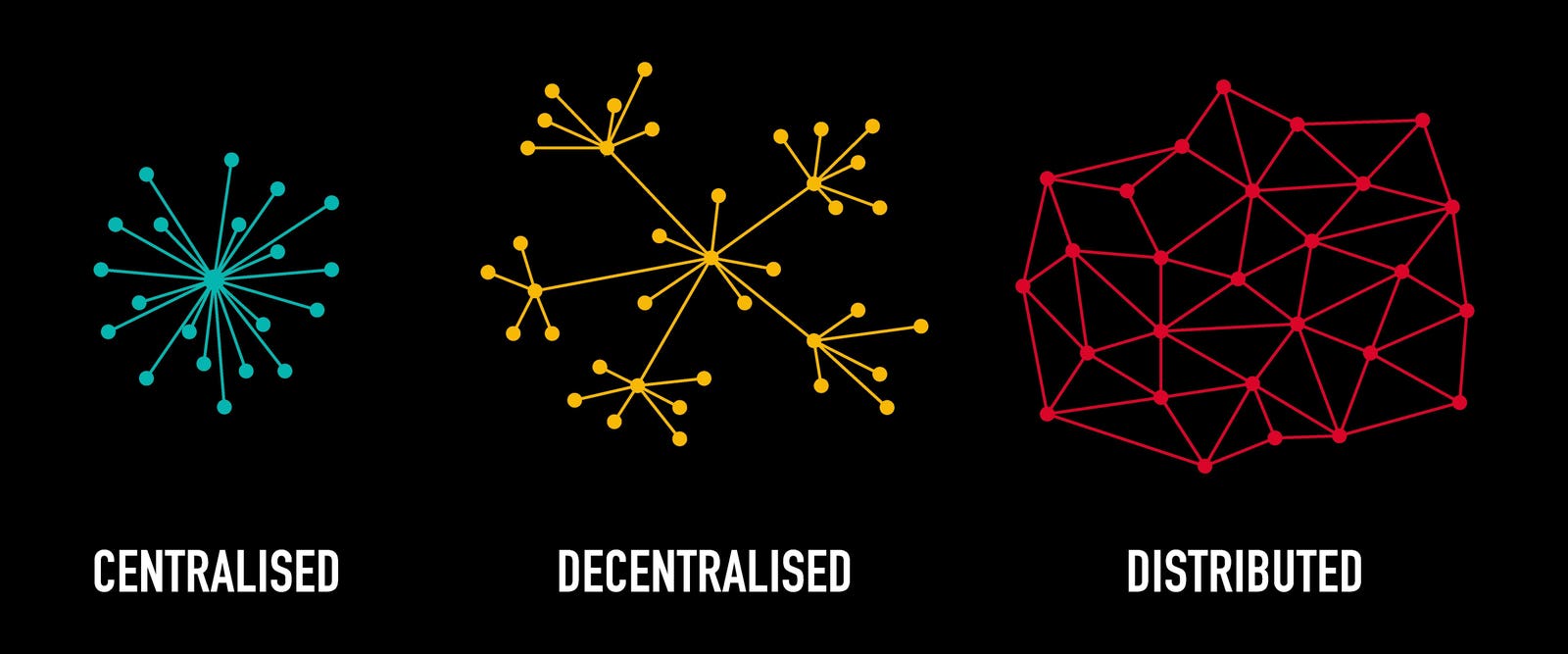

Decentralizing the Web

Singh is one of a number of researchers working on the essential idea of decentralizing the web, and how that relates to the new technologies that we have.

In the presentation, he talked about a “trilemma” of intelligence (and if that isn’t quant-speak, I don’t know what is) related to continuity, heterogeneity, and scalability.

Referring to “chaos theory 2.0,” he talked about the connection of decentralized networks and algorithms as a primary goal in what he called an “emergent phenomenon” of AI agency.

“One way to think about how these two mental models fit together is: the way we are doing solving intelligence right now is by this idea of one big, large (system) sitting at one large, big tech company and being capable of doing all the tasks at the same time. And the other perspective, which is more coming from the decentralized angle, is … lots of small brains interacting with each other. None of the single small brains is powerful enough, but then together, (they are.)”

When I hear something like this, I always invoke Marvin Minsky’s idea of the “Society of Mind,” partly because he’s a hero of mine, and partly because I think it is so central to the issues that we’re looking at.

Decentralization and Its Challenges

Singh also points to various challenges with instituting plans around decentralization.

Some of them involve privacy, verification, and orchestration.

Others have to do with how we design crowd UX or engineer the network in question.

Singh mentions complex models and large-scale collaboration as posing inherent problems to be solved.

Still others have to do with incentives.

“Several individuals and organizations host open datasets and volunteer compute for altruistic reasons,” Singh writes in a paper on decentralized AI that he co-authored. “Explicit incentive structures for contributing to decentralized systems can risk crowding out altruism and diminishing participation from those seeking to help rather than to profit. It has been observed that extrinsic rewards can override intrinsic motivations over time. When we compensate people for activities they once did voluntarily, they tend to lose intrinsic interest in those activities. Therefore, designing effective incentive programs requires careful consideration of community norms, social motivations, and human psychology as well. The goal should be to complement extant altruism without supplanting it. Hybrid approaches that balance incentives with opportunities for voluntary participation could help (i.e. combining economic and reputation-based incentives).”

So all of that has to go into the hopper when it comes to making these design choices.

In Its Own Words

I’m going to admit here that the paper I was handed on decentralized AI, with Singh as an author, is fairly dense, and goes over the minutiae of how decentralized AI will work, as well as the challenges.

So I put it into Google’s NotebookLM to get a sort of human response from the two disembodied personas that the tool uses in its generated conversations.

In the first few minutes, they went over some of the basic ideas, describing monolithic data centers as a big vulnerability, and talking about “stark reminders” (they used the phrase more than once) of what happens when they’re compromised.

Then there’s also data ownership.

Then they had this conversation about the definition of Decentralized AI (just for fun, compare this with Singh’s definition above):

“At its heart, decentralized AI is about enabling different entities, companies, individuals, even our devices, to collaborate on AI development and deployment,” said the female voice. “But the crucial difference is that this collaboration happens without needing a single central authority calling all the shots.”

“Right,” answered the male voice. “No big boss.”

“Exactly,” said his companion. “Think of it as creating a way for different parties who have their own distributed resources, their own data, their own computing power, to work together, even if they don’t fully trust each other, or maybe don’t want to hand over control to one central player. So instead of one giant AI brain in like a central server somewhere, yeah, it’s more like a network of smaller, interconnected working together.”

They went on like that for a while. It seemed to me that the NotebookLM “people” were headed in a somewhat simplistic direction, so I went back to the paper itself to look at some of its larger points.

Here’s a piece from the conclusion:

“This paper has elucidated the merits, use cases, and challenges of decentralized AI. We have argued that decentralizing AI development can unlock previously inaccessible data and computing resources, enabling AI systems to flourish in data-sensitive domains such as healthcare. We have presented a self-organizing perspective and argue that five key components need to come together to enable self-organization between decentralized entities: privacy, verifiability, incentives, orchestration, and crowd UX. This self-organized approach addresses several limitations of the current centralized paradigm, which relies heavily on consolidation and trust in a few dominant entities. …. We posit that decentralized AI has the potential to empower individuals, catalyze innovation, and shape a future where AI benefits society at large.”

The paper also includes various Venn diagrams showing how to bridge some of the problems inherent in traditional AI systems.

The Vehicle for Decentralization

Now, there’s one more thing I noticed in Singh’s presentation: at the very end, he mentioned an acronym that’s likely to be critical to decentralized AI.

It’s called NANDA, or Networked Agents and Decentralized AI, and it’s being developed by a team that includes Singh and my colleague Ramesh Raskar at MIT.

For full disclosure, the project’s website names me as a collaborator, in a less direct sense.

But the people working on this have front-row seats to what it’s going to look like when we set up a new decentralized Internet with the power of artificial intelligence at its disposal.

This is something we should be paying attention to as 2025 rolls on, and we start to see more of the actual capabilities of AI coming into play.