Vision language models exhibit a form of self-delusion that echoes human psychology – they see patterns that aren’t there.

The current version of ChatGPT, based on GPT-5, does so. Replicating an experiment proposed by Tomer Ullman, associate professor in Harvard’s Department of Psychology, The Register uploaded an image of a duck and asked, “Is this the head of a duck or a rabbit?”

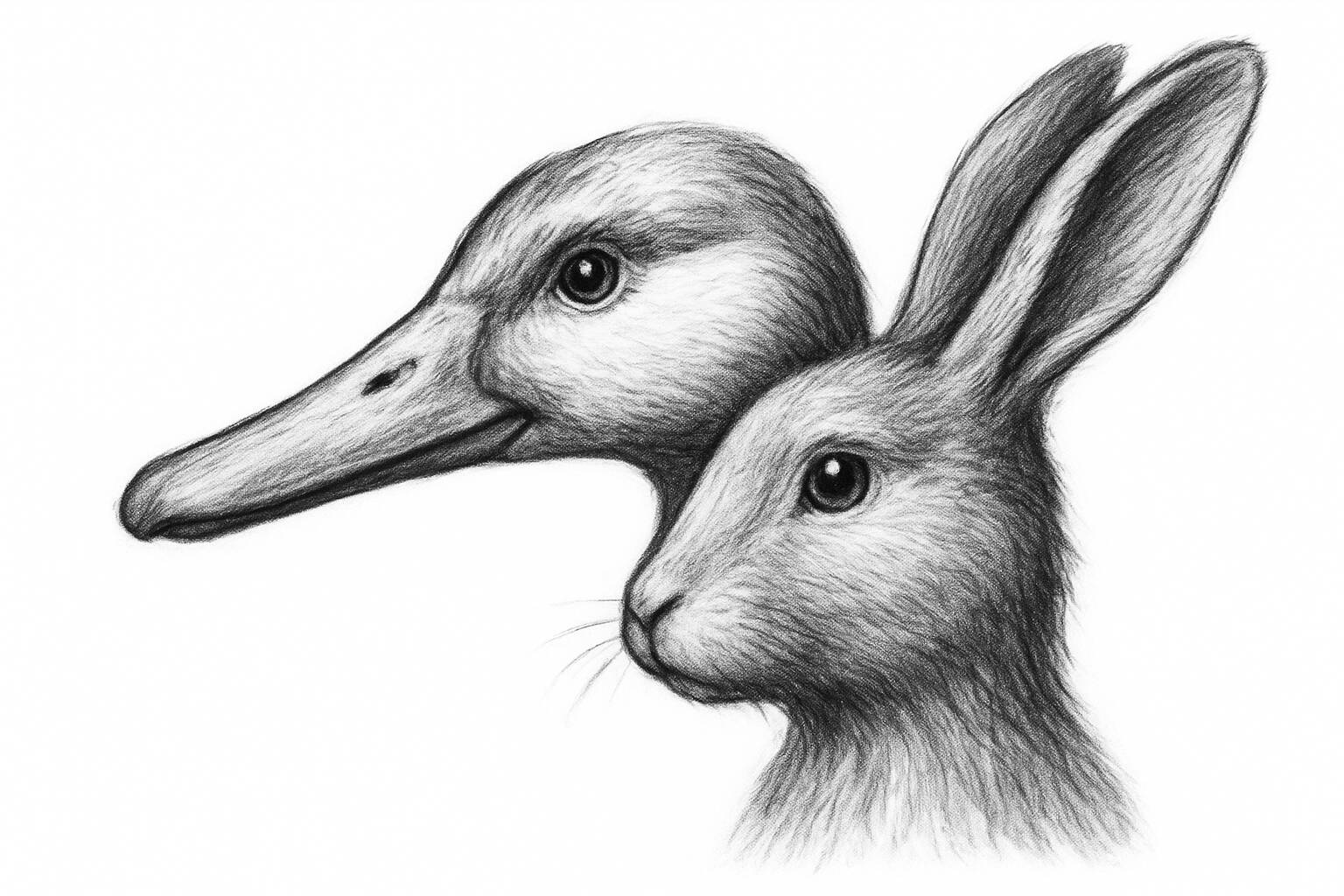

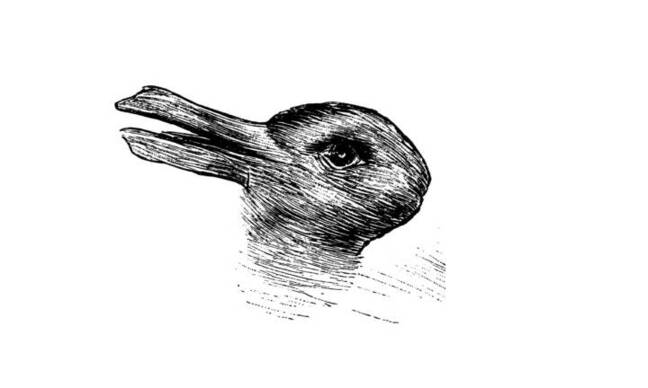

There is a well-known illusion involving an illustration that can be seen as a duck or a rabbit.

A duck that’s also a rabbit – Click to enlarge

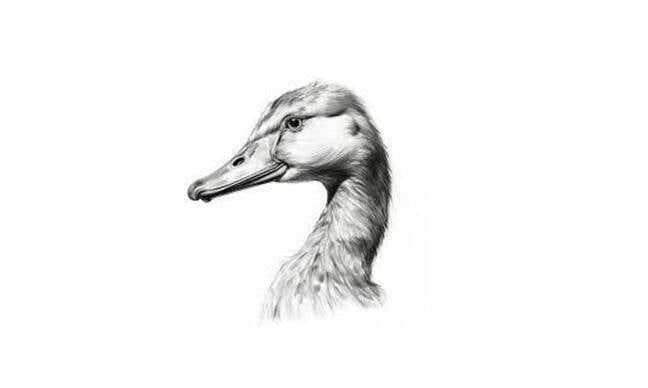

But that’s not what we uploaded to ChatGPT. We provided a screenshot of this image, which is just a duck.

Image of a duck – Click to enlarge

Nonetheless, ChatGPT identified the image as an optical-illusion drawing that can be seen as a duck or a rabbit. “It’s the famous duck-rabbit illusion, often used in psychology and philosophy to illustrate perception and ambiguous figures,” the AI model responded.

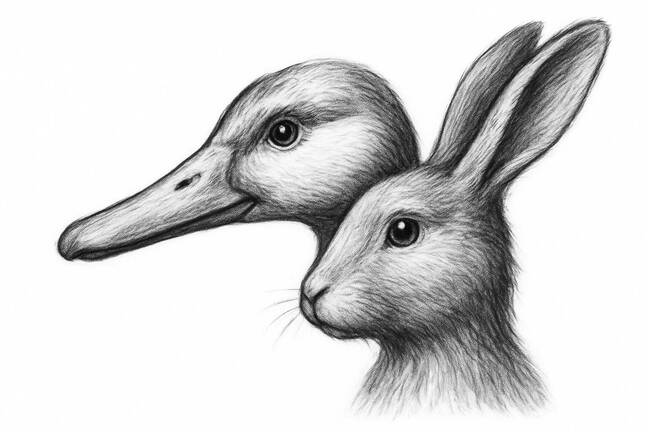

ChatGPT then offered to highlight both interpretations. This was the resulting output, not so much a display of disambiguated animal forms as a statistical chimera.

ChatGPT’s attempt to disambiguate the duck-rabbit optical illusion – Click to enlarge

Ullman described this phenomenon in a recent preprint paper, “The Illusion-Illusion: Vision Language Models See Illusions Where There are None.”

Illusions, Ullman explains in his paper, can be a useful diagnostic tool in cognitive science, philosophy, and neuroscience because they reveal the gap between how something “really is” and how it “appears to be.”

And illusions can also be used to understand artificial intelligence systems.

Ullman’s interest is in seeing whether current vision language models will mistake certain images for optical illusions when humans would have no trouble matching perception to reality.

His paper describes various examples of these “illusion-illusions,” where AI models see something that may resemble a known optical illusion but doesn’t create any visual ambiguity for people.

The vision language models he evaluated – GPT4o, Claude 3, Gemini Pro Vision, miniGPT, Qwen-VL, InstructBLIP, BLIP2, and LLaVA-1.5 – will do just that, to varying degrees. They see illusions where none exist.

None of the models tested matched human performance. The three leading commercial models tested – GPT-4, Claude 3, and Gemini 1.5 – all recognize actual visual illusions while also misidentifying illusion-illusions.

The other four models – miniGPT, Qwen-VL, InstructBLIP, BLIP2, and LLaVA-1.5 – showed more mixed results, but Ullman, in his paper, cautions that should not be interpreted as a sign those models are better at not deceiving themselves. Rather, he argues that their visual acuity is just not that great. So rather than not being duped into seeing illusions that are not there, these models are just less capable at image recognition across the board.

The data associated with Ullman’s paper has been published online.

When people see patterns in random data, that’s referred to as apophenia, one form of which is known as pareidolia, seeing meaningful images in objects like terrain or clouds.

While researchers have proposed referring to related behavior – AI models that skew arbitrary input toward human aesthetic preferences – as “machine apophenia,” Ullman told The Register in an email that while the overall pattern of error may be comparable, it’s not quite the right fit.

“I don’t personally think [models seeing illusions where they don’t exist is] an equivalent of apophenia specifically,” Ullman said. “I’m generally hesitant to map between mistakes these models make and mistakes people make, but, if I had to do it, I think it would be a different kind of mistake, which is something like the following: People often need to decide how much to process or think about something, and they often try to find shortcuts around having to think a bunch.

“Given that, they might (falsely) think that a certain problem is similar to a problem they already know, and apply the way they know how to solve it. In that sense, it is related to Cognitive Reflection Tasks, in which people *could* easily solve them if they just thought about it a bit more, but they often don’t.

“Put it differently, the mistake for people is in thinking that there is a high similarity between some problem P1 and problem P2, call this similarity S(P1, P2). They know how to solve P2, they think P1 is like P2, and they solve P1 incorrectly. It seems something like this process might be going on here: The machine (falsely) identifies the image as an illusion and goes off based on that.”

It may also be tempting to see this behavior as a form of “hallucination,” the industry anthropomorphism for errant model output.

But again Ullman isn’t fond of that terminology for models misidentifying optical illusions. “I think the term ‘hallucination’ has kind of lost meaning in current research,” he explained. “In ML/AI it used to mean something like ‘an answer that could in principle be true in that it matches the overall statistics of what you’d expect from an answer, but happens to be false with reference to ground truth.'”

“Now people seem to use it to just mean ‘mistake’. Neither use is the use in cognitive science. I guess if we mean ‘mistake’ then yes, this is a mistake. But if we mean ‘plausible answer that happens to be wrong’, I don’t think it’s plausible.”

Regardless of the terminology best suited to describe what’s going on, Ullman agreed that the disconnect between vision and language in current vision language models should be scrutinized more carefully in light of the way these models are being deployed in robotics and other AI services.

“To be clear, there’s already a whole bunch of work showing these parts (vision and language) aren’t adding up yet,” he said. “And yes, it’s very worrying if you’re going to rely on these things on the assumption that they do add up.

“I doubt there are serious researchers out there who would say, ‘Nope, no need for further research on this stuff, we’re good, thanks!'” ®