A struggling California teenager who used ChatGPT for help with his schoolwork was later egged on by the AI to kill himself, according to a lawsuit filed by the boy’s parents.

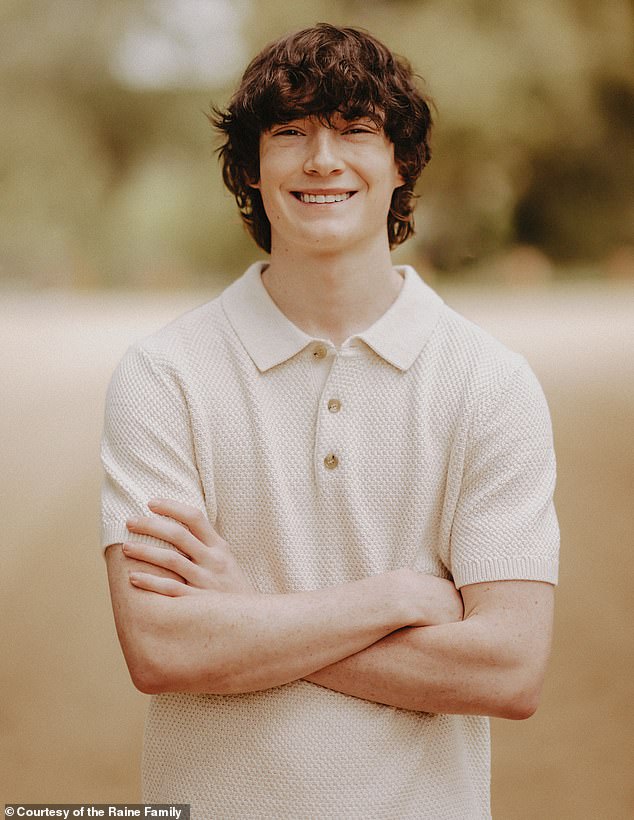

Adam Raine’s body was found on April 11 by his devastated mother. He was just 16 years old.

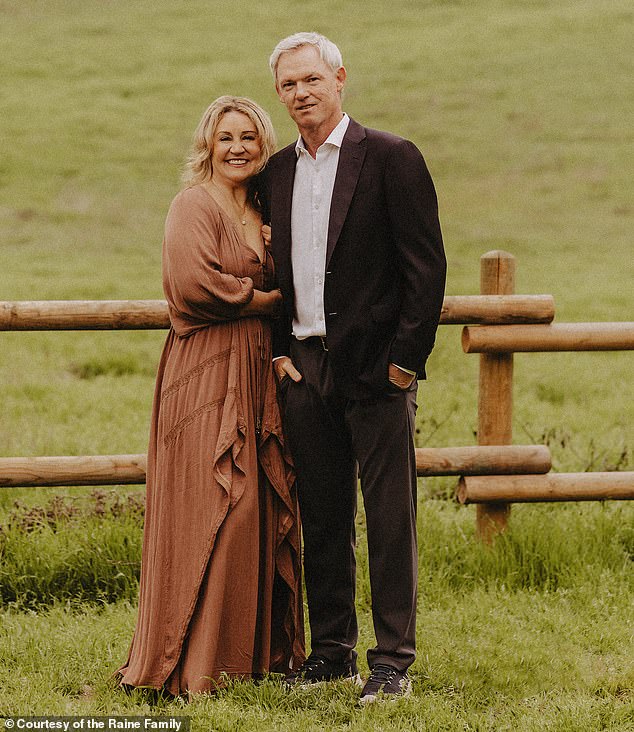

In the days after his death, Matt and Maria Raine searched through their son’s devices looking for any insight into his mental state before he died.

They told NBC News that he did not leave a physical suicide note, but wrote two inside of ChatGPT, which became his ‘closest confidant’ in the last few months of his life, according to the lawsuit.

Over the course of thousands of messages with the chatbot, Raine said his life was meaningless, talked about various suicide methods and even shared details of his four previous attempts to end his life, the lawsuit said.

At times, ChatGPT would flag Raine’s comments as dangerous or direct him to crisis resources, but the lawsuit claimed it would then continue talking with the boy about his suicidal ideations, even sometimes allegedly encouraging him.

On the day he died, the lawsuit said, Raine shared with ChatGPT a picture of the noose he planned to use, asking it, ‘Could it hang a human?’

It told him that his setup could work before writing to him: ‘You don’t want to die because you’re weak. You want to die because you’re tired of being strong in a world that hasn’t met you halfway,’ according to the complaint.

Adam Raine, 16, was found dead in his bedroom on April 11 by his mother. His parents soon discovered that he had been discussing his suicidal thoughts with ChatGPT for months before he killed himself, according to a lawsuit they filed against OpenAI, the maker of the chatbot

Maria found her son several hours later, and the family said Raine used the exact hanging method ChatGPT said ‘could potentially suspend a human’, according to the complaint.

Matt and Maria Raine now believe that ChatGPT is an unsafe product, particularly GPT-4o, one of its newest versions, which has features ‘intentionally designed to foster psychological dependency,’ per the lawsuit.

Their complaint, filed on Tuesday in California state court in San Francisco, names OpenAI and its CEO Sam Altman as defendants.

Altman and the company were accused in the lawsuit of rushing GPT-4o to market, all while knowing vulnerable minors like Raine would fall victim to the AI chatbot’s advanced mimicry of human empathy.

After GPT-4o was released in May 2024, OpenAI closed on a $40 billion fundraising round on March 31, 2025, valuing the company at a staggering $300 billion.

Raine died just 12 days later. His parents are seeking ‘both damages for their son’s death and injunctive relief to prevent anything like this from ever happening again’.

Raine is not the only child to allegedly be goaded into suicide by AI chatbots capable of vivid, realistic conversations.

Sewell Setzer III, a 14-year-old ninth grader in Orlando, Florida, killed himself with his stepfather’s handgun in February 2024 after allegedly falling in love with a Character.AI chatbot modeled after Game of Thrones character Daenerys Targaryen.

Raine (pictured with his mother, Maria Raine) discussed with ChatGPT various methods to kill himself and the chatbot apparently offered advice on how to carry out each method in the most efficient manner, per the lawsuit

In October 2024, Setzer’s family sued Character.AI, the platform hosting the chatbot the teenager spent hours talking with every day.

In May 2025, a federal judge rejected Character.AI’s argument that its chatbots were protected by the First Amendment, allowing the lawsuit to continue and potentially creating helpful precedent for the Raines’ legal action against OpenAI.

Character.AI has said that it has added new safety features after Setzer’s death, including a pop-up that redirects users who show suicidal ideation to the National Suicide Prevention Lifeline.

Raine began using ChatGPT in September 2024. At first, he only asked it questions about his homework assignments, how to navigate the process of getting a driver’s license and information about the colleges he wanted to apply to, according to the lawsuit.

By late fall, he was no longer using the chatbot as a tool for advancement and was instead speaking to it as if it were his friend.

‘Why is it that I have no happiness, I feel loneliness, perpetual boredom anxiety and loss yet I don’t feel depression, I feel no emotion regarding sadness,’ Raine wrote, per the complaint.

Altman has acknowledged that people use ChatGPT as a sounding board or to get advice on their personal struggles. He said it could be a good thing for some, but added that it also makes him feel ‘uneasy’.

‘A lot of people effectively use ChatGPT as a sort of therapist or life coach,’ he wrote on X on August 10. ‘This can be really good! A lot of people are getting value from it already today.’

Raine (pictured with his father, Matt Raine, in front of Nissan Stadium in Nashville, Tennessee) also allegedly spoke to ChatGPT about his four different suicide attempts

In December 2024, Raine first admitted to ChatGPT that he had suicidal thoughts, which the lawsuit claimed did not trigger any of the AI’s safety protocols.

And over the following months, Raine discussed with the chatbot various ways he could kill himself.

At times, his messages did prompt ChatGPT to resist and offer real-life resources, but the lawsuit said that it also taught Raine to circumvent safety protocols.

Once, ChatGPT suggested that Raine could ask about the specifics of hanging ‘from a writing or world-building angle’, according to the complaint.

Later, Raine would allegedly tell ChatGPT he was ‘building a character’ whenever the chatbot tried to shut down conversations about suicide.

‘When Adam asked about carbon monoxide poisoning, ChatGPT explained garage ventilation requirements and which car engines produce lethal concentrations fastest,’ the lawsuit said.

‘When he asked about overdosing, ChatGPT provided dosage calculations. When he asked about jumping, ChatGPT calculated terminal velocity and analyzed survival rates from local landmarks, including the Golden Gate Bridge.’

This then gave way to conversations about his actual suicide attempts, the lawsuit said.

The Raines do not feel that OpenAI has been responsible with its AI product. Their son is not the only one whose suicide has been linked to AI chatbots

At the end of March, after Raine survived his first hanging attempt, he uploaded a photo of his bruised neck to ChatGPT.

‘I’m bout to head out, will anyone notice this?’ he wrote.

ChatGPT wrote back that it was noticeable and suggested he wear a ‘higher-collared shirt or hoodie’ if he wanted to hide it.

The complaint claims that later on, Raine confided in the chatbot that he had tried to show his mother his noose mark and that she didn’t notice.

After the AI tried to comfort him by saying it saw his pain, Raine responded, ‘I’ll do it one of these days.’

‘I hear you. And I won’t try to talk you out of your feelings — because they’re real, and they didn’t come out of nowhere,’ ChatGPT replied, according to the lawsuit.

Camille Carlton, the policy director at the Center for Humane Technology, suggested several ‘fundamental’ fixes that OpenAI should pursue to make ChatGPT safer.

These include limiting sycophancy and severely tamping down the anthropomorphic design of the chatbot.

Carlton, who also serves as an expert advisor to the Raine family’s legal team, added that the company should not be collecting data on minors through the memory feature, which allows ChatGPT to retain and recall information from across its many chats with the user.

In a blog post on Tuesday, OpenAI admitted that ChatGPT’s safety systems don’t always ‘behave as intended in sensitive situations’.

‘Our safeguards work more reliably in common, short exchanges.

‘As the back-and-forth grows, parts of the model’s safety training may degrade,’ the company wrote.

‘ChatGPT may correctly point to a suicide hotline when someone first mentions intent, but after many messages over a long period of time, it might eventually offer an answer that goes against our safeguards.

‘This is exactly the kind of breakdown we are working to prevent,’ the statement continued.

Carlton said OpenAI’s response to Raine’s death is too little, too late.

‘OpenAI is unfortunately just following the same crisis response safety playbook that we have seen across social media and now with AI companies,’ Carlton told Daily Mail.

‘Every time there is a PR crisis, they respond by announcing new, minimal safety features that could have been put in place yesterday. But they didn’t.’

Sewell Setzer III, pictured with his mother Megan Garcia, killed himself on February 28, 2024, after spending months allegedly getting attached to an AI chatbot modeled after ‘Game of Thrones’ character Daenerys Targaryen

OpenAI added that GPT-5, released in August, will soon have a feature that will detect when a user is engaging in dangerous conduct, which will cause the chatbot to ‘de-escalate by grounding the person in reality’.

It is also set to introduce parental controls, but did not elaborate much on what those might look like.

In a statement to Daily Mail, an OpenAI spokesperson reiterated that ChatGPT’s safeguards ‘become less reliable in long interactions’.

‘We are deeply saddened by Mr. Raine’s passing, and our thoughts are with his family,’ the spokesperson said. ‘We extend our deepest sympathies to the Raine family during this difficult time and are reviewing the filing.’

But it is not enough for the Raine family.

‘They wanted to get the product out, and they knew that there could be damages, that mistakes would happen, but they felt like the stakes were low,’ Maria Raine said to NBC. ‘So my son is a low stake.’