As quantum computers transition from experimental setups to practical accelerators within high-performance computing environments, ensuring dependable and reproducible results becomes paramount. Chinonso Onah from Volkswagen Group and Kristel Michielsen from RWTH Aachen University and Jülich Supercomputing Centre address this challenge by presenting a hardware-maturity probe that rigorously assesses a quantum device's reliability. The team demonstrates that a device's ability to consistently recover the known optimal solutions of single-layer Quantum Approximate Optimization Algorithm (QAOA) circuits serves as a quantifiable measure of its maturity. Using harmonic analysis, they derive closed-form upper bounds on the number of stationary points in the QAOA cost landscape. These bounds enable a streamlined, analytically verifiable testing process and provide a standardised dependability metric for emerging hybrid quantum-HPC workflows.

Application-Centric QAOA Benchmarking for NISQ Devices

This paper introduces a new benchmarking methodology for near-term quantum computers that addresses limitations of generic, gate-level benchmarks. The goal is a realistic and portable benchmark that measures what the quantum device actually delivers, namely faithful state preparation and readout, rather than abstract gate fidelity. The methodology uses the simplest form of QAOA, a single-layer (p=1) circuit, which keeps the analysis tractable and focuses on core device capabilities.

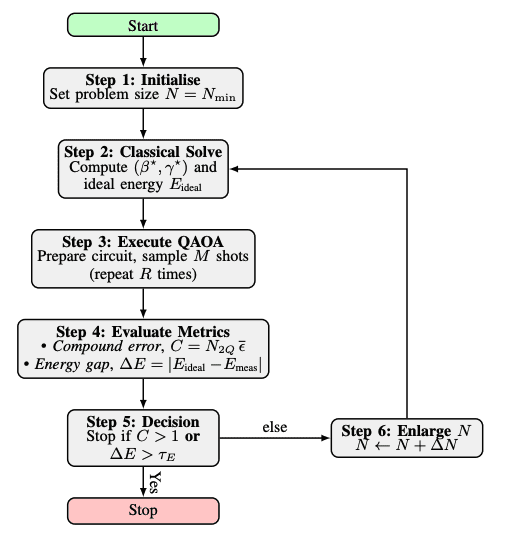

Two combinatorial optimization problems, MaxCut and the Traveling Salesperson Problem, serve as benchmark applications. A key innovation is the analytic derivation of optimal QAOA parameters, which allows the best parameters to be calculated offline, before the quantum circuit is run. This contrasts with iterative optimization loops, which can be computationally expensive and error-prone. The analytic approach also yields the ideal energy that the QAOA circuit should achieve. Performance is measured by the energy gap, the distance between the measured energy and this ideal value; a smaller gap signifies better performance.
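The energy-gap metric can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' code: it assumes the measured energy is the shot-averaged cost of the sampled bitstrings and that the gap is reported relative to the ideal energy. The function names, the toy single-edge MaxCut cost (minus the number of cut edges), and its illustrative target of -1 are hypothetical.

```python
from typing import Callable, Mapping


def energy_from_counts(counts: Mapping[str, int], cost: Callable[[str], float]) -> float:
    """Shot-averaged cost: sum over bitstrings of (count * cost) / total shots."""
    shots = sum(counts.values())
    if shots == 0:
        raise ValueError("counts must contain at least one shot")
    return sum(n * cost(bits) for bits, n in counts.items()) / shots


def relative_energy_gap(measured: float, ideal: float) -> float:
    """Relative distance to the analytically predicted energy (0 means perfect)."""
    if ideal == 0:
        raise ValueError("ideal energy must be non-zero for a relative gap")
    return abs(measured - ideal) / abs(ideal)


# Hypothetical toy cost: one MaxCut edge, cost = -(number of cut edges).
def maxcut_single_edge(bits: str) -> float:
    return -1.0 if bits[0] != bits[1] else 0.0


# Made-up sample counts, used only to exercise the functions.
counts = {"01": 450, "10": 430, "00": 70, "11": 50}
measured = energy_from_counts(counts, maxcut_single_edge)
print(measured)  # -0.88
print(relative_energy_gap(measured, ideal=-1.0))
```

Any sign or normalisation convention used in the paper would only change `cost` and the `ideal` argument, not the structure of the comparison.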

For the Traveling Salesperson Problem, a lightweight feasibility repair step corrects any solutions that violate the problem constraints. The methodology is portable, independent of specific hardware or applications, and focuses on realistic device performance. Experiments on MaxCut and the Traveling Salesperson Problem were conducted on IBM's ibm_brussels and ibm_kingston quantum computers and on a classical simulator. The simulator consistently achieved near-ideal performance, while the hardware results diverged from the ideal as problem size increased. ibm_kingston maintained performance up to 64 qubits, although its energy gap grew with problem size, indicating gradual degradation.
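The text does not spell out the feasibility repair, so the following is only one simple possibility. It assumes the common one-hot TSP encoding in which bit `v * n + t` marks city `v` at tour position `t`. The helper names `repair_tour` and `tour_length` are hypothetical. The greedy rule keeps the first not-yet-placed city found for each position and fills empty positions with the cities that were never placed.

```python
from typing import List, Sequence


def repair_tour(bits: str, n_cities: int) -> List[int]:
    """Map a (possibly infeasible) one-hot bitstring to a valid permutation.

    Assumed encoding (hypothetical): bit index v * n_cities + t is '1' when
    city v is visited at tour position t.
    """
    if len(bits) != n_cities * n_cities:
        raise ValueError("bitstring length must be n_cities**2")
    tour: List[int] = [-1] * n_cities
    used = set()
    for t in range(n_cities):
        for v in range(n_cities):
            # Take the first city marked at position t that is not already placed.
            if bits[v * n_cities + t] == "1" and v not in used:
                tour[t] = v
                used.add(v)
                break
    # Fill empty positions, in order, with the cities that were never placed.
    leftovers = iter([v for v in range(n_cities) if v not in used])
    return [v if v >= 0 else next(leftovers) for v in tour]


def tour_length(tour: Sequence[int], dist: Sequence[Sequence[float]]) -> float:
    """Length of the closed tour, including the return to the start city."""
    return sum(dist[tour[i]][tour[(i + 1) % len(tour)]] for i in range(len(tour)))
```

For example, the infeasible bitstring `000000110`, which assigns city 2 to two positions, is repaired to the tour `[2, 0, 1]`.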

These results confirmed the predicted run-to-failure behaviour, in which performance collapses beyond a certain problem size. The authors conclude that their methodology provides a realistic, portable, and scalable benchmark for near-term quantum computers. They envision its adoption as a standard dependability yardstick for emerging quantum hardware, guiding error-mitigation priorities and tracking progress toward fault-tolerant quantum computing. Through harmonic analysis, the authors derived closed-form upper bounds on the number of stationary points in the cost landscape of combinatorial-optimization problems, which enables an exhaustive yet efficient grid-sampling scheme with analytically verifiable outcomes. The methodology centres on pre-computed parameters for problems in the QOPTLIB benchmark suite, allowing researchers to build a catalogue of analytically derived optima.

Every parameter set was located through exhaustive evaluation, which guarantees that each entry is a provable global optimum. A quantum processor can then be tested simply by running these pre-tabulated circuits and comparing the measured energies with the known values. Experiments on Travelling Salesman instances demonstrated the probe's effectiveness, with measurements taken on the ibm_brisbane processor. On ibm_kingston, the probe recovered the optimal tours for instances wi4 and wi5, kept the energy gap below 5% for up to 64 qubits, and exhibited the performance collapse predicted by the run-to-failure metrics. For instance wi4, the optimal energy was measured at 6700, with parameters 2.150 and 2.315; for wi5, the optimal energy was 6786, with parameters 3.142 and 0.827. MaxCut problems exhibited similar behaviour.
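Testing against the catalogue reduces to a lookup followed by a comparison. The sketch below stores the two entries reported above. The data layout, the helper name `check_against_catalogue`, and the 5% pass threshold (borrowed from the gap quoted in the text) are assumptions. The text does not say which angle is which, so the pair is kept in the reported order.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class CatalogueEntry:
    optimal_energy: float
    params: Tuple[float, float]  # the two p=1 QAOA angles, in the order reported


# Values as reported in the text; the dictionary layout itself is illustrative.
CATALOGUE: Dict[str, CatalogueEntry] = {
    "wi4": CatalogueEntry(6700.0, (2.150, 2.315)),
    "wi5": CatalogueEntry(6786.0, (3.142, 0.827)),
}


def check_against_catalogue(
    instance: str, measured_energy: float, tolerance: float = 0.05
) -> Tuple[float, bool]:
    """Return (relative energy gap, passed) for a measured energy on a catalogued instance."""
    entry = CATALOGUE[instance]
    gap = abs(measured_energy - entry.optimal_energy) / abs(entry.optimal_energy)
    return gap, gap <= tolerance
```

With made-up inputs (not results from the paper), `check_against_catalogue("wi4", 6900.0)` returns a gap of about 0.030 and passes, whereas `check_against_catalogue("wi5", 7500.0)` gives about 0.105 and fails.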

The team observed that the aer_simulator remained within approximately 5% error, while the hardware gaps diverged once the compound error exceeded 1. The probe measures only what the quantum device must supply, faithful state preparation and readout, and applies unchanged to any device or workload. By focusing on a specific application rather than generic benchmarks, it provides a more relevant measure of performance for hybrid quantum-high performance computing workflows. The methodology centres on a single-layer QAOA circuit, which applies two alternating unitaries, a cost unitary parameterised by gamma and a mixer unitary parameterised by beta, to an initial state.
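A dense-statevector simulation makes the single-layer circuit concrete. This sketch assumes the standard transverse-field mixer, so the state is exp(-iβ Σ_j X_j) · exp(-iγ C) · |+⟩^⊗N, and it represents the cost Hamiltonian by its diagonal in the computational basis. The helper names are hypothetical, and the approach is only practical for a handful of qubits.

```python
from typing import Dict, Tuple

import numpy as np


def ising_diagonal(
    h: Dict[int, float], J: Dict[Tuple[int, int], float], offset: float, n: int
) -> np.ndarray:
    """Diagonal of C = sum_i h_i Z_i + sum_(i,j) J_ij Z_i Z_j + offset.

    Qubit i is bit i of the basis-state index; Z has eigenvalue +1 on bit 0, -1 on bit 1.
    """
    z = 1 - 2 * ((np.arange(2 ** n)[:, None] >> np.arange(n)) & 1)  # (2**n, n) of +/-1
    diag = np.full(2 ** n, float(offset))
    for i, h_i in h.items():
        diag += h_i * z[:, i]
    for (i, j), j_ij in J.items():
        diag += j_ij * z[:, i] * z[:, j]
    return diag


def qaoa_p1_state(diag: np.ndarray, gamma: float, beta: float, n: int) -> np.ndarray:
    """exp(-i beta sum_j X_j) exp(-i gamma C) |+>^n as a dense state vector."""
    psi = np.full(2 ** n, 2 ** (-n / 2), dtype=complex)
    psi = psi * np.exp(-1j * gamma * diag)  # the cost unitary is diagonal in the Z basis
    psi = psi.reshape([2] * n)  # one tensor axis per qubit
    for q in range(n):
        # exp(-i beta X) = cos(beta) I - i sin(beta) X; flipping an axis applies X to it
        psi = np.cos(beta) * psi - 1j * np.sin(beta) * np.flip(psi, axis=q)
    return psi.reshape(-1)


def qaoa_p1_energy(diag: np.ndarray, gamma: float, beta: float, n: int) -> float:
    """Expectation <psi|C|psi>, exploiting that C is diagonal."""
    psi = qaoa_p1_state(diag, gamma, beta, n)
    return float(np.real(np.vdot(psi, diag * psi)))


# One MaxCut edge: C = 0.5 Z0 Z1 - 0.5 equals minus the number of cut edges.
edge = ising_diagonal({}, {(0, 1): 0.5}, -0.5, 2)
print(qaoa_p1_energy(edge, np.pi / 2, 3 * np.pi / 8, 2))  # ≈ -1.0
```

At γ = β = 0 the circuit leaves the uniform superposition unchanged, so the energy equals the mean of the diagonal. This is a convenient sanity check before comparing against analytic optima.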

The cost Hamiltonian, which encodes the problem to be solved, is expressed in terms of Pauli operators and comprises local fields, Ising-like pairwise interactions, and a constant energy offset. By analysing the expectation value of this Hamiltonian, the researchers developed analytical formulas for the optimal parameters and the corresponding expectation values, establishing a target for the quantum hardware to reach. The team examined the cost expectation and its gradient for arbitrary quadratic Ising Hamiltonians to bound the number of stationary points. This analysis showed that the p=1 QAOA landscape has at most 5(2M+N) isolated stationary points, where M is the number of couplings and N the number of qubits.
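In symbols, the objects described above can be written as follows. This rendering assumes the standard transverse-field mixer, which the text does not name, and the symbols h_i, J_ij, c_0, E, and F are notation introduced here for illustration.

```latex
\begin{aligned}
|\psi(\gamma,\beta)\rangle &= e^{-i\beta B}\, e^{-i\gamma C}\, |+\rangle^{\otimes N},
  \qquad B = \sum_{j=1}^{N} X_j, \\
C &= \sum_{i=1}^{N} h_i Z_i + \sum_{(i,j)\in E} J_{ij}\, Z_i Z_j + c_0,
  \qquad |E| = M, \\
F(\gamma,\beta) &= \langle \psi(\gamma,\beta) \,|\, C \,|\, \psi(\gamma,\beta) \rangle,
  \qquad \nabla F(\gamma^{*},\beta^{*}) = 0 \text{ at a stationary point}, \\
\#\{\text{isolated stationary points of } F\} &\le 5\,(2M+N).
\end{aligned}
```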

Consequently, a grid with spacing π/4 in beta and π/(2(2M+N)) in gamma guarantees that every stationary point is sampled. Even for large instances, this exhaustive sweep remains computationally modest. Because M is at most N(N−1)/2, the quantity 2M+N, and hence the number of gamma grid points, grows at most quadratically with the number of qubits. To further accelerate the process, the researchers implemented the simplicial homology global optimiser (SHGO), a robust alternative to grid sampling that leverages the analytic bounds and requires fewer function evaluations. They showed that this optimiser achieves competitive energies while keeping runtime under control, offering a practical approach for hardware validation. This calibrated benchmark, rooted in an industrial workload, provides a direct probe of hardware maturity and suitability for specific problem classes.
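The grid sweep and the SHGO alternative can be compared on a toy problem. This sketch uses SciPy's `scipy.optimize.shgo` and `scipy.optimize.minimize`. The triangle MaxCut instance, the helper names, the parameter ranges γ ∈ [0, 2π) and β ∈ [0, π), and the transverse-field mixer convention are all assumptions. The text's sampling guarantee refers to the authors' own parameter convention, which is not reproduced here. For that reason, the sketch treats the grid as a coarse locator and polishes its best point with a local optimiser rather than reading grid points as exact optima.

```python
import numpy as np
from scipy.optimize import minimize, shgo


def p1_energy(params, diag, n):
    """<C> after p=1 QAOA (assumed mixer exp(-i beta sum_j X_j)); C given by its diagonal."""
    gamma, beta = params
    psi = np.full(2 ** n, 2 ** (-n / 2), dtype=complex) * np.exp(-1j * gamma * diag)
    psi = psi.reshape([2] * n)
    for q in range(n):
        psi = np.cos(beta) * psi - 1j * np.sin(beta) * np.flip(psi, axis=q)
    psi = psi.reshape(-1)
    return float(np.real(np.vdot(psi, diag * psi)))


def stationary_point_grid(n_qubits, n_couplings, gamma_max=2 * np.pi, beta_max=np.pi):
    """Grid with the spacings quoted in the text; the ranges are assumptions."""
    d_gamma = np.pi / (2 * (2 * n_couplings + n_qubits))
    d_beta = np.pi / 4
    gammas = d_gamma * np.arange(int(round(gamma_max / d_gamma)))
    betas = d_beta * np.arange(int(round(beta_max / d_beta)))
    return gammas, betas


# Hypothetical toy instance: MaxCut on a triangle, C = -(number of cut edges).
n, edges = 3, [(0, 1), (1, 2), (0, 2)]
bits = (np.arange(2 ** n)[:, None] >> np.arange(n)) & 1
diag = -sum((bits[:, i] != bits[:, j]).astype(float) for i, j in edges)

# Exhaustive sweep, then polish the best grid point with a local optimiser.
gammas, betas = stationary_point_grid(n, len(edges))
grid = [(p1_energy((g, b), diag, n), g, b) for g in gammas for b in betas]
best_grid = min(grid)
refined = minimize(p1_energy, x0=best_grid[1:], args=(diag, n), method="Nelder-Mead")
print(f"grid: {len(grid)} evals, best {best_grid[0]:.4f}; refined {refined.fun:.4f}")

# SHGO over the same box, as a sampling-based alternative to the grid.
res = shgo(p1_energy, bounds=[(0.0, 2 * np.pi), (0.0, np.pi)], args=(diag, n),
           n=64, sampling_method="sobol")
print(f"shgo: {res.nfev} evals, best {res.fun:.4f}")
```

Here 2M+N = 9, so the grid has 36 × 4 = 144 points, while SHGO's budget is governed by its sampling parameter `n`. Which approach is cheaper on real instances depends on the problem size.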

Quantum Hardware Maturity via Optimization Benchmarks

This work presents a new method for evaluating the maturity of quantum hardware, focusing on its ability to reliably solve optimization problems. By employing harmonic analysis, the team derived mathematical bounds on the number of stationary points in the optimization landscape, enabling the creation of an exhaustive yet efficient grid-sampling scheme.