“What comes after the smartphone?”

It has been a while since we explored the answer – the next massive consumer electronics product.

No, it’s not some kind of next-gen smartphone that hovers mysteriously between our thumb and forefinger, like the one in the Amazon sci-fi series Upload.

A Phone from the Future? | Source: Upload TV Series

And no, it’s not some kind of new smartwatch that projects holographic images.

The next big wave will be augmented reality (AR) glasses, which have more recently been reframed as smart glasses or, not surprisingly, AI glasses.

A Convergence of Key Technologies

In September 2024, in The Bleeding Edge – The Next Computing Interface, we explored Meta’s Orion, which at the time was a prototype AR system made up of heavy glasses, a wristband, and a computing puck for horsepower.

Meta’s Orion AR System Circa 2024 | Source: Meta

Orion’s capabilities were impressive, but the glasses were too chunky, and the cost to manufacture the system was around $10,000 – clearly not something for the mass market.

But as with all computing technologies, semiconductors and electronic components get smaller and more powerful every year. So it was only a matter of time before Meta could shrink the technology and bring it down to a consumer price point.

And earlier this month, at its annual developer conference, Meta announced its new Meta Ray-Ban Display glasses, which are expected to go on sale to the public today.

Meta Ray-Ban Display | Source: Meta

Finally, all of the key technologies have come together and been miniaturized to fit into the form factor of a pair of “heavy” sunglasses: two cameras, a full-color, high-resolution display in the lenses, batteries, speakers, a microphone, connectivity with a smartphone, and computation, all packed into a pair of Ray-Bans.

Even better, the base price for the Ray-Ban Display glasses together with the Meta Neural Band, shown in the picture above, is $799, the same price as the Apple Watch Ultra 3 sports smartwatch.

What’s exciting about this product release is that Meta finally got the user interface right.

A Hands-Free Interface

Contrary to what most people think, AR glasses were never about a constant overlay on everything in the real world.

AR glasses are the next generation of computing interface, a hands-free interface that allows us to keep our smartphones in our pockets or purses and simply live our lives… while still having access to the useful applications we normally use throughout the day.

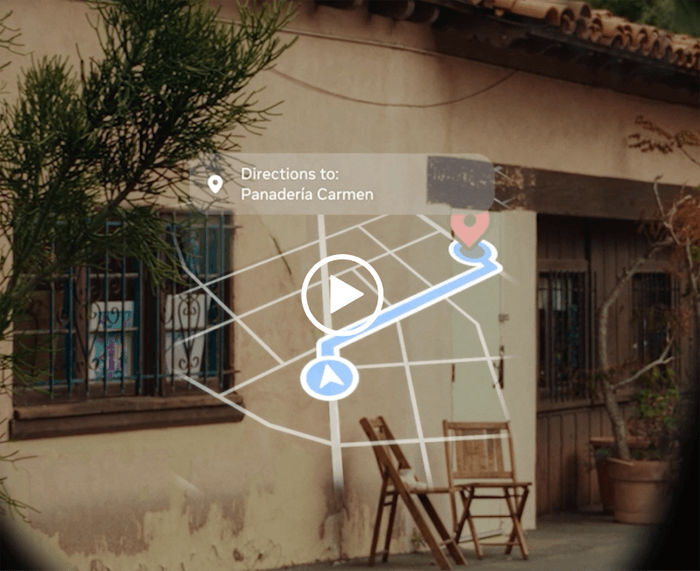

Meta’s Display glasses let users see text messages, preview the photos they’ve taken, translate signs or menus, translate a conversation in another language in real time, take live video calls, and even show the person on the other end of the call what they’re experiencing as it happens.

They even display navigation instructions right on the lens. What a great way to avoid looking like a tourist or becoming a target for pickpockets. There’s no longer any need to keep glancing up and down at a smartphone to figure out where to go.

Navigation with Meta Display | Source: Meta

It’s also important to note that the display only turns on when it’s needed. It isn’t “on” the entire time we wear the glasses, which makes them non-intrusive and preserves battery life. This was a smart approach by Meta.

The key to Meta’s latest AR product design as a computing interface is the Meta Neural Band: a simple strap that wraps around the wrist and effectively replaces the buttons, dials, and touchscreens we normally see on a smartwatch.

The technology behind the Meta Neural Band comes from CTRL-Labs, a company that I had been tracking since 2016 and had been writing about in The Bleeding Edge. Facebook, now Meta, acquired CTRL-Labs for $1 billion in 2019, a very large number for a company that was very much in research and development mode for surface electromyography (EMG) technology.

CTRL-Labs took on a seemingly impossible task: testing its EMG technology on nearly 200,000 people.

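EMG technology measures the electrical activity that muscles produce when the brain signals them to contract. For a band worn at the wrist, that means picking up the forearm muscles that move the hand and fingers. AI then translates those signals into the gestures the wearer intends, and those gestures become software controls.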

Below is a short video of a user gesturing with his hand to control the software on the Meta Display AR glasses…

Meta Neural Band in Use | Source: Meta

This is such a great use of technology.

Future iterations of the Neural Band will look more like a fashion accessory, much as Apple has done with its Apple Watch bands.

And because of the work done on EMG technology, it isn’t even necessary to make large finger gestures to control the software on the Meta Display AI glasses. Thanks to AI, very subtle, even imperceptible, gestures are enough.

This is the future: an AI-powered computing interface that uses voice commands and EMG, freeing us from pounding on a keyboard or hunching over our smartphones, pecking away at a screen.

Hence, the new product positioning of AI glasses as opposed to AR glasses.

All of this, of course, ties into the remarkable pivot Meta made in early 2023.

An Entirely New Category

Some of us may remember the original strategy put in place by Facebook CEO Mark Zuckerberg in 2021. It was the impetus for changing the corporate name from Facebook to Meta.

The “meta” was for metaverse. Zuckerberg’s goal was to build a single, dominant metaverse that everyone would flock to for interacting online. It was never going to work, something we explored in The Bleeding Edge – There Is More than One Metaverse.

To Zuckerberg’s credit, after losing $13.7 billion on metaverse-related investments in 2022, and after watching OpenAI’s ChatGPT hit 100 million users in just two months (compared with the 2.5 years it took Instagram to do the same), Zuck basically shut his metaverse initiative down and pivoted 100% to artificial intelligence.

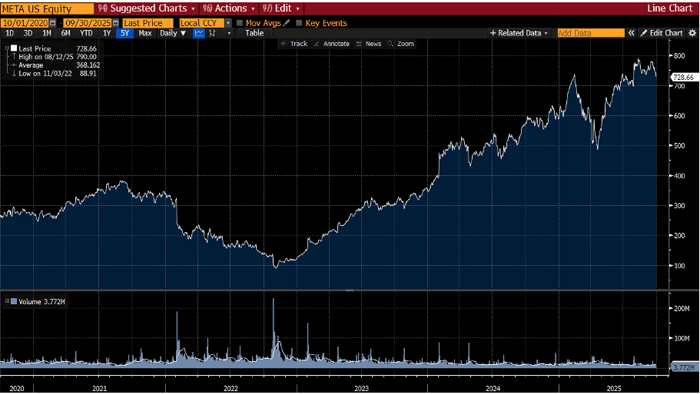

5-Year Stock Chart of META | Source: Bloomberg

After collapsing almost 77% from its 2021 highs, META’s share price has soared 721% since Meta pivoted from the metaverse to full-throttle artificial intelligence. Fantastic.

Facebook/Meta has never been known for innovating and building its own products. It’s famous for copying product ideas from others and scaling them well.

What the company is known for, though, is smart acquisitions. The price tags paid for Oculus, Instagram, and WhatsApp all seemed ridiculous at the time. But Instagram and WhatsApp turned into fortunes for Meta, and many of the assets acquired with Oculus are finally bearing fruit now in AR.

And the CTRL-Labs acquisition has now turned into a commercial product with incredible capabilities and potential.

Meta has sold more than 2 million pairs of its previous-generation smart glasses, despite their limited functionality. They worked for consumers because they looked like normal sunglasses (with a couple of cameras) and had nice hooks into social media applications.

The Meta Display with the Neural Band, however, is an entirely new category. And Meta is gearing up to manufacture 10 million units in 2026.

The technology provides utility and ease of use that people of any age can benefit from. Meta will likely find that the size of the opportunity is even larger.

And the reality is that Meta is now in the lead, which means Google is nervous.

The Key Is Visual

Now Meta has thrown down the gauntlet.

Google will have to reply by combining its Android XR operating system for AI glasses with its Gemini generative AI.

This isn’t just a battle for selling new consumer electronics devices. This is a battle for who will control the operating system for the majority of all AR glasses.

And the company that wins that fight will take the lion’s share of related advertising revenue.

And that’s not all…

The key to artificial general intelligence is visual…

Frontier AI models need video from the real world to learn how AI can take a form that interacts with and navigates the world the same way humans do.

What better way for Google or Meta to collect video training data than with AR glasses? They are doing for humans what Tesla has already done for cars.

Hands-free.

Jeff


The Bleeding Edge is the only free newsletter that delivers daily insights and information from the high-tech world as well as topics and trends relevant to investments.