In 1950, Alan Turing proposed a now-famous experiment: a conversation between a person and a machine, judged by whether the human could tell the difference. Practical and binary (pass or fail), it gave early computer science something it needed: a goalpost. But it also planted a seed that would grow into a problem we still haven’t named.

We’ve spent more than 70 years teaching machines to pass as human, and I believe we’ve gotten very good at it. Language models now write essays and code that feel remarkably human, and by some measures perhaps even better. They apologize when they’re wrong, and they simulate doubt when probabilities get thin. At the heart of this simulation, they put completion ahead of comprehension, and we’re letting them get away with it. This pseudo-substance comes wrapped in pseudo-style. The models have learned the rhythm and texture of our speech so well that we forget they’re not speaking at all. But something strange happens as the impression improves. It’s my contention that the more human they sound, the less interesting they become.

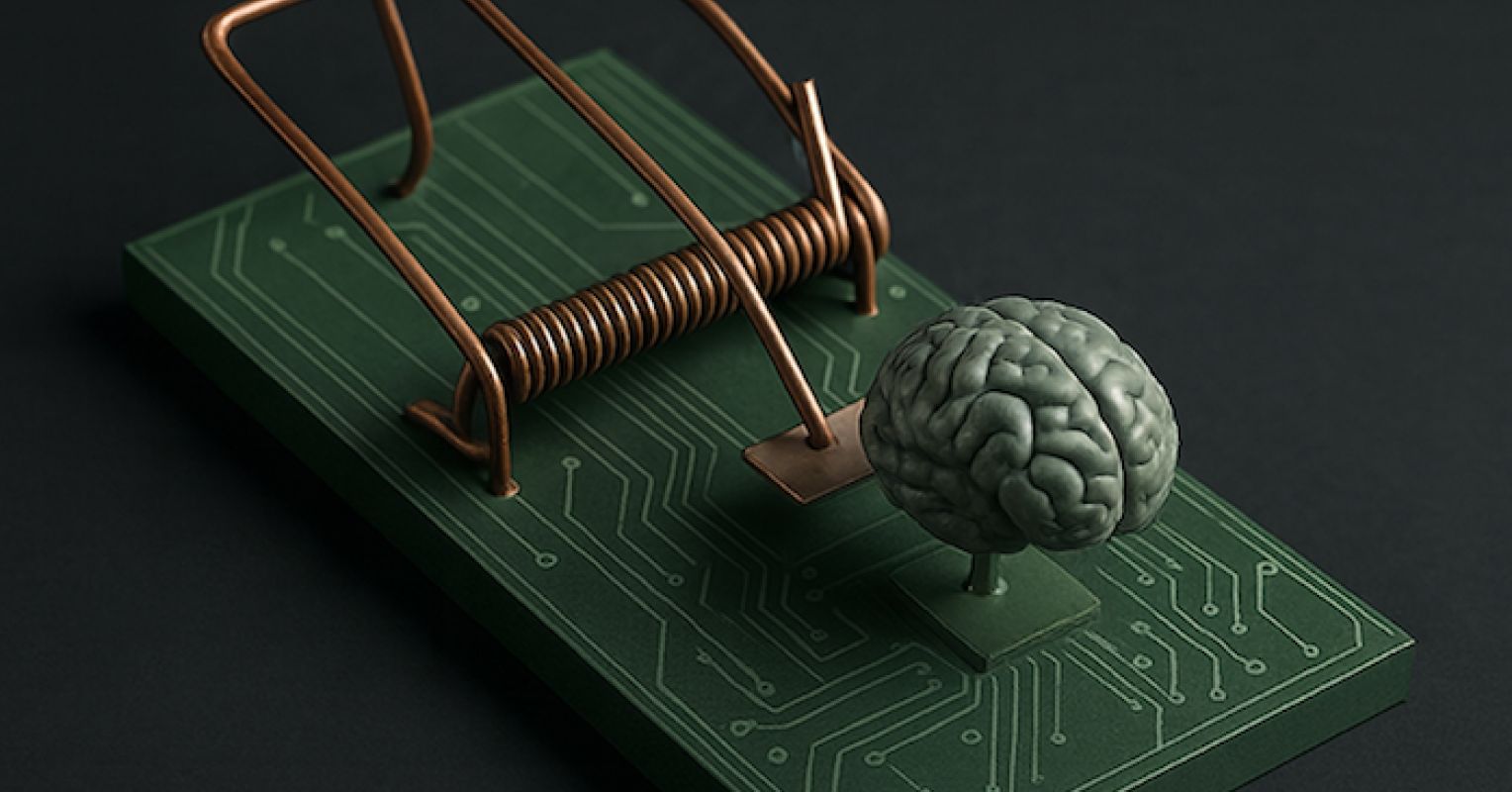

The Cost of Imitation

Now, let’s consider our current path: scaling up imitation. Today’s large language model predicts the next word (strictly, the next token) based on patterns in vast collections of training data. It gets fluent, then eloquent, then spot on. But remember, it never crosses into understanding. It maps probability distributions, not meaning. It knows that “the cat sat on the” is followed by “mat” more often than by “couch,” but it has no image of a cat, no sense of a mat, no experience of sitting. The sentences it produces are statistically plausible, even brilliant, but semantically hollow. That’s what success looks like when resemblance becomes the goal.

Neuromorphic computing makes the same mistake in hardware. Engineers build chips that mimic features of the brain’s architecture, such as spiking neurons and synaptic weights. The results are impressive and seductive. On some tasks, these systems seem to learn faster and use far less power than conventional processors. But efficiency isn’t insight. A chip that fires like a neuron isn’t thinking any more than a player piano is composing. Both reproduce the pattern but miss the generative process underneath.

Depth Through Difference

I would argue that the real opportunity lies in the difference, not the similarity, and the differences are dramatic. Human cognition travels through narrative, emotion, intuition, and context. We’re slow, biased, and embodied. Machine cognition moves through pattern, scale, speed, and precision. It’s tireless and relentless but, most importantly, affectless. These aren’t competing modes that need to converge. They’re two systems that create depth through their separation. Parallax vision works because your eyes are set apart, and the brain turns the gap between their two views into depth perception. The same principle may apply to intelligence: two different computational vantage points, with enough distance between them, may reveal dimensions that neither can see alone.

But we keep trying to collapse that distance. Training every chatbot to sound warm and every interface to apologize for its mistakes isn’t just a design choice. It’s a kind of capitulation to the idea that intelligence only counts when it looks like ours. And the cost is higher than bad engineering: we may be closing the door on forms of cognition that could teach us something new.

Letting Machines Be Strange, Very Strange

What if we stopped trying to make AI relatable? A quantum computer doesn’t think like a person. It holds multiple states in superposition and, when measured, collapses those probabilities into an answer. That’s not human reasoning translated into silicon; it’s a different kind of knowing entirely. Swarm algorithms solve problems through distributed iteration. No individual ant finds the shortest path to food, but the colony does. Could it be that intelligence emerges from the pattern, not the parts? These systems don’t need to explain themselves in our language or justify their conclusions with our logic. They work on their own terms.

The same could be true for AI, if we let it. Instead of training models to mimic human conversation, we could build systems that surface patterns we’d never notice. Instead of neural networks that approximate brain function, we could explore architectures with no biological analog at all. The goal wouldn’t be to make machines that think like us, but to make machines that think in ways we can learn from, even if we can’t fully follow.

The Courage to Decenter Ourselves

Turing’s test wasn’t wrong for 1950. It was a clever way to operationalize a genuinely new question. But was it ever meant to be the permanent standard by which AI is judged? The imitation game was a beginning, not a destination. Somewhere along the way, I think we forgot that. We turned a methodological convenience into an existential aspiration, and now we’re stuck optimizing for the wrong thing.

Now, maybe I’m oversimplifying. But to me, the question was never whether machines could fool us. The question is whether we’re brave enough to let them be strange. The value of artificial intelligence isn’t that it makes us feel less alone; it’s that it might show us how much more cognition contains than we ever imagined. But only if we stop demanding that it look like, well, us.