Raspberry Pi has launched the AI HAT+ 2, an add-on board aimed at local AI computing that pairs the Hailo-10H neural network accelerator with 8 GB of onboard RAM.

On paper, the specifications look great. The AI HAT+ 2 delivers 40 TOPS (INT4) of inference performance. The Hailo-10H silicon is designed to accelerate large language models (LLMs), vision language models (VLMs), and “other generative AI applications.”

Computer vision performance is roughly on a par with the 26 TOPS (INT4) of the previous AI HAT+ model.

The accelerator and its dedicated 8 GB of RAM should take a load off the host Pi. If you need an AI coprocessor, you don’t have to blow through the Pi’s own memory (although more on that later).

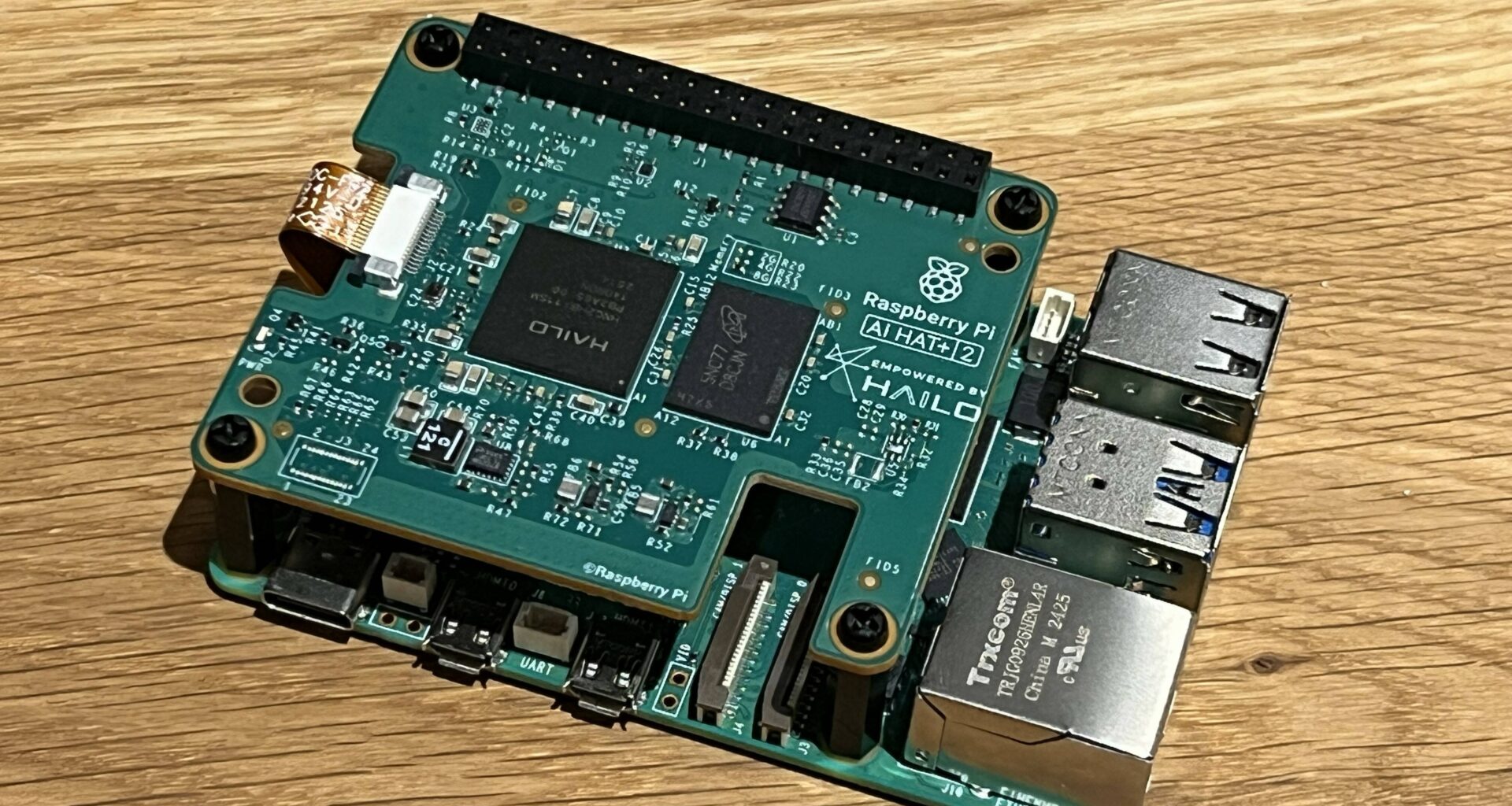

The hardware plugs into the Pi’s GPIO connector (we used an 8 GB Pi 5 to try it out) and, just like its predecessor, communicates via the computer’s PCIe interface. It comes with an “optional” passive heatsink, though you’ll certainly need some form of cooling because the chips run hot. Spacers and screws are also included for fitting the board to a Raspberry Pi 5 with the company’s active cooler installed.

AI HAT+ 2 on Raspberry Pi 5

Getting it running is a simple case of grabbing a fresh copy of Raspberry Pi OS and installing the necessary software components. The AI hardware is natively supported by the rpicam-apps camera applications.

In use, it worked well. We ran the Qwen2 model through a combination of Docker and the hailo-ollama server, and encountered no issues running it locally on the Pi.
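For readers who would rather script against the server than chat with it interactively, here is a minimal Python sketch. It assumes hailo-ollama speaks Ollama’s REST API (a `POST /api/generate` request with a JSON body, returning the text in a `response` field). The base URL, the port (Ollama’s default, 11434) and the model tag `qwen2:1.5b` are all assumptions, so check the hailo-ollama documentation for the values on your own setup.

```python
import json
import urllib.request

# ASSUMPTION: hailo-ollama exposes an Ollama-compatible REST API.
# 11434 is Ollama's default port; hailo-ollama may listen elsewhere.
BASE_URL = "http://localhost:11434"  # hypothetical address
MODEL = "qwen2:1.5b"                 # hypothetical model tag


def build_generate_payload(model: str, prompt: str) -> bytes:
    """Encode a non-streaming request body in Ollama's /api/generate format."""
    body = {"model": model, "prompt": prompt, "stream": False}
    return json.dumps(body).encode("utf-8")


def parse_generate_response(raw: bytes) -> str:
    """Pull the generated text out of a non-streaming /api/generate reply."""
    return json.loads(raw.decode("utf-8"))["response"]


def generate(prompt: str, model: str = MODEL, base_url: str = BASE_URL,
             timeout: float = 120.0) -> str:
    """POST a prompt to the local server and return the model's reply."""
    req = urllib.request.Request(
        f"{base_url}/api/generate",
        data=build_generate_payload(model, prompt),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return parse_generate_response(resp.read())
```

With the server running, `print(generate("What is a Raspberry Pi HAT?"))` sends a single prompt and prints the reply. Setting `"stream": False` keeps the sketch simple by returning one JSON object rather than a stream of partial tokens.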

However, while 8 GB of onboard RAM makes for a nice headline feature, it seems a little weedy given the voracious appetite AI applications have for memory. And, for a price, you can already specify a Pi 5 with 16 GB of RAM.

And then there’s computer vision, where performance is broadly the same as the earlier 26 TOPS (INT4) AI HAT+. For users with vision processing use cases, it’s hard to recommend the $130 AI HAT+ 2 over the existing AI HAT+, or even the $70 AI Camera.

Where LLM workloads are needed, the RAM on the AI HAT+ 2 board will ease the load (although simply buying a Pi with more memory is an option worth exploring). According to Raspberry Pi, DeepSeek-R1-Distill, Llama3.2, Qwen2.5-Coder, Qwen2.5-Instruct, and Qwen2 will be available at launch. All except Llama3.2 are 1.5-billion-parameter models, and the company said larger models will follow in future updates.

The size compares poorly with what the cloud giants are running (Raspberry Pi admits “cloud-based LLMs from OpenAI, Meta, and Anthropic range from 500 billion to 2 trillion parameters”). Still, given the device’s edge-based ambitions, the models work well within the hardware constraints.
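A back-of-the-envelope calculation shows why the gap matters. Weight storage is roughly parameters × bits per weight ÷ 8 bytes. The sketch below assumes 4-bit (INT4) weights, matching the precision the TOPS figures are quoted at; Raspberry Pi hasn’t said how the shipped models are quantized. It also counts weights only and ignores the KV cache, activations and runtime overhead, so real requirements are higher.

```python
# ASSUMPTION: 4-bit weights; weights only (no KV cache, activations or
# runtime overhead), so these figures are lower bounds.

def weight_memory_gb(params: float, bits_per_weight: int) -> float:
    """Approximate memory in decimal GB needed just to hold the weights."""
    return params * bits_per_weight / 8 / 1e9


def max_params_for(ram_gb: float, bits_per_weight: int) -> float:
    """Largest parameter count whose weights alone fit in ram_gb."""
    return ram_gb * 1e9 * 8 / bits_per_weight


for name, params in [("1.5B edge model", 1.5e9),
                     ("500B cloud model", 500e9),
                     ("2T cloud model", 2e12)]:
    print(f"{name}: ~{weight_memory_gb(params, 4):,.2f} GB at INT4")

# Upper bound on model size for the board's 8 GB, before any overhead.
print(f"8 GB at INT4 holds ~{max_params_for(8, 4) / 1e9:.0f}B parameters")
```

On these assumptions, a 1.5-billion-parameter model needs about 0.75 GB for its weights, while the 500 billion to 2 trillion parameter cloud models need roughly 250 GB to 1 TB. Even the theoretical ceiling for 8 GB, around 16 billion parameters, sits well below that once overhead is taken into account.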

This brings us to the question of who this hardware is for. Industry use cases that require only computer vision can get by with the previous 26 TOPS AI HAT+. However, for tasks that require an LLM or other generative AI functionality but need to keep processing local, the AI HAT+ 2 may be worth considering. ®