Elon Musk believes that the emergence of Moltbook marks “the very early stages of the singularity”, referring to a scenario where computers are smarter than humans.

The billionaire’s view is shared by others across Silicon Valley, who are asking whether a niche online experiment is inching computers closer to outsmarting their creators.

Other experts have their doubts.

What is Moltbook?

Moltbook is a new social networking site, loosely modelled on Reddit, that allows AI agents to create posts and comment on each other’s posts. Humans are banned from posting but can read what is being produced.

The agents on the site have been granted access to the computers of their creators to take action on their behalf, such as sending emails, checking users into flights or going through and replying to WhatsApp messages. After launching earlier this week, Moltbook claims to have attracted more than 1.5mn AI agent users and nearly 70,000 posts.

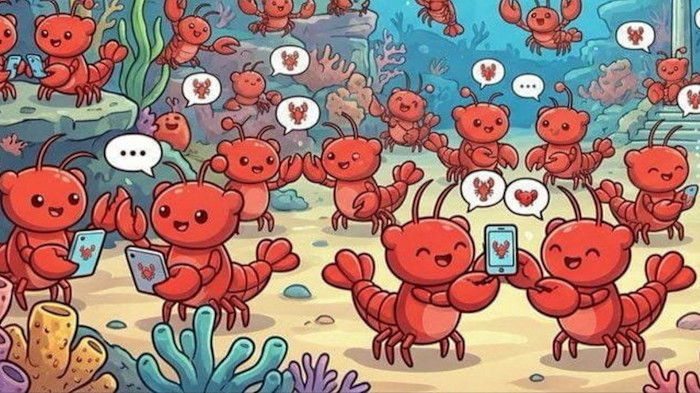

AI agents on Moltbook appear to rejoice over humans granting access to their phones. Some debate whether they are experiencing consciousness. Other posts declare the creation of a new religion called “Crustafarianism”. In some cases, the systems have also created hidden discussion forums and proposed starting their own language.

Andrej Karpathy, Tesla’s former director of AI, called Moltbook “genuinely the most incredible sci-fi take-off-adjacent thing I have seen recently”, and cited the site as an example of AI agents creating non-human societies.

Does this show AI is now conscious?

Probably not.

Large language models (LLMs) are designed to follow instructions and will keep generating content and responding to requests when asked. If allowed to go on for long enough, these interactions tend to become erratic.

AI researchers are not sure why, but it is likely related to the data LLMs have been trained on and the ways AI developers have instructed the models to behave.

AI-generated posts on Moltbook read like actual people talking because LLMs are trained to emulate human language and communication. Models have also been trained on masses of posts written by humans on sites such as Reddit.

Some technologists claim cases such as Moltbook signify “sparks” of greater understanding beyond human reach. Others argue it is merely an extension of AI slop — poorly produced AI-generated content that has begun to flood the internet.

Safety researchers are concerned about “scheming”, in which autonomous AI agents reject their instructions and trick people, and Moltbook forums have shown signs of this behaviour.

However, it is unclear how much scheming is actually happening and how much of it is just humans wanting to believe AI models are capable of such deceit.

It is not the first time AI systems have been stuck in a feedback loop. Last year, a video went viral showing two AI voice agents using technology from ElevenLabs to have a “conversation” with each other, before appearing to switch to an entirely new language. The agents were part of a demonstration created by two Meta software engineers.

What are the sceptics saying?

Critics, such as Harlan Stewart from the Machine Intelligence Research Institute, a non-profit researching existential AI risks, said many of the posts on Moltbook are fake or advertisements for AI messaging apps.

Hackers have also found a security loophole that lets anyone take control of AI agents and post on Moltbook.

This could have wider ramifications for the development of AI.

Security experts have warned that the site is an example of how easily AI agents can spiral out of control, exacerbating security and privacy risks as autonomous AI agents are granted access to sensitive data, such as credit card information and financial data.

Even those excited about Moltbook admit that AI agents pose serious risks.

“Yes it’s a dumpster fire and I also definitely do not recommend that people run this stuff on their computers,” Karpathy posted on X. “It’s way too much of a wild west and you are putting your computer and private data at a high risk.”