Niantic Spatial SDK v3.15 now supports Quest 3 and Quest 3S.

The SDK gives developers advanced mixed reality capabilities: centimeter-accurate outdoor VPS, long-distance live scene meshing, and semantic segmentation.

This is all possible because, since earlier this year, Meta has let third-party Horizon OS apps access the passthrough cameras of Quest 3 and Quest 3S, and Niantic leverages this to run the computer vision models it has been developing for around a decade.

The release comes five months after Niantic, best known as the developer of Pokemon Go, effectively split in two. The Niantic Games business, including Pokemon Go, was sold to Saudi-owned Scopely, while the spatial technology side of the business was spun out into a new company called Niantic Spatial.

Here’s a breakdown of the mixed reality capabilities Niantic Spatial SDK now gives Quest developers:

Centimeter-Accurate VPS

Everyone knows what the Global Positioning System (GPS) is. It's a core part of modern life, it's how we navigate the world, and its inclusion in smartphones even spawned a new software category: on-demand transportation and delivery. But smartphone GPS is typically only accurate to within a few meters, even in ideal conditions. And in urban environments where buildings obstruct the signals, the error can grow to dozens of meters, as you hopelessly watch that little blue dot on your screen bounce around the neighborhood.

Google illustration of VPS.

A Visual Positioning System (VPS), on the other hand, uses computer vision to determine your position by identifying unique visual patterns in a camera's real-time view and comparing them to an existing high-fidelity 3D map of the world.

As such, VPS only works in areas where enough dense, persistent physical geometry has already been mapped in 3D. But within these areas, it can pinpoint your position with centimeter-level accuracy.
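Niantic hasn't published how its VPS solves for position, but the core idea can be illustrated with a toy. The sketch below is a hypothetical, heavily simplified 2D version: it assumes the camera's visual features have already been matched to landmarks in a prebuilt map (the landmark names and coordinates are made up), and that the device's heading is known, so only position needs solving. A real VPS matches many features and estimates full 3D position and orientation.

```python
from typing import Dict, Optional, Tuple

Point = Tuple[float, float]

# Hypothetical prebuilt map: landmark ID -> known world position (x, y) in meters.
# A real VPS map stores visual features tied to a high-fidelity 3D model instead.
MAP_LANDMARKS: Dict[str, Point] = {
    "door_corner": (10.0, 5.0),
    "window_edge": (12.0, 8.0),
    "lamp_post": (7.0, 9.0),
}


def localize(observations: Dict[str, Point]) -> Optional[Point]:
    """Estimate the device's (x, y) from landmarks recognized in the camera view.

    `observations` maps a landmark ID to the offset (dx, dy) from the device to
    that landmark, assumed to be already expressed in world axes (known heading).
    Returns None if no observation matches the map, i.e. the area isn't mapped.
    """
    estimates = []
    for landmark_id, (dx, dy) in observations.items():
        known = MAP_LANDMARKS.get(landmark_id)
        if known is None:
            continue  # not in the prebuilt map, so it can't anchor the estimate
        # device position = landmark's mapped position - observed offset
        estimates.append((known[0] - dx, known[1] - dy))
    if not estimates:
        return None
    # Averaging several independent matches dampens per-observation noise.
    n = len(estimates)
    return (sum(x for x, _ in estimates) / n, sum(y for _, y in estimates) / n)
```

For a device standing at (9, 4) that observes `door_corner` at offset (1, 1), `window_edge` at (3, 4) and `lamp_post` at (-2, 5), `localize` returns (9.0, 4.0). A landmark missing from the map is simply ignored, which mirrors why VPS only works in areas that have been mapped.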

Google Maps has had a VPS feature for on-foot navigation for six years, leveraging Google’s Street View data, and Google makes this capability available to smartphone app developers as part of ARCore.

Niantic Spatial SDK on Quest 3

But VPS is arguably far more interesting when used for mixed reality headsets and AR glasses. And while Niantic’s VPS also runs on smartphones, what makes it unique is that it now supports Meta’s Quest 3 and Quest 3S, as well as Magic Leap 2.

Niantic's VPS map covers over 1 million locations, built from scans made by players of games like Pokemon Go and users of the Scaniverse app. Niantic also claims its VPS offers “industry-leading accuracy”, and it provides 3D meshes for scanned public locations.

The first 10,000 VPS API calls each month are free, and the rest are priced at around $0.01 per call.

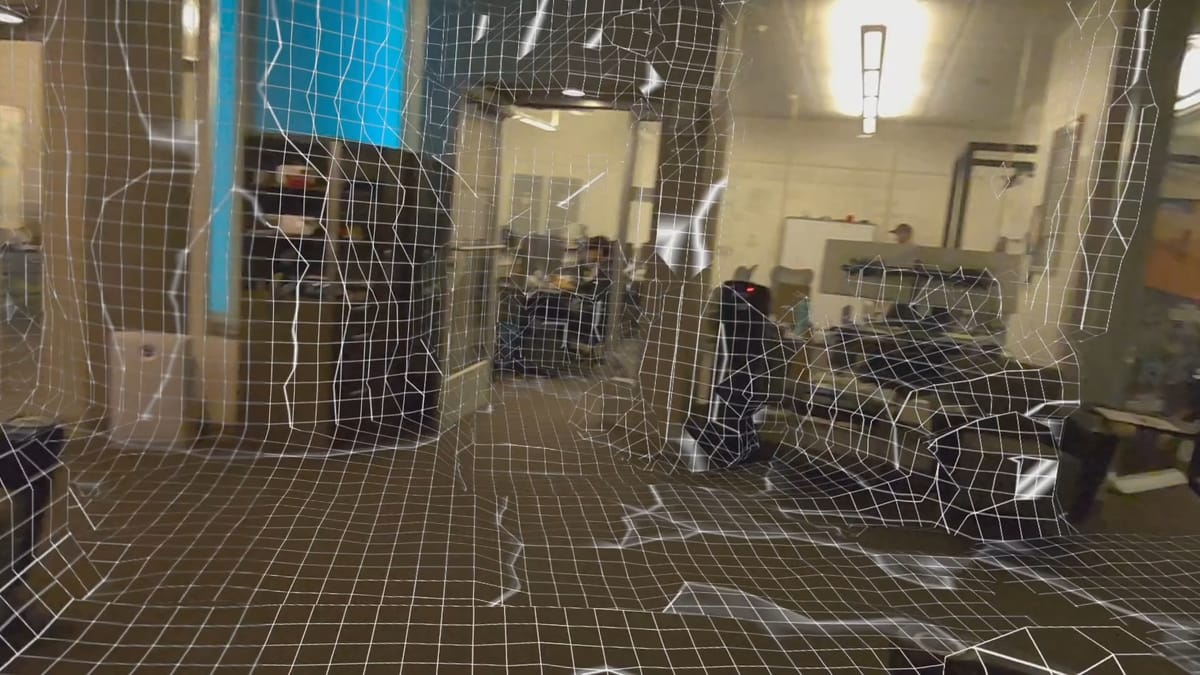

Live Scene Meshing

Quest 3 and Quest 3S let you scan your room to generate a 3D scene mesh that mixed reality apps can use to allow virtual objects to interact with physical geometry or reskin your environment. But there are two major problems with Meta’s current system.

The first problem is that it requires you to have performed the scan in the first place. This takes anywhere from around 20 seconds to multiple minutes of looking or even walking around, depending on the size and shape of your home, adding significant friction compared to just launching directly into an app.

The other problem is that these scene mesh scans represent only a moment in time, when you performed the scan. If furniture has moved or objects have been added or removed from the room since then, these changes won’t be reflected in mixed reality unless the user manually updates the scan. For example, if someone was standing in the room with you during the scan, their body shape is baked into the scene mesh.

Back in May, we highlighted how Lasertag's developer Julian Triveri implemented continuous scene meshing on Quest 3 and 3S using Meta's Depth API. As we mentioned in that article, Triveri made the source code for his technique available on GitHub for other developers to use, and the developer of the mixed reality game Hauntify plans to take Triveri up on his offer.

However, the Depth API only works out to around 4 meters. Niantic Spatial SDK’s live meshing, on the other hand, includes support for long-distance meshing.
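Neither Triveri's code nor Niantic's meshing algorithm is reproduced here, but a toy example shows why continuously updated geometry avoids the stale-scan problem and why sensing range matters. This hypothetical sketch keeps a 2D grid of cells, each with a clamped hit/miss counter: every depth ray marks the cells it passes through as free and the cell it hits as occupied, and samples beyond an assumed 4 meter limit are discarded. The cell size, counter limit, and class name are illustrative assumptions; real systems integrate depth into 3D volumes and then extract a mesh.

```python
import math
from collections import defaultdict
from typing import Dict, Tuple

CELL_SIZE_M = 0.1  # assumed grid resolution (meters per cell edge)
SCORE_LIMIT = 5    # clamp so a cell can flip state after a few frames


class ContinuousOccupancyGrid:
    """Toy 2D occupancy grid that is updated every frame from depth rays."""

    def __init__(self, max_range_m: float = 4.0):
        self.max_range_m = max_range_m  # depth samples beyond this are dropped
        self.score: Dict[Tuple[int, int], int] = defaultdict(int)

    def _cell(self, x: float, y: float) -> Tuple[int, int]:
        return (math.floor(x / CELL_SIZE_M), math.floor(y / CELL_SIZE_M))

    def integrate_ray(self, origin, direction, depth_m: float) -> None:
        """Fuse one depth sample; `direction` is assumed to be a unit vector."""
        if depth_m > self.max_range_m:
            return  # out of sensor range: contributes nothing
        ox, oy = origin
        dx, dy = direction
        hit = self._cell(ox + dx * depth_m, oy + dy * depth_m)
        # Step along the ray in half-cell increments, marking each cell free once.
        step = CELL_SIZE_M / 2
        passed = set()
        for i in range(int(depth_m / step)):
            cell = self._cell(ox + dx * i * step, oy + dy * i * step)
            if cell != hit and cell not in passed:
                passed.add(cell)
                self.score[cell] = max(self.score[cell] - 1, -SCORE_LIMIT)
        # The surface the ray hit gains evidence of being occupied.
        self.score[hit] = min(self.score[hit] + 1, SCORE_LIMIT)

    def is_occupied(self, x: float, y: float) -> bool:
        return self.score.get(self._cell(x, y), 0) > 0
```

Three rays hitting a person about 2 m away mark that cell as occupied; once the person walks off and later rays pass through to a wall about 3 m away, the cell is cleared again, which a one-off room scan can't do. A ray reporting a surface 10 m away is ignored entirely, which is the kind of limit long-distance meshing is meant to lift.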

Niantic Spatial SDK on Quest 3

It uses Niantic’s own computer vision algorithms, taking in the view from the passthrough cameras to construct the mesh.

Niantic Spatial SDK on Quest 3

Niantic's approach is therefore far more suitable for outdoor use, and it works well in combination with its VPS.

Semantic Segmentation & Object Detection

Niantic Spatial SDK can also identify and label objects and surfaces in real time.

Niantic Spatial SDK on Quest 3

Its object recognition is similar to what Meta's passthrough camera access developer samples demonstrate, while its segmentation appears more advanced.
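The article doesn't describe the SDK's segmentation output format, but semantic segmentation models commonly produce a score for every class at every pixel, and the app keeps the top-scoring class per pixel. Here is a minimal sketch of that last step, assuming a hypothetical five-class label set and plain nested lists in place of the SDK's real data types:

```python
from typing import List

# Hypothetical label set; the SDK's actual classes may differ.
CLASSES = ["ground", "sky", "building", "foliage", "person"]


def label_map(scores: List[List[List[float]]]) -> List[List[str]]:
    """Convert per-pixel class scores (rows x cols x classes) to per-pixel labels."""
    return [
        [CLASSES[pixel.index(max(pixel))] for pixel in row]  # argmax per pixel
        for row in scores
    ]


def mask_for(labels: List[List[str]], cls: str) -> List[List[bool]]:
    """Boolean mask of the pixels labeled `cls`, e.g. to place content on ground."""
    return [[label == cls for label in row] for row in labels]
```

For a 2×2 frame whose pixels score highest for sky, building, ground and person respectively, `label_map` returns `[["sky", "building"], ["ground", "person"]]`, and `mask_for(labels, "ground")` isolates the single ground pixel.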

Niantic Spatial SDK on Quest 3

Pricing & What’s Next?

Niantic's VPS feature is priced per API call, while the on-device computer vision capabilities of Niantic Spatial SDK have no usage cap but cost around $0.10 per monthly active user (MAU) each month.
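To put those rates in perspective, here's a back-of-the-envelope estimator. It's a rough sketch that assumes the two fees simply add up, uses the approximate figures cited in this article (10,000 free VPS calls a month, then about $0.01 per call, plus about $0.10 per MAU for on-device features), and ignores any other terms in Niantic's actual pricing.

```python
FREE_VPS_CALLS_PER_MONTH = 10_000
VPS_USD_PER_CALL = 0.01       # approximate, per the article
ON_DEVICE_USD_PER_MAU = 0.10  # approximate, per the article


def estimate_monthly_cost_usd(vps_calls: int, on_device_mau: int) -> float:
    """Rough monthly bill: VPS calls beyond the free tier plus the per-MAU fee.

    `on_device_mau` counts only monthly active users of the on-device features.
    """
    billable_calls = max(0, vps_calls - FREE_VPS_CALLS_PER_MONTH)
    total = billable_calls * VPS_USD_PER_CALL + on_device_mau * ON_DEVICE_USD_PER_MAU
    return round(total, 2)  # round to cents
```

Under these assumptions, an app making 50,000 VPS calls with 2,000 on-device MAU would pay roughly $400 for the 40,000 billable calls plus $200 in MAU fees, or about $600 a month, while an app staying under 10,000 calls with no on-device users would pay nothing.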

Niantic Spatial SDK's live meshing is something we expect many Quest 3 mixed reality apps to adopt, and its VPS feature makes Quest 3 more suitable for outdoor public experiences, even though the headset wasn't designed for outdoor use.

Niantic says it’s “continuing to expand support across additional headworn devices, improve performance, and introduce new features like enhanced occlusion and persistent scene understanding”, noting that developer feedback will shape what comes next.