We validated our TRIUMPH model, which was developed using a machine learning approach, in an international multicenter external cohort. The model showed numerically higher discrimination than the HALT-HCC, MoRAL, and AFP models and greater clinical utility in the net benefit analysis. These findings support the use of machine learning to build more precise risk prediction models.
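Discrimination here refers to how well a model ranks patients by risk, and for time-to-recurrence outcomes it is commonly summarized with Harrell's concordance index (c-index). The sketch below is a minimal pure-Python illustration, assuming right-censored follow-up times, an event indicator (1 = recurrence), and a risk score in which higher values mean higher predicted risk. The function name and toy data are hypothetical and are not taken from the study's analysis code.

```python
from typing import Sequence


def harrell_c_index(
    times: Sequence[float],
    events: Sequence[int],
    risk: Sequence[float],
) -> float:
    """Harrell's concordance index for right-censored survival data.

    A pair (i, j) is comparable when patient i had the event and patient j
    was still under follow-up at a strictly later time. The pair is
    concordant when i also has the higher predicted risk; tied risks count
    as 0.5. Pairs with tied follow-up times are skipped for simplicity;
    production implementations may handle ties differently.
    """
    concordant = 0.0
    comparable = 0
    n = len(times)
    for i in range(n):
        if not events[i]:
            continue  # a censored patient cannot anchor a comparable pair
        for j in range(n):
            if times[i] < times[j]:  # i recurred before j's follow-up ended
                comparable += 1
                if risk[i] > risk[j]:
                    concordant += 1.0
                elif risk[i] == risk[j]:
                    concordant += 0.5
    if comparable == 0:
        raise ValueError("no comparable pairs in the data")
    return concordant / comparable
```

For example, `harrell_c_index([1, 2, 3, 4], [1, 1, 0, 1], [0.9, 0.7, 0.3, 0.1])` returns 1.0 because every comparable pair is ranked correctly, whereas a constant risk score returns 0.5, the value expected from a non-informative model.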

The strength of the TRIUMPH model stems from two key factors. First, it was developed using a large LT cohort from the University Health Network that included nearly 20% LDLT recipients15. In contrast, the HALT-HCC, MoRAL, and AFP models were based solely on deceased donor LT cohorts. Incorporating a large LDLT population during model development supports a more balanced evaluation of LT recipients and enhances generalizability. This is reflected in TRIUMPH’s numerically higher performance in both the LDLT subgroup and the Kaohsiung Chang Gung Memorial Hospital subgroup. Second, the machine learning approach used to build TRIUMPH identified more associations and incorporated a broader range of risk factors. These included not only morphological factors, such as the size and number of lesions, but also biomarkers such as AFP levels and neutrophil counts. The model also accounted for dynamic changes during bridging therapy on the waitlist. Incorporating multiple aspects of HCC is crucial for establishing effective selection criteria16.

Compared with TRIUMPH, only HALT-HCC7 achieved statistically noninferior performance, consistent with the findings in its original development cohort. Although this difference was not statistically significant, subgroup analysis showed a numerical advantage for TRIUMPH, particularly among LDLT recipients and in non-US cohorts. HALT-HCC, developed at a US center (Cleveland Clinic), performed best in the US validation centers (UCSF, UCLA), suggesting that regional practices and patient characteristics influence model performance. Although both models originated from North American centers, TRIUMPH’s better performance outside North America may stem from its more diverse development cohort and from a machine learning approach that incorporated more predictors.

The TRIUMPH model also showed better clinical utility in the net benefit decision analysis, which balances the true positive rate (preventing futile liver transplants in patients likely to experience recurrence) against the false positive rate (denying transplants to patients who could potentially be cured). Across a range of risk thresholds (reflecting the probability of LT given waitlist status and organ availability), TRIUMPH achieved a higher net benefit than the other models, particularly at thresholds between 0.0 and 0.6. This range is realistic: the literature reports a 1-year probability of LT of 54% and an overall probability of 60–73% for patients with HCC on the UNOS waitlist18,19,20.
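The net benefit in this kind of analysis follows the standard decision curve formula, NB(p_t) = TP/n − FP/n × p_t/(1 − p_t), where p_t is the threshold probability and the odds p_t/(1 − p_t) weight each false positive against a true positive. The sketch below is a minimal illustration, assuming a binary outcome (recurrence within a fixed horizon, with complete follow-up), which simplifies the censored time-to-recurrence setting. The function names are hypothetical, and "treating" a patient here means classifying them as high risk.

```python
from typing import Sequence


def net_benefit(y_true: Sequence[int], y_prob: Sequence[float], threshold: float) -> float:
    """Net benefit of a risk model at one threshold probability.

    Patients with predicted risk >= threshold are classified positive
    (here: predicted to recur). NB = TP/n - FP/n * threshold / (1 - threshold).
    """
    if not 0.0 <= threshold < 1.0:
        raise ValueError("threshold must lie in [0, 1)")
    n = len(y_true)
    tp = sum(1 for y, p in zip(y_true, y_prob) if p >= threshold and y == 1)
    fp = sum(1 for y, p in zip(y_true, y_prob) if p >= threshold and y == 0)
    odds = threshold / (1.0 - threshold)  # harm of a false positive relative to a true positive
    return tp / n - fp / n * odds


def treat_all_net_benefit(y_true: Sequence[int], threshold: float) -> float:
    """Net benefit of classifying every patient as positive (treat-all strategy)."""
    if not 0.0 <= threshold < 1.0:
        raise ValueError("threshold must lie in [0, 1)")
    prevalence = sum(y_true) / len(y_true)
    return prevalence - (1.0 - prevalence) * threshold / (1.0 - threshold)
```

A model is useful at a given threshold when its net benefit exceeds both the treat-none strategy (net benefit of zero) and the treat-all strategy. With the toy data `y_true = [1, 0, 1, 0]` and `y_prob = [0.8, 0.6, 0.4, 0.2]` at a threshold of 0.25, the model's net benefit is about 0.417 versus about 0.333 for treat-all.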

One example of machine learning in organ allocation is the Optimized Prediction of Mortality (OPOM) model, evaluated by OPTN/UNOS21. Although OPOM was designed to improve risk stratification for HCC patients with exception points22,23, it predicts only waitlist dropout and does not account for post-transplant outcomes, a critical prognostic consideration. The TRIUMPH model, by contrast, shows strong performance and utility in predicting post-transplant recurrence. TRIUMPH could therefore serve as a complementary tool that brings post-transplant outcomes into the organ allocation system. Nevertheless, incorporating any new model such as TRIUMPH into allocation policy is a major undertaking and remains a future goal, requiring extensive validation, attention to logistics, and consensus within the transplant community.

Apart from TRIUMPH, three other machine learning-based models have been developed to predict post-transplant HCC recurrence: MoRAL-AI24, RELAPSE25, and TRAIN-AI26. MoRAL-AI, which uses a deep neural network, incorporates variables such as tumor diameter, AFP, and PIVKA-II. It demonstrated better discrimination than the conventional MoRAL model; however, its generalizability is limited by its focus on LDLT recipients in South Korea and its requirement for PIVKA-II, which is not routinely measured. RELAPSE, which employs random survival forests and classification techniques, achieved a higher c-index than TRIUMPH, although this advantage likely stems from its inclusion of post-transplant variables. Pre-transplant variables, however, are crucial for organ allocation decisions, because they are the only factors available before surgery. TRAIN-AI, developed on a large international cohort and validated on a smaller North American cohort, followed the opposite design to TRIUMPH. Whereas TRIUMPH captured dynamic changes in tumor lesions during bridging therapy using objective measurements, TRAIN-AI relied on the modified Response Evaluation Criteria in Solid Tumors (mRECIST). Because mRECIST assessments can vary between institutions and radiologists27,28, this reliance may introduce bias. Nevertheless, TRAIN-AI achieved a high c-index of 0.77 in both internal and external validation, outperforming other pre-existing models. We also considered the DeepSurv methodology used by TRAIN-AI for our development cohort. However, the approach adopted for TRIUMPH, a traditional Cox model enhanced with elastic net regularization, was preferred because it performed better. While DeepSurv is well suited to large development cohorts such as that used for TRAIN-AI, its complexity poses a risk of overfitting in smaller datasets. Given our relatively small sample size, we judged the regularized Cox approach underlying TRIUMPH to be more appropriate for mitigating this risk.
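To make the contrast with DeepSurv concrete, the objective behind an elastic-net-regularized Cox model can be written in a few lines: the mean negative log partial likelihood plus a penalty that mixes L1 and L2 norms of the coefficients. This is a minimal illustration, not the TRIUMPH code. It assumes Breslow handling of tied event times and a penalty parametrized by a strength `lam` and an L1/L2 mixing weight `alpha` (hypothetical names; scaling conventions differ between software packages), and it only evaluates the objective rather than fitting it.

```python
import math
from typing import Sequence


def neg_log_partial_likelihood(
    beta: Sequence[float],
    X: Sequence[Sequence[float]],
    times: Sequence[float],
    events: Sequence[int],
) -> float:
    """Negative Cox log partial likelihood with Breslow handling of ties."""
    # Linear predictor eta_i = x_i . beta for every patient.
    eta = [sum(b * x for b, x in zip(beta, row)) for row in X]
    loglik = 0.0
    for i, (t_i, d_i) in enumerate(zip(times, events)):
        if not d_i:
            continue  # censored patients contribute only through risk sets
        # Risk set: all patients still under follow-up at time t_i.
        risk_eta = [eta[j] for j, t_j in enumerate(times) if t_j >= t_i]
        m = max(risk_eta)  # log-sum-exp shift for numerical stability
        log_denominator = m + math.log(sum(math.exp(e - m) for e in risk_eta))
        loglik += eta[i] - log_denominator
    return -loglik


def elastic_net_cox_objective(
    beta: Sequence[float],
    X: Sequence[Sequence[float]],
    times: Sequence[float],
    events: Sequence[int],
    lam: float,
    alpha: float,
) -> float:
    """Mean negative log partial likelihood plus an elastic net penalty.

    lam >= 0 sets the overall penalty strength; alpha in [0, 1] mixes the
    L1 term (alpha = 1, lasso) and the L2 term (alpha = 0, ridge).
    """
    if lam < 0 or not 0.0 <= alpha <= 1.0:
        raise ValueError("need lam >= 0 and 0 <= alpha <= 1")
    l1 = sum(abs(b) for b in beta)
    l2 = sum(b * b for b in beta)
    penalty = lam * (alpha * l1 + 0.5 * (1.0 - alpha) * l2)
    return neg_log_partial_likelihood(beta, X, times, events) / len(times) + penalty
```

The L1 term can shrink uninformative coefficients to exactly zero, performing variable selection, while the L2 term stabilizes estimates among correlated predictors. Because the only learned parameters are one coefficient per predictor, this model has far less capacity to overfit than a deep network. In practice the objective is minimized by coordinate descent, for example with `glmnet` (`family = "cox"`) in R or `CoxnetSurvivalAnalysis` in scikit-survival, rather than evaluated by hand as above.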

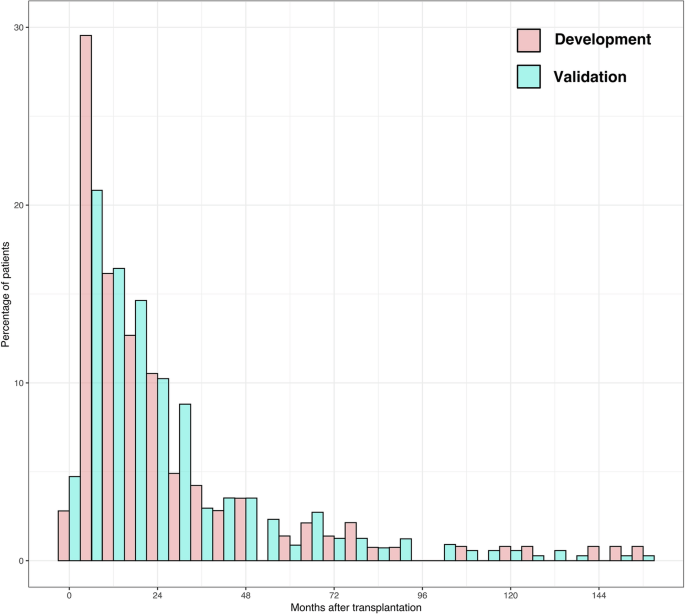

This study has several limitations. First, its retrospective, multicenter design introduces the possibility of selection bias arising from differences in management across centers. Second, the development cohort from Toronto differed significantly from the validation cohort, which had a higher prevalence of HBV and a lower proportion of locally advanced HCC. This discrepancy may have reduced the model’s performance in validation and points to potential regional biases. Future work incorporating data from diverse international centers during model development would help refine the model and improve its generalizability. Although the inclusion of both living and deceased donor grafts in the TRIUMPH model could be viewed as a commingling of data, this approach was intentional. Donor-specific models may better capture differences in transplant settings or graft characteristics, but TRIUMPH was designed to reflect the reality of centers offering both types of transplantation, providing a unified tool to guide decision-making for patients eligible for either pathway. Notably, there is no evidence to date that graft quality directly influences oncologic outcomes in an as-treated analysis. Given that models specific to LDLT or DDLT already exist, the strength of TRIUMPH lies in its validated performance across a mixed-donor cohort, which reflects real-world practice and enhances generalizability across diverse transplant programs. Finally, other frequently used models, such as Metroticket 2.0, which is based on competing risk analysis29, and RETREAT, which incorporates pathological predictors14, could have been included as comparators, but this was not feasible because their input requirements could not be met in our dataset.

In conclusion, the TRIUMPH model outperforms other commonly used scores in predicting post-transplant HCC recurrence, offering both higher accuracy and greater clinical utility. Integrating TRIUMPH into future organ allocation strategies for patients with HCC could therefore enhance the overall benefit of liver transplantation. Our study highlights the potential of machine learning to advance organ allocation in transplant medicine. Even so, robust machine learning models must still be developed on large, diverse cohorts to ensure generalizability and avoid overfitting. This will require ongoing collaboration within the international transplant community and a commitment to incorporating machine learning innovations into transplant practice.