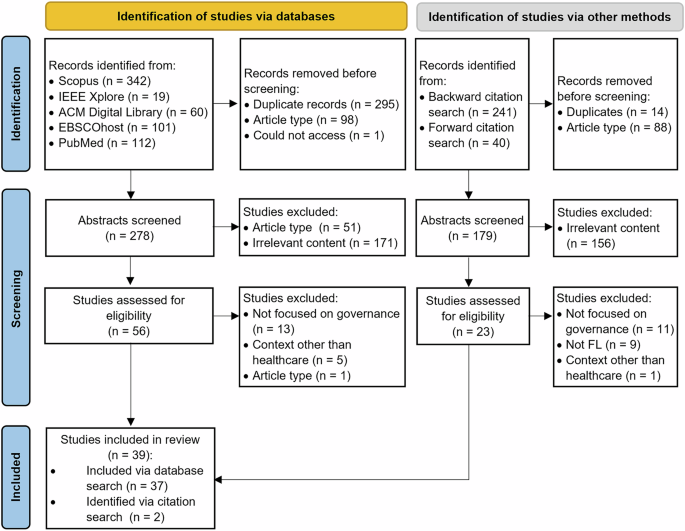

As illustrated in Fig. 1, 39 papers were included in the review, each examining the governance of one or more techniques (i.e., FL, ML, FDN). Of the 39 papers (Supplementary Table 1), governing ML in healthcare was the predominant focus (n = 31), with limited research on governing FDN (n = 5) or FL (n = 7) in healthcare. Because articles can explore multiple techniques, the sum of the papers investigating the governance of ML, FL, and FDN is greater than the total number of papers included. Most papers were conceptual, with only 12 empirical papers, half of which were case descriptions of the authors' organisational efforts that did not report actual data.

Fig. 1: Article screening and selection process.

The PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) flow diagram detailing the article screening process.

Procedural mechanisms

Procedural mechanisms specify the parameters that guide the appropriate use of FL (and associated techniques) in healthcare. Four procedural governance subthemes, which encompass twelve procedural mechanisms, were identified (Table 1): data privacy, formal guidelines and agreements, initial model utility, and ongoing monitoring. All procedural mechanisms were reported in ML studies. For FL and FDN, deterrence against misuse, initial evaluation, and model registration were not reported. The absence of studies investigating a mechanism for a specific technique does not suggest that the mechanism is irrelevant to that technique; rather, it has not been the focus of the studies included in the review.

Table 1 Procedural mechanism theme

In terms of data privacy, protecting the privacy of health consumers has been a core consideration across all techniques (FL, ML, FDN). Concerns have been raised about the potential for reidentification18,27,28 and misappropriation of health data19,29, highlighting the need for data access control mechanisms29 and data de-identification28. In FL, although data remains within the data owner’s infrastructure, reidentification is still possible5. To mitigate this concern in FL, studies have suggested using synthetic datasets during initial training, encrypting data before learning, implementing differential privacy, authenticating access, and limiting the output provided to data users5,10.
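To make two of the FL privacy measures above more concrete (differential privacy and limiting the output provided to data users), the following Python sketch shows how a node might release an aggregate count rather than row-level data. It is an illustration under stated assumptions, not a method reported by the reviewed studies: the Laplace mechanism is one standard way to implement differential privacy, and the names `release_count`, `MIN_CELL_SIZE`, and `EPSILON`, as well as their values, are hypothetical.

```python
import random
from typing import Optional

# Hypothetical policy values; real values would be set in each node's data agreements.
MIN_CELL_SIZE = 5   # released counts below this are suppressed
EPSILON = 1.0       # privacy budget spent on this single query
SENSITIVITY = 1.0   # adding or removing one person changes a count by at most 1


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw from Laplace(0, scale) as the difference of two exponential draws with mean `scale`."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def release_count(true_count: int, rng: random.Random,
                  epsilon: float = EPSILON,
                  min_cell: int = MIN_CELL_SIZE) -> Optional[int]:
    """Compute a count inside the node and release only a noisy, thresholded version."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    # Laplace mechanism: noise scale = sensitivity / epsilon gives epsilon-differential privacy.
    noisy = round(true_count + laplace_noise(SENSITIVITY / epsilon, rng))
    # Output limiting is applied to the noisy value, so it is post-processing of the DP result.
    if noisy < min_cell:
        return None
    return noisy


rng = random.Random(2024)
print(release_count(120, rng))  # a noisy value close to 120
print(release_count(2, rng))    # usually None: small cells are withheld
```

Suppression is applied to the noisy count rather than the true count, so the decision to withhold a cell does not itself reveal the exact value. A real deployment would also need to track the cumulative privacy budget spent across repeated queries.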

Across all techniques (FL, ML, FDN), studies have indicated that formal guidelines and agreements are required. Contractual agreements should be established between internal and external stakeholders before data provisioning to ensure that data protection and the intended uses of data are upheld30. In FL, contractual agreements are complex due to the sheer number of parties involved and the need to synchronise any amendments across them5. Clear, formalised policies, procedures, and standards need to be developed and put into practice. In healthcare, these techniques (FL, ML, FDN) are often guided by the FAIR (findable, accessible, interoperable, reusable) data principles10 and ideally leverage common data models (e.g., OMOP (Observational Medical Outcomes Partnership))31 and interoperability standards (e.g., HL7 FHIR (Fast Healthcare Interoperability Resources))28, which facilitate the secondary use of data. Commonly agreed-upon policies, procedures, and data standards are important in FL because they help ensure consistent data pre-processing across nodes, thereby enhancing the integrity and reliability of the FL model32.

Regarding initial model utility, ML studies have reported the need for ML models to be registered33 and independently evaluated34 before use. Model registration involves recording details of the model’s data, characteristics, and intended use to improve transparency33. Initial model evaluation measures the model’s efficacy on clinical data against pre-established performance indicators33,35. This is necessary to foster trust and to ensure that the model will not be used in a discriminatory manner36.
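Model registration and initial evaluation can be pictured as a registry record plus a gate that checks performance against thresholds agreed before evaluation. The Python sketch below is hypothetical: the `ModelRecord` fields mirror the details named above (data, characteristics, intended use), while the registry, the choice of sensitivity and specificity as performance indicators, the threshold values, and the example model are illustrative assumptions.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ModelRecord:
    """Hypothetical registry entry capturing a model's data, characteristics, and intended use."""
    name: str
    version: str
    training_data: str   # description of the data used to develop the model
    intended_use: str    # the clinical purpose the model is intended for
    characteristics: Dict[str, str] = field(default_factory=dict)
    approved: bool = False  # set only by evaluate()


REGISTRY: Dict[str, ModelRecord] = {}


def register(record: ModelRecord) -> str:
    """Add a model to the registry before any evaluation or use; returns its registry key."""
    key = f"{record.name}:{record.version}"
    if key in REGISTRY:
        raise ValueError(f"{key} is already registered")
    REGISTRY[key] = record
    return key


def sensitivity_specificity(y_true: List[int], y_pred: List[int]) -> Dict[str, float]:
    """Compute two example performance indicators from binary labels and predictions."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("evaluation data needs both positive and negative cases")
    return {"sensitivity": tp / (tp + fn), "specificity": tn / (tn + fp)}


def evaluate(key: str, y_true: List[int], y_pred: List[int],
             thresholds: Dict[str, float]) -> Dict[str, float]:
    """Approve a registered model only if every metric meets its pre-established threshold."""
    record = REGISTRY[key]  # raises KeyError for unregistered models
    metrics = sensitivity_specificity(y_true, y_pred)
    record.approved = all(metrics[name] >= limit for name, limit in thresholds.items())
    return metrics


key = register(ModelRecord("sepsis-alert", "1.0",
                           training_data="ICU admissions (illustrative)",
                           intended_use="early warning for adult inpatients"))
metrics = evaluate(key, [1, 1, 1, 0, 0, 0, 0, 0], [1, 1, 0, 0, 0, 0, 0, 1],
                   thresholds={"sensitivity": 0.6, "specificity": 0.7})
print(metrics, REGISTRY[key].approved)  # sensitivity 2/3, specificity 0.8, so approved
```

Keeping approval inside `evaluate` means a model cannot be marked as approved without first being registered, which reflects the ordering described above (registration, then evaluation, then use).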

The potential for harm from these techniques (FL, ML, FDN) necessitates ongoing monitoring and risk management throughout their development and use37. Ongoing monitoring is needed to detect security breaches, access privilege violations, and misappropriation19,36. ML studies have also reported the need to deter ML model misuse through financial penalties that discourage malicious actors from unauthorised or discriminatory use of ML27,38. Monitoring is also needed to assess the efficacy of ML continuously, with refinement39 and maintenance19 undertaken as necessary. Across all techniques (FL, ML, FDN), the sustainability of the predictive models needs to be actively managed. In FL, sustainability is particularly important because reproducibility can be hampered if a data owner withdraws support10.
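One way ongoing efficacy monitoring might be operationalised is a rolling-window check that flags a deployed model for review when its accuracy falls below the level accepted at initial evaluation. This Python sketch is an assumption-laden illustration: `PerformanceMonitor`, the window size, and the tolerance are hypothetical, and accuracy stands in for whatever indicators a governance body actually specifies.

```python
from collections import deque
from typing import Deque, Optional


class PerformanceMonitor:
    """Track a deployed model's rolling accuracy and flag drift below its baseline.

    Window size and tolerance are hypothetical; in practice they would be set by
    whoever is accountable for the model's ongoing monitoring.
    """

    def __init__(self, baseline_accuracy: float, tolerance: float = 0.05, window: int = 100):
        self.baseline = baseline_accuracy
        self.tolerance = tolerance
        self.results: Deque[bool] = deque(maxlen=window)  # oldest results drop off automatically

    def record(self, prediction: int, outcome: int) -> None:
        """Log whether a prediction matched the observed outcome."""
        self.results.append(prediction == outcome)

    def rolling_accuracy(self) -> Optional[float]:
        if not self.results:
            return None
        return sum(self.results) / len(self.results)

    def needs_review(self) -> bool:
        """True once the window is full and accuracy is below baseline minus tolerance."""
        acc = self.rolling_accuracy()
        if acc is None or len(self.results) < self.results.maxlen:
            return False
        return acc < self.baseline - self.tolerance


monitor = PerformanceMonitor(baseline_accuracy=0.90, tolerance=0.05, window=10)
for pred, outcome in [(1, 1)] * 7 + [(1, 0)] * 3:
    monitor.record(pred, outcome)
print(monitor.rolling_accuracy(), monitor.needs_review())  # prints 0.7 True
```

Waiting for a full window before flagging avoids raising alerts on a handful of early cases; the trade-off is slower detection when performance degrades quickly.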

Relational mechanisms

Relational mechanisms shape the interactions between the stakeholders implicated by FL (and associated techniques) in healthcare. Four relational governance subthemes, which encompass ten mechanisms, were identified (Table 2): capability, ethics, involvement, and institutional support. All were reported as important considerations for FL, ML, and FDN.

Table 2 Relational mechanism theme

Across all techniques (FL, ML, FDN), studies reported the need for ethics and consent procedures. Ethical considerations emphasise that “new uses of people’s data can involve both personal and social harms, but so does failing to harness the enormous power of data”39. These techniques should be underpinned by the ethical principles that guide clinical care, medical research, and public health, such as respect for autonomy, equity, transparency, beneficence, accountability, and non-maleficence37,40,41. Given that informed consent is a central tenet of ethics41, individuals should know how their data is used, by whom, and with what commercial benefits40. This also applies to FL: although data is not shared, ethical questions remain about how and by whom the data is used10. However, obtaining informed consent is considered impractical given the large volumes of health data involved42, which has prompted discussion of opt-in versus opt-out consent39. Moreover, individual consent is not necessarily required for the ethical use of data when, given appropriate protections, the potential benefit outweighs the risk. In such cases, gatekeeper consent from data custodians who have weighed the ethical considerations has been the norm and may also become the norm for FL10.

The importance of stakeholder involvement was also reported for all techniques (FL, ML, FDN). Although de-identified data reduces the requirement for informed consent22, the public needs to be informed about, and accepting of, how their data will be shared and used37. This requires strong consumer involvement and communication with community juries to foster the development of a social licence27,43. If health data is used in ways, or by actors, at odds with the interests of health consumers, the consumer social licence is violated10. In addition to health consumers, strong engagement amongst all stakeholders (e.g., healthcare organisations, clinicians, regulators, developers, researchers, public/private organisations, vendors) is required35,37. In FL, stakeholder engagement is necessary to establish a shared understanding of the project’s vision and objectives across all nodes10. This requires significant coordination between the nodes32 and negotiation over the costs of provisioning decentralised and centralised infrastructure10. Consumer involvement and broader stakeholder engagement are necessary to engender trust44.

Meaningful stakeholder engagement and clinical involvement will require capability development amongst all stakeholders implicated in digital health data governance, including clinicians37 and health consumers40. Education and training are needed to improve data, algorithm, and digital literacy. This will enable clinicians to act as an “intermediary between developers and regulators”37 and to understand how to interpret and act upon insights from AI technologies in their day-to-day work35. This will also enable health consumers to make informed decisions and meaningfully shape ML initiatives29,40,45. In FL, significant efforts are needed to develop the capabilities of the data stewards within each node, which will involve training sessions paired with auxiliary documentation regarding pre-processing and monitoring activities32.

Studies report that institutional support, including cultural management, leadership, and financial provisions, is necessary to create an environment conducive to using all techniques (FL, ML, FDN). These techniques require a cultural shift involving the development and maintenance of values such as trust, transparency, learning, and accountability in the use of data19,35. In FL, cultural differences between stakeholders (e.g., commercialisation versus open science) must be adequately managed10. Strong leadership and a vision that positions AI as foundational to improvements in health and care are required for these techniques to succeed10,41. Financial considerations are also important, requiring sustainable business models46 and support from funding bodies10. In FL, there is debate about whether FL model providers should offer financial incentives to data owners9.

Structural mechanisms

Structural mechanisms specify the roles and responsibilities necessitated by FL (and associated techniques) in healthcare. Three structural governance subthemes, which encompass twelve structural mechanisms, were identified (Table 3): establishing oversight bodies, establishing roles, and establishing and considering health consumers. The term “health consumer” rather than “patient” is used to denote “anyone who has used, currently uses, or will use health care services …[and] represent[s] the person’s more active role in making healthcare and medical decisions with their clinicians”47. As evident in Table 6, there was variation in how these mechanisms were considered by studies investigating FL, ML, and FDN.

Table 3 Structural mechanism theme

Oversight bodies that develop and implement safeguards surrounding the use of health data need to be established; these include ethical boards, advisory boards, notified bodies, regulatory boards, and publication review groups. Ethical boards, including human research ethics committees and institutional review boards, oversee the ethical conduct of research18,27. In the context of FL, questions remain about where the ethical board should be situated10. In traditional ML, ethical approval and oversight are typically sought from the data user’s institution10. In FL, as data is not shared and the analysis is performed within the data owners’ infrastructure, it may be appropriate to seek ethical approval from the data owner’s institution, which is the responsibility of data stewards at each node10. Advisory boards should have diverse membership across health, legal, and security domains, as well as health consumer advocacy groups, to meaningfully oversee and provide guidance on the use of data31,48. Notified bodies are delegated responsibility by regulatory bodies to audit and approve ML-equipped medical devices before widespread adoption49. Publication review groups need to be established48 to ensure that publications resulting from the use of data are consistent with ethical considerations, policies, and procedures. In FL, publishers need to encourage transparent sharing of predictive models rather than of data9.

Diverse roles need to be established across FL, ML, and FDN, including data-safeguarding entities, developers, and project management teams. Data-safeguarding entities include data owners, custodians, and stewards responsible for securing data18 and overseeing its use10. These actors are unlikely to leverage FL and associated techniques unless they are provided with clear directives and policies10. Regarding the governance of FL, studies also discussed the need to go beyond custodianship and consider the pertinent role of data stewards10. Data stewards seek to maximise the benefits of data use while upholding data subjects’ privacy and ensuring the data is not misused. They are also responsible for performing the analysis within their designated node. The data owner organisation or a trusted intermediary can perform this role. Developers are responsible for developing the predictive models33 and, in some instances, software-assisted medical devices37, as well as for ongoing performance monitoring37. Developers can be internal or external to the organisation where the data is collected; regardless of where they are situated, they need to maintain the privacy of the data they use38. Project management teams with project leads are recommended50,51 to ensure that the predictive models are developed effectively and efficiently.

During the development and use of ML, health consumers play a key role, as reflected in the relational mechanism of consumer involvement. However, many studies are silent on the role of health consumers, often referring to them simply as the data subject38. Others have demonstrated the utility of citizen juries in eliciting health consumers’ views27. The notion of consumer-driven data commons was also raised as an approach “to enable groups of consenting individuals to collaborate to assemble powerful, large-scale health data resources for use in scientific research, on terms that the group members themselves would set”18.