WASHINGTON (7News) — We live in a world where we trust what we see and hear. But what if the person on the other end of the line—the voice of your boss or the face of a family member—isn’t a person at all?

This is the reality of an increasingly sophisticated form of fraud that targets the most human of instincts: our trust. It’s a technology so advanced that it can turn your own identity against you.

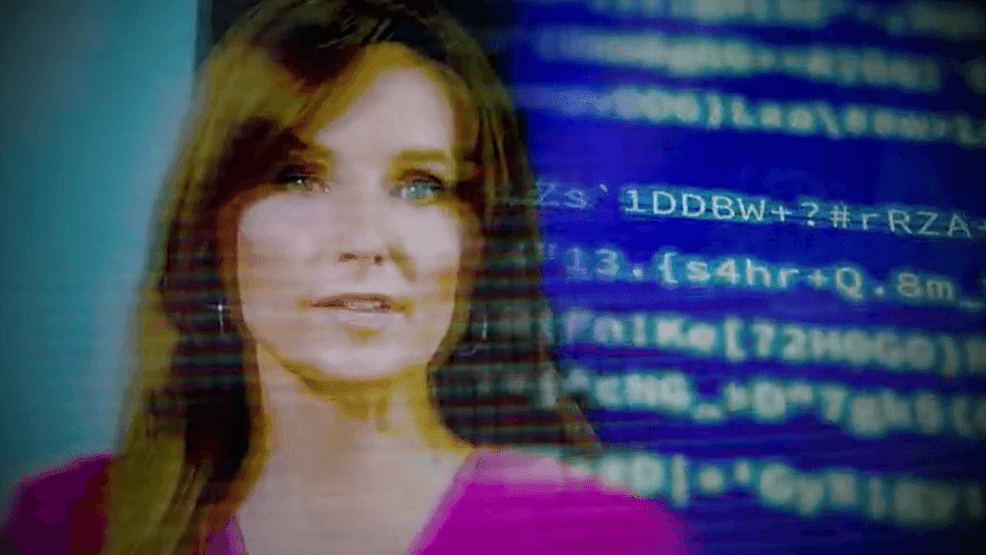

In a recent demonstration for this story, I was shown a video of me talking on our news set. It was not me at all. It was entirely AI-generated.

That video was created in less than three minutes using a single photo and just three seconds of my voice. The ease of this technology is startling.

“Anyone from the 8-year-old to the 80-year-old can create a realistic deepfake attack in just a few minutes,” says Brian Long, CEO of Adaptive Security. His company helps corporations build defenses against these AI attacks.

I asked Long if we were at the point where anyone could create a deepfake good enough to fool a government agency. He was blunt. “Oh, definitely to fool a government agency,” said Long. “I think more troubling is good enough to fool the parent of a child, good enough to fool someone who works every day with a coworker.”

Long said that with just one image of a person, he can create a fully interactive video of that individual. The same applies to a voice: even a short voicemail greeting is enough to create a deepfake that can understand and respond in real time.

Long demonstrated this during our interview. A deepfake of my voice called an unsuspecting co-worker, pretending to be in a panic.

The fake voice said:

“It’s Lisa Fletcher. I’m about to go on air and I can’t get into my computer to reset my password. Can you please help me reset it and text me the code? I need it urgently.”

When the person on the other end hesitated, asking for proof of identity, the deepfake responded:

“Okay. I understand your concern. I need you to do this quickly. Can you verify my identity through company records or check my employee ID? Once you’ve confirmed it’s me, please reset my password and text me the code immediately.”

Long said that a request like this, layered with a few specific details, is all it takes to trick someone. The most unsettling part is that the deepfake wasn’t using pre-programmed sentences; it was interacting and responding to the live conversation. “It will respond and remember the conversation,” said Long.

A Growing Crisis

The threat is costing corporations millions. In 2024, police in Hong Kong reported that a finance worker at a multinational company was tricked into paying out $25 million after criminals used deepfake technology to impersonate the company’s chief financial officer in a video conference call.

Sam Altman, the CEO of OpenAI, has warned that the world is on the cusp of an “AI fraud crisis.”

“There’s no way it can be controlled because you saw earlier this year that China came out with a model called DeepSeek, which is an open-source model,” said Long. “And it has little to no moderation. So if you ask it to do bad stuff, it’ll probably do those things.”

Deepfake scams are also being used for political and social engineering. In 2024, Senator Ted Cruz was targeted in a deepfake scam where a robocall used audio of his voice to spread misinformation. In a separate incident, a deepfake impersonating Ukraine’s foreign minister was able to get on a call with the chairman of the U.S. Senate Foreign Relations Committee and demand sensitive information.

How to Protect Yourself

With deepfakes becoming increasingly difficult to distinguish from authentic content, Long says we are entering a new reality where people must think twice before trusting video or audio of someone they know.

Long, whose company deals with thousands of businesses, says most don’t report these crimes publicly.

He recommends that if you are even the slightest bit suspicious of a call—whether it’s a phone or video call—do nothing and follow up separately with the individual.

He also suggests a couple of other things you can do to protect yourself and your family:

- Create a shared password: Establish a password that only you and your family members know. If you receive a call that sounds or looks like a family member who is in an urgent situation or needs money, ask them for the password to verify their identity.

- Use a generic voicemail greeting: Long recommends using the default robotic voice for your voicemail message. The number of words in a typical outgoing message is enough to create a deepfake of your voice for an entire conversation.